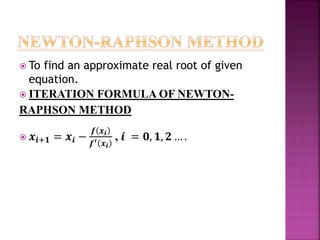

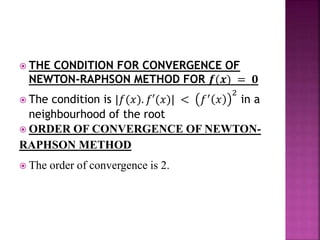

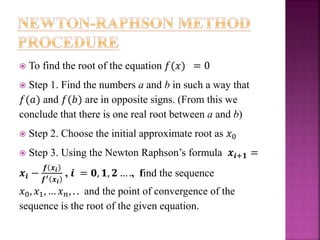

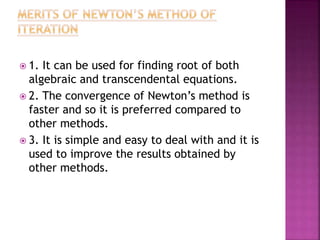

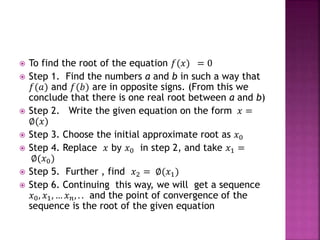

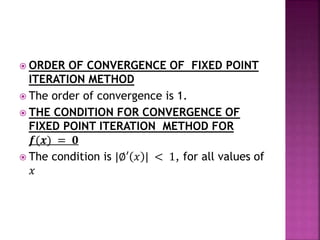

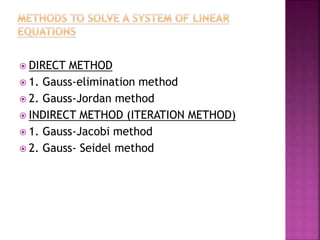

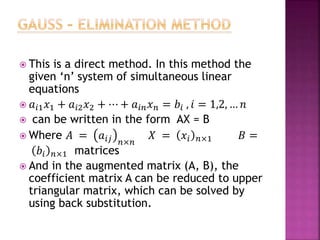

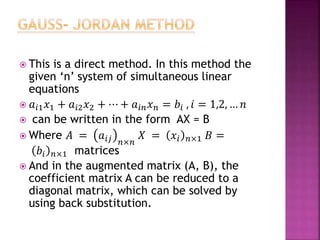

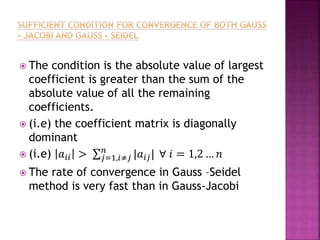

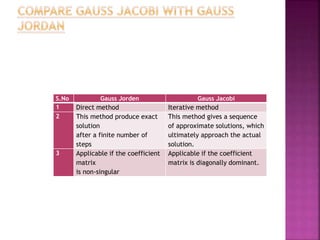

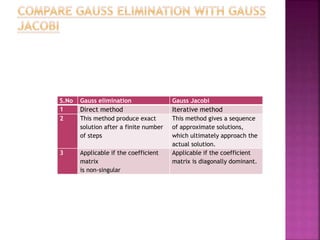

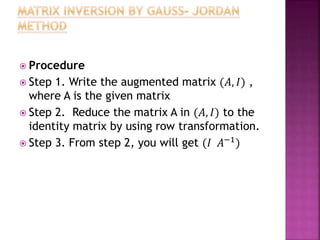

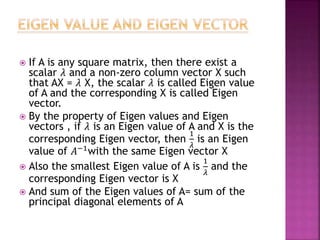

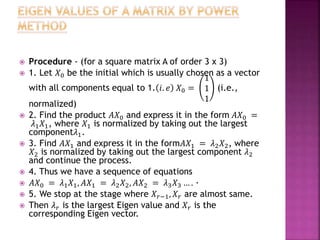

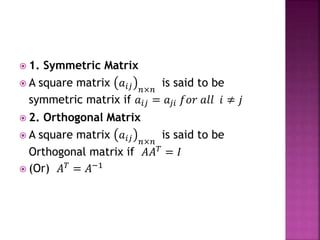

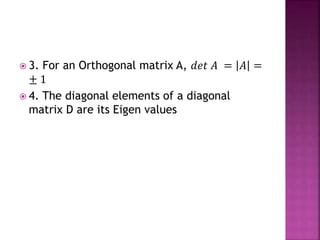

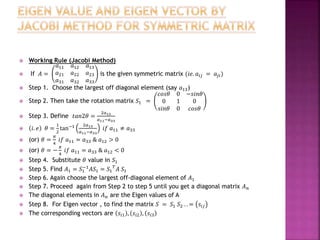

The document discusses methods for finding roots of equations, focusing on the Newton-Raphson method and fixed-point iteration. For systems of linear equations, it compares direct methods (Gauss elimination and Gauss-Jordan) with iterative methods (Gauss-Jacobi and Gauss-Seidel), emphasizing the convergence conditions and applications of the iterative schemes. It also outlines procedures for determining eigenvalues and eigenvectors, including the Jacobi method for symmetric matrices.
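
As a rough illustration of two of the iterative schemes named above, the following Python sketch shows a Newton-Raphson root finder and a Gauss-Seidel solver. The function names, tolerances, iteration limits, and sample inputs are assumptions chosen for this example; they are not taken from the document.

```python
import math


def newton_raphson(f, df, x0, tol=1e-10, max_iter=50):
    """Approximate a root of f starting from x0, given its derivative df.

    Update rule: x_{k+1} = x_k - f(x_k) / f'(x_k).
    """
    x = x0
    for _ in range(max_iter):
        slope = df(x)
        if slope == 0.0:
            raise ZeroDivisionError("derivative vanished; Newton step undefined")
        x_new = x - f(x) / slope
        if abs(x_new - x) < tol:  # stop when successive iterates agree
            return x_new
        x = x_new
    raise RuntimeError("Newton-Raphson did not converge")


def gauss_seidel(A, b, tol=1e-10, max_iter=500):
    """Solve A x = b iteratively, reusing updated components immediately.

    Convergence is guaranteed when A is strictly diagonally dominant;
    for other matrices the iteration may or may not converge.
    """
    n = len(b)
    x = [0.0] * n  # assumed starting guess: the zero vector
    for _ in range(max_iter):
        max_change = 0.0
        for i in range(n):
            # Sum over off-diagonal terms using the newest available values.
            s = sum(A[i][j] * x[j] for j in range(n) if j != i)
            new_xi = (b[i] - s) / A[i][i]
            max_change = max(max_change, abs(new_xi - x[i]))
            x[i] = new_xi
        if max_change < tol:
            return x
    raise RuntimeError("Gauss-Seidel did not converge")


# Sample inputs (assumed): root of x^2 - 2, and a diagonally dominant 2x2 system.
root = newton_raphson(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.0)
solution = gauss_seidel([[4.0, 1.0], [1.0, 3.0]], [6.0, 7.0])
```

Here `root` approximates the square root of 2, and `solution` approximates the exact solution x = 1, y = 2 of the sample system. Gauss-Jacobi differs only in that each sweep uses the values from the previous sweep rather than the freshly updated ones.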