The document describes three numerical methods for finding the roots or solutions of equations: the bisection method, Newton's method for single variable equations, and Newton's method for systems of nonlinear equations.

The bisection method works by repeatedly bisecting the interval within which a root is known to exist, narrowing in on the root through iterative halving. Newton's method approximates the function with its tangent line to find a better root estimate with each iteration. For systems of equations, Newton's method involves calculating the Jacobian matrix and solving a system of linear equations at each step to update the solution estimate. Examples are provided to illustrate each method.

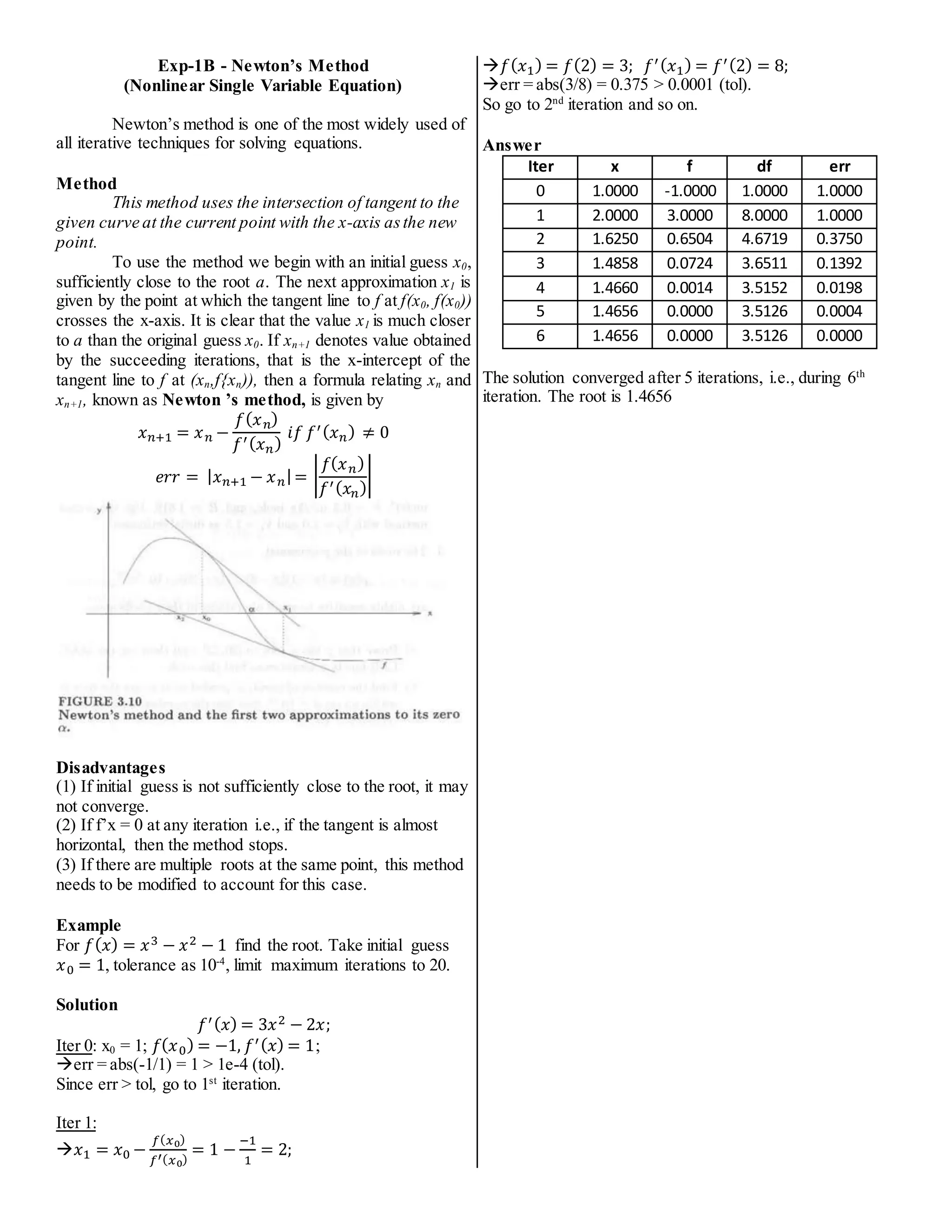

![Exp-1A - Finding Roots of an Equation

Bisection Method

The root-finding problem is: given a continuous function $f$, find the values of $x$ that satisfy the equation $f(x) = 0$. The solutions of this equation are called the zeros of $f$ or the roots of the equation. In general, the equation cannot be solved exactly, so one must rely on numerical methods for an approximate solution. Iterative numerical methods are of two kinds: (1) those whose convergence is guaranteed and (2) those whose convergence depends on the initial guess.

Method

Let $f(x)$ be a given function, continuous on an interval $[a, b]$, such that $f(a)\,f(b) < 0$. Then there must exist at least one zero $\alpha$ in $(a, b)$.

The bisection method is based on halving the interval $[a, b]$ to determine a smaller and smaller interval within which $\alpha$ must lie.

The procedure is carried out by first defining the midpoint of $[a, b]$, $c = (a + b)/2$, and then computing the product $f(c)\,f(b)$. If the product is negative, the root is in the interval $[c, b]$. If the product is positive, the root is in the interval $[a, c]$. If the product is zero, then $c$ is a root (because $f(b) \neq 0$), and the process is stopped. Thus, a new interval containing $\alpha$ is obtained.

The process of halving the new interval continues until the root is located as accurately as desired. Any of the following three criteria can be used to terminate the iterations:

$$|a_n - b_n| < \epsilon \quad \text{(or)} \quad \frac{|a_n - b_n|}{|a_n|} < \epsilon \quad \text{(or)} \quad |f(a_n)| < \epsilon.$$

For this program, $error = \dfrac{|a_n - b_n|}{2} < \epsilon$ (tolerance) is used. Also, if a problem takes too many iterations to converge to the specified tolerance, the program is terminated when the specified maximum number of iterations is reached.

Disadvantages

(1) It requires specifying a lower limit and an upper limit within which a zero must exist.

(2) Its rate of convergence is slow.

Example

For $f(x) = x^3 - x^2 - 1$, find the root in the interval $[1, 2]$ to a tolerance of $10^{-4}$, and limit the maximum number of iterations to 20.

Solution

Iter 0: $a = 1$, $b = 2$, $f(a) = f(1) = -1$, $f(b) = f(2) = 3$.

Since $f(1)\,f(2) = (-1)(3) = -3 < 0$, there lies a root between 1 and 2.

Since err = abs(b-a)/2 = abs(2-1)/2 = 0.5 > 0.0001 (tol), perform the next iteration.

Set iter = 0.

Iter 1: $c = (a+b)/2 = (1+2)/2 = 1.5$, $f(c) = f(1.5) = 0.125$.

Since $f(c)\,f(a) = f(1.5)\,f(1) = (0.125)(-1) = -0.125 < 0$, the root lies in $[1, 1.5]$.

Since $f(c)\,f(b) = f(1.5)\,f(2) = (0.125)(3) = 0.375 > 0$, the root does not lie in $[1.5, 2]$.

Thus the new range is $[1, 1.5]$.

Since err = abs(b-a)/2 = abs(1.5-1)/2 = 0.25 > 0.0001 (tol), perform the next iteration.

iter = iter + 1 = 0 + 1 = 1. Since iter < 20 (maxiter), perform the next iteration.

Answer

| ITER | a | b | c | f_c | err |
|---|---|---|---|---|---|
| 0 | 1 | 2 | 1.5 | 0.125 | 0.5 |
| 1 | 1 | 1.5 | 1.25 | -0.609375 | 0.25 |
| 2 | 1.25 | 1.5 | 1.375 | -0.291016 | 0.125 |
| 3 | 1.375 | 1.5 | 1.4375 | -0.095947 | 0.0625 |
| 4 | 1.4375 | 1.5 | 1.46875 | 0.0112 | 0.03125 |
| 5 | 1.4375 | 1.4688 | 1.453125 | -0.043194 | 0.015625 |
| 6 | 1.4531 | 1.4688 | 1.460938 | -0.016203 | 0.007813 |
| 7 | 1.4609 | 1.4688 | 1.464844 | -0.002554 | 0.003906 |
| 8 | 1.4648 | 1.4688 | 1.466797 | 0.00431 | 0.001953 |
| 9 | 1.4648 | 1.4668 | 1.46582 | 0.000875 | 0.000977 |
| 10 | 1.4648 | 1.4658 | 1.465332 | -0.00084 | 0.000488 |
| 11 | 1.4653 | 1.4658 | 1.465576 | 0.000017 | 0.000244 |
| 12 | 1.4653 | 1.4656 | 1.465454 | -0.000411 | 0.000122 |

After iteration 12, the half-width of the remaining interval is 0.000061 < 0.0001, hence the solution has converged.

The root is 1.4655 and the function value at the root is -0.00041138](https://image.slidesharecdn.com/matlablabmanual-theory-211125130156/75/Matlab-lab-manual-1-2048.jpg)
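The bisection procedure described in Exp-1A can be sketched in Python as follows. This is a minimal illustration, not the lab manual's own program: the names `bisect`, `tol`, and `max_iter` are assumptions chosen here, and the stopping test is the half-width criterion `abs(b - a) / 2 < tol` quoted in the text.

```python
def bisect(f, a, b, tol=1e-4, max_iter=20):
    """Bisection sketch. Returns (c, f(c), index of the last iteration)."""
    fb = f(b)
    if f(a) * fb >= 0:
        raise ValueError("f(a) and f(b) must have opposite signs")
    c, fc = a, f(a)
    for it in range(max_iter + 1):
        c = (a + b) / 2.0           # midpoint of the current interval
        fc = f(c)
        if fc == 0.0:               # c is an exact root
            return c, fc, it
        if fc * fb < 0:             # sign change in [c, b]
            a = c
        else:                       # sign change in [a, c]
            b, fb = c, fc
        if abs(b - a) / 2.0 < tol:  # half-width of the new interval
            return c, fc, it
    return c, fc, max_iter


def f(x):
    # Example function from the text: f(x) = x^3 - x^2 - 1
    return x**3 - x**2 - 1


root, f_root, last_iter = bisect(f, 1.0, 2.0, tol=1e-4, max_iter=20)
print(f"root = {root:.4f}, f(root) = {f_root:.8f}, last iteration = {last_iter}")
```

With the example's inputs, the sketch stops at iteration 12 with a root of about 1.4655, in line with the iteration table above.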

![Exp-1C - Newton’s Method

(Nonlinear System of Equations)

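Given a system of nonlinear equations

$$\begin{aligned} f_1(x_1, x_2, \cdots, x_n) &= 0 \\ f_2(x_1, x_2, \cdots, x_n) &= 0 \\ &\ \,\vdots \\ f_n(x_1, x_2, \cdots, x_n) &= 0 \end{aligned}$$

and an initial guess $X^{(0)} = [x_1^{(0)}, x_2^{(0)}, \cdots, x_n^{(0)}]'$.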

Method

For a system of nonlinear equations, Newton's method consists of approximating each nonlinear function by its tangent plane; the common root of the resulting linear equations provides the next approximation.

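The Jacobian matrix for the given system of equations is determined by

$$[J] = \begin{bmatrix} \dfrac{\partial f_1}{\partial x_1} & \cdots & \dfrac{\partial f_1}{\partial x_n} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial f_n}{\partial x_1} & \cdots & \dfrac{\partial f_n}{\partial x_n} \end{bmatrix}$$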

The function value at the $i$th iteration, $F^{(i)}$, is calculated as

$$F^{(i)} = F(X^{(i)}) = [f_1^{(i)}, f_2^{(i)}, \ldots, f_n^{(i)}]'$$

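The change in the solution at the $i$th iteration, $\Delta X^{(i)}$, is obtained by solving the system of equations $J\,\Delta X = -F$, i.e.,

$$\begin{bmatrix} \left(\dfrac{\partial f_1}{\partial x_1}\right)^{(i)} & \cdots & \left(\dfrac{\partial f_1}{\partial x_n}\right)^{(i)} \\ \vdots & \ddots & \vdots \\ \left(\dfrac{\partial f_n}{\partial x_1}\right)^{(i)} & \cdots & \left(\dfrac{\partial f_n}{\partial x_n}\right)^{(i)} \end{bmatrix} \begin{bmatrix} \Delta x_1^{(i)} \\ \vdots \\ \Delta x_n^{(i)} \end{bmatrix} = -\begin{bmatrix} f_1^{(i)} \\ \vdots \\ f_n^{(i)} \end{bmatrix}$$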

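The new point $X^{(i+1)}$ is obtained by $X^{(i+1)} = X^{(i)} + \Delta X^{(i)}$, i.e.,

$$\begin{bmatrix} x_1^{(i+1)} \\ \vdots \\ x_n^{(i+1)} \end{bmatrix} = \begin{bmatrix} x_1^{(i)} \\ \vdots \\ x_n^{(i)} \end{bmatrix} + \begin{bmatrix} \Delta x_1^{(i)} \\ \vdots \\ \Delta x_n^{(i)} \end{bmatrix}$$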

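The error for the vector of $\Delta x$'s can be calculated by either of the formulas

$$err = \sqrt{(\Delta x_1^{(i)})^2 + (\Delta x_2^{(i)})^2 + \cdots + (\Delta x_n^{(i)})^2}$$

$$err = \max\big(|\Delta x_1^{(i)}|, |\Delta x_2^{(i)}|, \ldots, |\Delta x_n^{(i)}|\big)$$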
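The two error measures above can be compared on a concrete step vector. The short Python sketch below is illustrative only: the helper name `step_norms` is an assumption, and the sample step is the first Newton step of the worked example that follows, written as exact fractions (28/18 and -37/18).

```python
import math


def step_norms(dx):
    """Return (Euclidean norm, largest absolute component) of a step vector."""
    euclid = math.sqrt(sum(d * d for d in dx))
    largest = max(abs(d) for d in dx)
    return euclid, largest


# First Newton step of the worked example: dX = [28/18, -37/18]
euclid, largest = step_norms([28 / 18, -37 / 18])
print(f"Euclidean norm = {euclid:.6f}, max-abs norm = {largest:.6f}")
```

The max-abs measure is never larger than the Euclidean one, so for the same tolerance it is the slightly looser stopping test.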

Disadvantages

(1) It is necessary to solve a system of linear equations at every iteration to obtain the next solution point.

(2) The Jacobian matrix, which is the first-derivative matrix, must be specified and evaluated at each step.

Example

$$f_1(x_1, x_2) = x_1^3 + 3x_2^2 - 21$$

$$f_2(x_1, x_2) = x_1^2 + 2x_2 + 2$$

Solve the above system of equations taking the initial guess $X^{(0)} = [1, -1]$, a tolerance of $10^{-6}$, and a maximum of 20 iterations.

Solution

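$$J = \begin{bmatrix} \dfrac{\partial f_1}{\partial x_1} & \dfrac{\partial f_1}{\partial x_2} \\ \dfrac{\partial f_2}{\partial x_1} & \dfrac{\partial f_2}{\partial x_2} \end{bmatrix} = \begin{bmatrix} 3x_1^2 & 6x_2 \\ 2x_1 & 2 \end{bmatrix}$$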

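The Jacobian at iteration 0 is

$$J^{(0)} = J\begin{pmatrix} 1 \\ -1 \end{pmatrix} = \begin{bmatrix} 3 & -6 \\ 2 & 2 \end{bmatrix}$$

The function value at iteration 0 is

$$F^{(0)} = F(X^{(0)}) = F\begin{pmatrix} 1 \\ -1 \end{pmatrix} = \begin{bmatrix} -17 \\ 1 \end{bmatrix}$$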

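The change in X for iteration 0 is obtained by solving

$$\begin{bmatrix} 3 & -6 \\ 2 & 2 \end{bmatrix} \begin{bmatrix} \Delta x_1^{(0)} \\ \Delta x_2^{(0)} \end{bmatrix} = -\begin{bmatrix} -17 \\ 1 \end{bmatrix}$$

Its solution is

$$\Delta X^{(0)} = \begin{bmatrix} \Delta x_1^{(0)} \\ \Delta x_2^{(0)} \end{bmatrix} = \begin{bmatrix} 1.555556 \\ -2.055556 \end{bmatrix}$$

Thus the point for the 1st iteration is

$$X^{(1)} = X^{(0)} + \Delta X^{(0)}$$

$$X^{(1)} = \begin{bmatrix} 1 \\ -1 \end{bmatrix} + \begin{bmatrix} 1.555556 \\ -2.055556 \end{bmatrix} = \begin{bmatrix} 2.555556 \\ -3.055556 \end{bmatrix}$$

The error at the 1st iteration is

$$err = \max\big(|\Delta x_1^{(i)}|, |\Delta x_2^{(i)}|, \ldots, |\Delta x_n^{(i)}|\big)$$

$$err = \max(|1.555556|, |-2.055556|) = \max(1.555556, 2.055556) = 2.055556 > 0.000001 \ (tol)$$

So perform the next iteration.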

Answer

The points and corresponding errors are

| Iter | x1 | x2 | err |
|---|---|---|---|
| 0 | 2.5556 | -3.0556 | 2.0556 |
| 1 | 1.8650 | -2.5008 | 0.6905 |
| 2 | 1.6613 | -2.3593 | 0.2037 |
| 3 | 1.6432 | -2.3498 | 0.0182 |
| 4 | 1.6430 | -2.3498 | 0.0001 |

The solution converges after 4 iterations with the root as [1.643, -2.3498].](https://image.slidesharecdn.com/matlablabmanual-theory-211125130156/75/Matlab-lab-manual-3-2048.jpg)
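The Newton iteration of Exp-1C can be sketched in Python for the two-equation example. This is an illustration under stated assumptions rather than the manual's program: the names `newton_2x2`, `F`, and `J` are chosen here, and the 2x2 linear system $J\,\Delta X = -F$ is solved with Cramer's rule purely to keep the sketch dependency-free (a general $n \times n$ system would need a linear solver such as Gauss elimination).

```python
def newton_2x2(F, J, x0, tol=1e-6, max_iter=20):
    """Newton's method for two equations in two unknowns.

    F(x1, x2) returns (f1, f2); J(x1, x2) returns ((a, b), (c, d)).
    Returns ((x1, x2), number of iterations performed).
    """
    x1, x2 = x0
    for it in range(max_iter):
        f1, f2 = F(x1, x2)
        (a, b), (c, d) = J(x1, x2)
        det = a * d - b * c
        if det == 0.0:
            raise ZeroDivisionError("singular Jacobian")
        # Cramer's rule for  J * dX = -F
        dx1 = (-f1 * d + b * f2) / det
        dx2 = (-a * f2 + c * f1) / det
        x1, x2 = x1 + dx1, x2 + dx2
        if max(abs(dx1), abs(dx2)) < tol:   # max-abs error measure
            return (x1, x2), it + 1
    return (x1, x2), max_iter


def F(x1, x2):
    # Example system from the text
    return (x1**3 + 3 * x2**2 - 21, x1**2 + 2 * x2 + 2)


def J(x1, x2):
    # Analytical Jacobian of the example system
    return ((3 * x1**2, 6 * x2), (2 * x1, 2.0))


first_point, _ = newton_2x2(F, J, (1.0, -1.0), max_iter=1)
root, iters = newton_2x2(F, J, (1.0, -1.0), tol=1e-6, max_iter=20)
print("after one step:", first_point)
print("root:", root, "iterations:", iters)
```

Calling the function with `max_iter=1` returns the first point of the worked solution, which makes it easy to check the hand calculation before running the full iteration.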

![Exp-2A – Gauss Elimination

(System of Linear Equations)

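Given a system of linear equations $[A]\{X\} = \{B\}$

$$\begin{aligned} A_{11}x_1 + A_{12}x_2 + \cdots + A_{1n}x_n &= B_1 \\ A_{21}x_1 + A_{22}x_2 + \cdots + A_{2n}x_n &= B_2 \\ &\ \,\vdots \\ A_{n1}x_1 + A_{n2}x_2 + \cdots + A_{nn}x_n &= B_n \end{aligned}$$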

Method

Gauss elimination consists of reducing the above system to an equivalent system $[U]\{X\} = \{D\}$, in which $U$ is an upper triangular matrix. This new system can be easily solved by back substitution.

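Iter1: (k = 1)

Define row multipliers (taking n = 4 for example)

$$m_{ik} = \frac{A_{ik}}{A_{kk}} \quad \text{for } k = 1, \ \text{for } i = 2 \text{ to } n$$

$$m_{21} = \frac{A_{21}}{A_{11}} \quad (k = 1,\ i = 2)$$

$$m_{31} = \frac{A_{31}}{A_{11}} \quad (k = 1,\ i = 3)$$

$$m_{41} = \frac{A_{41}}{A_{11}} \quad (k = 1,\ i = 4)$$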

For each column (j = 2 to n), multiply the 1st row (k = 1) by $m_{ik}$ and subtract it from the $i$th row (i = 2 to n) to get

$$A_{ij} = A_{ij} - m_{i1} A_{1j} \quad \text{for } j = 2 \text{ to } n$$

$$B_i = B_i - m_{ik} B_k$$

For 2nd row (i = 2)

$$\begin{aligned} A_{22} &= A_{22} - m_{21} A_{12} && (k = 1,\ i = 2,\ j = 2) \\ A_{23} &= A_{23} - m_{21} A_{13} && (k = 1,\ i = 2,\ j = 3) \\ A_{24} &= A_{24} - m_{21} A_{14} && (k = 1,\ i = 2,\ j = 4) \\ B_2 &= B_2 - m_{21} B_1 && (k = 1,\ i = 2) \end{aligned}$$

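Here, the first rows of A and B are left unchanged, and the entries of the first column of A below $A_{11}$ are set to zero.

$$\begin{bmatrix} A_{11} & A_{12} & \cdots & A_{14} \\ 0 & A_{22} - m_{21}A_{12} & \cdots & A_{24} - m_{21}A_{14} \\ \vdots & \vdots & & \vdots \\ A_{41} & A_{42} & \cdots & A_{44} \end{bmatrix} \begin{Bmatrix} x_1 \\ x_2 \\ \vdots \\ x_4 \end{Bmatrix} = \begin{Bmatrix} B_1 \\ B_2 - m_{21}B_1 \\ \vdots \\ B_4 \end{Bmatrix}$$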

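For 4th row (i = 4)

$$\begin{aligned} A_{42} &= A_{42} - m_{41} A_{12} && (k = 1,\ i = 4,\ j = 2) \\ A_{43} &= A_{43} - m_{41} A_{13} && (k = 1,\ i = 4,\ j = 3) \\ A_{44} &= A_{44} - m_{41} A_{14} && (k = 1,\ i = 4,\ j = 4) \\ B_4 &= B_4 - m_{41} B_1 && (k = 1,\ i = 4) \end{aligned}$$

$$\begin{bmatrix} A_{11} & A_{12} & \cdots & A_{14} \\ 0 & A_{22} - m_{21}A_{12} & \cdots & A_{24} - m_{21}A_{14} \\ \vdots & \vdots & & \vdots \\ 0 & A_{42} - m_{41}A_{12} & \cdots & A_{44} - m_{41}A_{14} \end{bmatrix} \begin{Bmatrix} x_1 \\ x_2 \\ \vdots \\ x_4 \end{Bmatrix} = \begin{Bmatrix} B_1 \\ B_2 - m_{21}B_1 \\ \vdots \\ B_4 - m_{41}B_1 \end{Bmatrix}$$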

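Iter2: (k = 2)

Here each $A_{ij}$ and $B_i$ denotes the value already updated in Iter1.

$$m_{ik} = \frac{A_{ik}}{A_{kk}} \quad \text{for } k = 2, \ \text{for } i = 3 \text{ to } n$$

$$m_{32} = \frac{A_{32}}{A_{22}} \quad (k = 2,\ i = 3)$$

$$m_{42} = \frac{A_{42}}{A_{22}} \quad (k = 2,\ i = 4)$$

For i = 3

$$A_{ij} = A_{ij} - m_{i2} A_{2j} \quad \text{for } j = 3 \text{ to } n$$

$$\begin{aligned} A_{33} &= A_{33} - m_{32} A_{23} && (k = 2,\ i = 3,\ j = 3) \\ A_{34} &= A_{34} - m_{32} A_{24} && (k = 2,\ i = 3,\ j = 4) \\ B_3 &= B_3 - m_{32} B_2 && (k = 2,\ i = 3) \end{aligned}$$

For i = 4

$$\begin{aligned} A_{43} &= A_{43} - m_{42} A_{23} && (k = 2,\ i = 4,\ j = 3) \\ A_{44} &= A_{44} - m_{42} A_{24} && (k = 2,\ i = 4,\ j = 4) \\ B_4 &= B_4 - m_{42} B_2 && (k = 2,\ i = 4) \end{aligned}$$

$$\begin{bmatrix} A_{11} & A_{12} & \cdots & A_{14} \\ 0 & A_{22} & \cdots & A_{24} \\ 0 & 0 & A_{33} - m_{32}A_{23} & A_{34} - m_{32}A_{24} \\ 0 & 0 & A_{43} - m_{42}A_{23} & A_{44} - m_{42}A_{24} \end{bmatrix} \begin{Bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{Bmatrix} = \begin{Bmatrix} B_1 \\ B_2 \\ B_3 - m_{32}B_2 \\ B_4 - m_{42}B_2 \end{Bmatrix}$$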

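Iter3: (k = 3)

$$m_{ik} = \frac{A_{ik}}{A_{kk}} \quad \text{for } k = 3, \ \text{for } i = 4$$

$$m_{43} = \frac{A_{43}}{A_{33}} \quad (k = 3,\ i = 4)$$

$$A_{ij} = A_{ij} - m_{i3} A_{3j} \quad \text{for } j = 4$$

$$\begin{aligned} A_{44} &= A_{44} - m_{43} A_{34} && (k = 3,\ i = 4,\ j = 4) \\ B_4 &= B_4 - m_{43} B_3 && (k = 3,\ i = 4) \end{aligned}$$

$$\begin{bmatrix} A_{11} & A_{12} & \cdots & A_{14} \\ 0 & A_{22} & \cdots & A_{24} \\ 0 & 0 & A_{33} & A_{34} \\ 0 & 0 & 0 & A_{44} - m_{43}A_{34} \end{bmatrix} \begin{Bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{Bmatrix} = \begin{Bmatrix} B_1 \\ B_2 \\ B_3 \\ B_4 - m_{43}B_3 \end{Bmatrix}$$](https://image.slidesharecdn.com/matlablabmanual-theory-211125130156/75/Matlab-lab-manual-4-2048.jpg)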
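The three elimination passes above follow one pattern, which can be sketched as a triple loop in Python. This is a minimal illustration under assumptions: the function name `forward_eliminate` is chosen here, indices are zero-based (so `k = 0` corresponds to Iter1), no row exchange is attempted, and the test matrix is the 4x4 system from the worked example of this experiment.

```python
def forward_eliminate(A, B):
    """Reduce [A]{X} = {B} to upper triangular form [U]{X} = {D}.

    Works on copies, so the inputs are left unchanged.
    """
    n = len(B)
    U = [row[:] for row in A]
    D = B[:]
    for k in range(n - 1):                # pivot row (Iter1, Iter2, ...)
        if U[k][k] == 0:
            raise ZeroDivisionError("zero pivot; this sketch does no row exchange")
        for i in range(k + 1, n):         # rows below the pivot
            m = U[i][k] / U[k][k]         # row multiplier m_ik
            U[i][k] = 0.0                 # entry below the pivot is eliminated
            for j in range(k + 1, n):     # remaining columns of row i
                U[i][j] -= m * U[k][j]
            D[i] -= m * D[k]
    return U, D


A = [[1, 1, 1, 1],
     [2, 3, 1, 5],
     [-1, 1, -5, 3],
     [3, 1, 7, -2]]
B = [10, 31, -2, 18]

U, D = forward_eliminate(A, B)
for row, d in zip(U, D):
    print(row, d)
```

Copying `A` and `B` before eliminating is a design choice of this sketch; an in-place version would match the overwrite notation $A_{ij} = A_{ij} - m_{ik}A_{kj}$ more literally.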

![Backward Substitution

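From the $n$th equation (i.e., 4th row)

$$x_4 = \frac{B_4}{A_{44}}$$

For a general equation

$$x_i = \frac{B_i - \sum_{j=i+1}^{n} A_{ij} x_j}{A_{ii}}$$

From the $(n-1)$th equation (i.e., 3rd row)

$$x_3 = \frac{B_3 - (A_{34} x_4)}{A_{33}}$$

From the $(n-2)$th equation (i.e., 2nd row)

$$x_2 = \frac{B_2 - (A_{24} x_4) - (A_{23} x_3)}{A_{22}}$$

From the $(n-3)$th equation (i.e., 1st row)

$$x_1 = \frac{B_1 - (A_{14} x_4) - (A_{13} x_3) - (A_{12} x_2)}{A_{11}}$$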

Disadvantages

(1) This method fails if the pivot element becomes zero or very small compared to the rest of the elements in the pivot row.

(2) This method does not work for an ill-conditioned coefficient matrix, i.e., one for which small perturbations in the coefficient matrix produce large changes in the solution of the system.

Example

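$$\begin{bmatrix} 1 & 1 & 1 & 1 \\ 2 & 3 & 1 & 5 \\ -1 & 1 & -5 & 3 \\ 3 & 1 & 7 & -2 \end{bmatrix} \begin{Bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{Bmatrix} = \begin{Bmatrix} 10 \\ 31 \\ -2 \\ 18 \end{Bmatrix}$$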

Multiplier matrix

| Iter1 | Iter2 | Iter3 | |
|---|---|---|---|
| 0 | 0 | 0 | 0 |
| 2/1 = 2 | 0 | 0 | 0 |
| -1/1 = -1 | 2/1 = 2 | 0 | 0 |
| 3/1 = 3 | -2/1 = -2 | 2/(-2) = -1 | 0 |

Forward Elimination

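Iter 1:

| Row operation | x1 | x2 | x3 | x4 | B |
|---|---|---|---|---|---|
| | 1 | 1 | 1 | 1 | 10 |
| R2 ← R2 - m21*R1 | 0 | 1 | -1 | 3 | 11 |
| R3 ← R3 - m31*R1 | 0 | 2 | -4 | 4 | 8 |
| R4 ← R4 - m41*R1 | 0 | -2 | 4 | -5 | -12 |

Iter 2:

| Row operation | x1 | x2 | x3 | x4 | B |
|---|---|---|---|---|---|
| | 1 | 1 | 1 | 1 | 10 |
| | 0 | 1 | -1 | 3 | 11 |
| R3 ← R3 - m32*R2 | 0 | 0 | -2 | -2 | -14 |
| R4 ← R4 - m42*R2 | 0 | 0 | 2 | 1 | 10 |

Iter 3:

| Row operation | x1 | x2 | x3 | x4 | B |
|---|---|---|---|---|---|
| | 1 | 1 | 1 | 1 | 10 |
| | 0 | 1 | -1 | 3 | 11 |
| | 0 | 0 | -2 | -2 | -14 |
| R4 ← R4 - m43*R3 | 0 | 0 | 0 | -1 | -4 |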

Backward Substitution

$$x_4 = \frac{-4}{-1} = 4$$

$$x_3 = \frac{-14 + 2x_4}{-2} = \frac{-6}{-2} = 3$$

$$x_2 = 11 + x_3 - 3x_4 = 11 + 3 - 12 = 2$$

$$x_1 = 10 - x_2 - x_3 - x_4 = 10 - 2 - 3 - 4 = 1$$

Thus the solution is [x1 = 1, x2 = 2, x3 = 3, x4 = 4].](https://image.slidesharecdn.com/matlablabmanual-theory-211125130156/75/Matlab-lab-manual-5-2048.jpg)
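The general back-substitution formula can be sketched in a few lines of Python. The function name `back_substitute` is an assumption made for illustration, indices are zero-based, and the inputs are the upper triangular system obtained after Iter 3 of the worked example.

```python
def back_substitute(U, D):
    """Solve the upper triangular system [U]{X} = {D} from the last row up."""
    n = len(D)
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        # sum of the already-known terms A_ij * x_j for j > i
        s = sum(U[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (D[i] - s) / U[i][i]
    return x


# Upper triangular system from Iter 3 of the example
U = [[1, 1, 1, 1],
     [0, 1, -1, 3],
     [0, 0, -2, -2],
     [0, 0, 0, -1]]
D = [10, 11, -14, -4]

x = back_substitute(U, D)
print(x)
```

For this system the sketch returns the same values as the hand calculation, x1 = 1, x2 = 2, x3 = 3, x4 = 4.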

![Exp-2B – Gauss Seidel Iteration

(System of Linear Equations)

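Given a system of linear equations $[A]\{X\} = \{B\}$

$$\begin{aligned} A_{11}x_1 + A_{12}x_2 + \cdots + A_{1n}x_n &= B_1 \\ A_{21}x_1 + A_{22}x_2 + \cdots + A_{2n}x_n &= B_2 \\ &\ \,\vdots \\ A_{n1}x_1 + A_{n2}x_2 + \cdots + A_{nn}x_n &= B_n \end{aligned}$$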

Method

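The above equations are expressed in recursive form as

$$x_1^{(k+1)} = \frac{B_1 - \big(A_{12}x_2^{(k)} + \cdots + A_{1n}x_n^{(k)}\big)}{A_{11}}$$

$$x_2^{(k+1)} = \frac{B_2 - \big(A_{21}x_1^{(k+1)} + A_{23}x_3^{(k)} + \cdots + A_{2n}x_n^{(k)}\big)}{A_{22}}$$

$$x_3^{(k+1)} = \frac{B_3 - \big(A_{31}x_1^{(k+1)} + A_{32}x_2^{(k+1)} + A_{34}x_4^{(k)} + \cdots + A_{3n}x_n^{(k)}\big)}{A_{33}}$$

For any $x_i^{(k+1)}$,

$$x_i^{(k+1)} = \frac{B_i - \left(\sum_{j=1}^{i-1} A_{ij}x_j^{(k+1)} + \sum_{j=i+1}^{n} A_{ij}x_j^{(k)}\right)}{A_{ii}}$$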

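To compute the error, a relative change criterion is used. With $\Delta x_j^{(k+1)} = x_j^{(k+1)} - x_j^{(k)}$,

$$err = \frac{\sqrt{(\Delta x_1^{(k+1)})^2 + (\Delta x_2^{(k+1)})^2 + \cdots + (\Delta x_n^{(k+1)})^2}}{\sqrt{(x_1^{(k+1)})^2 + (x_2^{(k+1)})^2 + \cdots + (x_n^{(k+1)})^2}}$$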

Advantages

(1) Efficient when there is a large number of variables (~100,000), due to smaller round-off errors.

(2) Efficient for sparse matrices, where there are many zero elements, because less storage space is required.

(3) Unlike Newton's method for finding roots of an equation, the convergence of this method does not depend on the initial guess but on the character of the matrices themselves. However, a good guess reduces the number of iterations.

(4) Gauss Seidel is more efficient than the Jacobi iterative method.

Example

$$\begin{aligned} 7x_1 - 2x_2 + 1x_3 + 0x_4 &= 17 \\ 1x_1 - 9x_2 + 3x_3 - 1x_4 &= 13 \\ 2x_1 + 0x_2 + 10x_3 + 1x_4 &= 15 \\ 1x_1 - 1x_2 + 1x_3 + 6x_4 &= 10 \end{aligned}$$

Writing the equations in recursive form

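$$x_1^{(k+1)} = \frac{17 - \big(-2x_2^{(k)} + 1x_3^{(k)} + 0x_4^{(k)}\big)}{7}$$

$$x_2^{(k+1)} = \frac{13 - \big(1x_1^{(k+1)} + 3x_3^{(k)} - 1x_4^{(k)}\big)}{-9}$$

$$x_3^{(k+1)} = \frac{15 - \big(2x_1^{(k+1)} + 0x_2^{(k+1)} + 1x_4^{(k)}\big)}{10}$$

$$x_4^{(k+1)} = \frac{10 - \big(1x_1^{(k+1)} - 1x_2^{(k+1)} + 1x_3^{(k+1)}\big)}{6}$$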

Starting with $(x_1^{(0)} = 0,\ x_2^{(0)} = 0,\ x_3^{(0)} = 0,\ x_4^{(0)} = 0)$

$$x_1^{(1)} = \frac{17 - \big(-2(0) + 1(0) + 0(0)\big)}{7} = 2.4286$$

$$x_2^{(1)} = \frac{13 - \big(1(2.4286) + 3(0) - 1(0)\big)}{-9} = -1.1746$$

$$x_3^{(1)} = \frac{15 - \big(2(2.4286) + 0(-1.1746) + 1(0)\big)}{10} = 1.0143$$

$$x_4^{(1)} = \frac{10 - \big(1(2.4286) - 1(-1.1746) + 1(1.0143)\big)}{6} = 0.8971$$

Iteration history is

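| Iter | x1 | x2 | x3 | x4 |
|---|---|---|---|---|
| 1 | 2.4286 | -1.1746 | 1.0143 | 0.8971 |
| 2 | 1.9481 | -0.9896 | 1.0207 | 1.0069 |
| 3 | 2.0000 | -0.9939 | 0.9993 | 1.0011 |
| 4 | 2.0018 | -1.0002 | 0.9995 | 0.9997 |
| 5 | 2.0000 | -1.0001 | 1.0000 | 1.0000 |
| 6 | 2.0000 | -1.0000 | 1.0000 | 1.0000 |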

For a tolerance of 1e-4, the solution converges after 6 iterations to $(x_1^{(6)} = 2,\ x_2^{(6)} = -1,\ x_3^{(6)} = 1,\ x_4^{(6)} = 1)$](https://image.slidesharecdn.com/matlablabmanual-theory-211125130156/75/Matlab-lab-manual-6-2048.jpg)
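The Gauss Seidel recursion and the relative change criterion of Exp-2B can be sketched in Python as below. This is an illustration under assumptions, not the manual's program: the name `gauss_seidel` and its parameters are chosen here, the solution list is updated in place so that each new value is used immediately by the rows after it, and the default `max_iter=100` is an arbitrary safeguard.

```python
import math


def gauss_seidel(A, B, x0, tol=1e-4, max_iter=100):
    """Gauss Seidel iteration. Returns (x, number of sweeps performed)."""
    n = len(B)
    x = list(x0)
    for k in range(1, max_iter + 1):
        old = x[:]
        for i in range(n):
            # x[j] already holds the new value for j < i and the old one for j > i
            s = sum(A[i][j] * x[j] for j in range(n) if j != i)
            x[i] = (B[i] - s) / A[i][i]
        # relative change: norm of the update divided by norm of the new iterate
        num = math.sqrt(sum((x[j] - old[j]) ** 2 for j in range(n)))
        den = math.sqrt(sum(v * v for v in x))
        if den > 0 and num / den < tol:
            return x, k
    return x, max_iter


A = [[7, -2, 1, 0],
     [1, -9, 3, -1],
     [2, 0, 10, 1],
     [1, -1, 1, 6]]
B = [17, 13, 15, 10]

first_sweep, _ = gauss_seidel(A, B, [0, 0, 0, 0], max_iter=1)
solution, sweeps = gauss_seidel(A, B, [0, 0, 0, 0], tol=1e-4)
print("first sweep:", [round(v, 4) for v in first_sweep])
print("solution:", [round(v, 4) for v in solution], "sweeps:", sweeps)
```

Running a single sweep with `max_iter=1` reproduces the first row of the iteration history, which is a convenient check before iterating to convergence.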