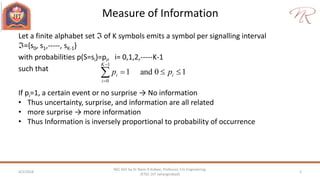

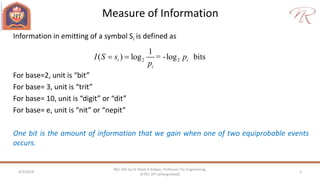

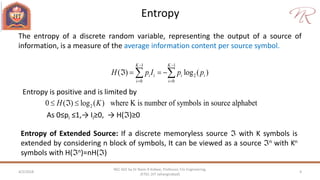

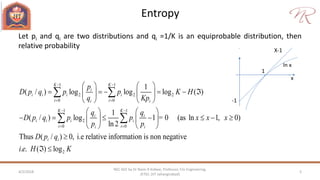

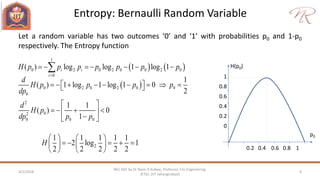

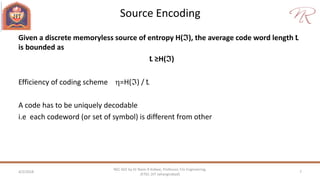

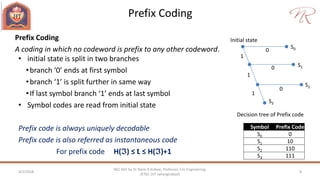

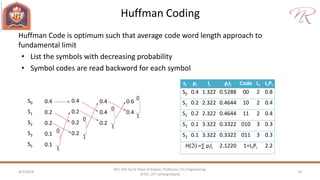

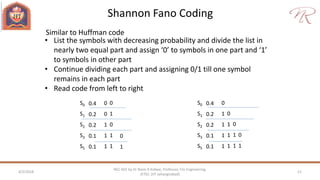

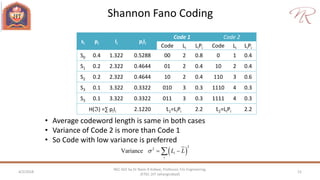

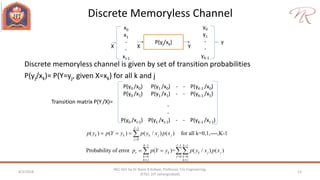

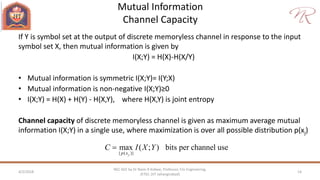

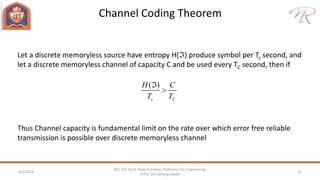

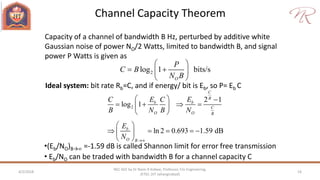

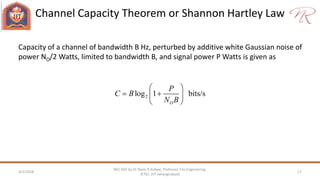

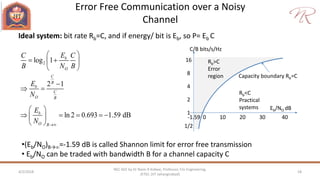

The document gives an overview of information theory and coding, covering measures of information, source encoding, and reliable communication over noisy channels. Key concepts include entropy, error-correcting codes, Huffman and Shannon-Fano coding, and channel capacity, which Shannon's channel coding theorem identifies as the highest rate at which information can be sent over a noisy channel with arbitrarily small error probability. It also relates information measures to symbol probabilities and describes encoding methods for efficient and reliable data transmission.
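The quantities named above can be tied together in a short sketch. It computes source entropy $H = -\sum_i p_i \log_2 p_i$, builds a binary Huffman code, and compares the average codeword length $L = \sum_i p_i \ell_i$ against $H$. Shannon-Fano coding is not shown. For channel capacity it assumes a binary symmetric channel with crossover probability $p$, whose capacity is $C = 1 - H(p)$. That channel is an illustrative choice, not necessarily the one used in the slides. All function names are hypothetical helpers written for this example.

```python
import heapq
import math
from itertools import count


def entropy(probs):
    """Source entropy H = -sum p_i * log2(p_i), in bits per symbol."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def huffman_code(probs):
    """Build a binary Huffman code for a dict {symbol: probability}.

    Hypothetical helper: repeatedly merges the two least probable
    nodes, prefixing '0' to one subtree's codewords and '1' to the other's.
    """
    tie = count()  # tie-breaker so heapq never has to compare dicts
    heap = [(p, next(tie), {sym: ""}) for sym, p in probs.items()]
    heapq.heapify(heap)
    if len(heap) == 1:
        # A lone symbol still needs a one-bit codeword.
        (_, _, codes), = heap
        return {sym: "0" for sym in codes}
    while len(heap) > 1:
        p0, _, c0 = heapq.heappop(heap)  # least probable node
        p1, _, c1 = heapq.heappop(heap)  # second least probable node
        merged = {s: "0" + c for s, c in c0.items()}
        merged.update({s: "1" + c for s, c in c1.items()})
        heapq.heappush(heap, (p0 + p1, next(tie), merged))
    return heap[0][2]


def average_length(probs, codes):
    """Average codeword length L = sum p_i * l_i, in bits per symbol."""
    return sum(p * len(codes[s]) for s, p in probs.items())


def bsc_capacity(p):
    """Capacity of a binary symmetric channel (assumed example): C = 1 - H(p)."""
    return 1.0 - entropy([p, 1.0 - p])


if __name__ == "__main__":
    source = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
    codes = huffman_code(source)
    H = entropy(source.values())
    L = average_length(source, codes)
    print("codes:", codes)
    print(f"H = {H} bits/symbol, L = {L} bits/symbol, efficiency = {H / L}")
    print(f"BSC capacity at p = 0.1: {bsc_capacity(0.1):.4f} bits/use")
```

With the dyadic probabilities in the usage example, the Huffman codeword lengths are 1, 2, 3 and 3 bits. The average length therefore equals the entropy of 1.75 bits per symbol, so the code reaches 100% efficiency. For non-dyadic sources, $L$ lies between $H$ and $H + 1$.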