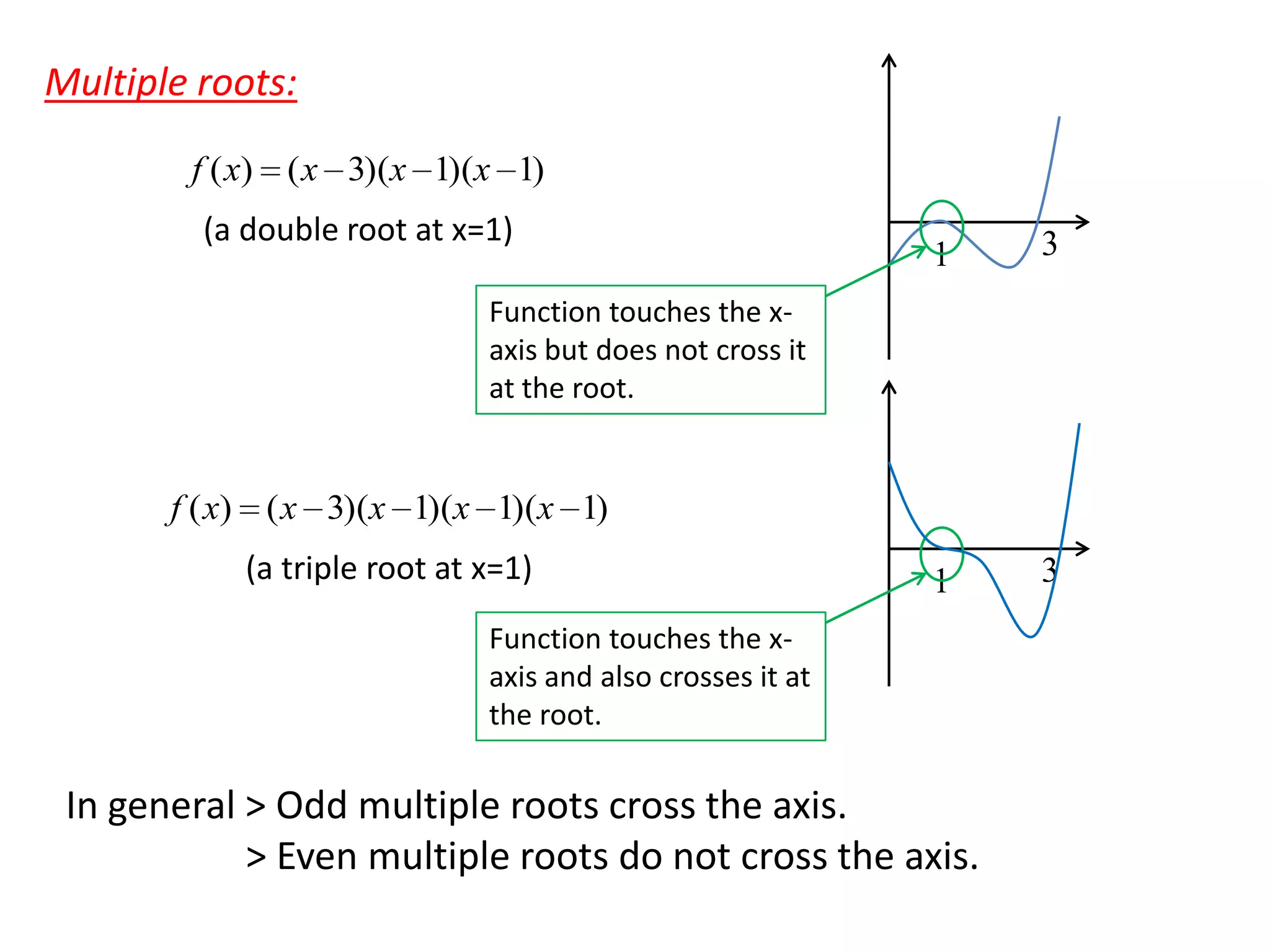

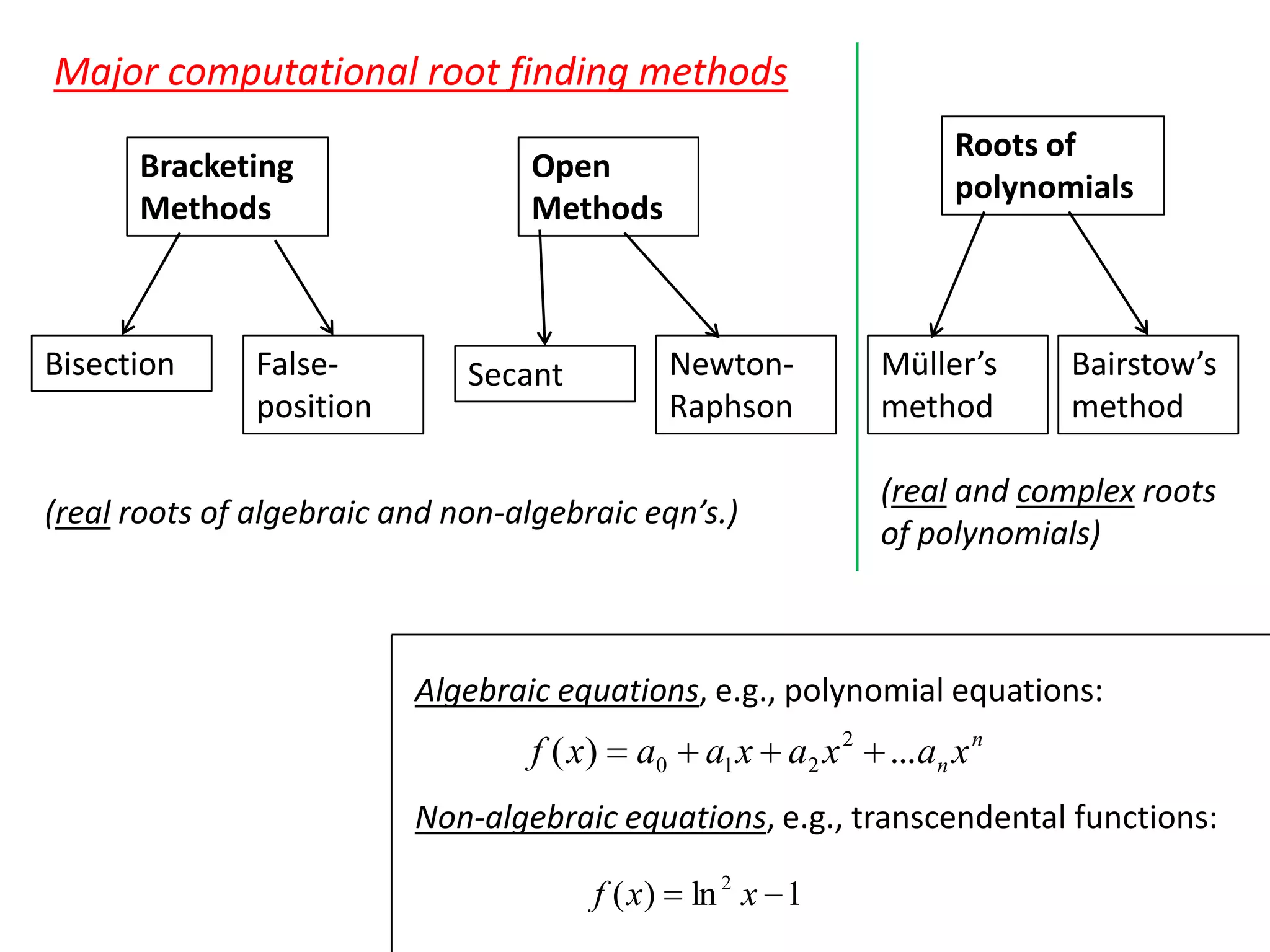

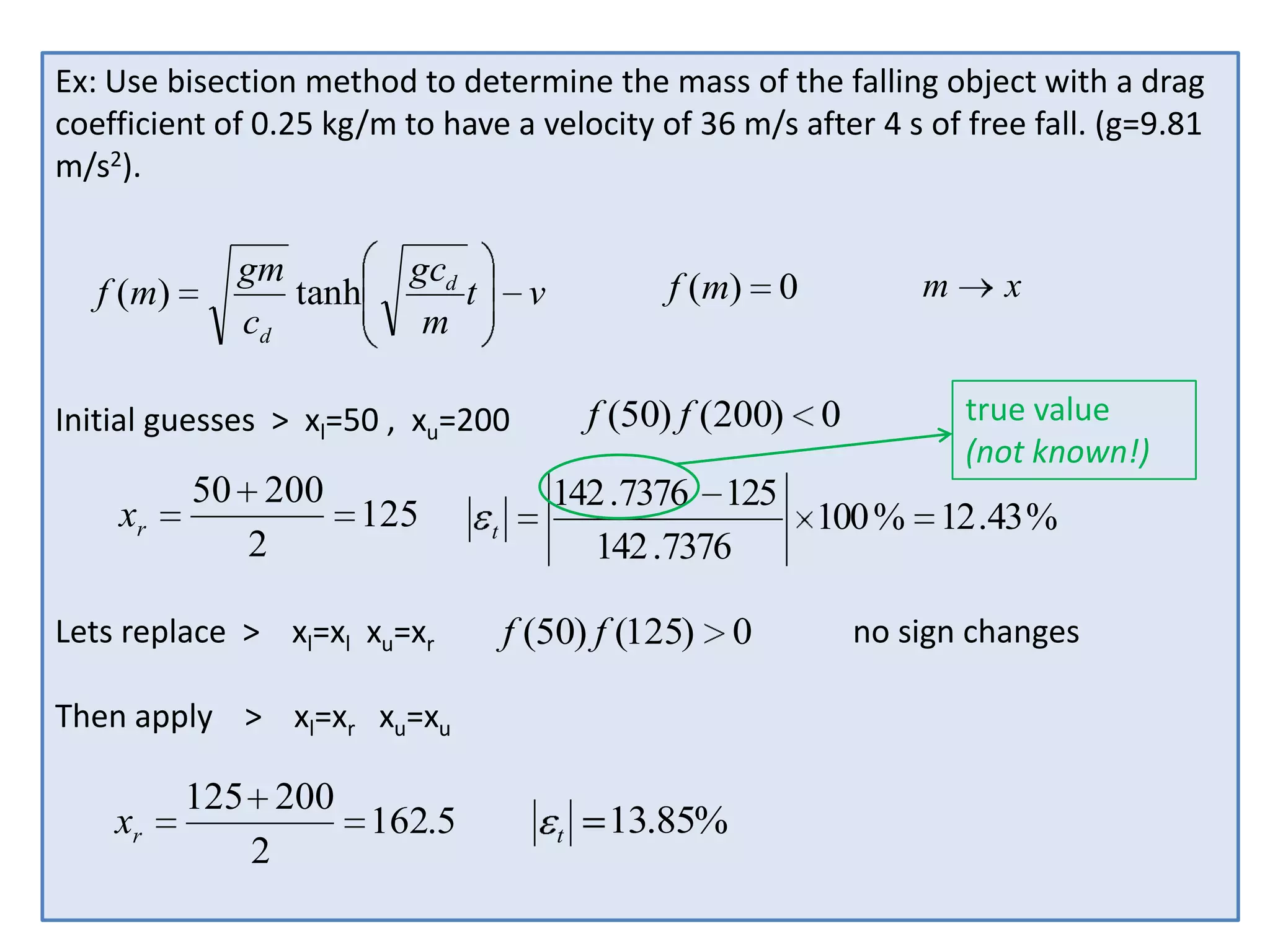

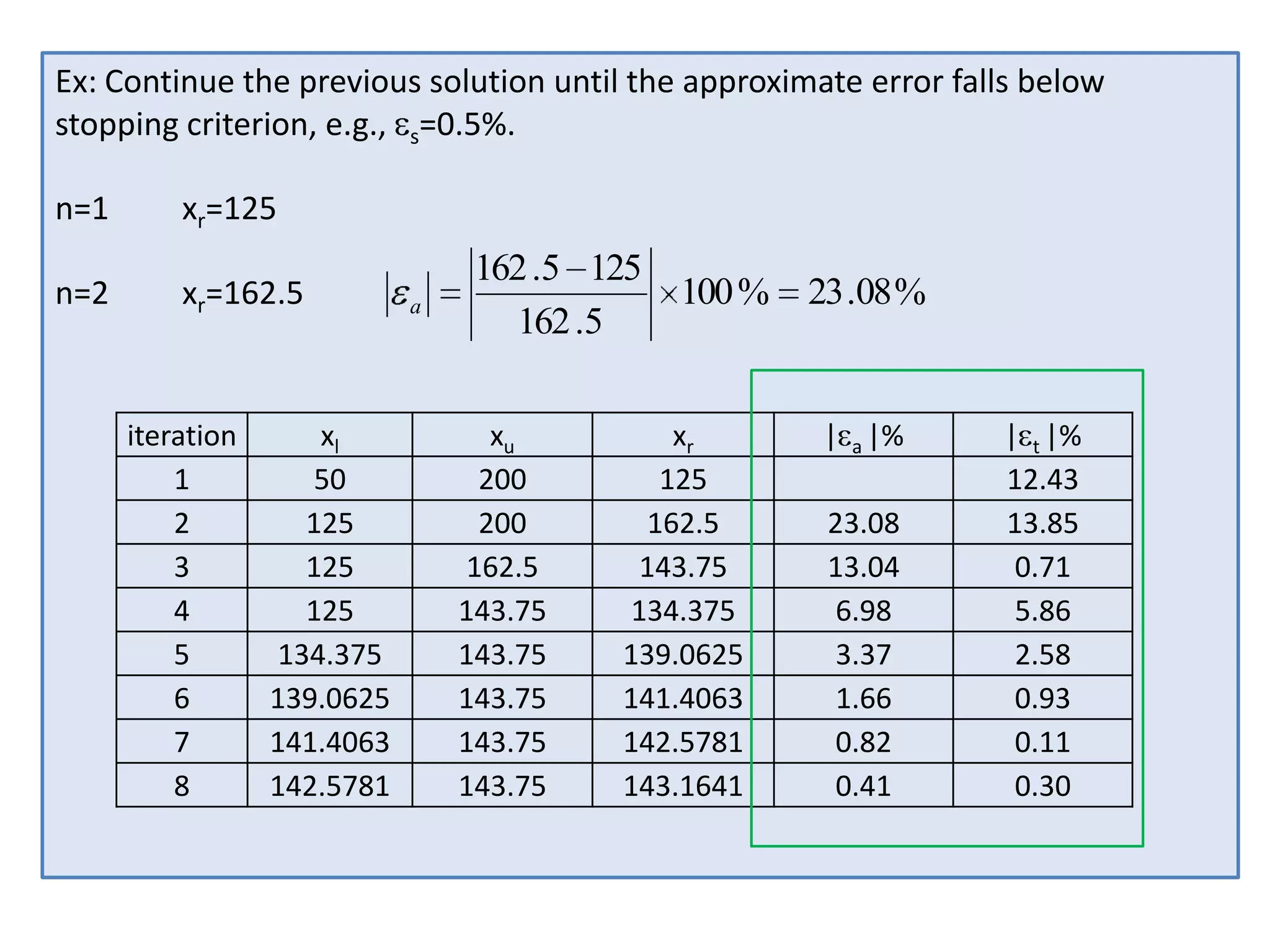

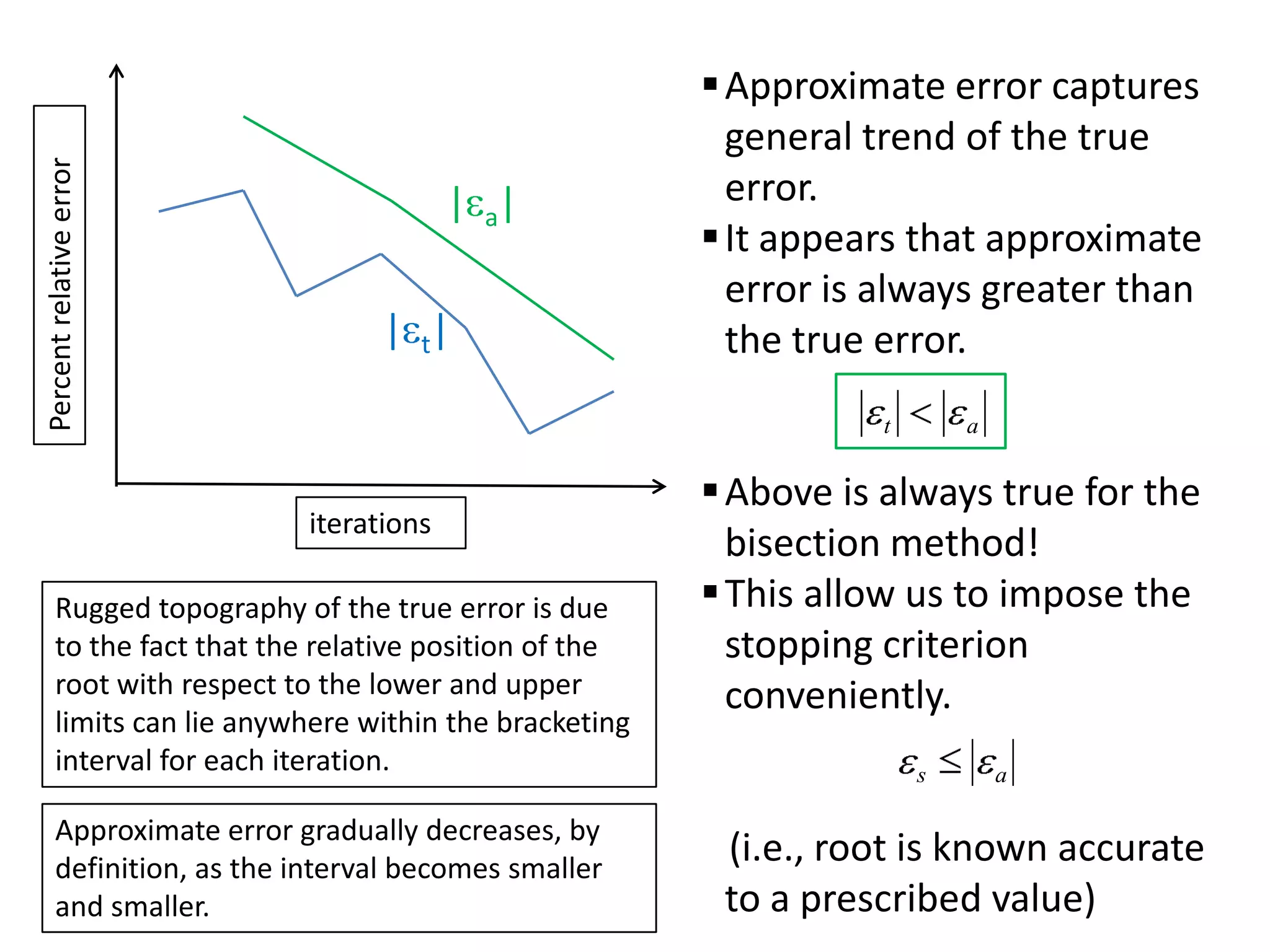

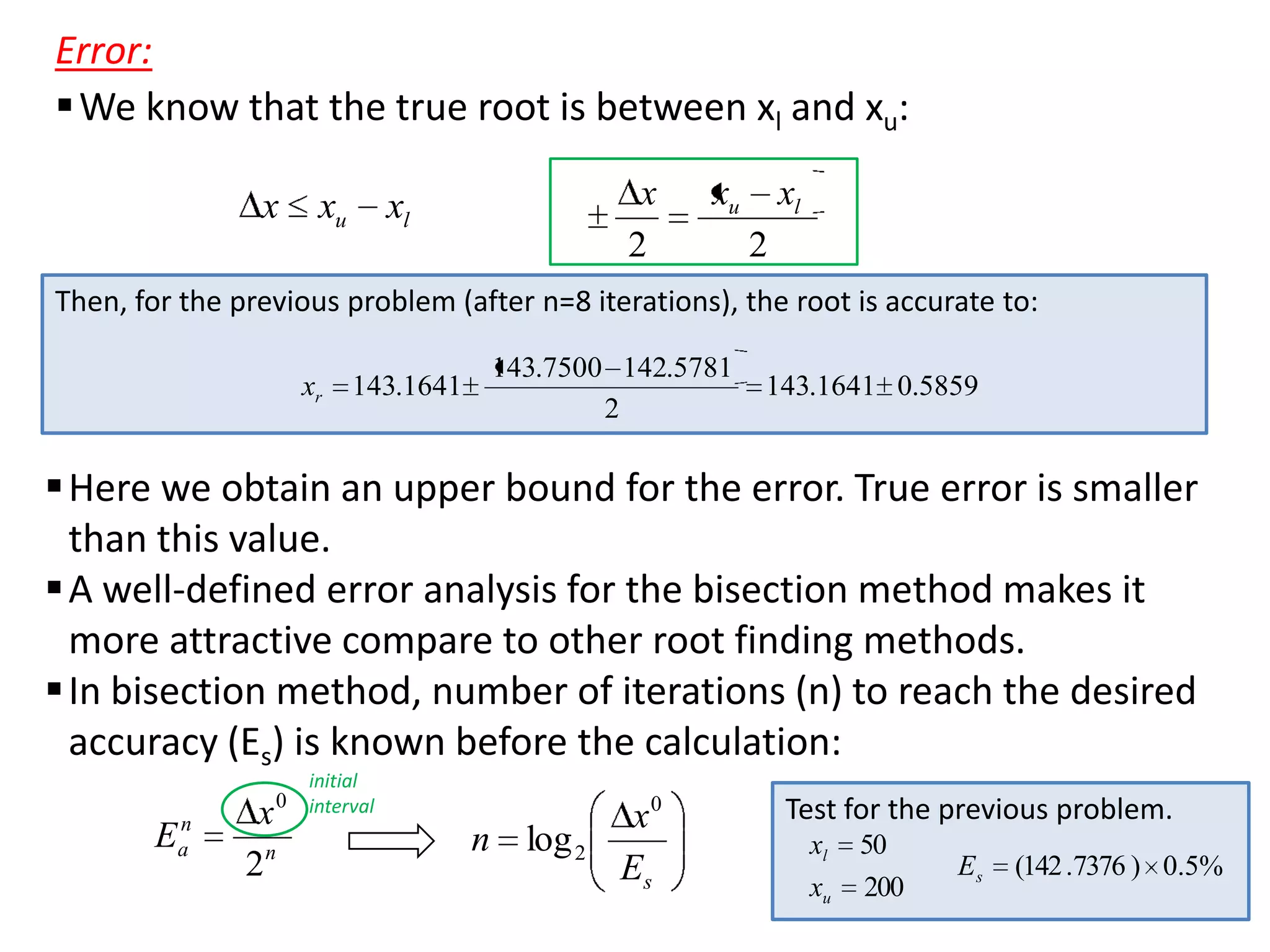

The document discusses various numerical methods for finding roots of functions, including:

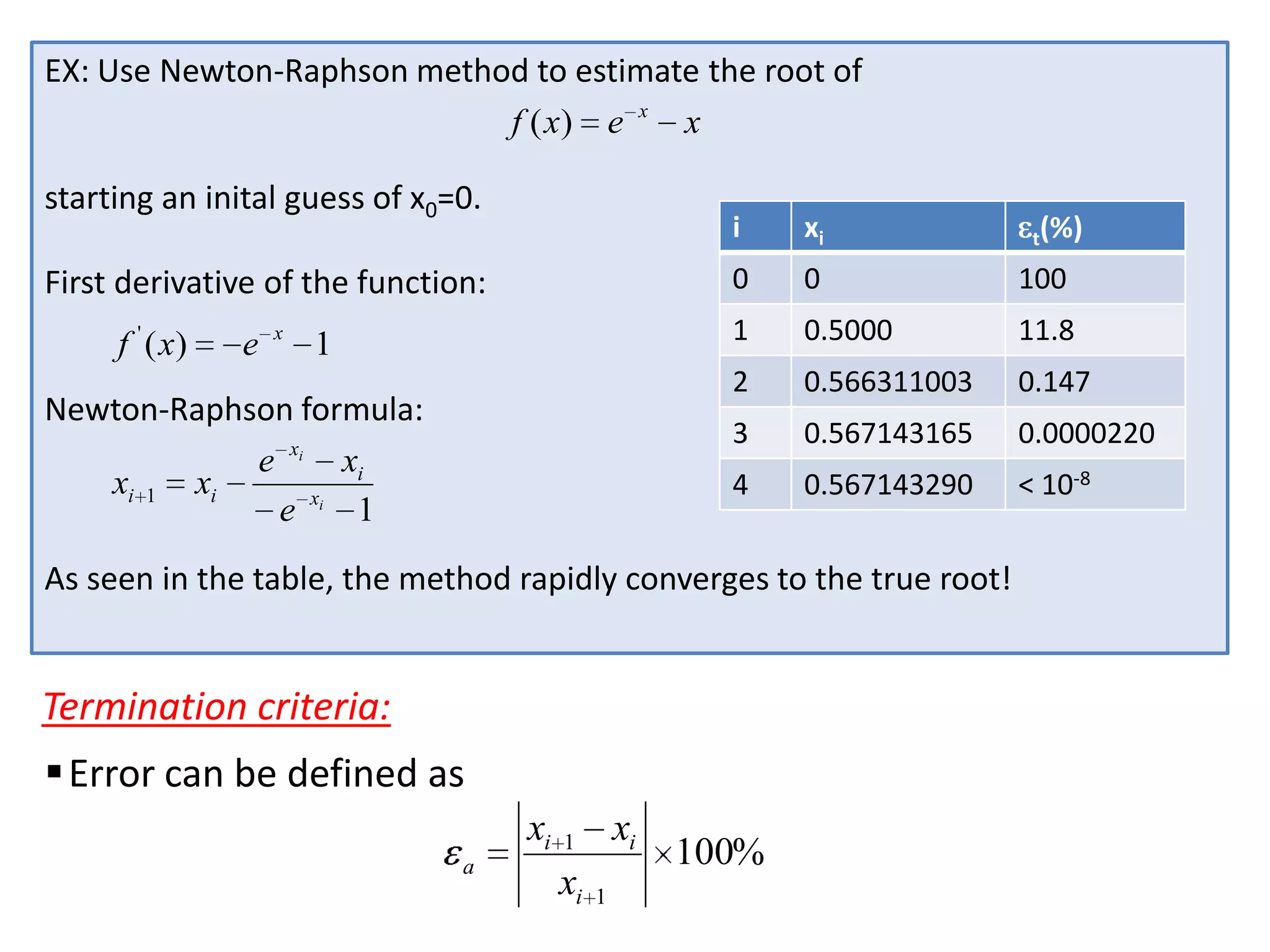

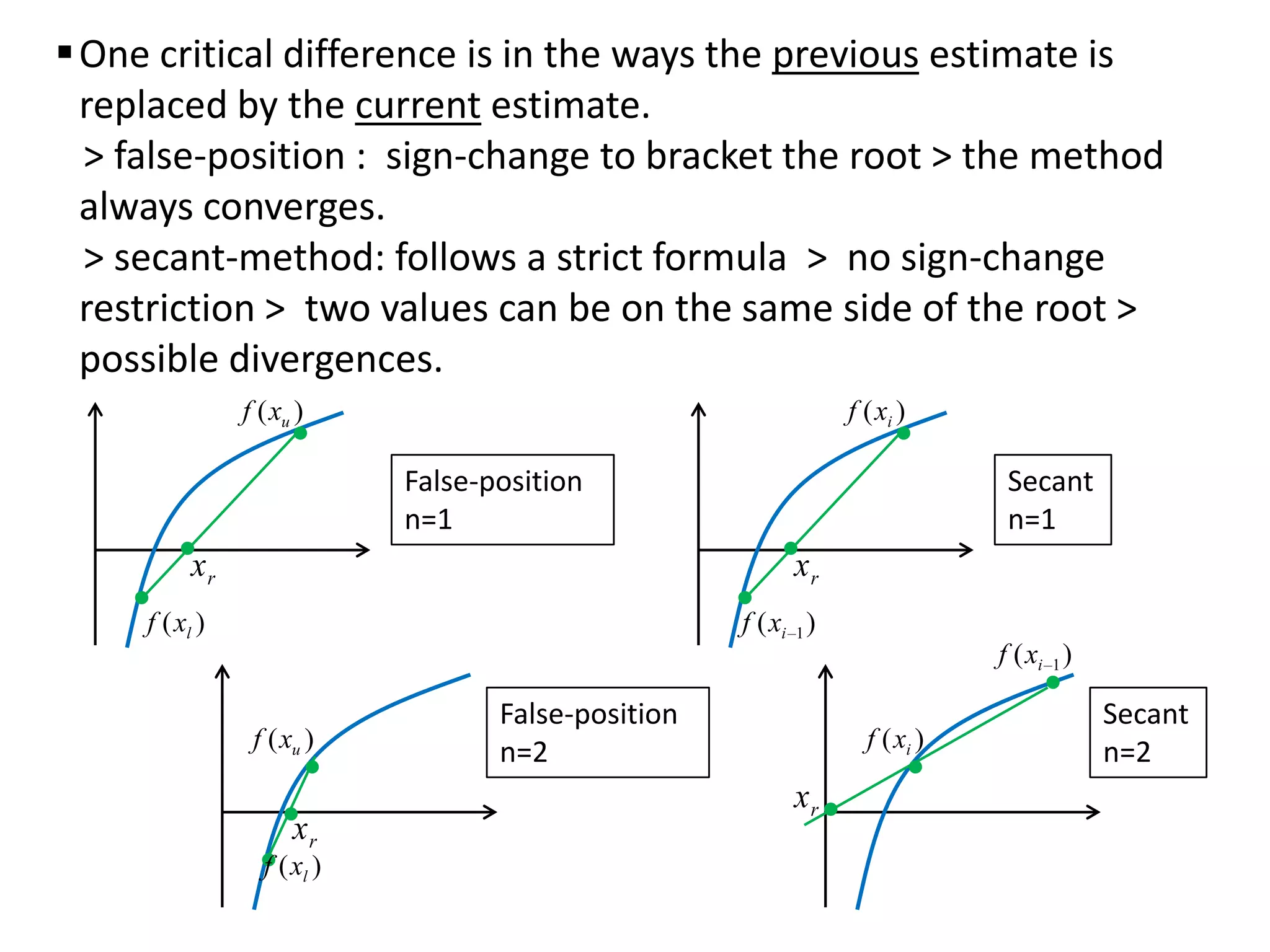

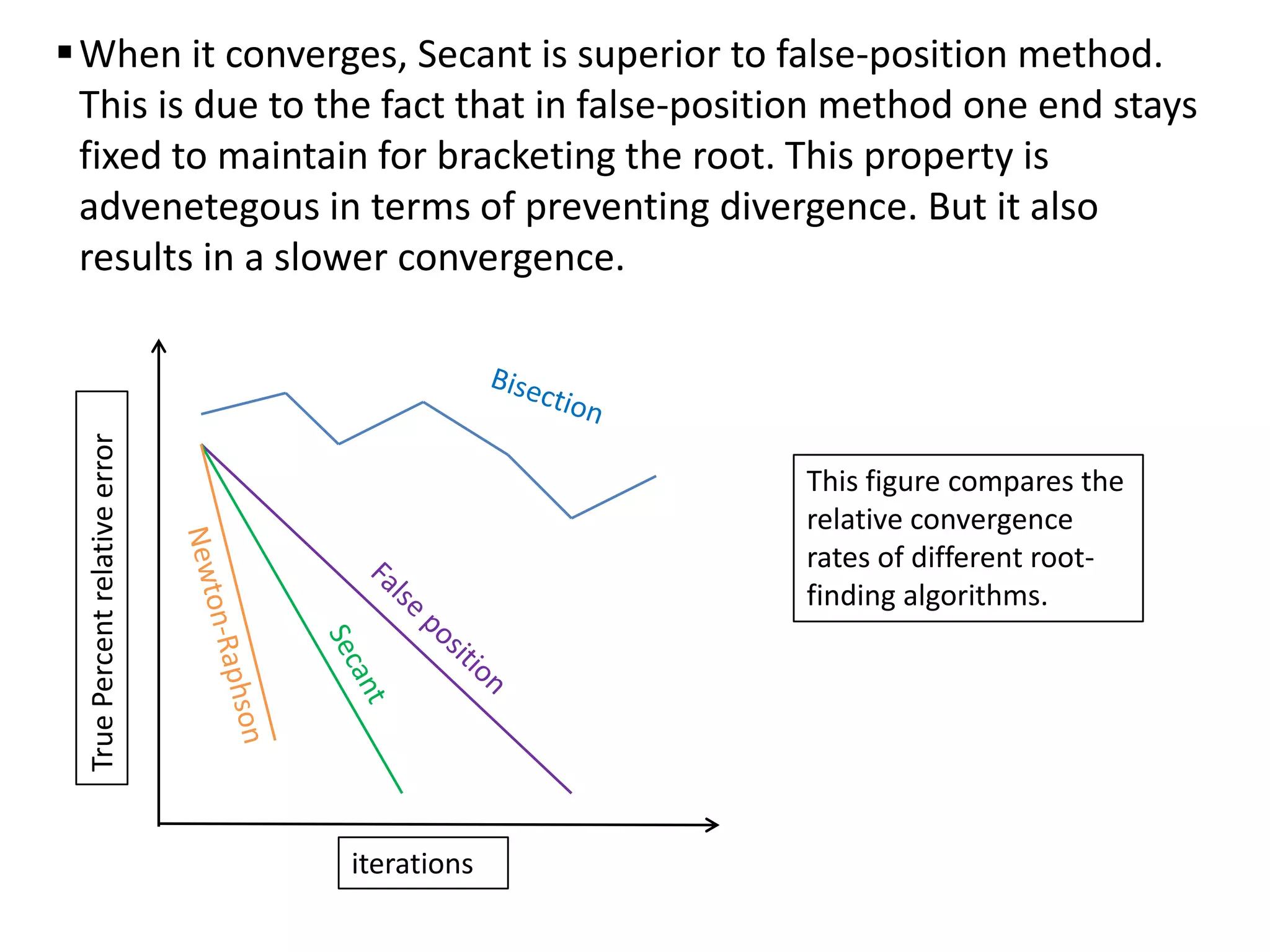

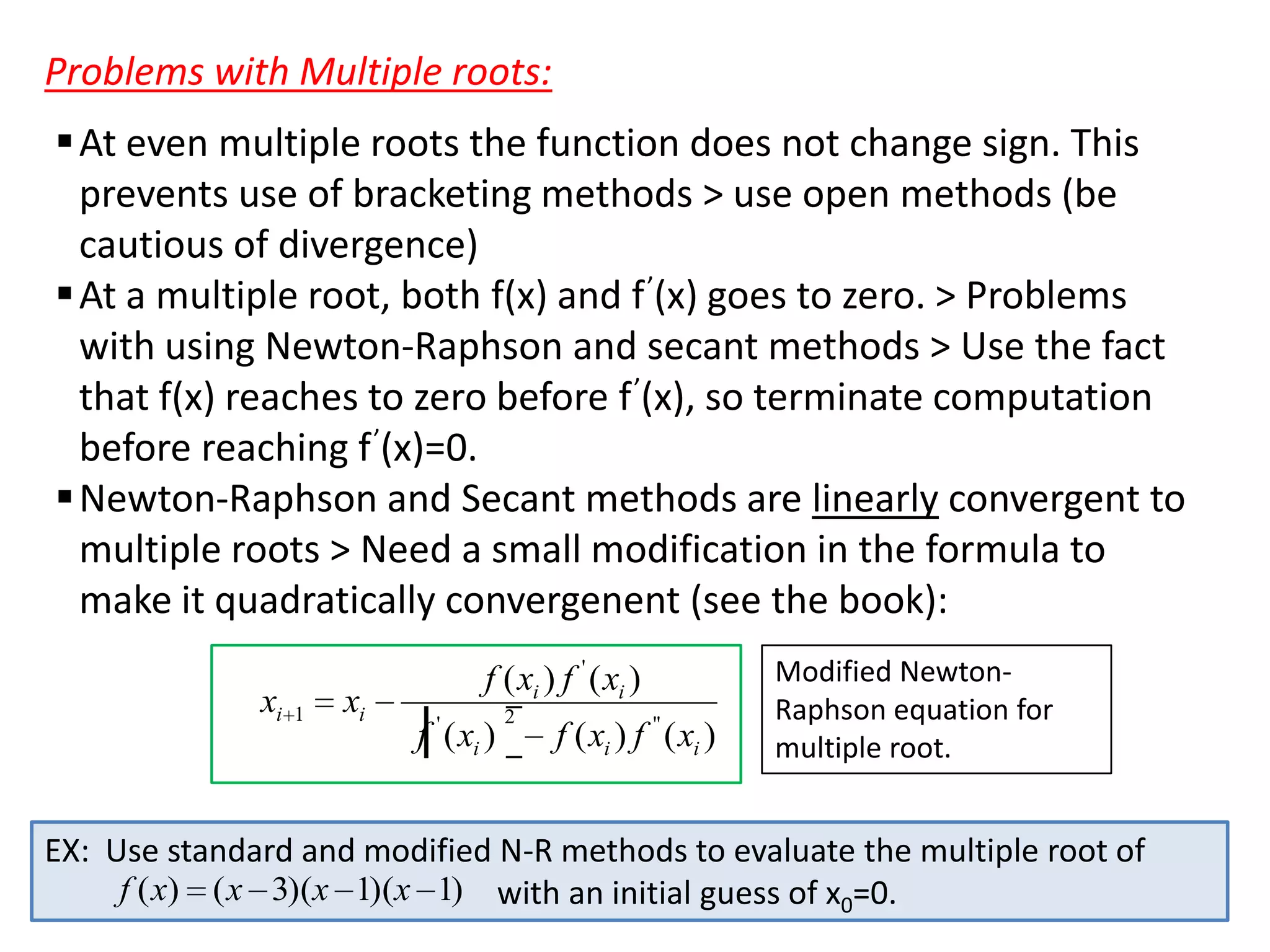

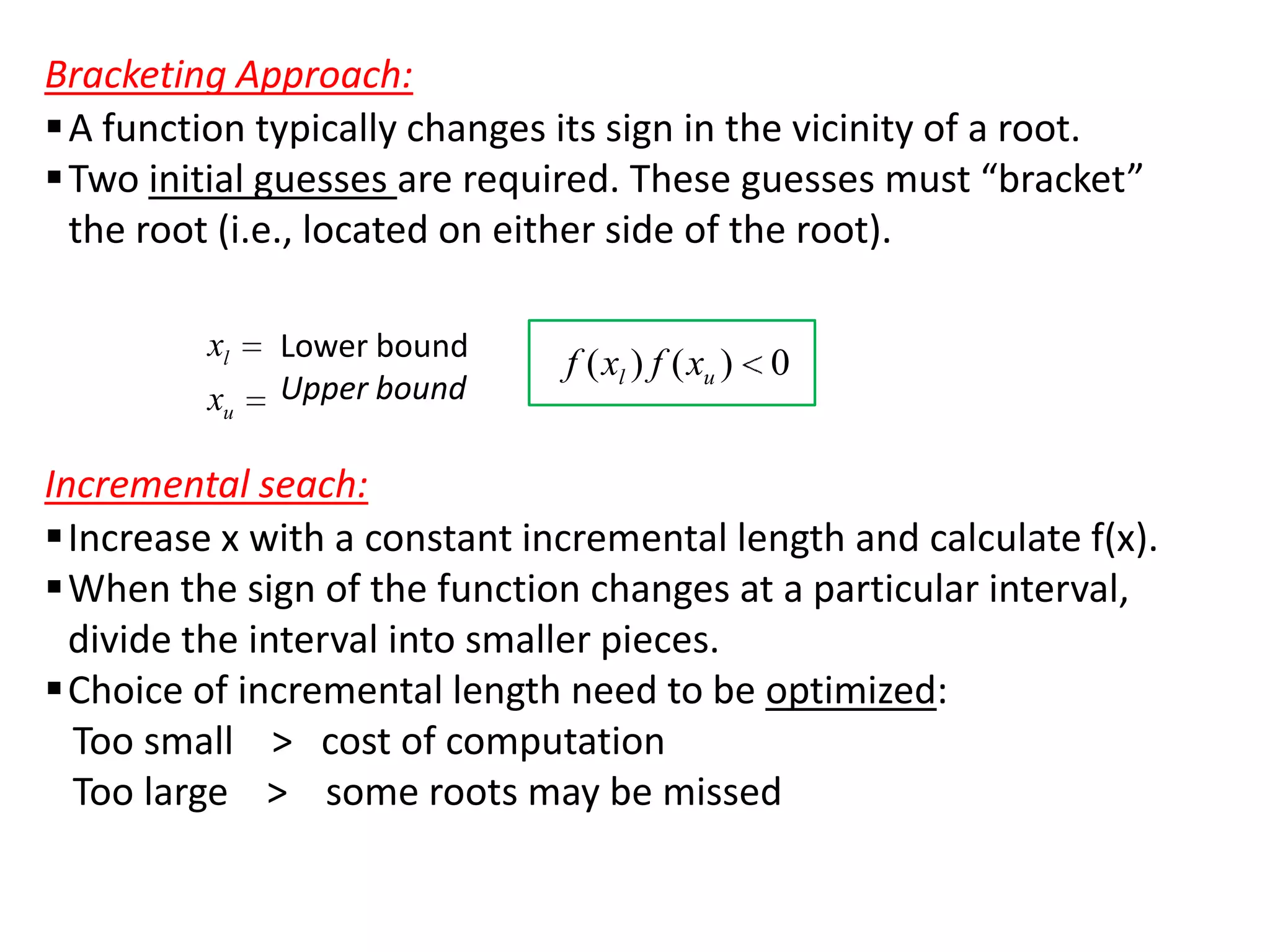

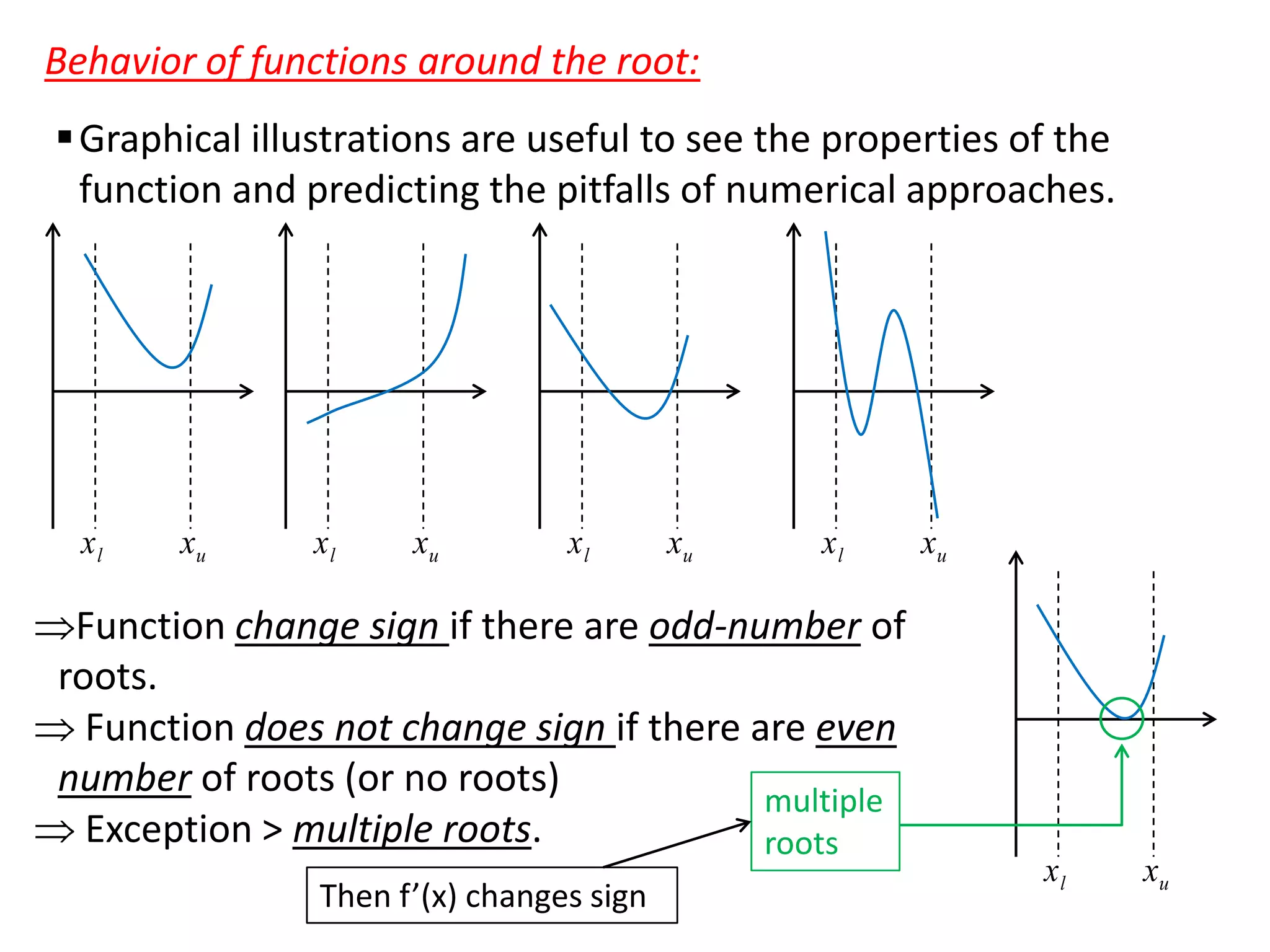

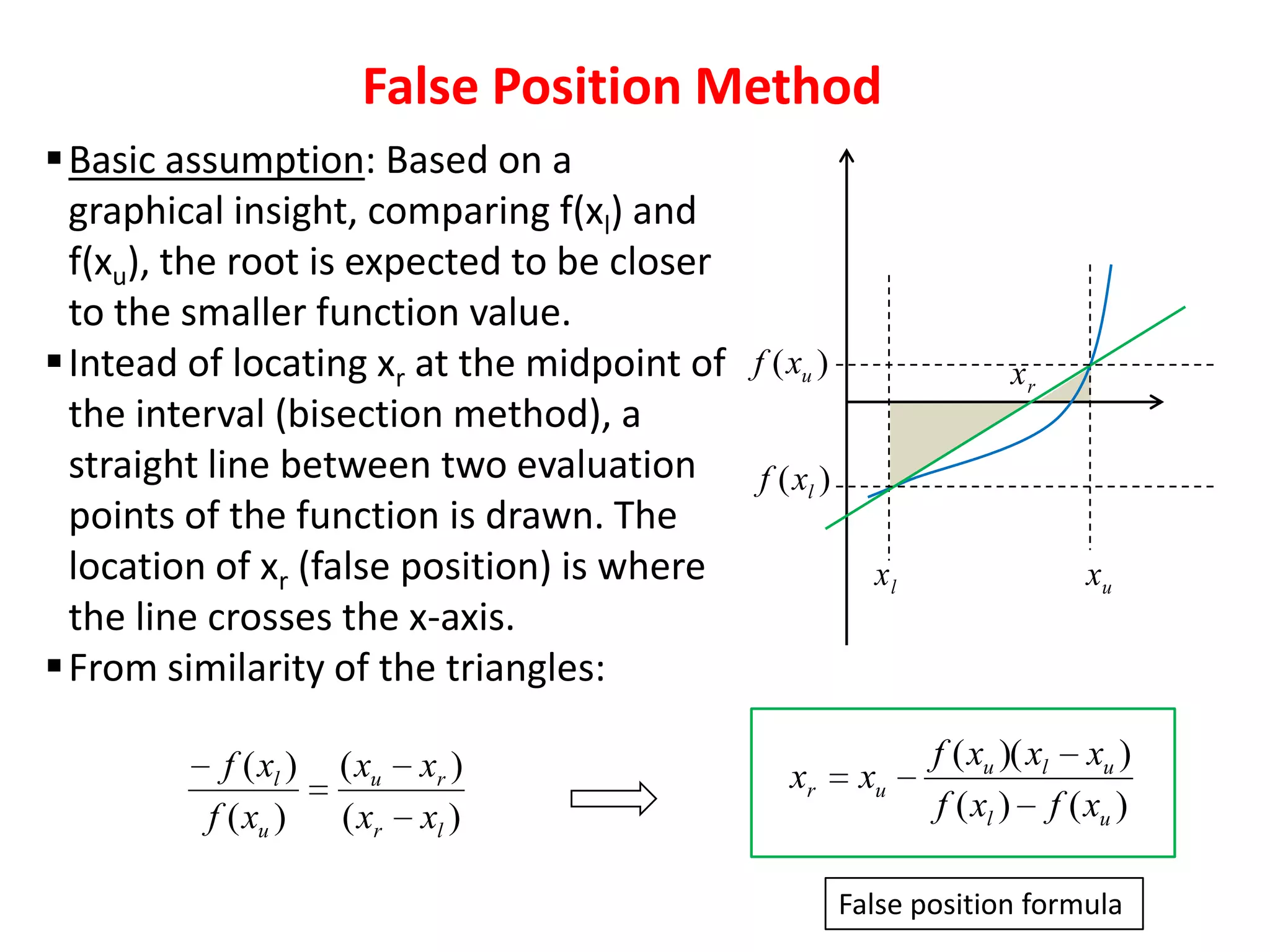

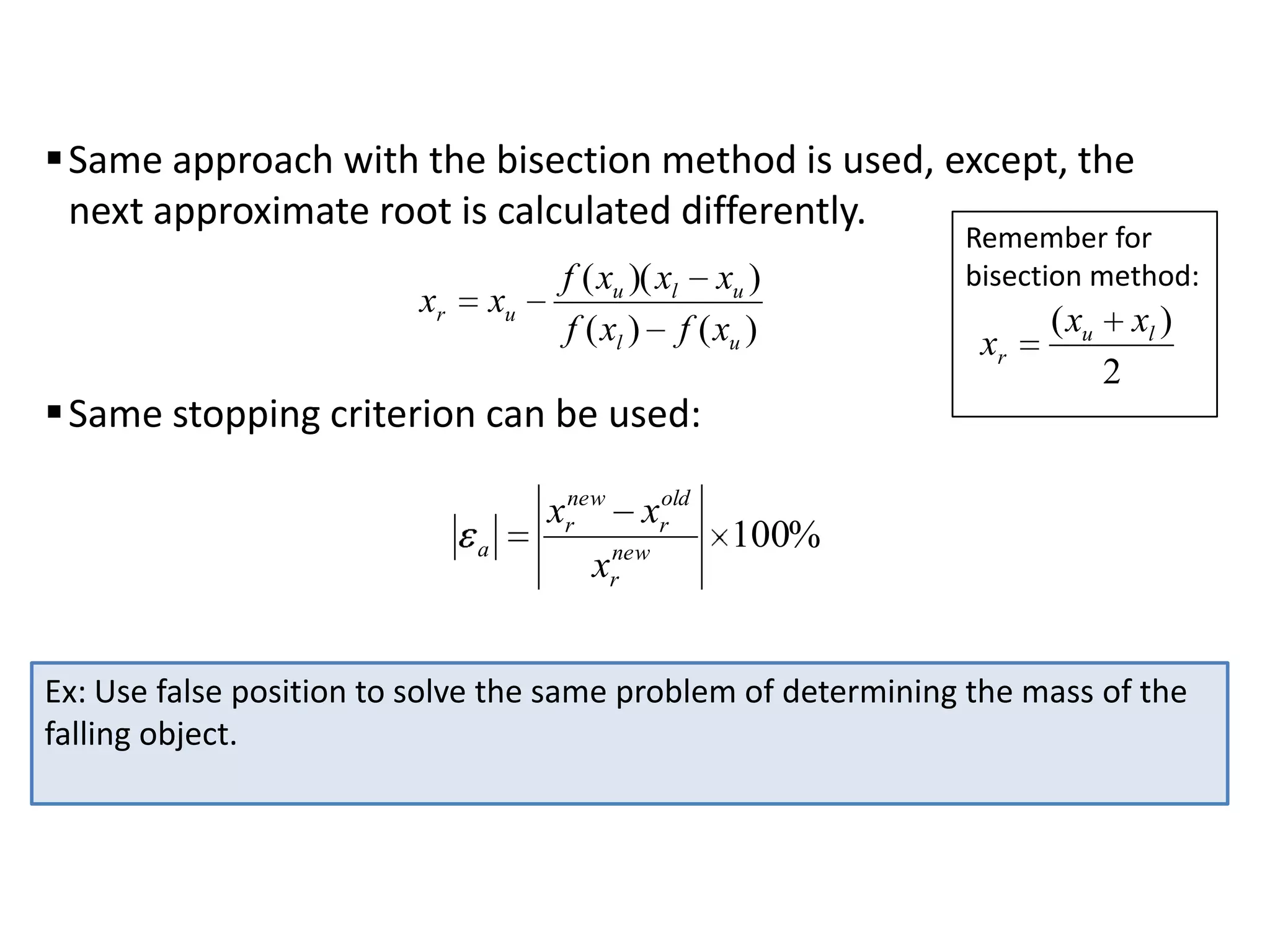

- Bracketing methods like bisection and false position that search between initial lower and upper bounds.

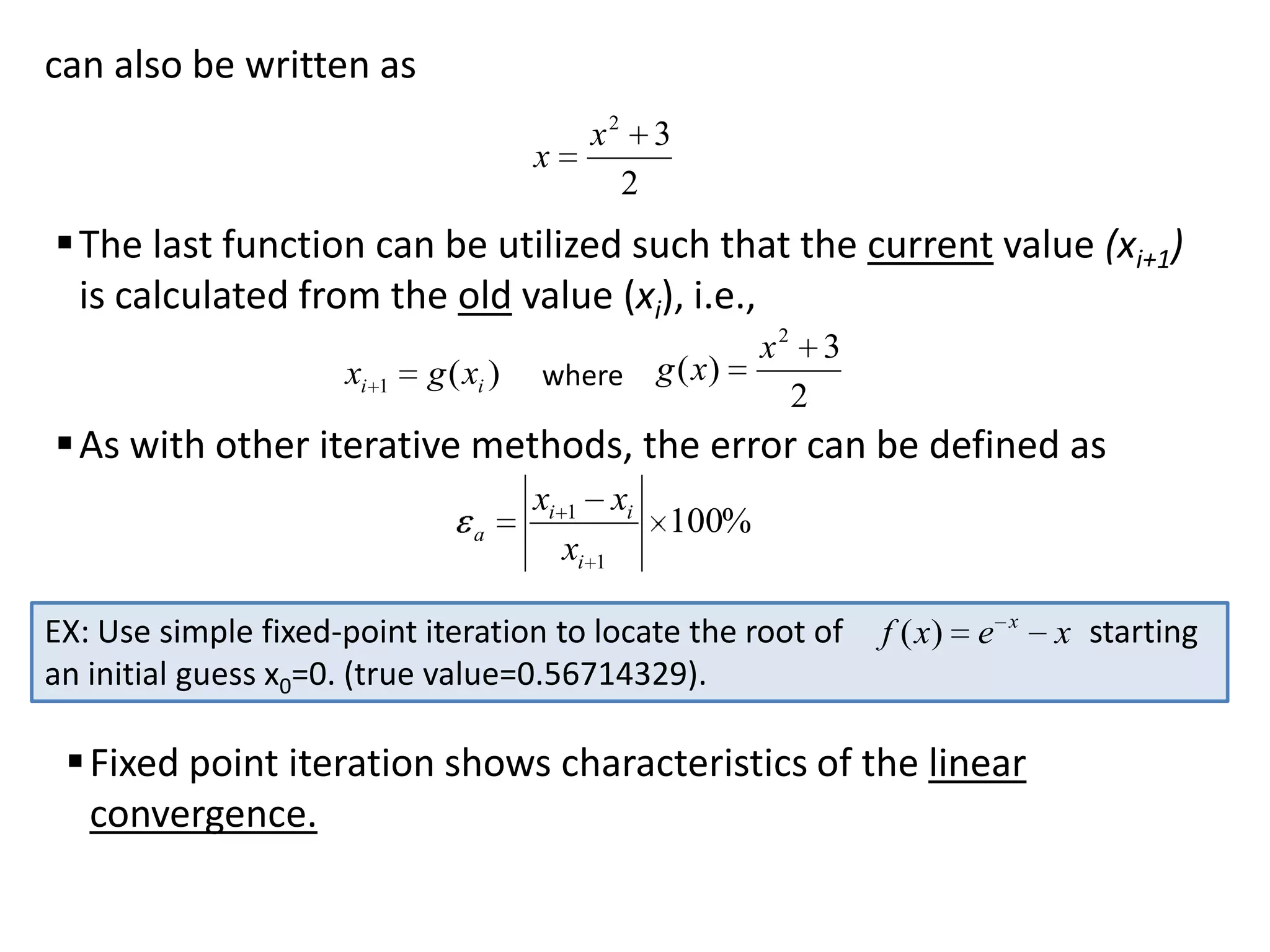

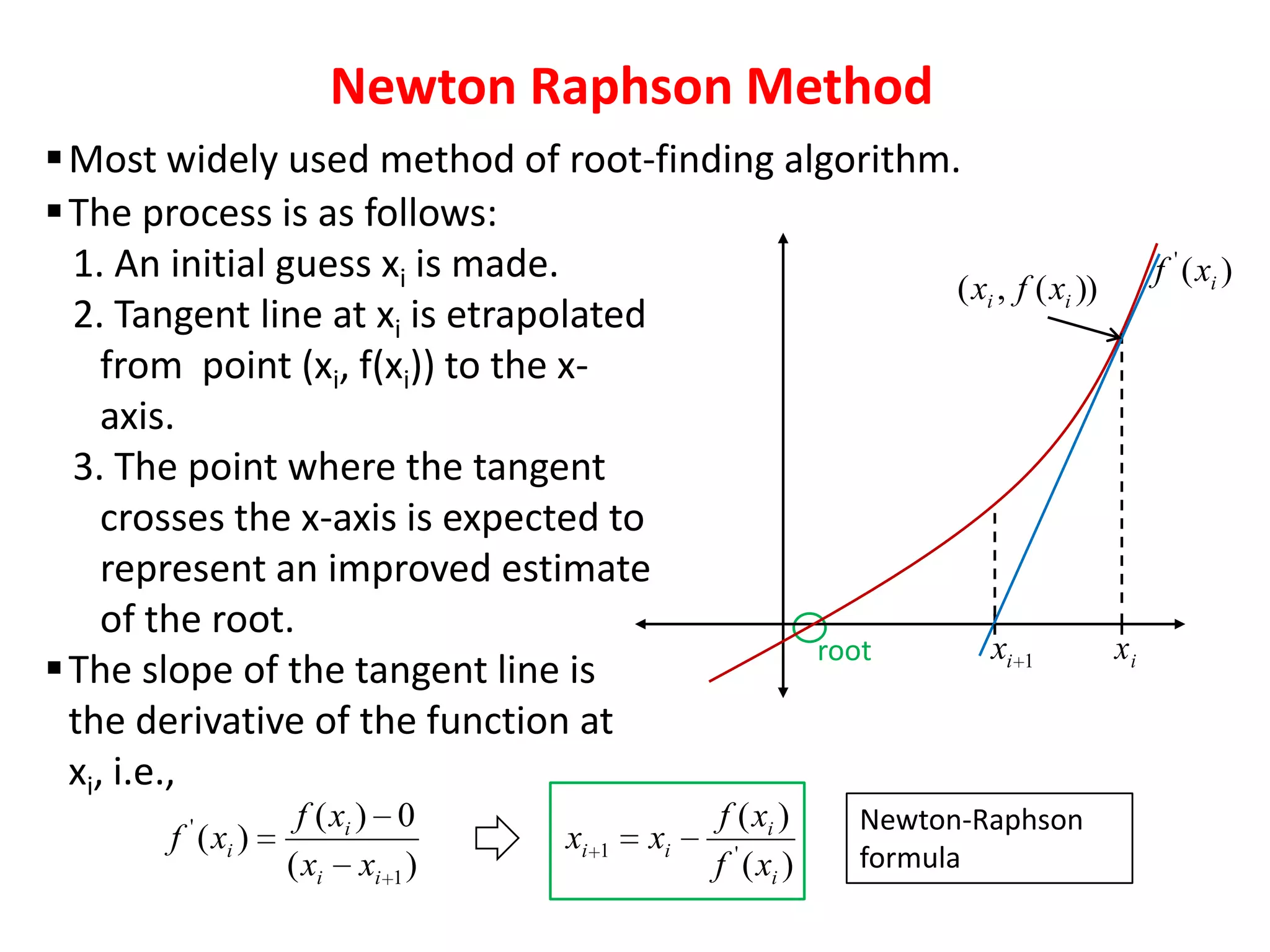

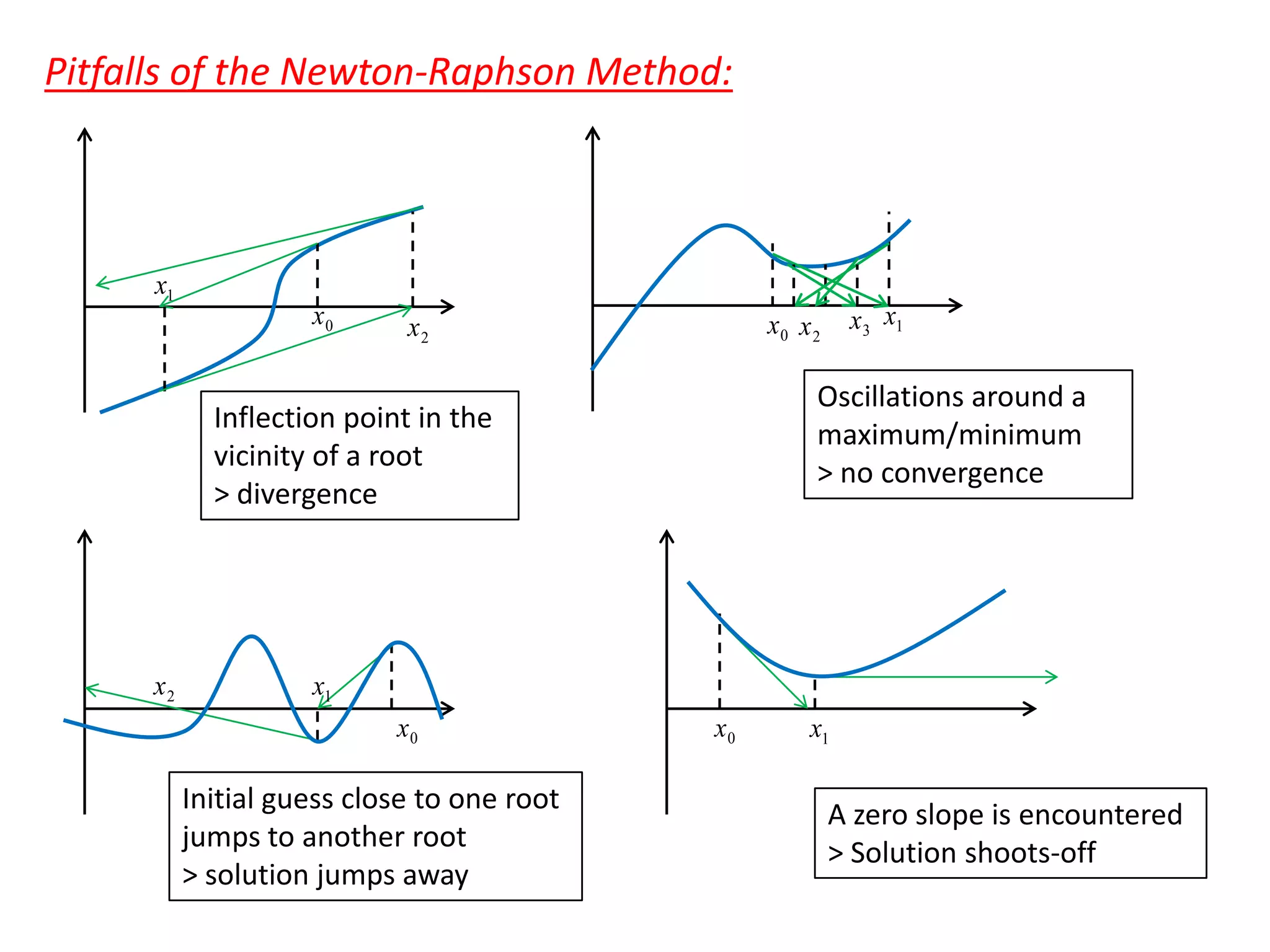

- Open methods like Newton-Raphson and secant that do not require a bracketing interval but are not guaranteed to converge.

- Techniques for polynomials like Müller's and Bairstow's methods.
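The difference between the bracketing and open families listed above can be sketched in a few lines of Python. This is a minimal illustration, not code from the slides: the function names, tolerances, and the test function $f(x) = x^2 - 2$ are all assumptions chosen for the example.

```python
import math


def bisection(f, xl, xu, tol=1e-10, max_iter=200):
    """Bracketing method: halve [xl, xu] while keeping a sign change inside."""
    if f(xl) * f(xu) > 0:
        raise ValueError("f(xl) and f(xu) must have opposite signs")
    for _ in range(max_iter):
        xr = 0.5 * (xl + xu)
        if f(xl) * f(xr) <= 0:
            xu = xr  # root lies in the lower half
        else:
            xl = xr  # root lies in the upper half
        if abs(xu - xl) < tol:
            break
    return 0.5 * (xl + xu)


def newton_raphson(f, dfdx, x0, tol=1e-10, max_iter=50):
    """Open method: follow the tangent line from a single starting guess."""
    x = x0
    for _ in range(max_iter):
        slope = dfdx(x)
        if slope == 0:
            raise ZeroDivisionError("zero derivative; Newton step undefined")
        x_new = x - f(x) / slope
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    raise RuntimeError("Newton-Raphson did not converge")


def secant(f, x0, x1, tol=1e-10, max_iter=50):
    """Open method: like Newton-Raphson, with a finite-difference slope."""
    for _ in range(max_iter):
        f0, f1 = f(x0), f(x1)
        if f1 == f0:
            raise ZeroDivisionError("flat secant line; step undefined")
        x2 = x1 - f1 * (x1 - x0) / (f1 - f0)
        if abs(x2 - x1) < tol:
            return x2
        x0, x1 = x1, x2
    raise RuntimeError("secant method did not converge")


def f(x):
    # Hypothetical test function with a root at sqrt(2)
    return x * x - 2.0


print(bisection(f, 1.0, 2.0))
print(newton_raphson(f, lambda x: 2.0 * x, 1.0))
print(secant(f, 1.0, 2.0))
```

Bisection refuses to start without a sign change and then cannot lose the root, while the two open methods accept any starting guess and signal failure only by exhausting `max_iter`.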

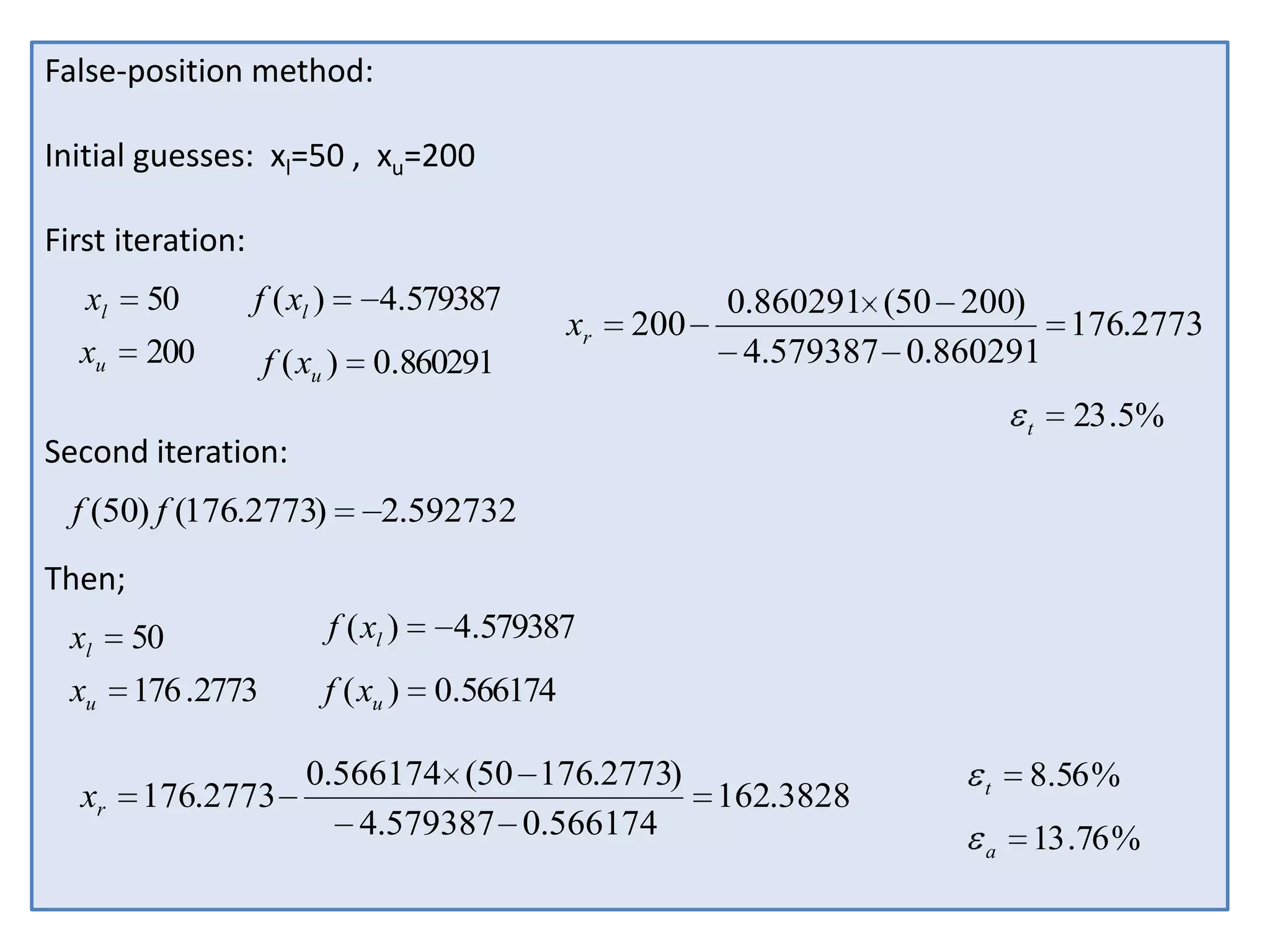

Worked examples apply bisection, false position, and Newton-Raphson to find the mass in a falling-object problem. The convergence properties and relative performance of the different methods are then compared.
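As a rough sketch of what such a falling-object example might look like, the code below solves for the mass with false position. The summary does not state the governing equation or the data, so everything here is an assumption: a linear-drag velocity model $v(t) = \frac{g m}{c}\left(1 - e^{-(c/m)t}\right)$, a drag coefficient of 15 kg/s, a target velocity of 35 m/s at 9 s, and the bracket [50, 70] kg.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def velocity(m, c, t):
    """Velocity of a falling object with linear drag (assumed model)."""
    return (G * m / c) * (1.0 - math.exp(-(c / m) * t))


def residual(m, c=15.0, t=9.0, v_target=35.0):
    """Zero when mass m gives the target velocity; all values are hypothetical."""
    return velocity(m, c, t) - v_target


def false_position(f, xl, xu, rel_tol=1e-10, max_iter=100):
    """Bracketing method: the new estimate is where the chord crosses zero."""
    fl, fu = f(xl), f(xu)
    if fl * fu > 0:
        raise ValueError("f(xl) and f(xu) must have opposite signs")
    xr = xl
    for _ in range(max_iter):
        xr_old = xr
        xr = xu - fu * (xl - xu) / (fl - fu)
        fr = f(xr)
        if fr == 0.0:
            return xr
        if fl * fr < 0:
            xu, fu = xr, fr  # root lies between xl and xr
        else:
            xl, fl = xr, fr  # root lies between xr and xu
        if abs(xr - xr_old) <= rel_tol * abs(xr):
            return xr
    raise RuntimeError("false position did not converge")


mass = false_position(residual, 50.0, 70.0)
print(f"mass = {mass:.4f} kg, residual = {residual(mass):.2e}")
```

The residual is nearly linear in the mass over this bracket, which is the situation where false position tends to do well.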

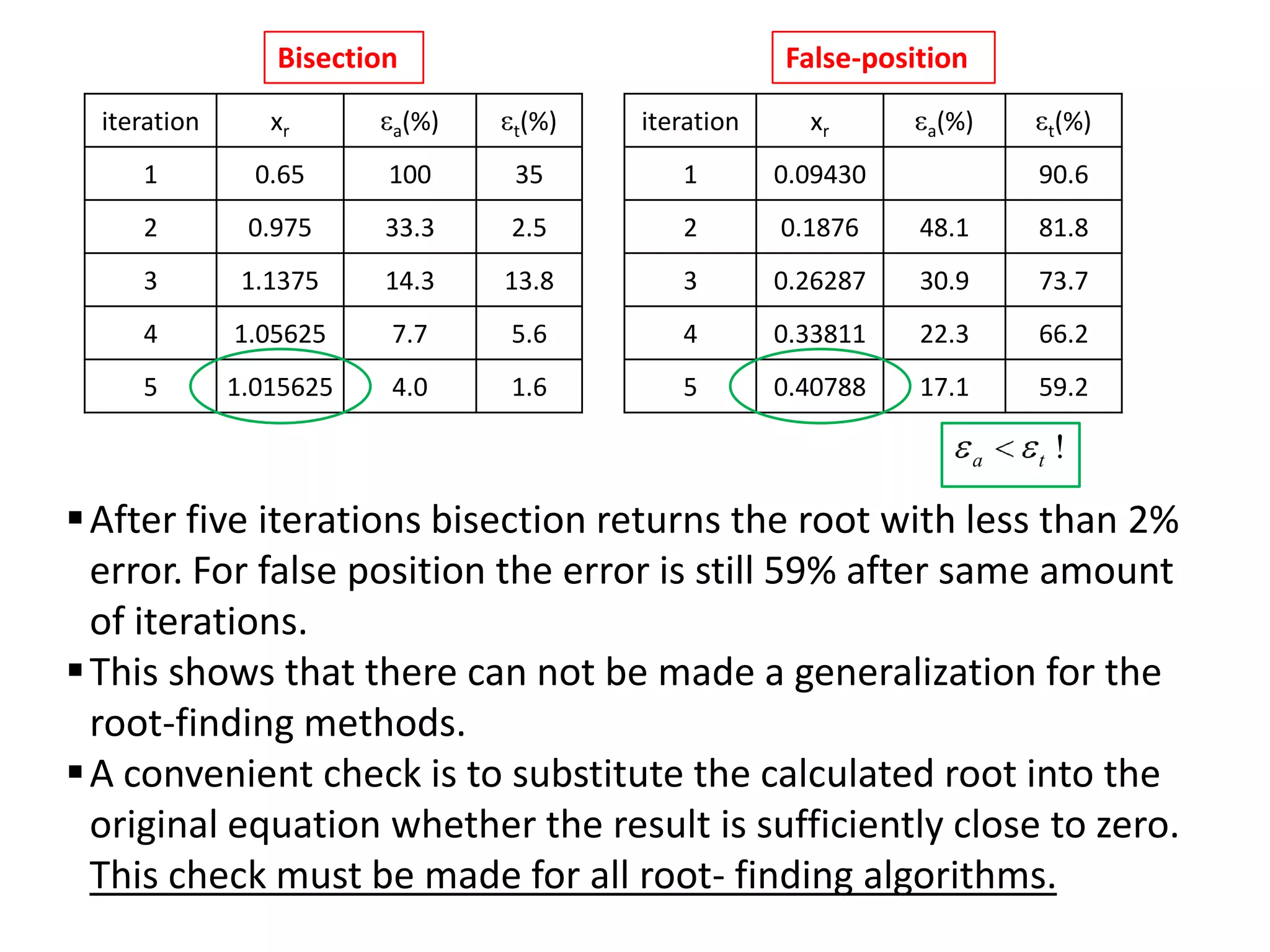

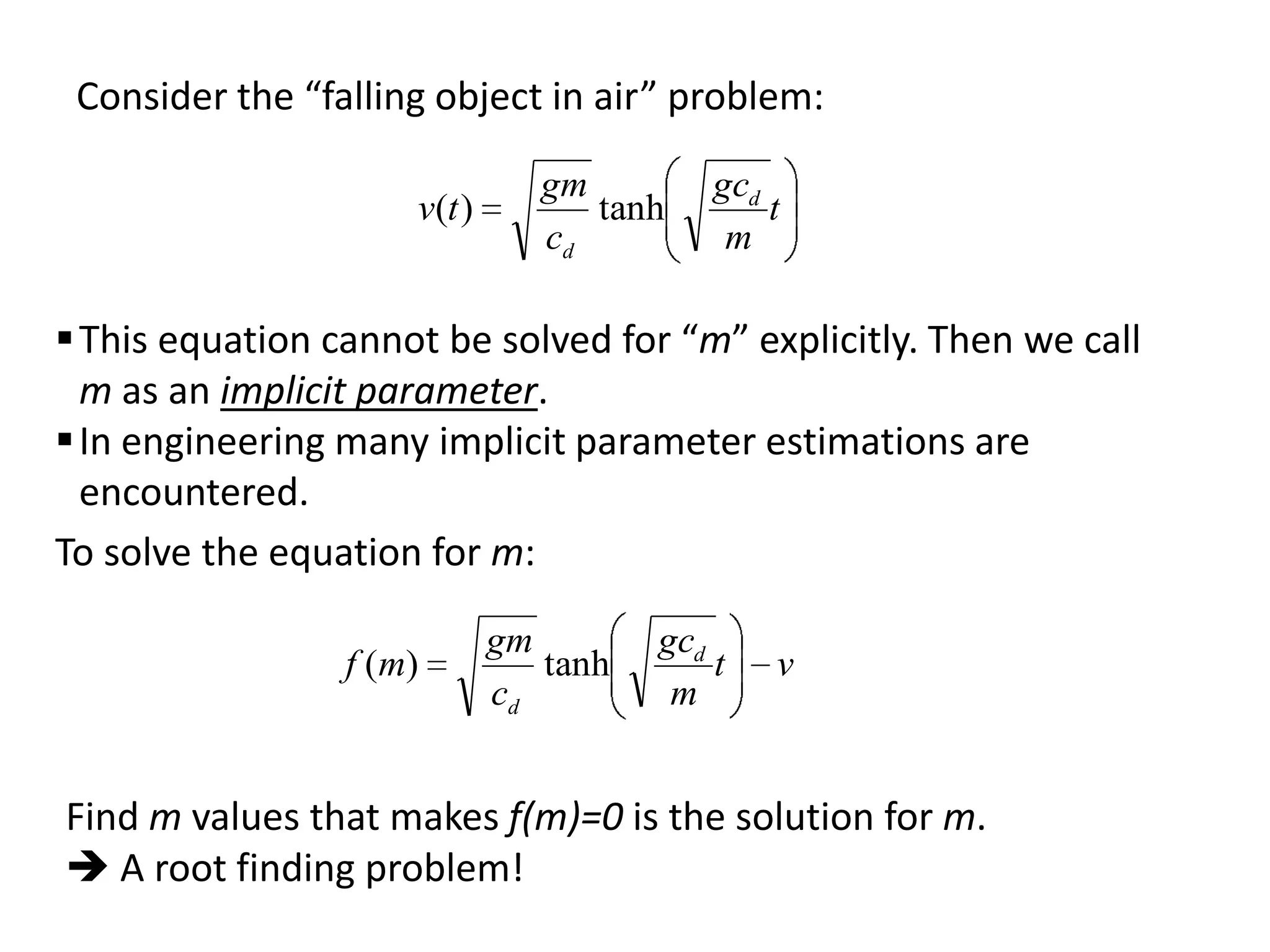

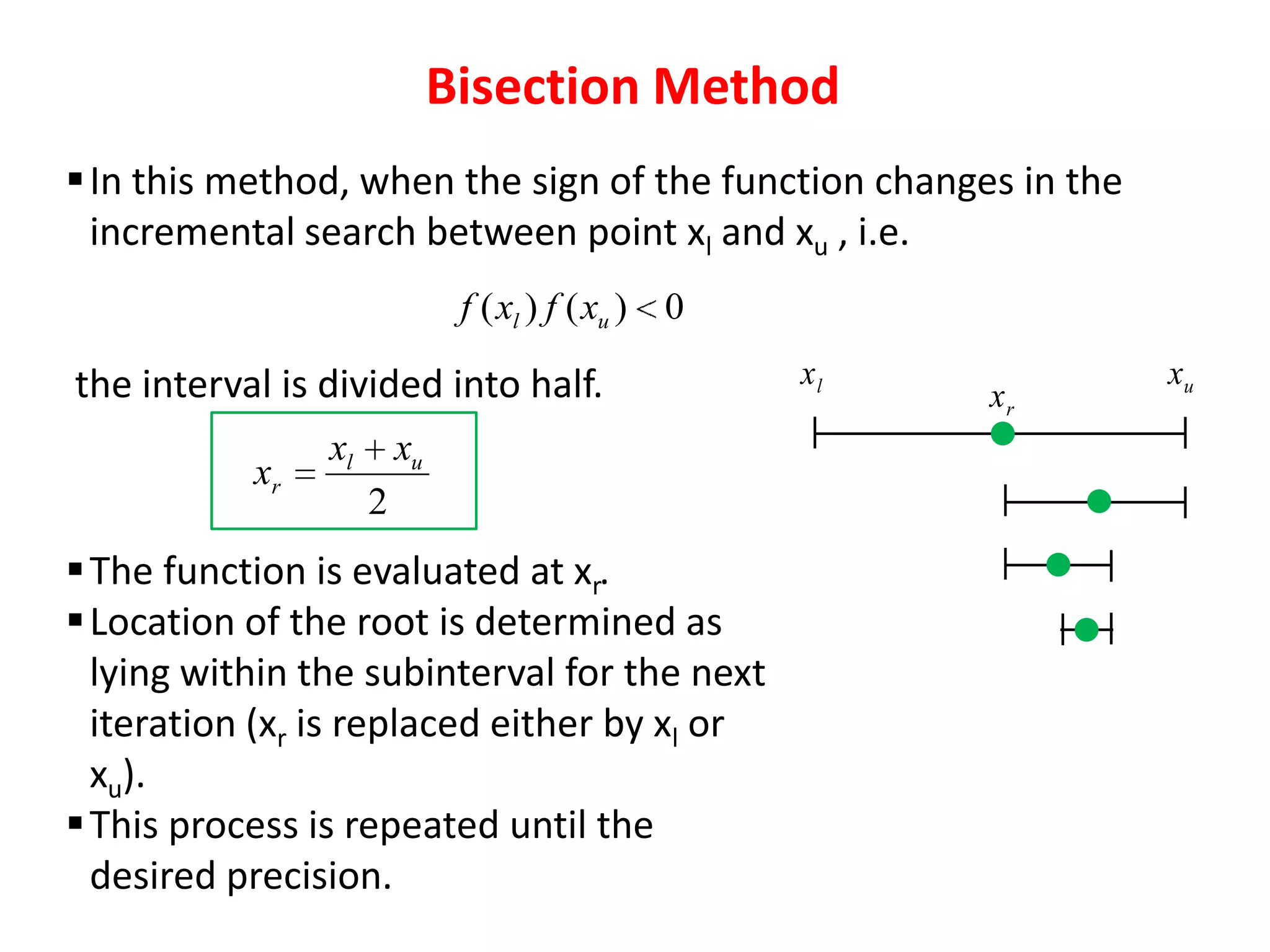

![Cases where bisection is preferable to false position](https://image.slidesharecdn.com/es272ch3a-131020141145-phpapp02/75/Es272-ch3a-19-2048.jpg)

Although false position generally converges faster than bisection, this is not always true. Consider, for example, searching for the root of $f(x) = x^{10} - 1$ on the interval [0, 1.3], where the true root is $x = 1.0$.

Here, convergence to the true root is very slow: one of the bracketing limits tends to stay fixed, which leads to poor convergence. In this example, the basic assumption of false position, that the root lies closer to the bracket endpoint whose function value is smaller in magnitude, is violated.
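The slow convergence can be checked numerically. The sketch below assumes the slide's function is $f(x) = x^{10} - 1$ on [0, 1.3] and compares the first five estimates from each method. The helper names are hypothetical and a fixed iteration count is used only to keep the comparison simple.

```python
def f(x):
    # Assumed test function: very flat near x = 0, very steep near x = 1.3
    return x**10 - 1.0


def bisection_iterates(f, xl, xu, n):
    """Return the first n midpoint estimates from bisection."""
    iterates = []
    for _ in range(n):
        xr = 0.5 * (xl + xu)
        iterates.append(xr)
        if f(xl) * f(xr) < 0:
            xu = xr
        else:
            xl = xr
    return iterates


def false_position_iterates(f, xl, xu, n):
    """Return the first n chord-intersection estimates from false position."""
    iterates = []
    for _ in range(n):
        fl, fu = f(xl), f(xu)
        xr = xu - fu * (xl - xu) / (fl - fu)
        iterates.append(xr)
        if fl * f(xr) < 0:
            xu = xr
        else:
            xl = xr  # in this example the upper limit never moves
    return iterates


bis = bisection_iterates(f, 0.0, 1.3, 5)
fp = false_position_iterates(f, 0.0, 1.3, 5)
for i, (b, p) in enumerate(zip(bis, fp), start=1):
    print(f"iter {i}: bisection = {b:.6f}  false position = {p:.6f}")
```

Because $|f(0)| = 1$ is much smaller than $|f(1.3)|$, each chord crosses zero close to the lower limit, so the false-position estimates creep upward while the upper limit stays at 1.3. After five iterations the bisection estimate is already much closer to 1.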