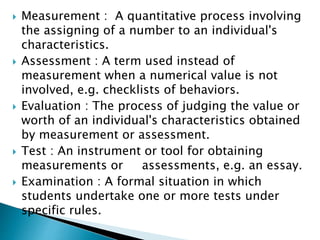

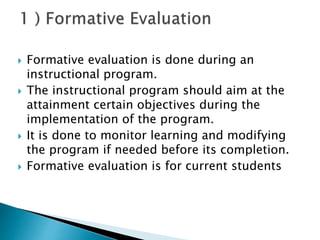

The document discusses methods of educational evaluation, including formative and summative assessment, internal and external evaluation, and qualitative and quantitative measures. It describes evaluation tools such as essays, short-answer questions, objective tests, observations, anecdotal records, checklists, rating scales, and oral exams. The purpose of evaluation is to measure student achievement and program effectiveness in order to improve the educational process.