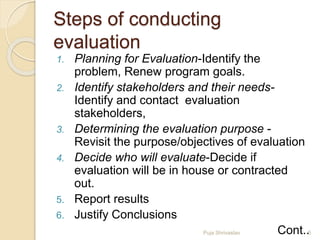

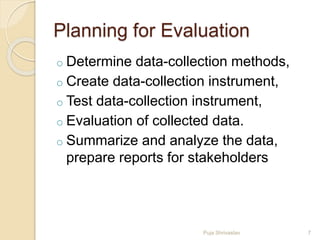

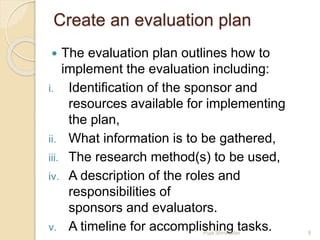

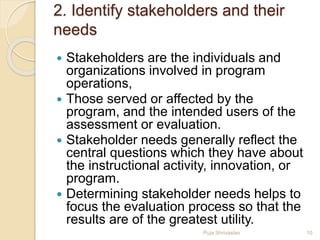

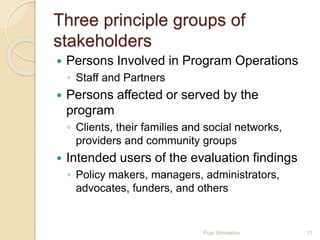

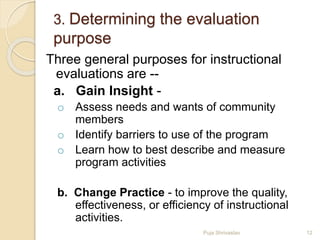

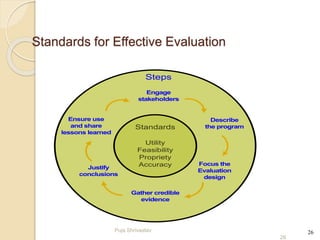

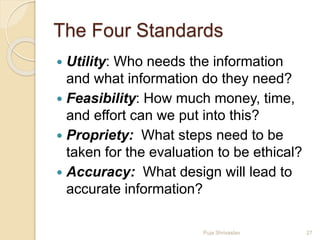

The document outlines the steps and standards for conducting a program evaluation, focusing on assessing instructional activities in order to improve their effectiveness. It stresses the need to identify stakeholders, gather comprehensive data, and analyze the findings so that decisions are well informed. Key elements include planning the evaluation, determining its purpose, justifying its conclusions, and meeting ethical standards throughout the process.