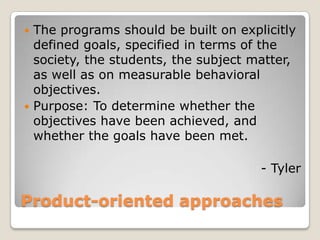

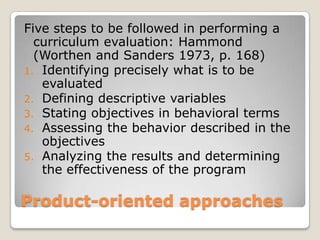

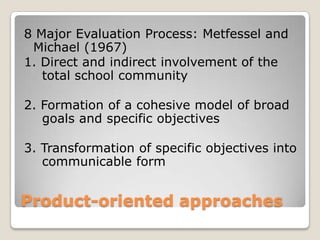

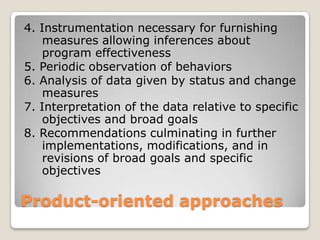

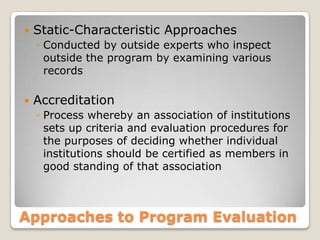

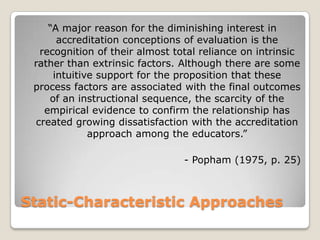

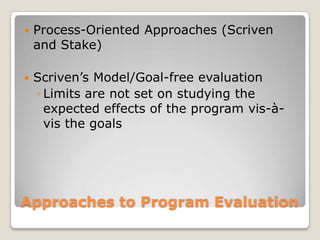

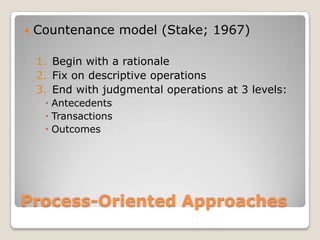

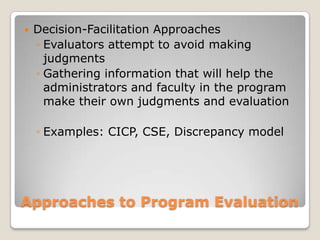

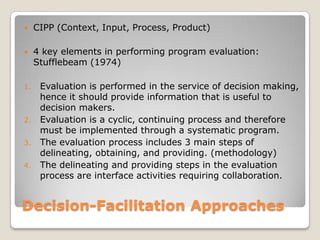

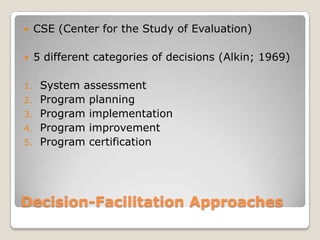

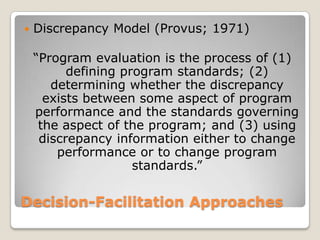

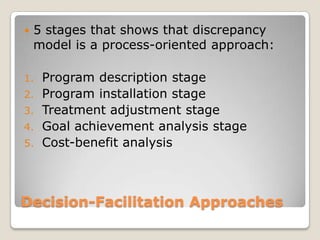

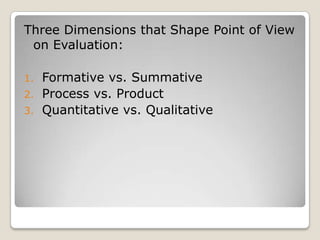

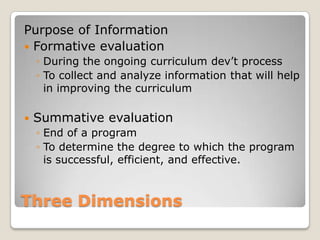

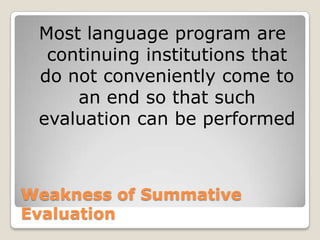

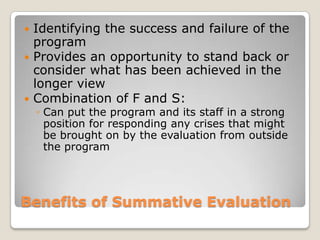

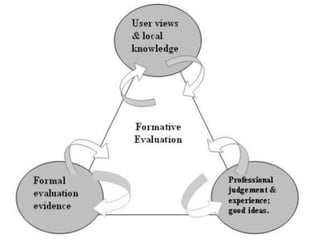

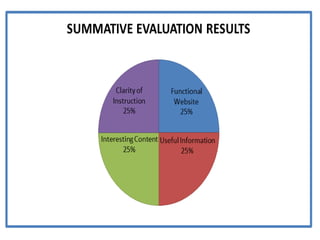

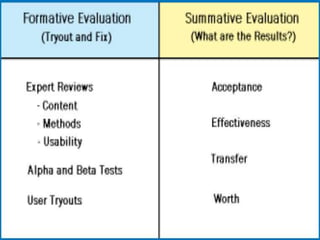

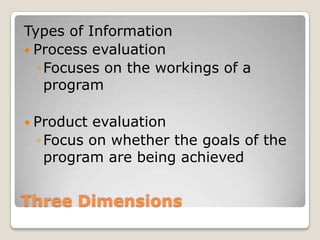

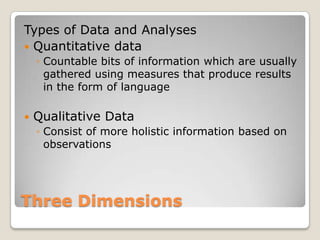

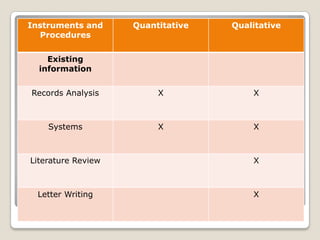

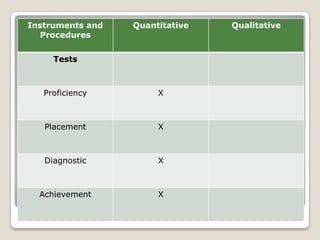

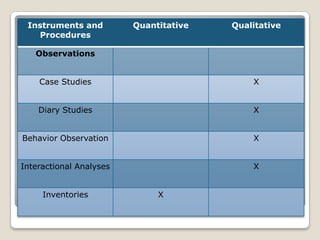

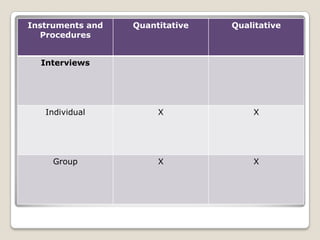

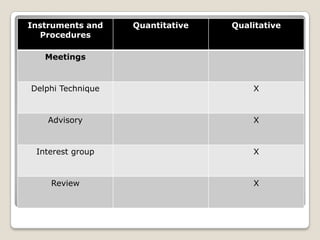

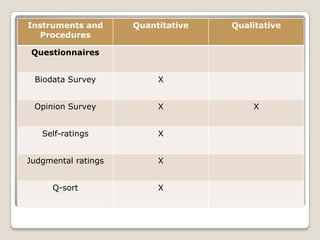

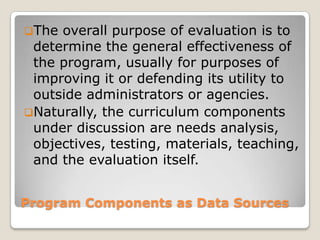

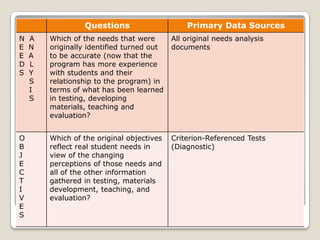

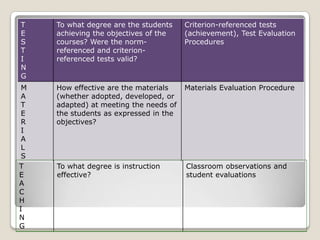

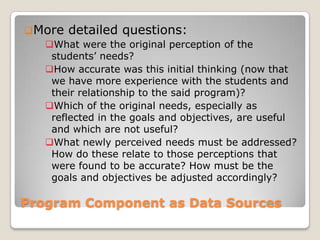

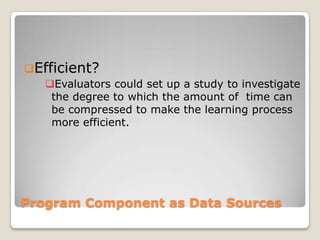

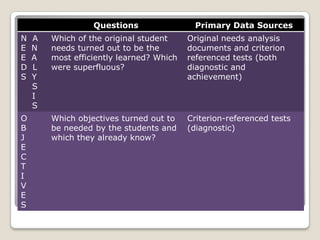

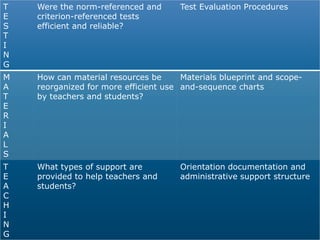

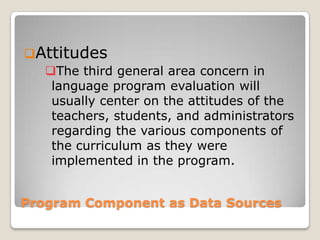

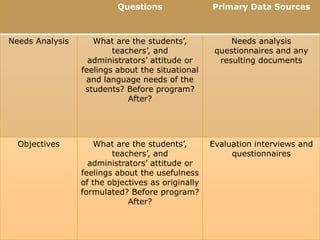

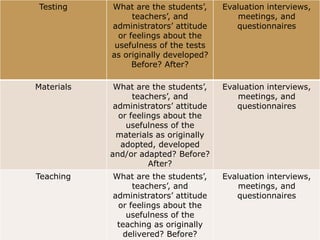

This document focuses on program evaluation, which it defines as the systematic collection and analysis of information to judge the effectiveness of educational programs and to improve curricula. It discusses several evaluation approaches, including product-oriented, process-oriented, and decision-facilitation models, and stresses the value of both quantitative and qualitative data. It also addresses the importance of involving the school community and drawing on feedback from multiple stakeholders to improve the educational process as a whole.