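1. Discretization divides the range of a continuous attribute into intervals. Replacing the raw values with interval labels reduces the number of distinct values and therefore the data size. Concept hierarchy formation recursively replaces low-level concepts, such as numeric values for age, with higher-level concepts, such as age groups (young, middle-aged, senior).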

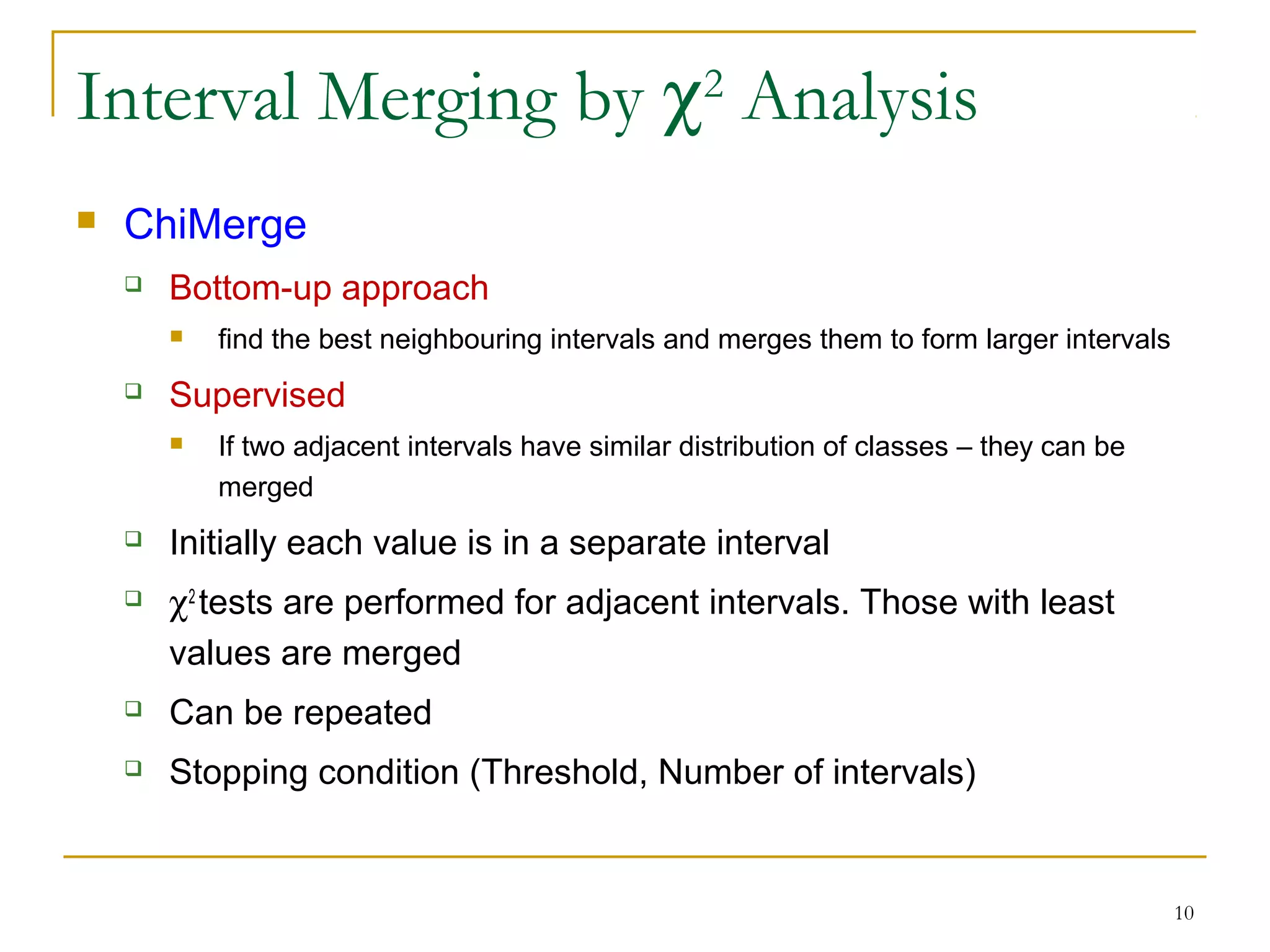

2. Common techniques for discretization and concept hierarchy generation include binning, histogram analysis, clustering analysis, and entropy-based discretization. These techniques can be applied recursively to generate hierarchies.

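3. Discretization and concept hierarchies reduce data size and make results easier to interpret, because patterns can be stated in terms of age groups rather than individual ages. They also make data mining and analysis easier, since algorithms work with fewer distinct values.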
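Entropy-based discretization, one of the techniques listed above, can be sketched as follows. Unlike binning, it is supervised: it uses a class label to choose cut points. The sketch below is a minimal illustration under these assumptions:

- The input is a list of `(value, class_label)` pairs.
- The function names `entropy`, `best_split` and `entropy_discretize` are hypothetical.
- Recursion stops at a fixed maximum depth or at a pure interval. The minimum-description-length stopping test often used in practice is not implemented.
- The `ages` data is made up.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def best_split(pairs):
    """Return (boundary, weighted_entropy) for the boundary that minimises the
    weighted class entropy of the two resulting intervals, or None if all
    values are identical. `pairs` is a list of (value, class_label) tuples."""
    pairs = sorted(pairs)
    n = len(pairs)
    best = None
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no cut point between two equal values
        boundary = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [lab for _, lab in pairs[:i]]
        right = [lab for _, lab in pairs[i:]]
        e = (len(left) * entropy(left) + len(right) * entropy(right)) / n
        if best is None or e < best[1]:
            best = (boundary, e)
    return best

def entropy_discretize(pairs, max_depth):
    """Recursively split on the best boundary and return the sorted cut points.
    Simplified stopping rule (an assumption): stop at max_depth or when the
    interval is already pure (entropy 0)."""
    if max_depth == 0 or entropy([lab for _, lab in pairs]) == 0:
        return []
    split = best_split(pairs)
    if split is None:
        return []
    t = split[0]
    left = [p for p in pairs if p[0] <= t]
    right = [p for p in pairs if p[0] > t]
    return (entropy_discretize(left, max_depth - 1) + [t]
            + entropy_discretize(right, max_depth - 1))

# Hypothetical (age, buys_product) records.
ages = [(23, "no"), (25, "no"), (30, "no"), (35, "yes"),
        (40, "yes"), (45, "yes"), (52, "no"), (60, "no")]
print(entropy_discretize(ages, 2))  # [32.5, 48.5]
```

The recursion is what turns single cuts into a hierarchy. The first cut at 32.5 separates the younger group, and the second level refines the older group at 48.5. Raising `max_depth` would add further levels wherever an interval is still mixed.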

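![Slide 6: Techniques](https://image.slidesharecdn.com/1-150506061422-conversion-gate01/75/1-8-discretization-6-2048.jpg)

Techniques:

- Binning
  - Distribute the values into bins.
  - Replace each value by its bin mean or median.
  - Recursive application leads to concept hierarchies.
  - Unsupervised technique.
- Histogram analysis
  - Partition the data distribution into intervals.
  - Equiwidth: intervals of equal width, e.g. (0, 100], (100, 200], …
  - Equidepth: each interval holds roughly the same number of values.
  - Applied recursively, down to a minimum interval size.
  - Unsupervised technique.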
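The binning and histogram-partitioning rules from the slide can be sketched in Python. This is a minimal illustration, not the slide author's code. It rests on these assumptions:

- The function names are hypothetical, and `prices` is made-up sample data.
- Equal-width intervals here are left-closed, whereas the slide's intervals are right-closed.
- "Minimum interval size" is read as a minimum value range below which a bin is not split further.

```python
from statistics import mean, median

def equal_width_bins(values, k):
    """Equiwidth binning: split [min, max] into k intervals of equal width
    and return the values that fall into each interval."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / k
    bins = [[] for _ in range(k)]
    for v in values:
        # Left-closed intervals [lo + i*width, lo + (i+1)*width); the maximum
        # would map to index k, so it is clamped into the last bin.
        idx = int((v - lo) / width) if width > 0 else 0
        bins[min(idx, k - 1)].append(v)
    return bins

def equal_depth_bins(values, k):
    """Equidepth binning: sort the values and cut them into k bins that hold
    (as nearly as possible) the same number of values."""
    ordered = sorted(values)
    n = len(ordered)
    return [ordered[i * n // k:(i + 1) * n // k] for i in range(k)]

def smooth(bins, stat=mean):
    """Replace every value in a bin by the bin's mean (or median).
    Empty bins are dropped."""
    return [[stat(b)] * len(b) for b in bins if b]

def build_hierarchy(values, k, min_width):
    """Apply equidepth binning recursively: a bin is split again into k sub-bins
    until its value range is narrower than min_width. Returns a nested dict
    {(low, high): children}; a leaf has children == {}."""
    if k < 2 or min_width <= 0:
        raise ValueError("need k >= 2 and min_width > 0 so the recursion terminates")
    node = {}
    for b in equal_depth_bins(values, k):
        if not b:
            continue
        low, high = b[0], b[-1]  # bins are sorted, so these are min and max
        node[(low, high)] = build_hierarchy(b, k, min_width) if high - low >= min_width else {}
    return node

prices = [4, 8, 15, 21, 21, 24, 25, 28, 34]  # hypothetical sample data
print(equal_width_bins(prices, 3))  # [[4, 8], [15, 21, 21], [24, 25, 28, 34]]
print(equal_depth_bins(prices, 3))  # [[4, 8, 15], [21, 21, 24], [25, 28, 34]]
print(smooth(equal_depth_bins(prices, 3)))          # [[9, 9, 9], [22, 22, 22], [29, 29, 29]]
print(smooth(equal_depth_bins(prices, 3), median))  # [[8, 8, 8], [21, 21, 21], [28, 28, 28]]
print(build_hierarchy(prices, 3, 10))
# {(4, 15): {(4, 4): {}, (8, 8): {}, (15, 15): {}}, (21, 24): {}, (25, 34): {}}
```

The two binning rules produce different intervals from the same data:

- Equal-width bins can end up very unevenly filled when the data is skewed.
- Equal-depth bins keep the counts balanced, at the cost of intervals with irregular widths.

In `build_hierarchy`, only the first bin spans at least 10 units, so only that bin gets a second level.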