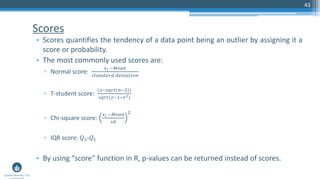

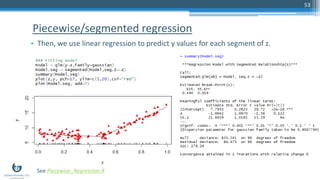

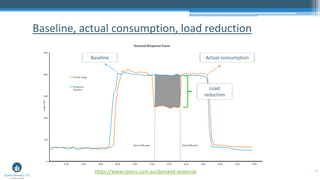

The document gives an overview of anomaly detection in data analytics: definitions, classifications, applications, and detection methodologies, namely graphical approaches, statistical testing, and machine learning. It distinguishes three types of anomaly (point, contextual, and collective), illustrates them with applications such as fraud detection and medical diagnostics, and shows practical implementations in R. It also discusses the tools and libraries available for carrying out these techniques.
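As one illustration of the statistical approach to point anomalies mentioned above, here is a minimal sketch of the interquartile-range rule (Tukey's fences). It is not taken from the source document, whose own examples are in R; it is written in Python with only the standard library. The function name `iqr_outliers`, the multiplier `k = 1.5`, and the sample readings are all assumptions made for illustration.

```python
from statistics import quantiles


def iqr_outliers(values, k=1.5):
    """Return the values lying outside Tukey's fences.

    Hypothetical helper for illustration: a value is flagged as a point
    anomaly if it is below Q1 - k*IQR or above Q3 + k*IQR.
    """
    # quantiles(..., n=4) returns the three quartile cut points Q1, Q2, Q3.
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    # Keep the original order of the flagged observations.
    return [v for v in values if v < lower or v > upper]


# Made-up sensor readings with one obvious spike.
readings = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 25.0, 10.1]
print(iqr_outliers(readings))  # [25.0]
```

This rule only addresses point anomalies. Contextual and collective anomalies depend on surrounding observations (for example, time of day or a run of consecutive values), so a per-value threshold like this would generally not be enough for them.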