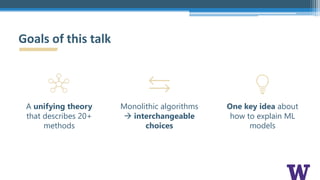

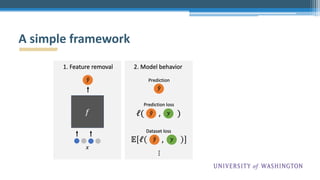

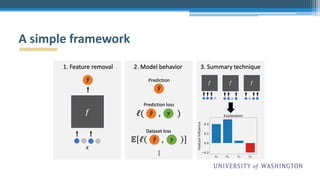

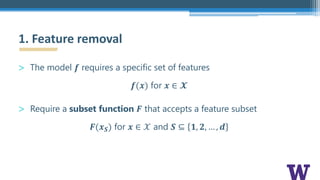

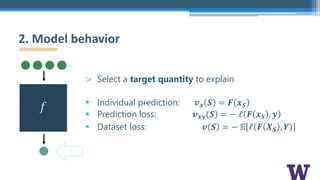

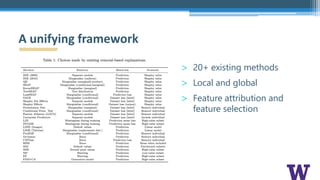

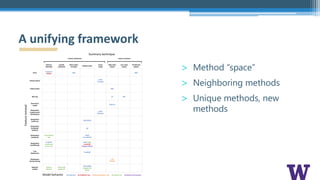

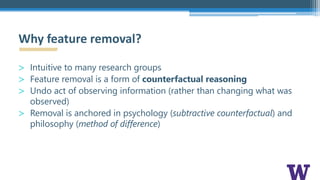

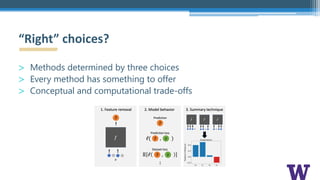

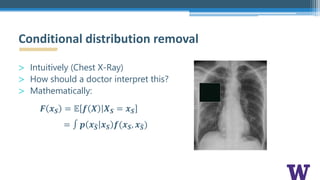

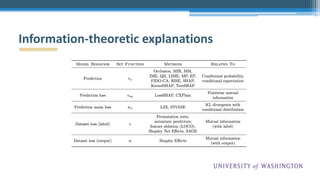

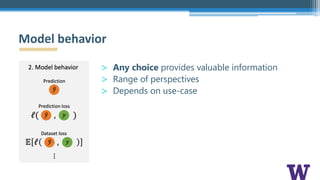

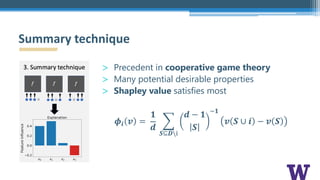

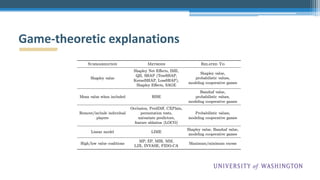

This document presents a workshop talk on a unified framework for model explanation, centered on the concept of feature removal in Explainable AI (XAI). It outlines various methods and open challenges in the field and emphasizes the need for a coherent theory to guide practitioners and researchers in interpreting machine learning models. The talk concludes by stressing the importance of counterfactual reasoning and the potential trade-offs involved in choosing an appropriate explanation method.