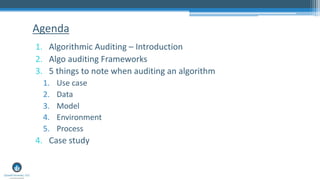

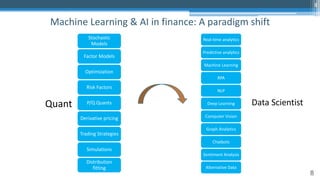

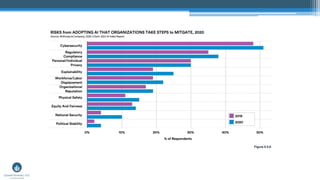

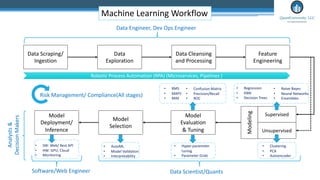

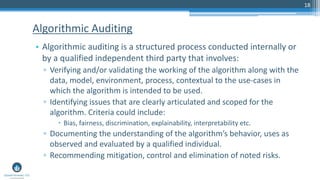

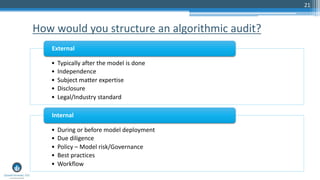

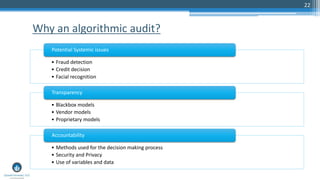

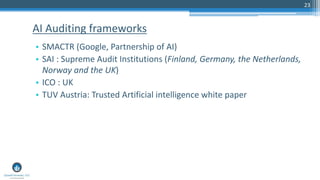

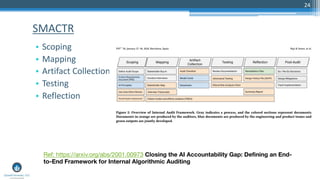

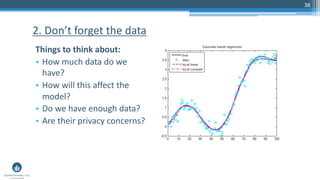

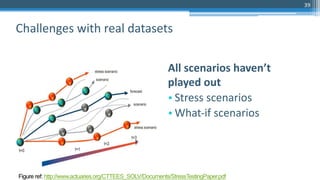

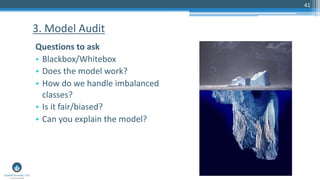

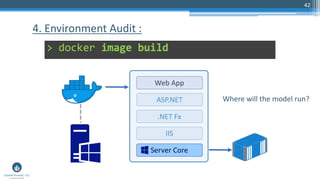

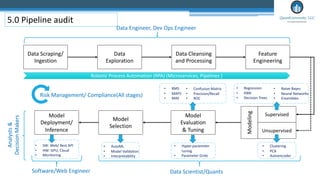

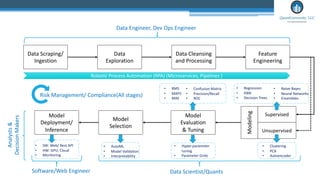

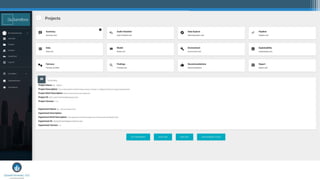

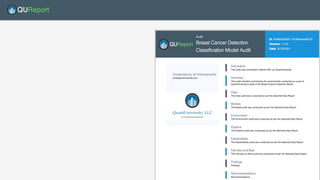

The document presents an overview of algorithmic auditing in finance, emphasizing its importance for ensuring compliance, transparency, and fairness in machine learning applications. It discusses frameworks and processes for conducting audits, the challenges of auditing AI algorithms, and the expertise auditors need. It also highlights the role of regulatory bodies and the need for continuous skill development in the auditing community to keep pace with advances in machine learning.