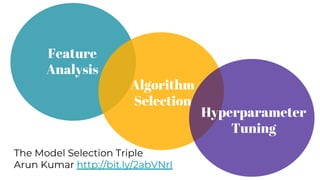

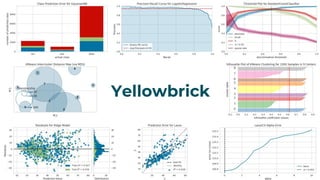

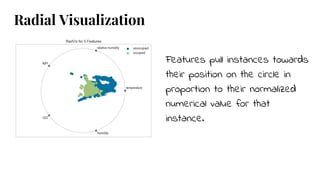

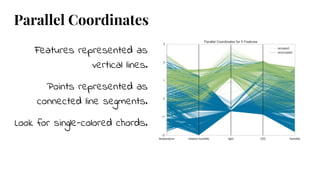

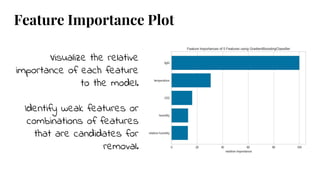

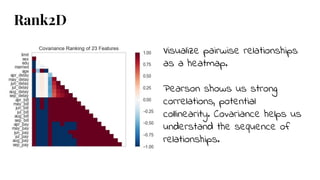

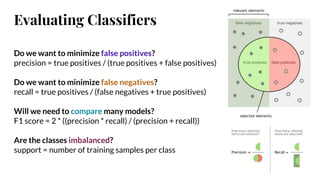

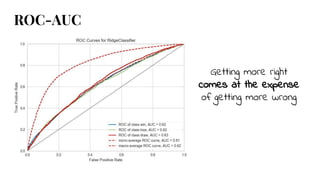

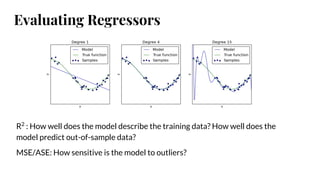

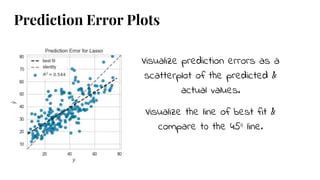

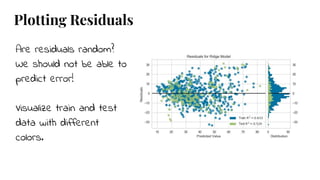

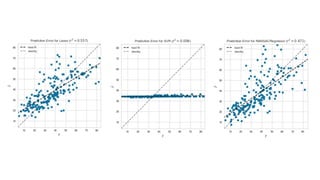

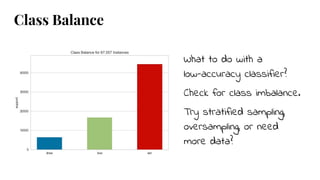

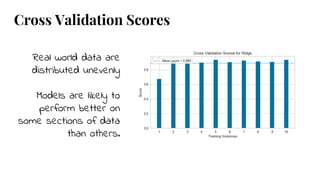

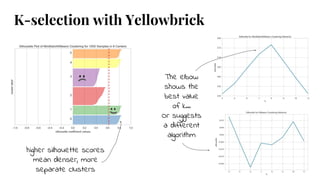

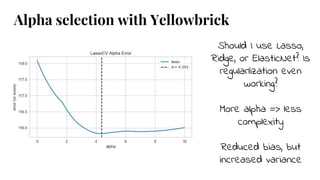

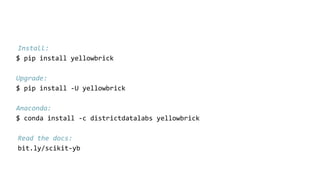

The document discusses model selection in machine learning, emphasizing feature analysis, algorithm selection, and hyperparameter tuning. For evaluation, it covers precision, recall, and F1 score for classifiers, and R² and mean squared error (MSE) for regressors. It also introduces Yellowbrick, a library for visual diagnostics in data science, which provides tools for a range of analytical tasks.
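
To make the evaluation metrics named above concrete, here is a minimal sketch that computes them by hand in plain Python. The function names and the toy labels are assumptions made for illustration and do not come from the slides. In practice, `sklearn.metrics` offers equivalent functions (`precision_score`, `recall_score`, `f1_score`, `r2_score`, `mean_squared_error`).

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for one positive class (binary case)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    # Fall back to 0.0 when a denominator is zero (a convention, not a rule).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when the targets are constant")
    return 1.0 - ss_res / ss_tot


# Hypothetical toy data, chosen only to exercise the functions.
clf_true = [1, 0, 1, 1, 0, 1]
clf_pred = [1, 0, 0, 1, 1, 1]
precision, recall, f1 = precision_recall_f1(clf_true, clf_pred)

reg_true = [3.0, 5.0, 7.0, 9.0]
reg_pred = [2.5, 5.0, 7.5, 9.0]
mse = mean_squared_error(reg_true, reg_pred)
r2 = r2_score(reg_true, reg_pred)

print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
print(f"mse={mse:.3f} r2={r2:.3f}")
```

Precision and recall pull in different directions (fewer false positives versus fewer false negatives), and F1 is their harmonic mean. For regressors, MSE reports error in squared target units, while R² reports the share of target variance the model explains.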