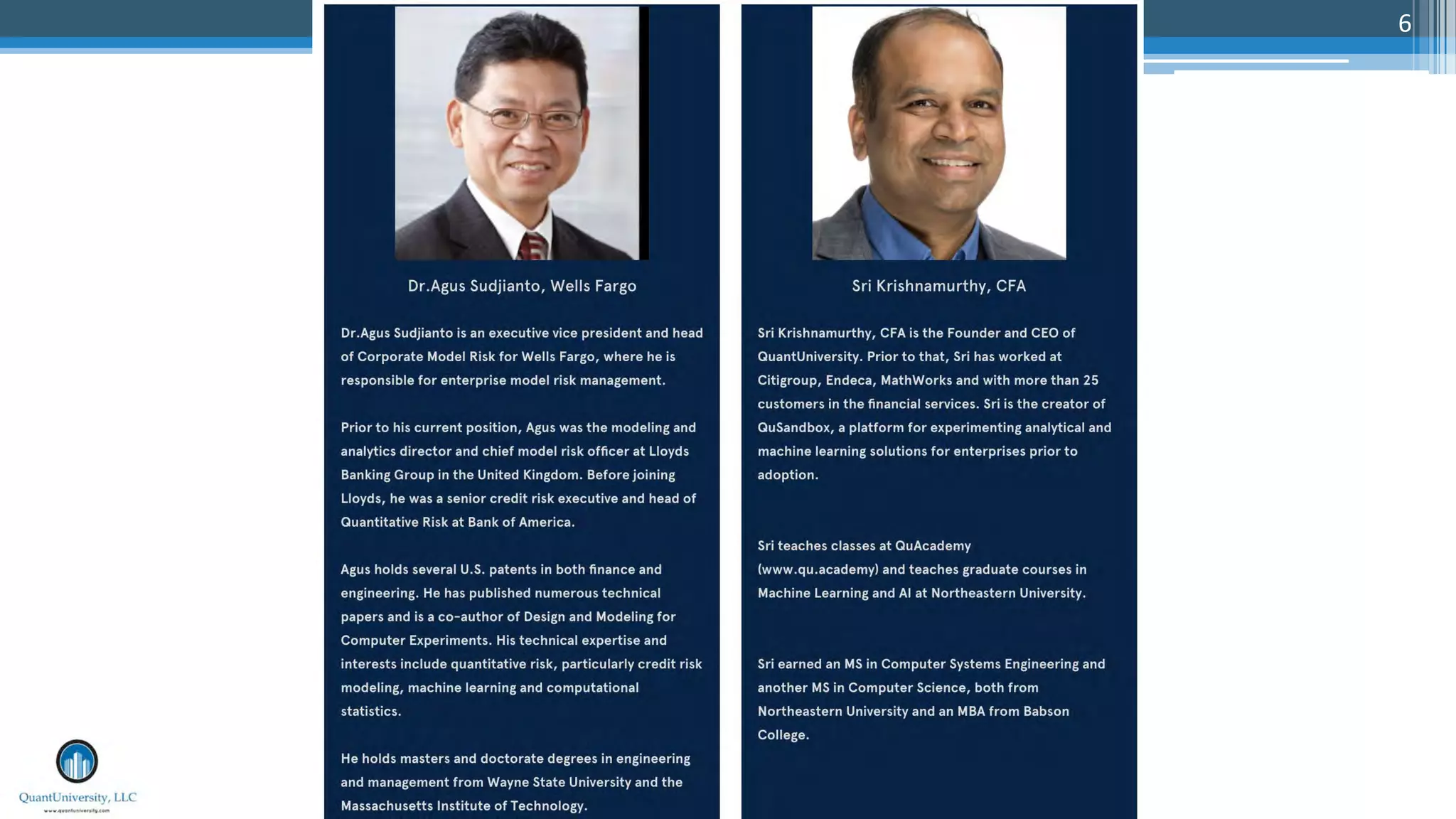

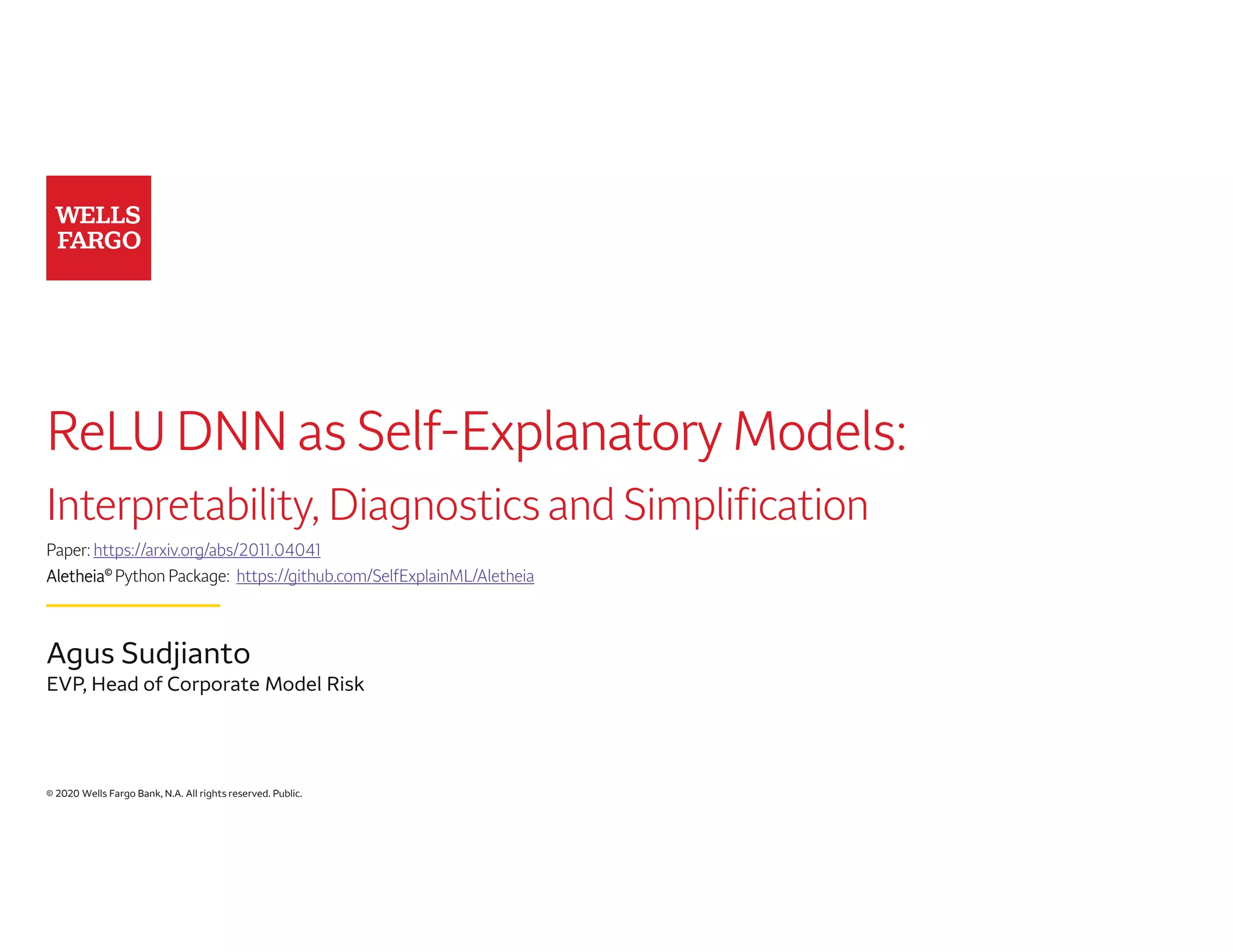

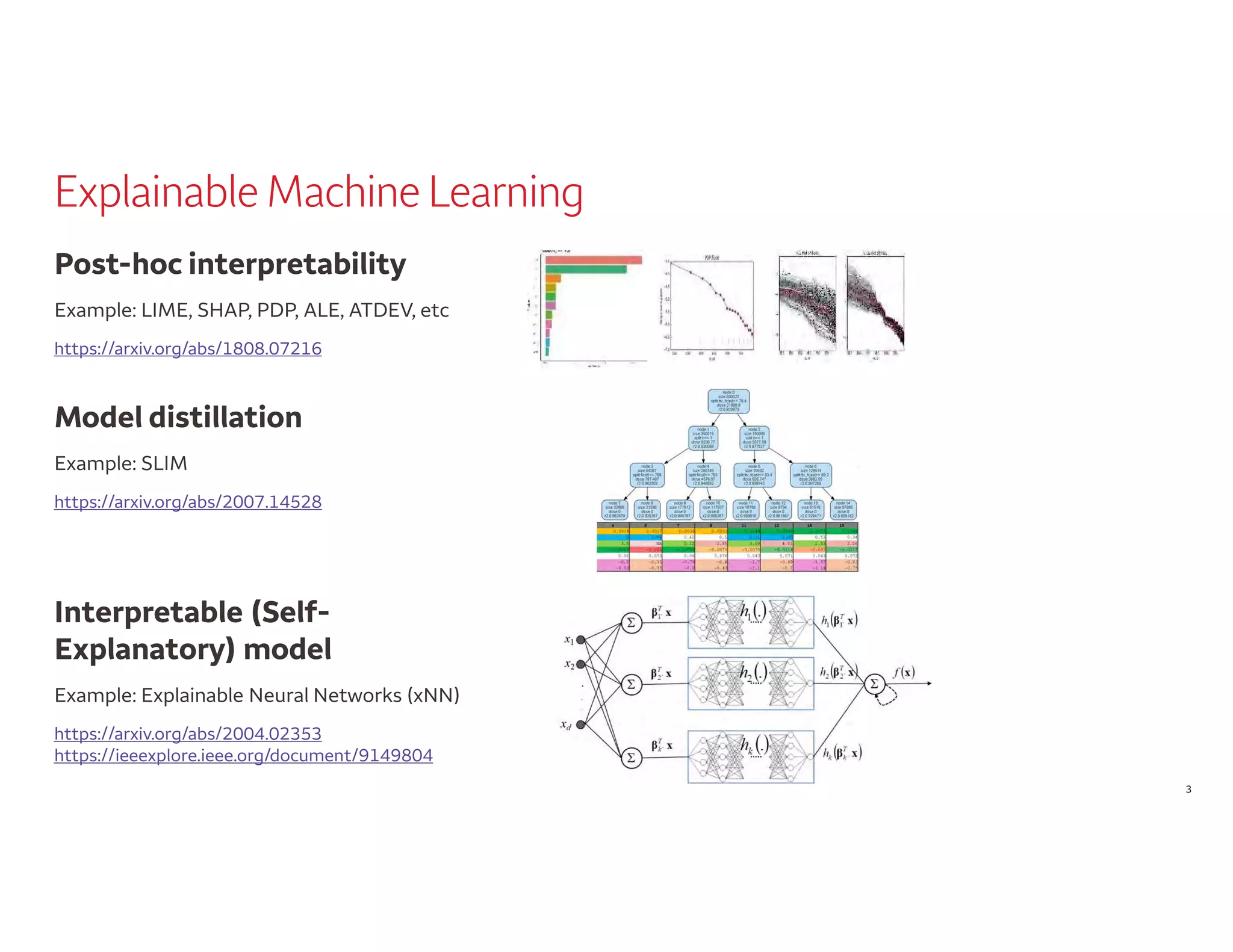

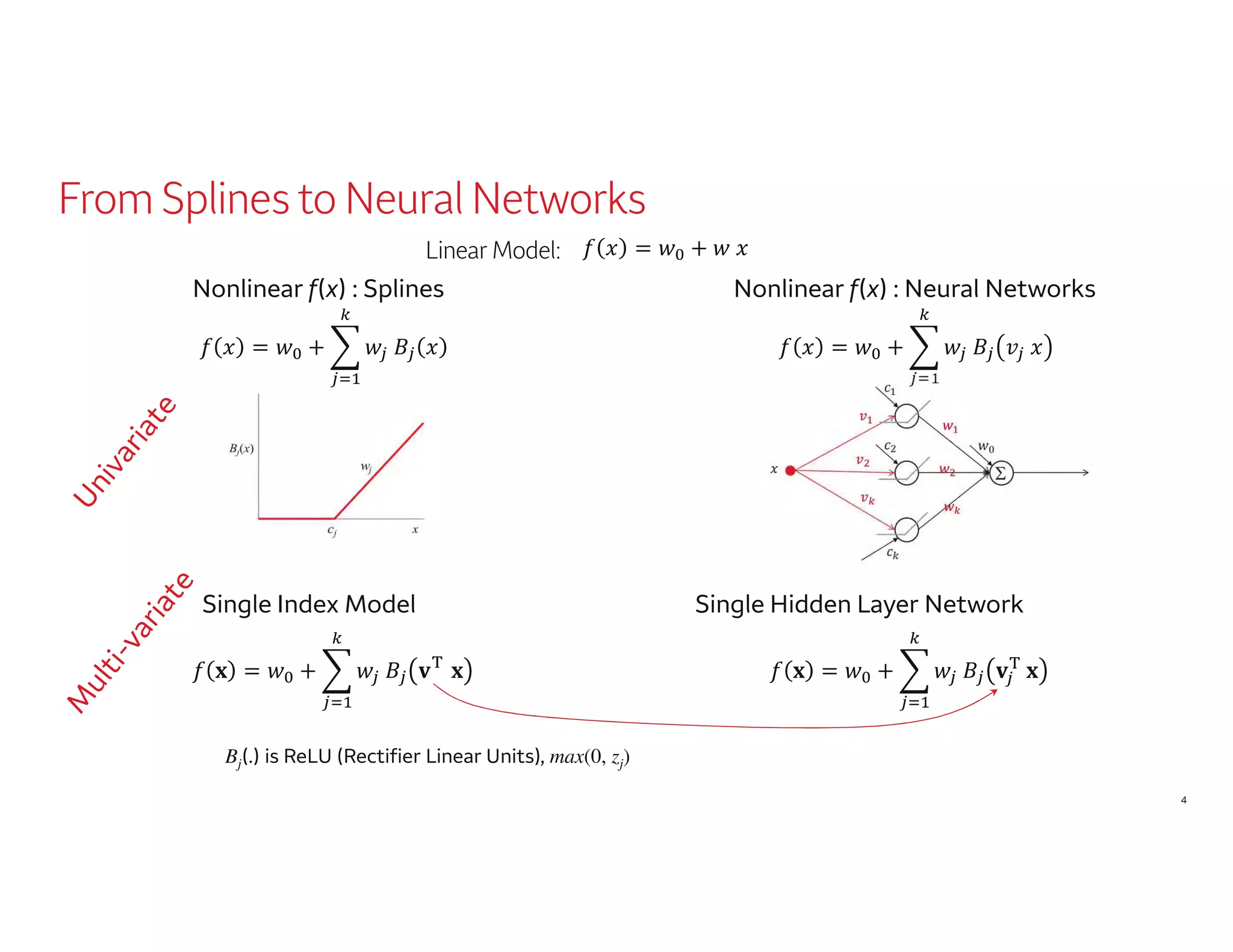

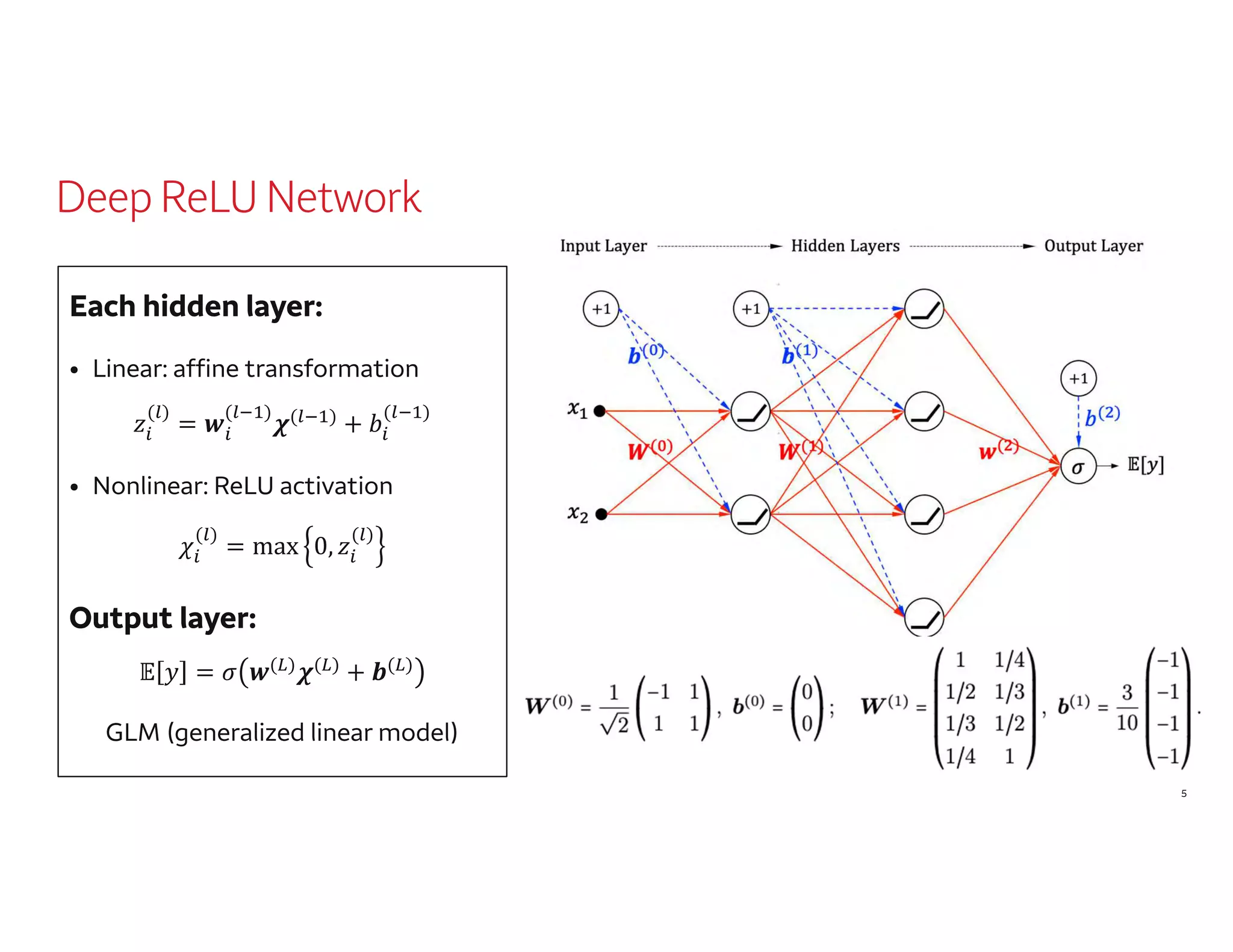

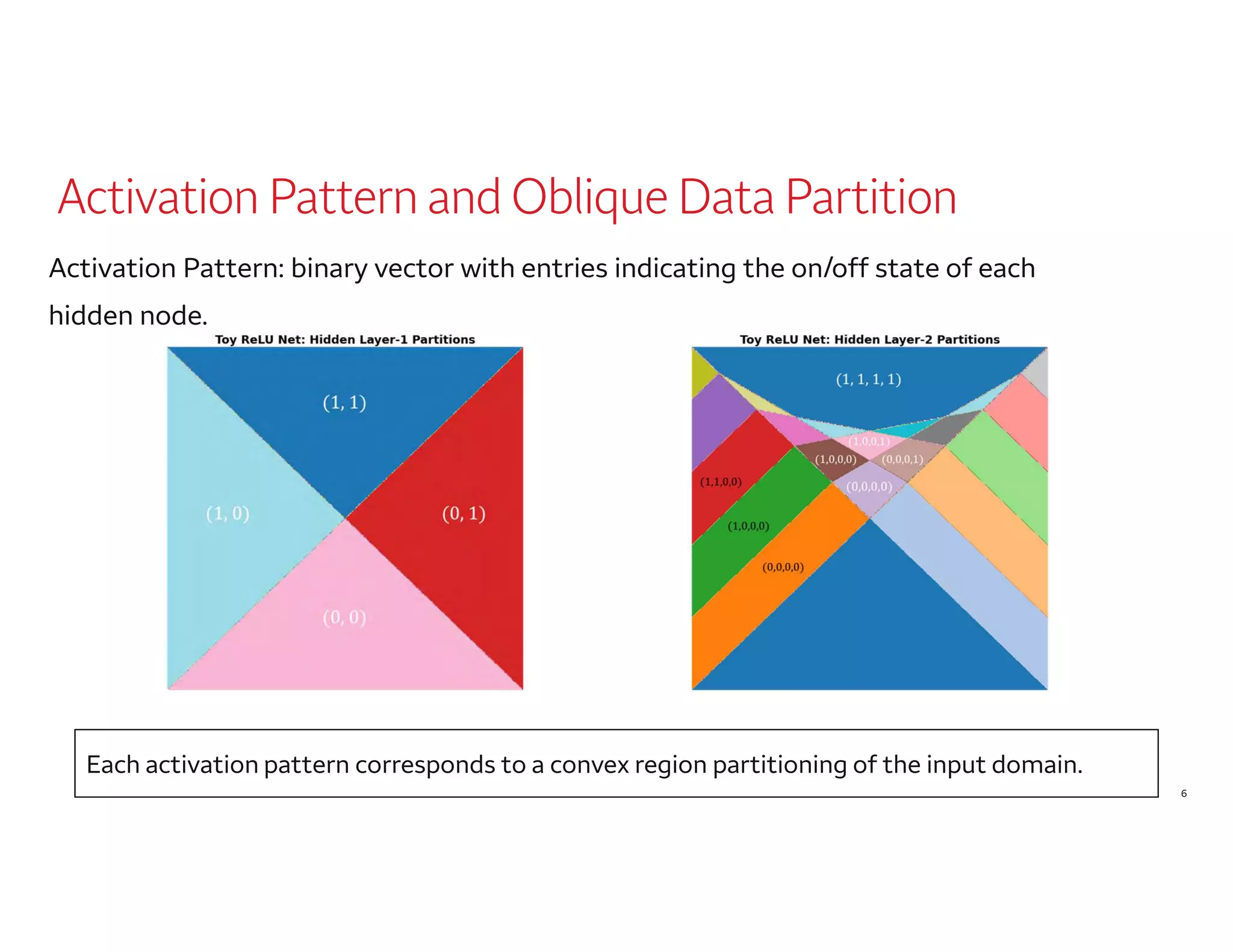

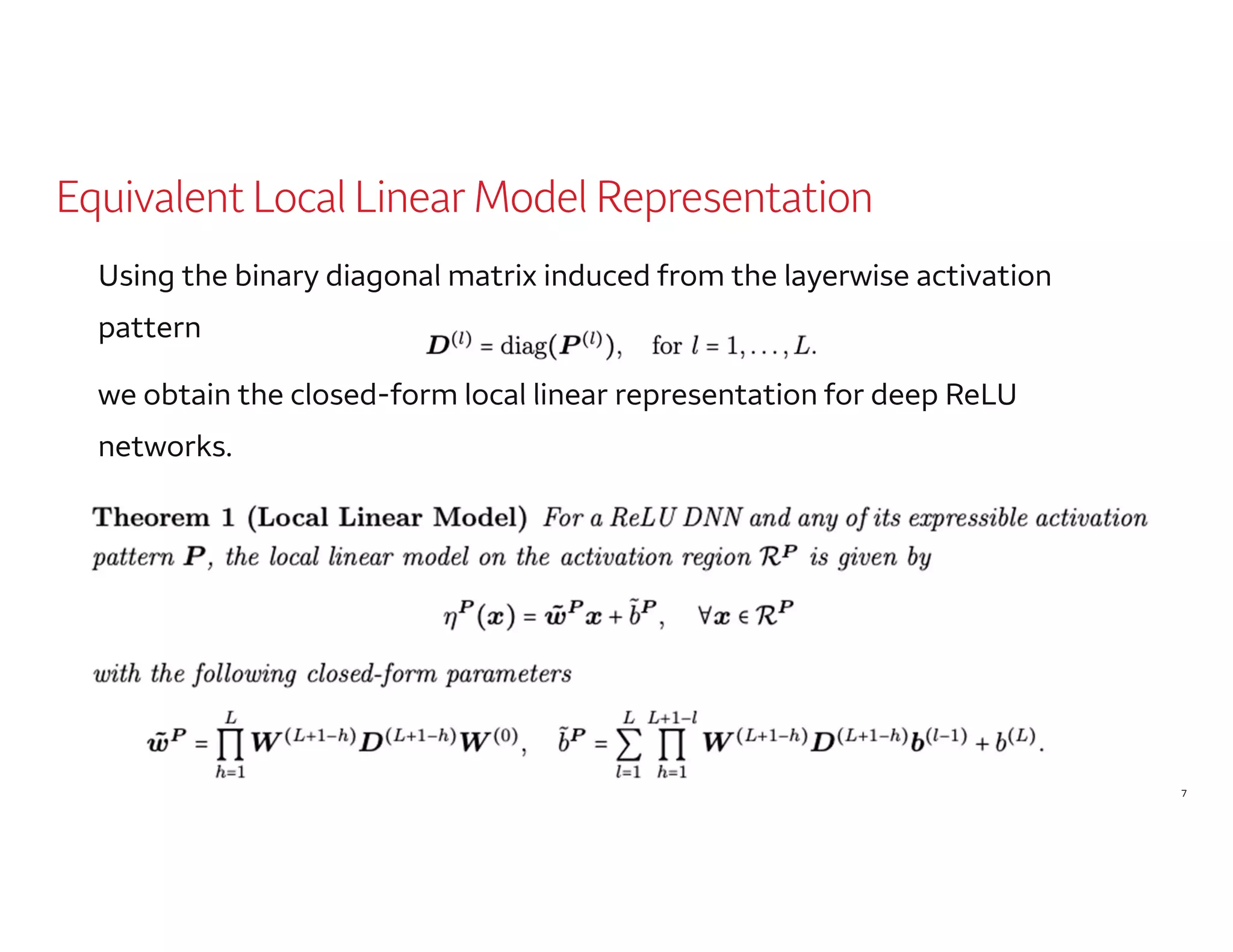

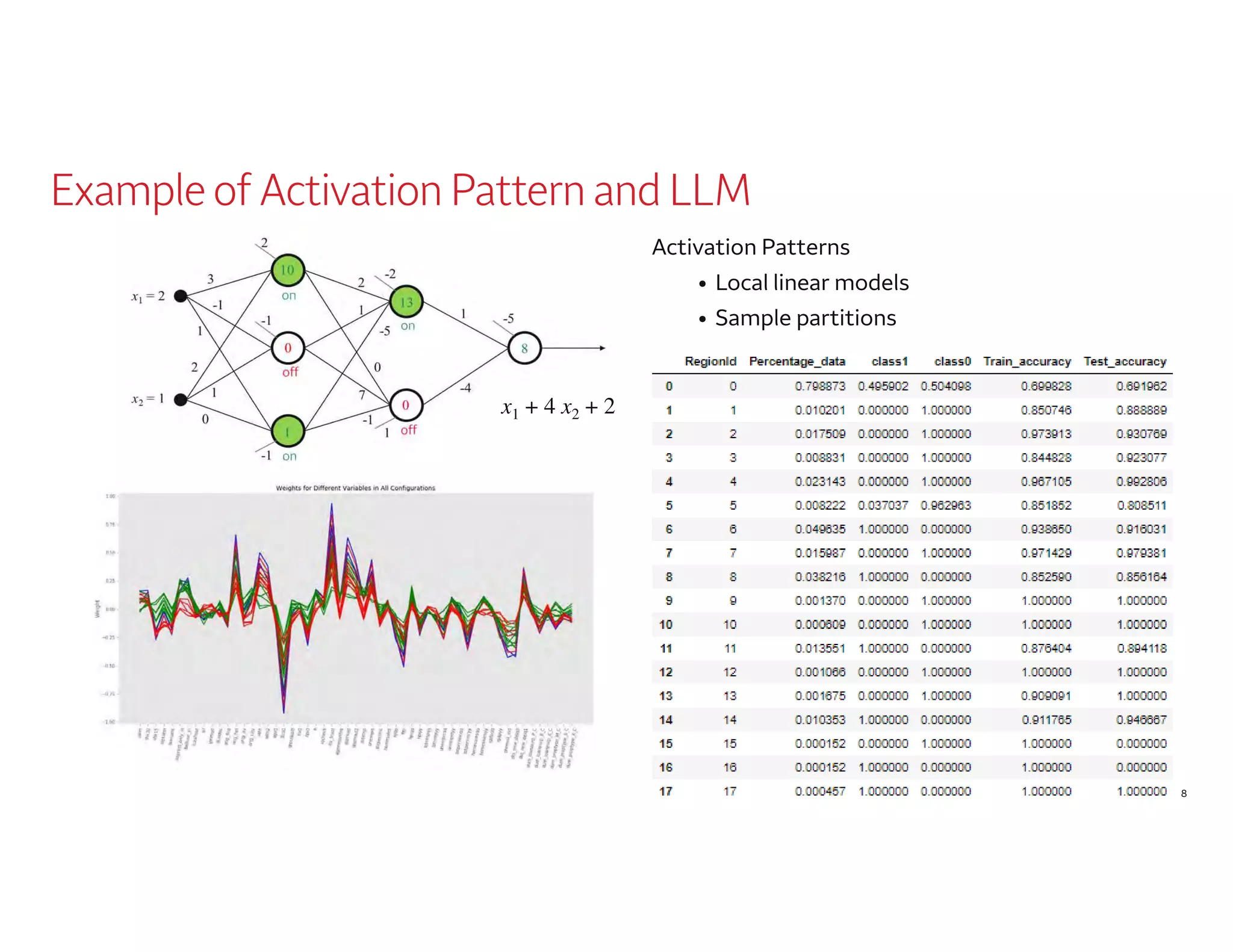

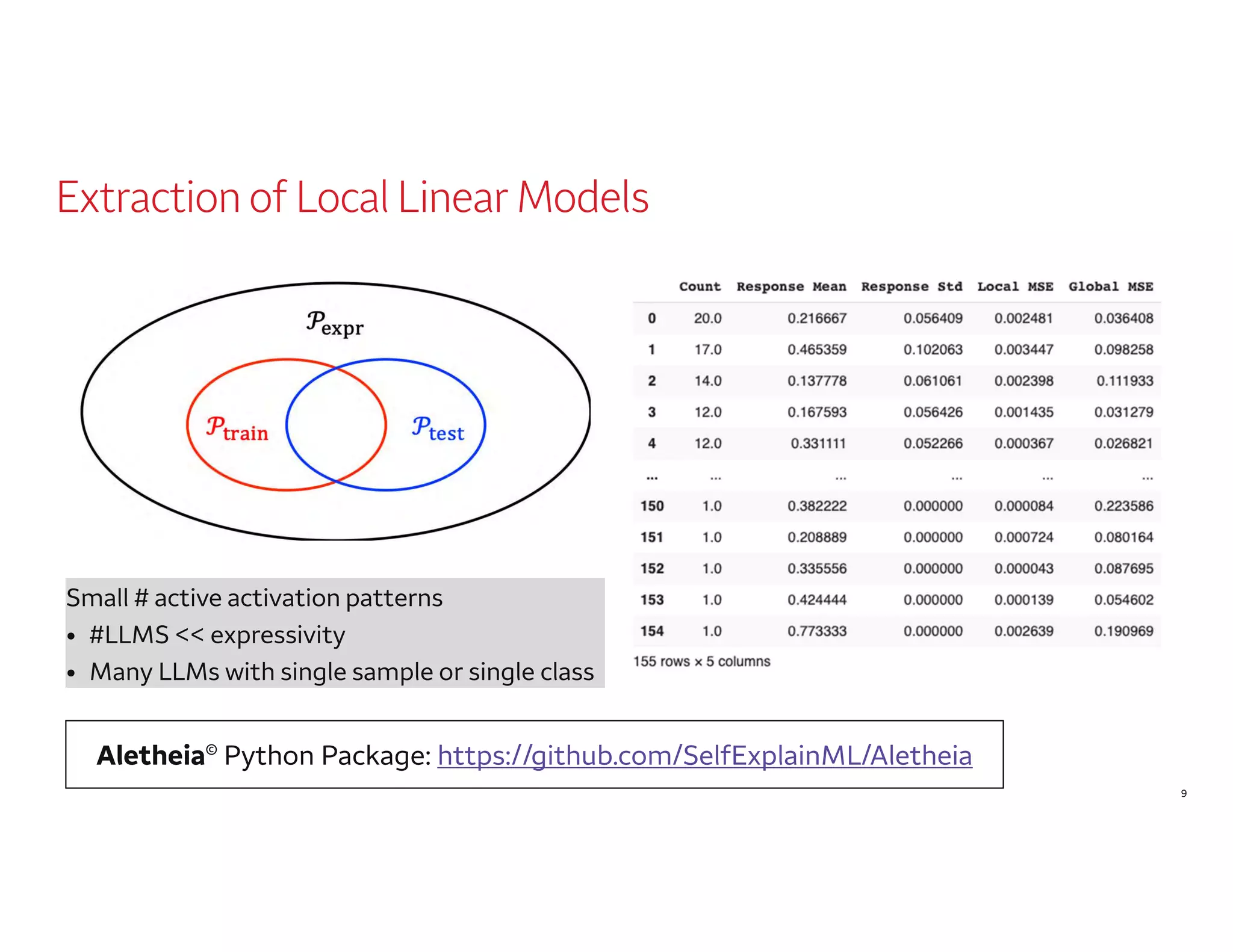

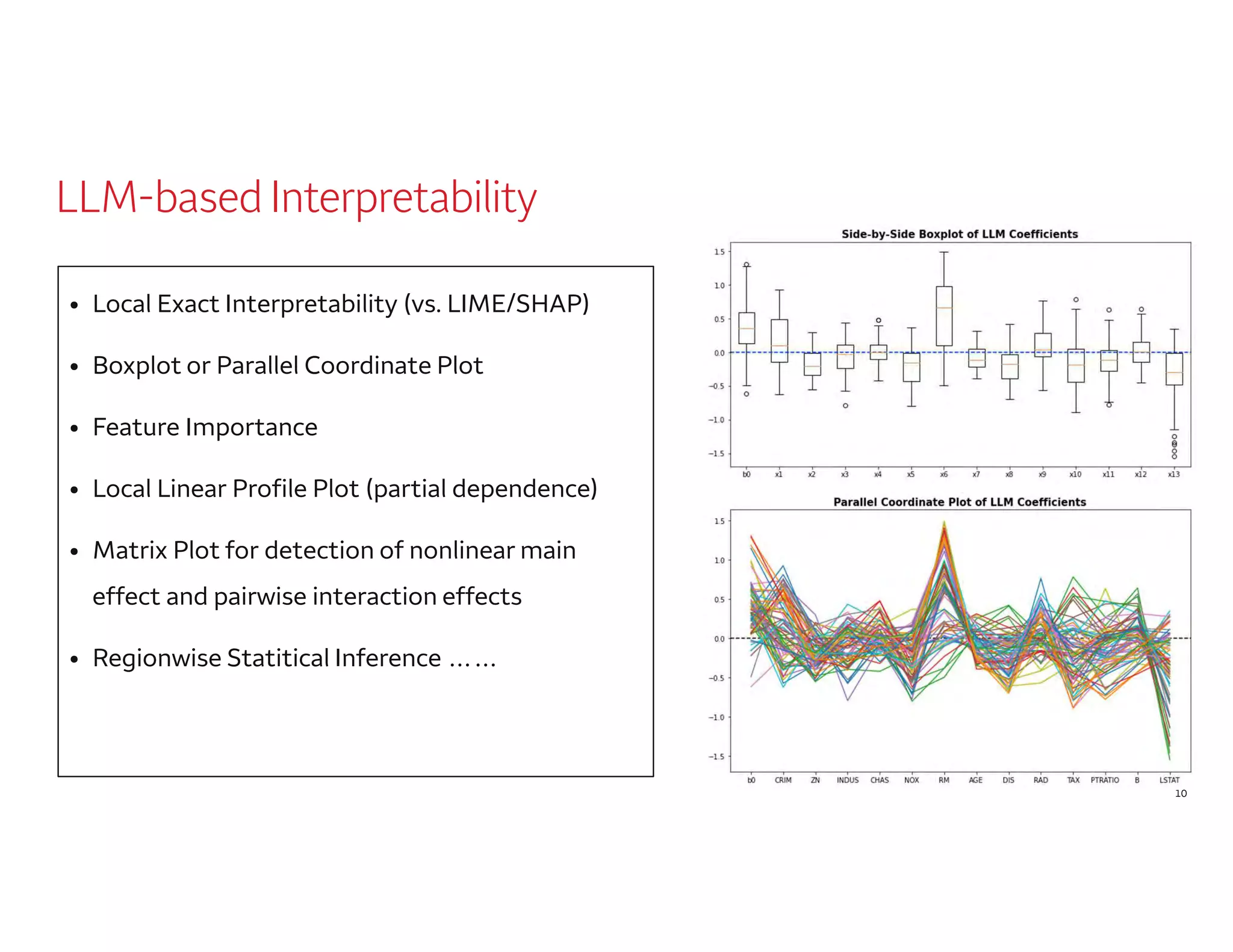

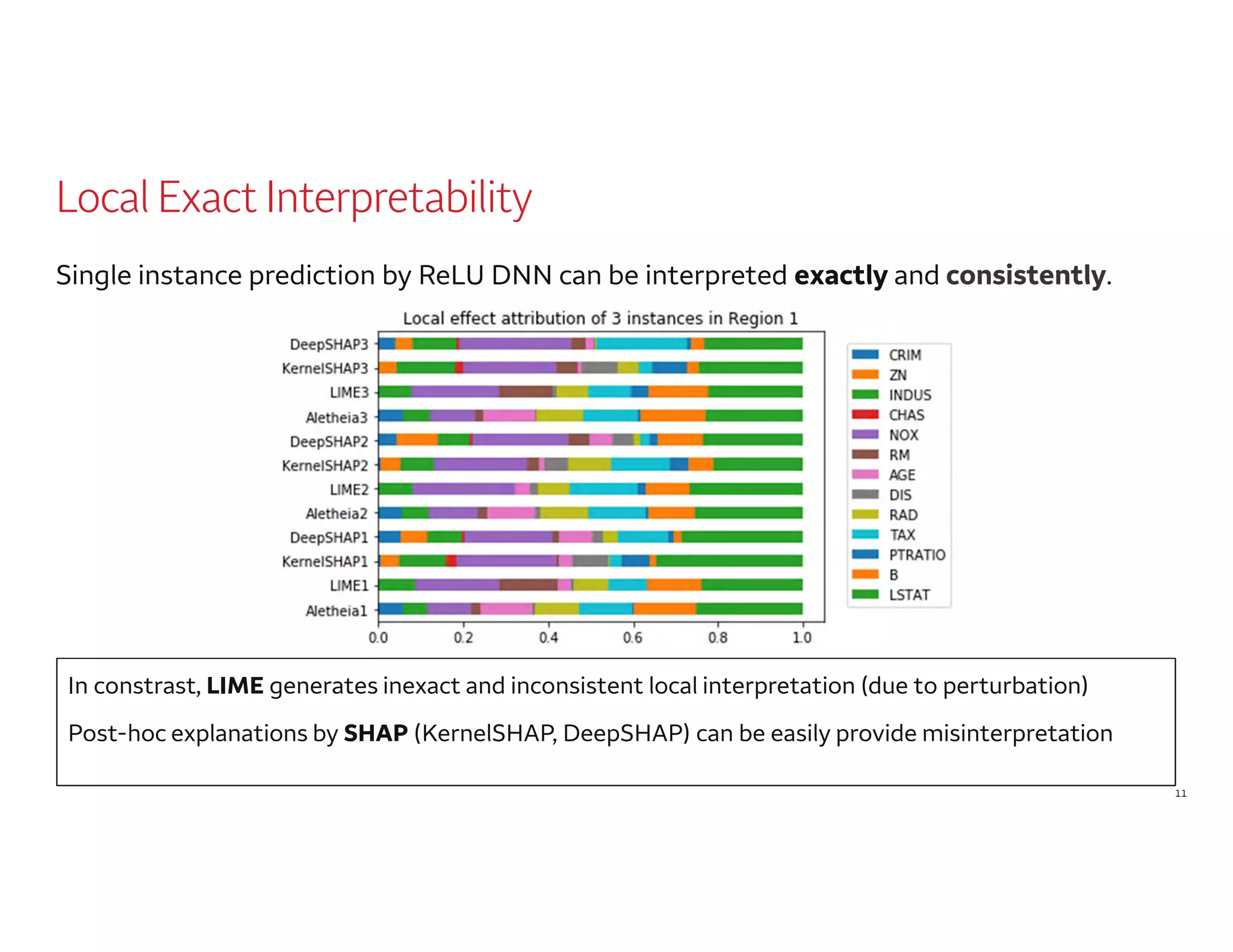

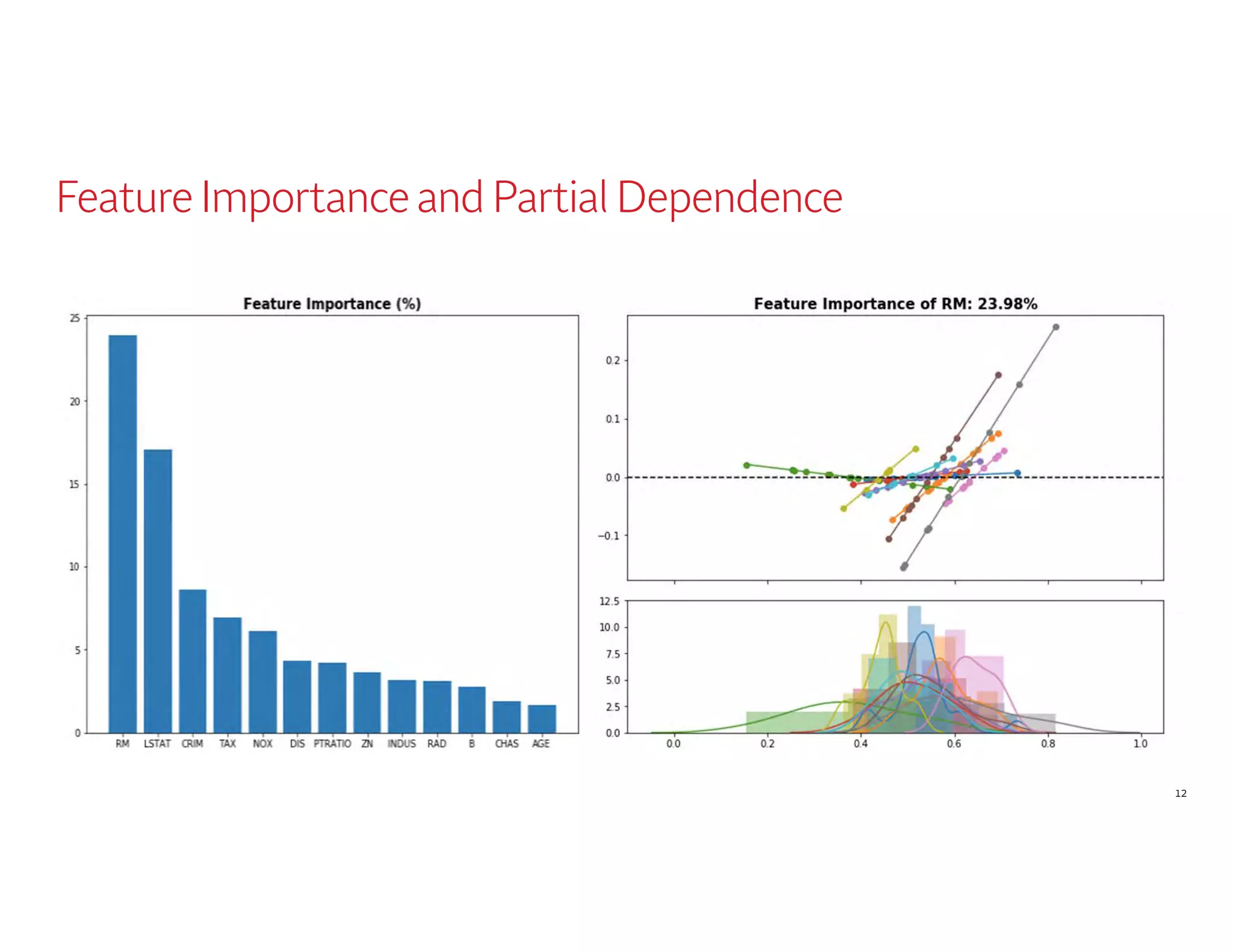

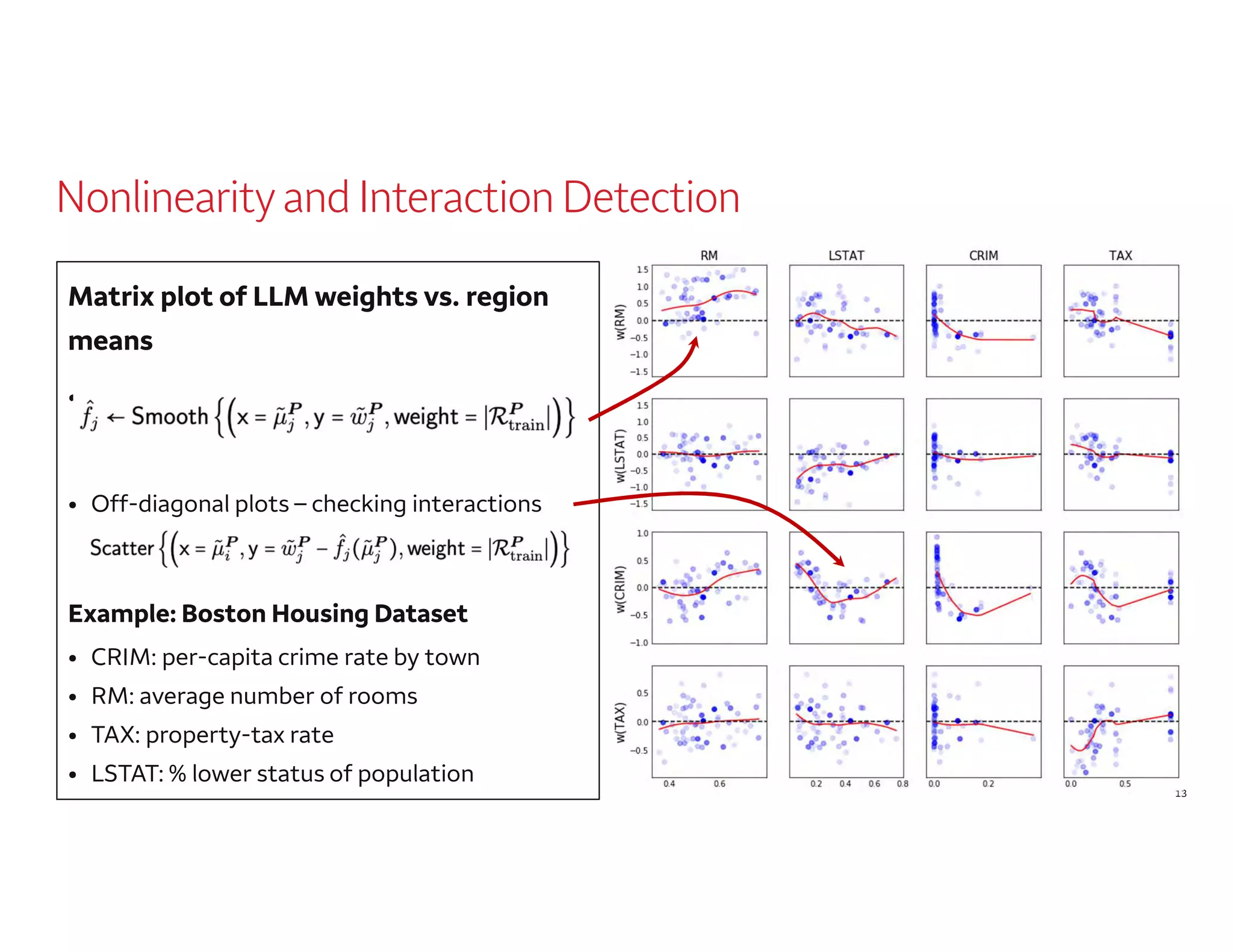

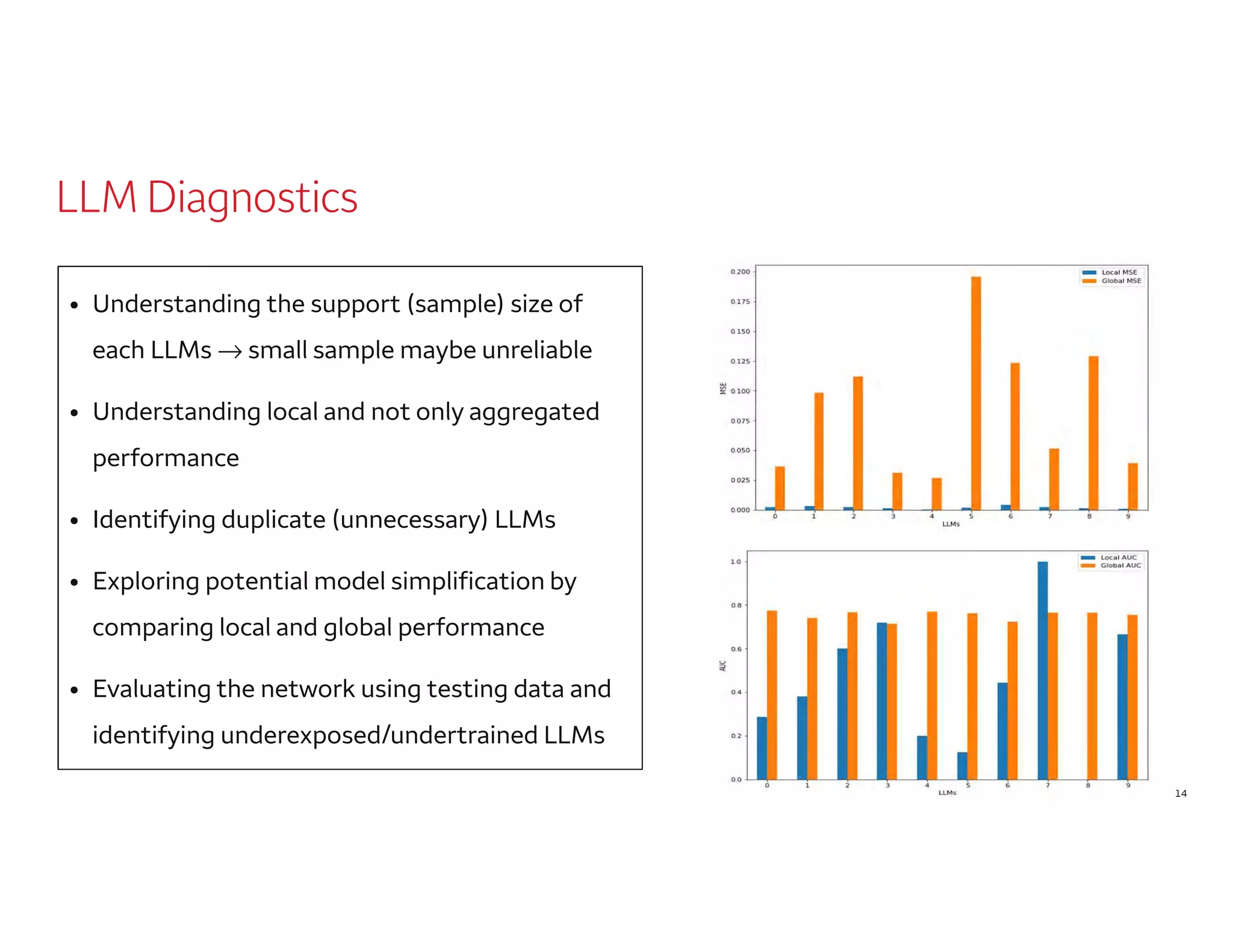

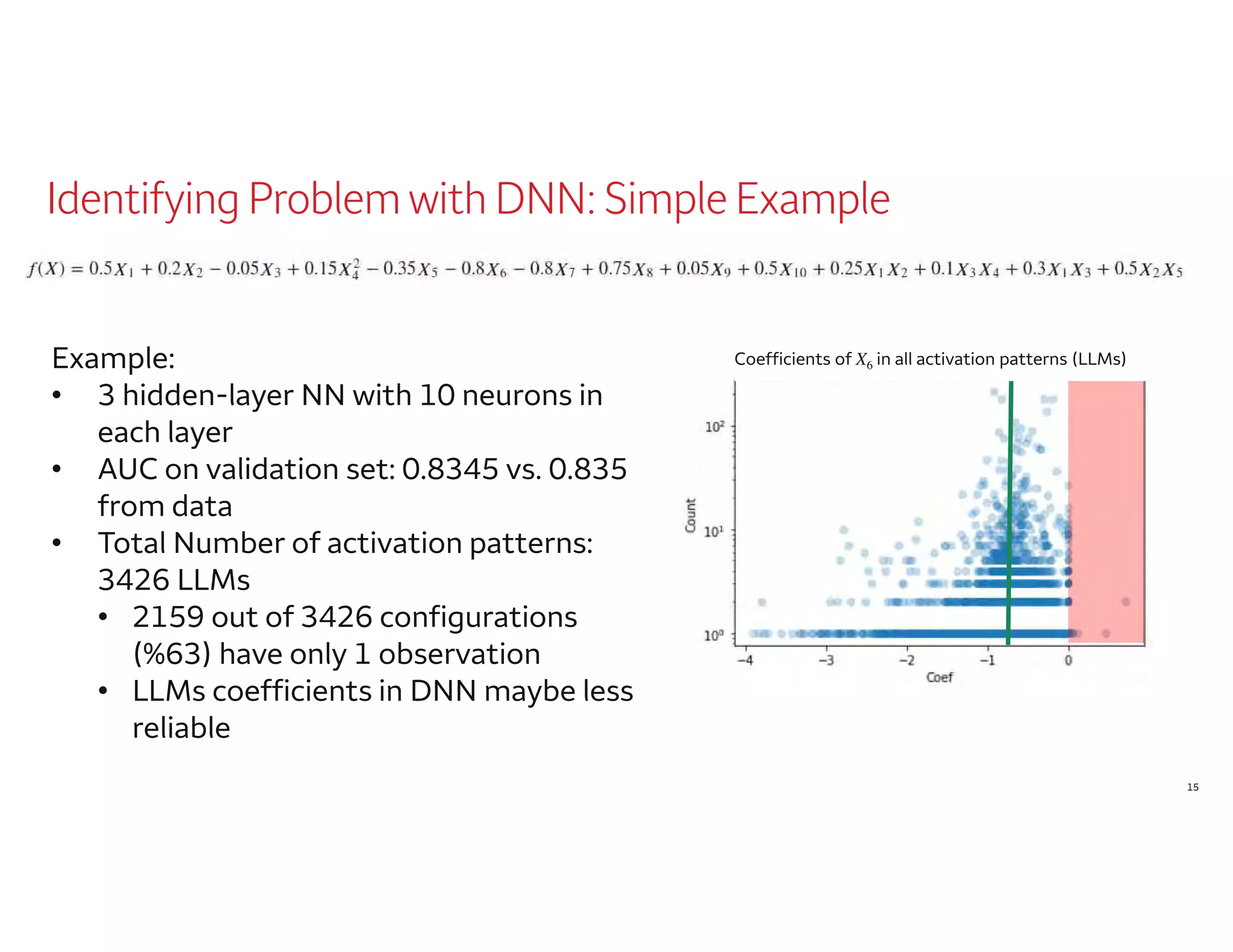

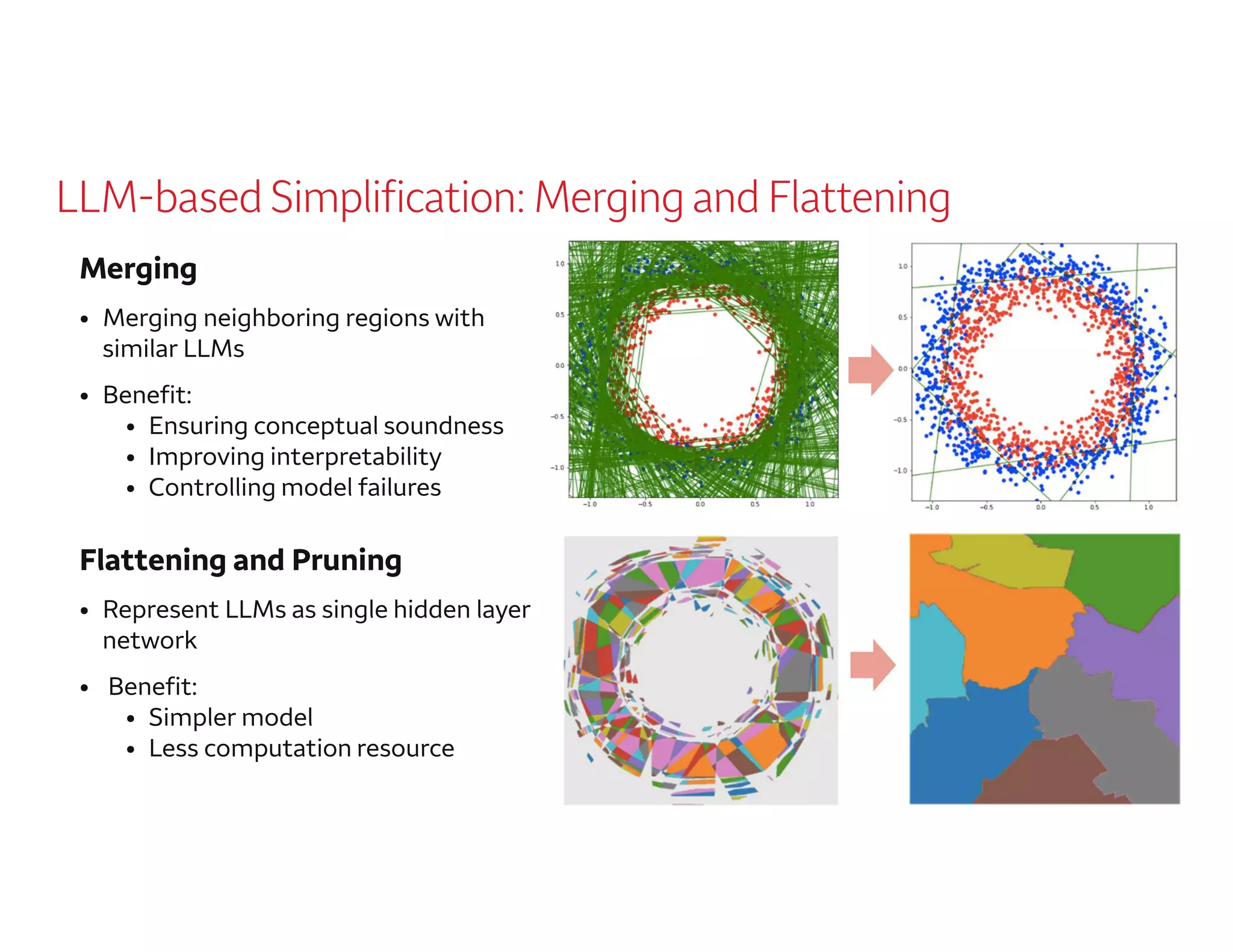

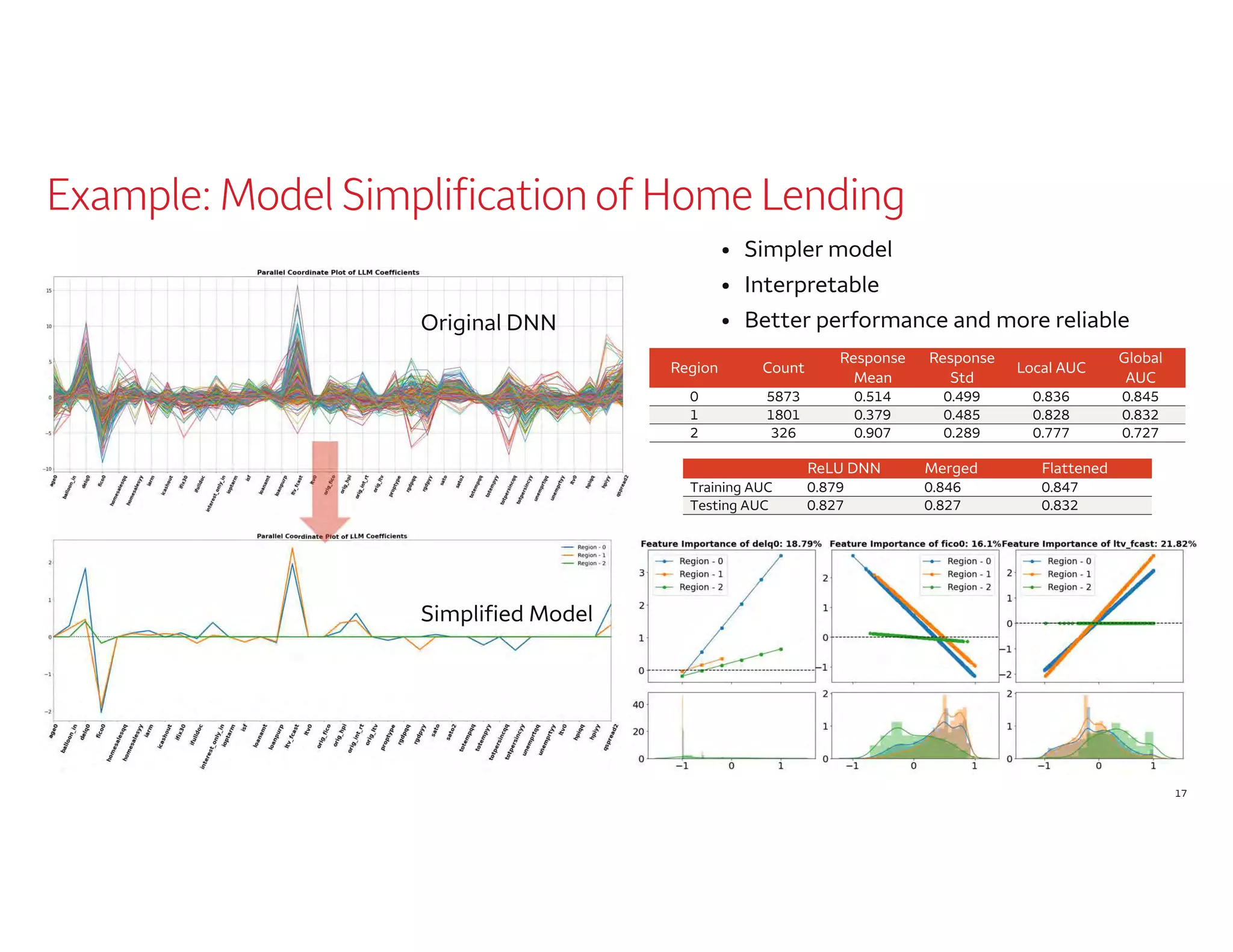

The document outlines a presentation by Dr. Agus Sudjianto on machine learning and model risk, focusing on self-explanatory models and their interpretability. It details his background in model risk management at Wells Fargo and his previous roles at Lloyds Banking Group and Bank of America. The presentation emphasizes techniques such as local linear models for interpretability and diagnostics in deep neural networks, and it offers insights into model simplification methods.