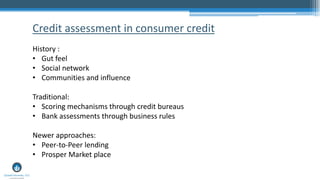

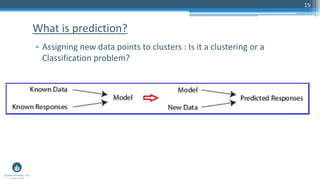

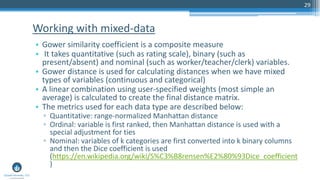

This document summarizes a presentation given by Sri Krishnamurthy on machine learning applications for credit risk. The presentation covered credit scoring models, traditional credit assessment approaches, peer-to-peer lending, and the use of clustering algorithms and dimensionality reduction techniques to analyze Lending Club loan data. The specific clustering and visualization methods discussed include partitioning around medoids (PAM), t-distributed stochastic neighbor embedding (t-SNE), and the analysis of mixed data types using Gower's similarity coefficient.
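To make the mixed-data clustering idea concrete, here is a minimal sketch in Python. It is not taken from the presentation. It computes a Gower distance (range-normalized absolute difference for numeric features, simple mismatch for categorical ones, averaged over features) and then picks the medoids that minimize the total distance of every record to its nearest medoid. For brevity it uses an exhaustive search over medoid sets rather than the PAM swap heuristic. That targets the same objective but is only feasible for tiny data sets. The loan records, field choices, and function names are all hypothetical.

```python
from itertools import combinations


def gower_distance(a, b, numeric_ranges):
    """Gower distance between two records with mixed feature types.

    numeric_ranges[j] is (max - min) of feature j if it is numeric,
    or None if the feature is categorical.
    """
    total = 0.0
    for x, y, rng in zip(a, b, numeric_ranges):
        if rng is None:
            # Categorical feature: 0 when equal, 1 when different.
            total += 0.0 if x == y else 1.0
        elif rng > 0:
            # Numeric feature: absolute difference scaled by its range.
            total += abs(x - y) / rng
        # A constant numeric feature (range 0) contributes nothing.
    return total / len(a)


def distance_matrix(rows, is_numeric):
    """Pairwise Gower distances for a list of equal-length records."""
    ranges = []
    for j, numeric in enumerate(is_numeric):
        if numeric:
            column = [row[j] for row in rows]
            ranges.append(max(column) - min(column))
        else:
            ranges.append(None)
    n = len(rows)
    return [[gower_distance(rows[i], rows[j], ranges) for j in range(n)]
            for i in range(n)]


def best_medoids(dist, k):
    """Exhaustively find the k medoids minimizing total distance.

    Same objective as PAM, but brute force: small inputs only.
    """
    n = len(dist)

    def cost(medoids):
        return sum(min(dist[i][m] for m in medoids) for i in range(n))

    return min(combinations(range(n), k), key=cost)


# Hypothetical records: (loan_amount, interest_rate_pct, home_ownership)
loans = [
    (5000, 7.5, "RENT"),
    (6000, 8.0, "RENT"),
    (30000, 15.0, "MORTGAGE"),
    (28000, 14.0, "MORTGAGE"),
    (5500, 7.9, "OWN"),
]

dist = distance_matrix(loans, is_numeric=[True, True, False])
medoids = best_medoids(dist, k=2)
# Assign each record to its nearest medoid (label = medoid's row index).
labels = [min(medoids, key=lambda m: dist[i][m]) for i in range(len(loans))]
```

On real loan data, a library implementation of PAM and an approximate method such as t-SNE for visualizing the resulting distance matrix would be the practical choice. The brute-force search here only illustrates the objective being optimized.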