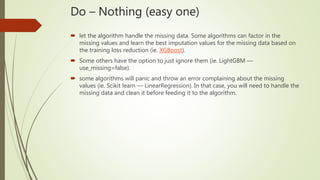

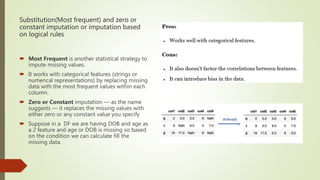

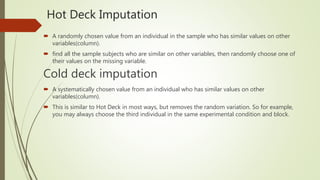

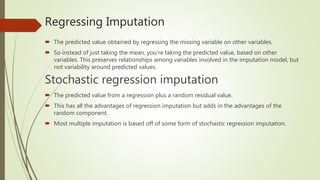

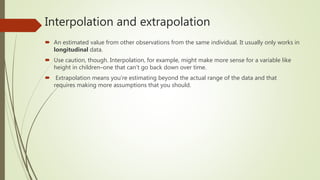

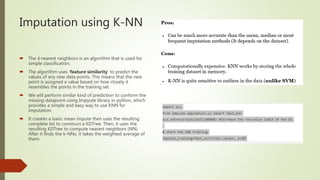

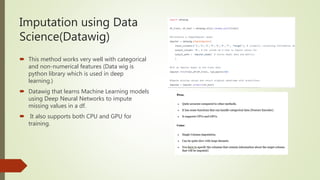

There are three main types of missing data: missing completely at random, missing at random, and not missing at random. Common techniques for handling missing data include mean/median imputation, hot deck imputation, cold deck imputation, regression imputation, stochastic regression imputation, K-nearest neighbors imputation, and multivariate imputation by chained equations. Modern deep learning methods can also be used to impute missing values, with techniques like Datawig that leverage neural networks.