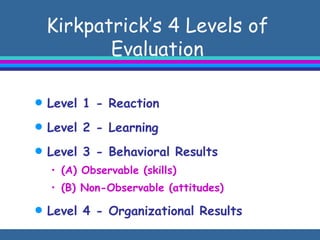

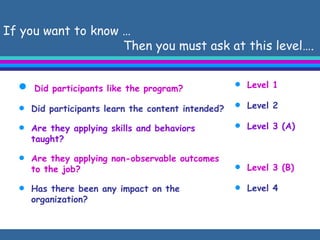

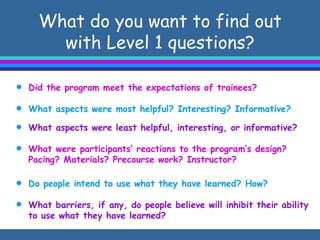

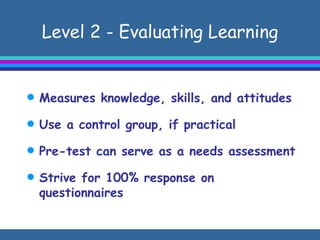

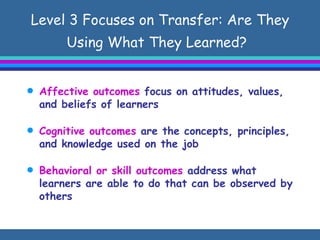

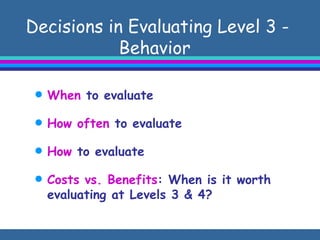

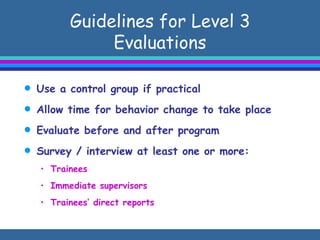

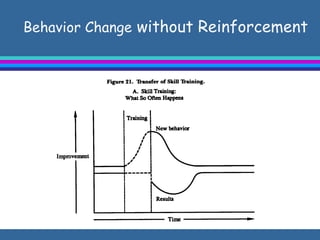

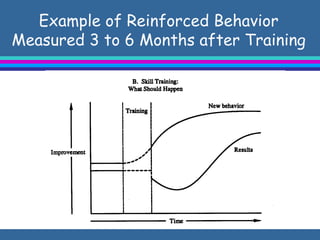

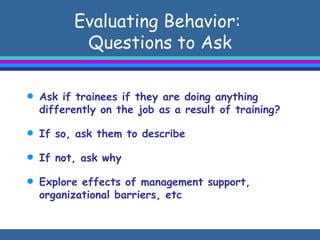

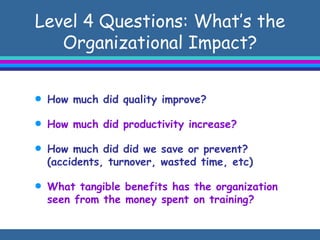

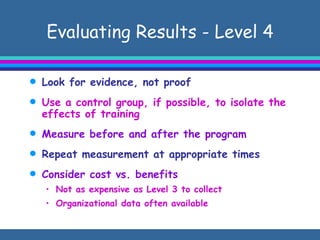

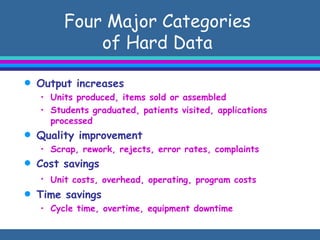

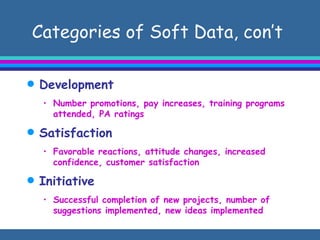

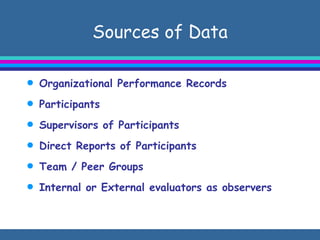

Kirkpatrick's four levels of evaluation assess training programs from different perspectives: Level 1 measures participant reactions, Level 2 measures learning, Level 3 evaluates behavior change, and Level 4 measures organizational results. Each level requires different data collection methods, and the higher levels are more difficult and expensive to measure. The model provides guidelines for evaluation questions, timing, and methods, and for comparing pre- and post-training metrics to determine a program's full impact.
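
As an illustration of comparing pre- and post-training metrics, here is a minimal sketch of a Level 2 (learning) comparison. It assumes each participant took the same quiz before and after training. The function name `average_gain` and the scores are hypothetical and not part of the Kirkpatrick model itself.

```python
from statistics import mean


def average_gain(pre_scores, post_scores):
    """Return the mean per-participant change between paired pre- and post-training scores."""
    if len(pre_scores) != len(post_scores):
        raise ValueError("pre and post scores must be paired per participant")
    if not pre_scores:
        raise ValueError("at least one participant is required")
    # Subtract each participant's pre-training score from their post-training score.
    return mean(post - pre for pre, post in zip(pre_scores, post_scores))


# Hypothetical quiz scores (percent correct) for four participants.
pre = [50, 60, 40, 70]
post = [70, 75, 65, 80]
gain = average_gain(pre, post)
print(f"Average gain: {gain} percentage points")
```

A positive average gain suggests that learning occurred, but on its own it says nothing about behavior change (Level 3) or organizational results (Level 4), which need their own data collection.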