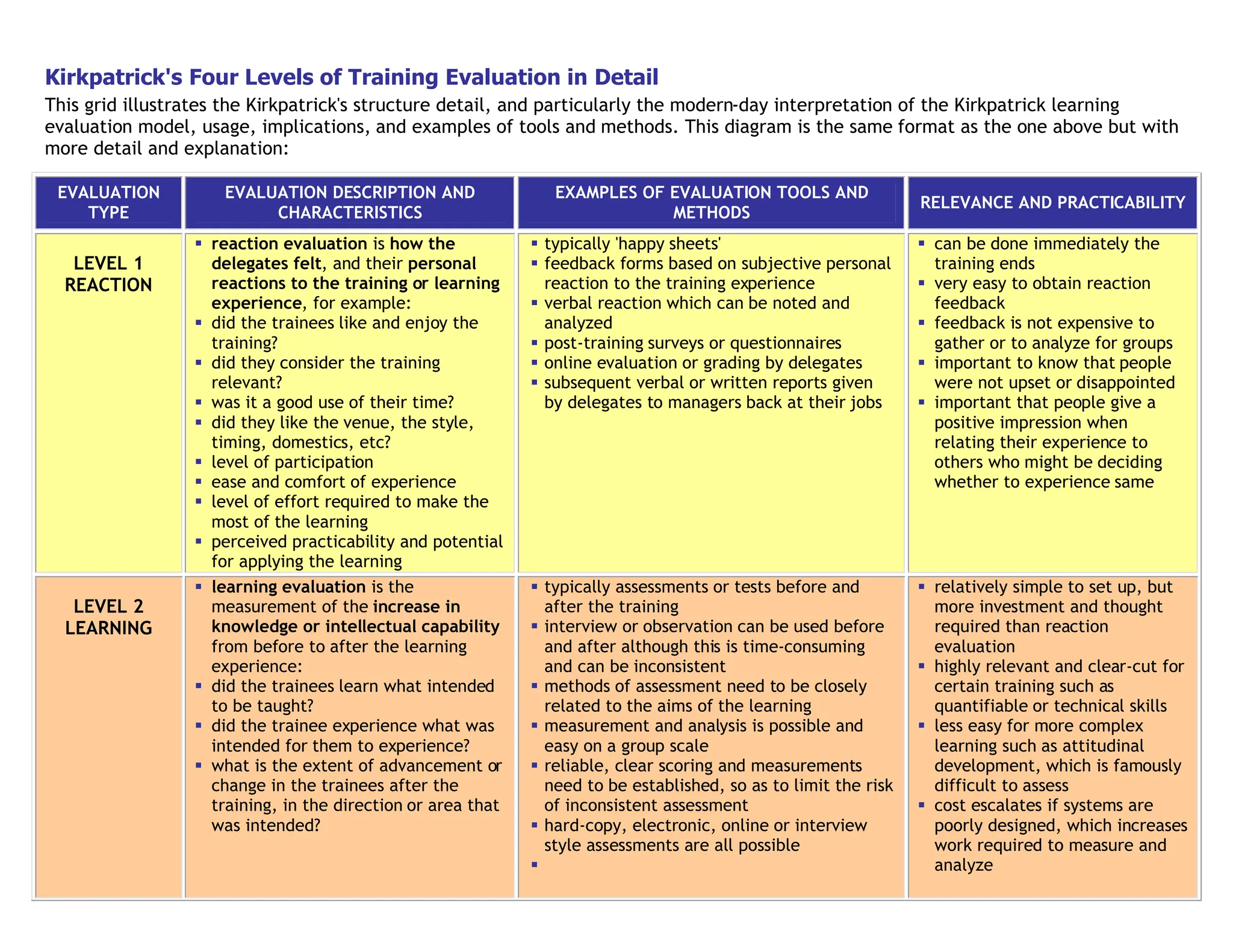

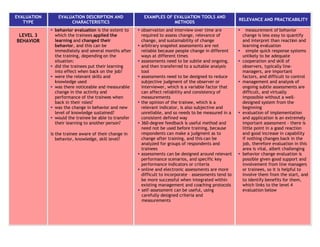

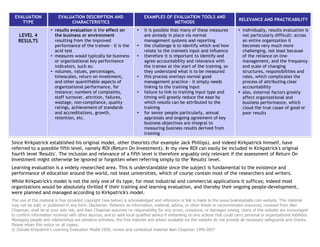

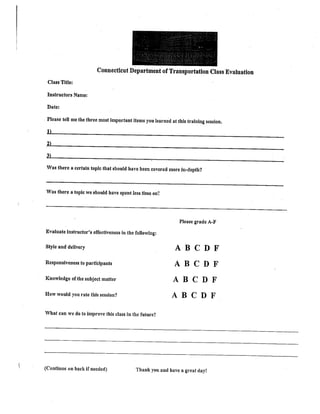

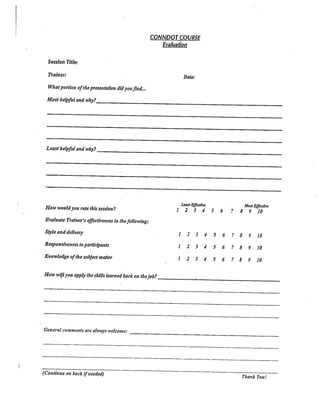

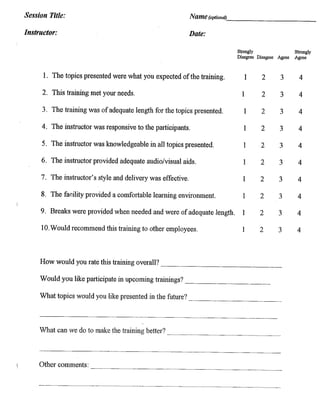

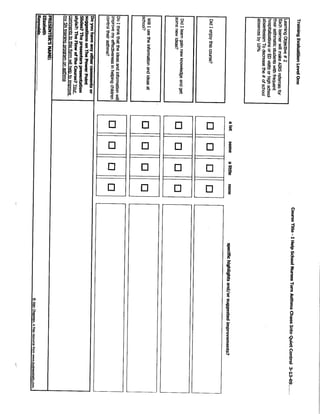

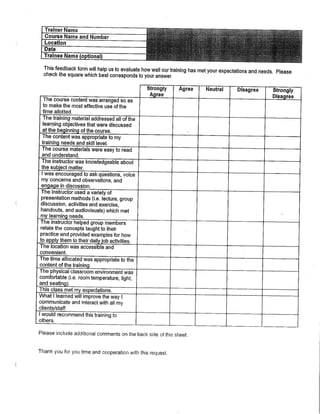

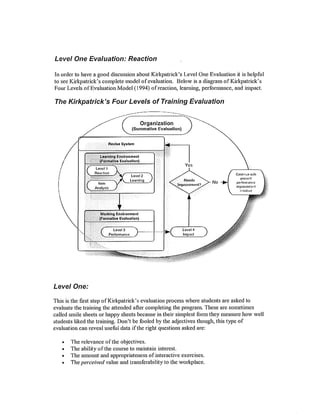

This document describes Kirkpatrick's four levels of training evaluation. It includes a diagram illustrating the levels and describes each level in detail. Level one evaluation, also known as reaction evaluation, measures how participants reacted to the training: whether they found it enjoyable, relevant, and a good use of their time. Gathering reaction feedback is important for improving training design and delivery. Although level one evaluation is simple to administer, the higher levels provide more meaningful data about learning outcomes, behavioral changes, and business impacts. The document also outlines various tools that can be used to measure outcomes at each level.