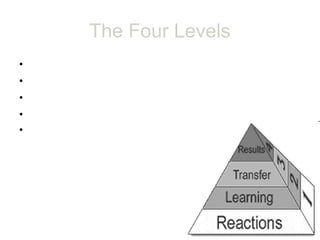

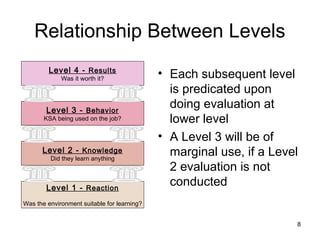

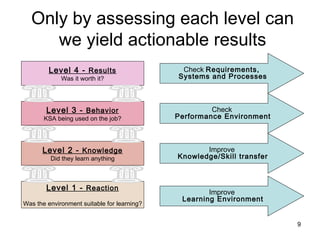

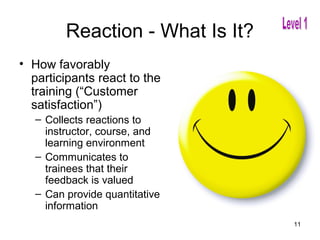

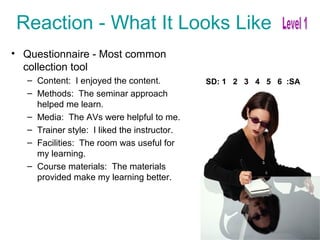

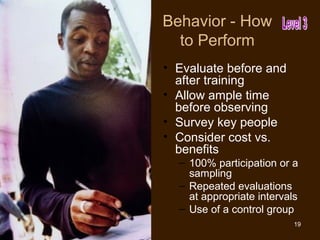

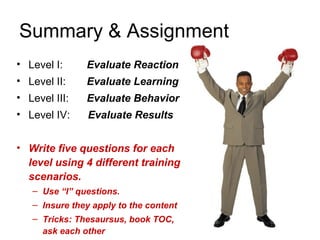

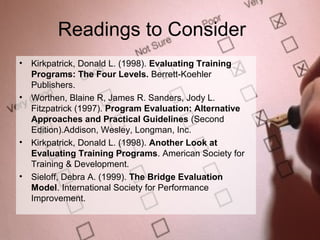

This document discusses Kirkpatrick's four-level model for evaluating training programs. It defines each of the four levels (reaction, learning, behavior, and results) and gives examples of assessment types and questions that can be used at each level. The key points are:

- Level 1 (reaction) assesses how participants react to the training.
- Level 2 (learning) evaluates what participants learned.
- Level 3 (behavior) measures whether behavior changes back on the job.
- Level 4 (results) assesses the final business results, or ROI, of the training program.
- Each level builds on the levels before it, and evaluating at all four levels is needed to produce actionable insights.
- The document gives guidance on performing assessments effectively at each level.
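Level 4 is where a training program is tied to money. As a hedged illustration that does not come from the slides, a common convention computes training ROI by dividing net program benefits (benefits minus costs) by program costs and expressing the result as a percentage. The function name and the figures below are hypothetical. In practice, the hard part is isolating and monetizing the benefits that can be attributed to the training; this sketch assumes that work has already been done.

```python
def training_roi_percent(program_benefits: float, program_costs: float) -> float:
    """Return ROI as a percentage: (benefits - costs) / costs * 100.

    Hypothetical helper. Assumes both values are already expressed in the
    same monetary unit and that the benefits are attributable to the training.
    """
    if program_costs <= 0:
        raise ValueError("program_costs must be positive")
    net_benefits = program_benefits - program_costs
    return net_benefits / program_costs * 100.0


# Hypothetical figures for illustration only.
print(training_roi_percent(program_benefits=150_000, program_costs=100_000))  # → 50.0
```

A result of 50.0 means that each unit of currency spent on the program returned its cost plus an additional 0.5 in net benefits. A negative value means the program cost more than it returned.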