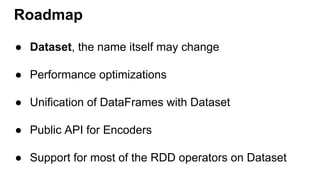

The document introduces the Dataset API in Spark, which adds compile-time type safety to the optimized execution that DataFrames already provide. Datasets let you operate on domain objects with compiled functions rather than on untyped Rows. Encoders convert those objects to and from Spark SQL's internal representation, so operations can be type-checked and the object type is retained across distributed operations. The document outlines the history of Spark APIs, the limitations of DataFrames, and how Datasets address them through encoders and case classes rather than Rows.
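The encoder idea in the summary can be sketched without Spark. The block below is a toy model only: `ToyRow`, `ToyEncoder`, and `roundTrip` are hypothetical names invented for illustration, not Spark classes, and Spark's real encoders target its internal format rather than a `Vector[Any]`.

```scala
// Toy model of the encoder idea. ToyRow, ToyEncoder and roundTrip are
// hypothetical names for illustration; they are not part of Spark.
object ToyEncoderSketch {
  // An untyped record: the compiler only knows each field as Any, like a Row.
  type ToyRow = Vector[Any]

  // Converts a domain object of type T to an untyped record and back.
  trait ToyEncoder[T] {
    def toRow(value: T): ToyRow
    def fromRow(row: ToyRow): T
  }

  case class Person(name: String, age: Long)

  object Person {
    // Placing the encoder in the companion object makes it available implicitly.
    implicit val encoder: ToyEncoder[Person] = new ToyEncoder[Person] {
      def toRow(p: Person): ToyRow = Vector(p.name, p.age)
      def fromRow(row: ToyRow): Person =
        Person(row(0).asInstanceOf[String], row(1).asInstanceOf[Long])
    }
  }

  // Sends a value through its untyped form and recovers the typed object,
  // using whichever encoder the compiler finds for T.
  def roundTrip[T](value: T)(implicit enc: ToyEncoder[T]): T =
    enc.fromRow(enc.toRow(value))
}
```

Calling `ToyEncoderSketch.roundTrip(ToyEncoderSketch.Person("A", 10L))` returns a `Person` equal to the input, whereas calling `roundTrip` on a type with no encoder in scope fails to compile. That is the property the Dataset API relies on: the element type travels with the data.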

![Operating on domain objects

val personRDD = sc.makeRDD(Seq(Person("A",10), Person("B",20)))

//Create RDD[Person]

val personDF = sqlContext.createDataFrame(personRDD)

// Create a DataFrame from an RDD[Person]

personDF.rdd

// We get back an RDD[Row], not an RDD[Person]: the element type is lost](https://image.slidesharecdn.com/introduction-to-dataset-160215173515/85/Introduction-to-dataset-9-320.jpg)

![Compile time safety check

case class Person(name: String, age: Long)

val dataframe = sqlContext.read.json("people.json")

val ds : Dataset[Person] = dataframe.as[Person]

ds.filter(p => p.age > 25)

ds.filter(p => p.salary > 12500)

//error: value salary is not a member of Person](https://image.slidesharecdn.com/introduction-to-dataset-160215173515/85/Introduction-to-dataset-15-320.jpg)
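To contrast the two failure modes, here is a minimal sketch that assumes Spark SQL is on the classpath and uses a Spark 1.6-style `SQLContext` in local mode. `SafetyCheck`, `missingColumnFails`, and `olderThan` are hypothetical names chosen for this example. The DataFrame version of the `salary` mistake compiles and is only rejected when Spark analyzes the query, while the Dataset version cannot be written at all without a compile error.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.sql.{AnalysisException, DataFrame, Dataset, SQLContext}
import scala.util.{Failure, Try}

// Top-level case class so that Spark can derive an Encoder[Person].
case class Person(name: String, age: Long)

object SafetyCheck {
  // DataFrame path: a reference to a missing column compiles fine and is
  // only rejected when Spark analyzes the query at runtime.
  def missingColumnFails(df: DataFrame): Boolean =
    Try(df.filter("salary > 12500")) match {
      case Failure(_: AnalysisException) => true
      case _                             => false
    }

  // Dataset path: the predicate is an ordinary Scala function over Person,
  // so writing p.salary here would be a compile error instead.
  def olderThan(ds: Dataset[Person], minAge: Long): Array[Person] =
    ds.filter(p => p.age > minAge).collect()

  def main(args: Array[String]): Unit = {
    // Assumption: local mode; the app name and master are placeholders.
    val sc = new SparkContext(
      new SparkConf().setAppName("safety-check").setMaster("local[*]"))
    val sqlContext = new SQLContext(sc)
    import sqlContext.implicits._ // provides the implicit Encoder[Person]

    val df = sqlContext.createDataFrame(
      sc.makeRDD(Seq(Person("A", 10), Person("B", 30))))

    println(s"missing column rejected at runtime: ${missingColumnFails(df)}")
    olderThan(df.as[Person], 25).foreach(println)

    sc.stop()
  }
}
```

The helper functions take the DataFrame or Dataset as a parameter so the same checks can be reused against any context. On newer Spark versions a `SparkSession` would normally replace the `SQLContext` setup.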

![Operating on domain objects

val personRDD = sc.makeRDD(Seq(Person("A",10), Person("B",20)))

//Create RDD[Person]

val personDS = sqlContext.createDataset(personRDD)

// Create a Dataset from an RDD[Person]

personDS.rdd

// We get back an RDD[Person], not the RDD[Row] that a DataFrame returns](https://image.slidesharecdn.com/introduction-to-dataset-160215173515/85/Introduction-to-dataset-16-320.jpg)

![Functional programming

case class Person(name: String, age: Long) // Long: the type Spark infers for JSON integers

val dataframe = sqlContext.read.json("people.json")

val ds: Dataset[Person] = dataframe.as[Person]

// Compute histogram of age by name

val hist = ds.groupBy(_.name).mapGroups({

case (name, people) => {

val buckets = new Array[Int](10)

people.map(_.age).foreach { a =>

buckets((a / 10).toInt) += 1

}

(name, buckets)

}

})](https://image.slidesharecdn.com/introduction-to-dataset-160215173515/85/Introduction-to-dataset-17-320.jpg)
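Because the function passed to `mapGroups` is plain Scala over domain objects, its logic can be exercised on a local collection with no cluster. The sketch below is a Spark-free version of the histogram. `AgeHistogram`, `bucketAges`, and `histogramByName` are hypothetical names, and the clamping of out-of-range ages is an added assumption: the slide's code would throw an `ArrayIndexOutOfBoundsException` for an age of 100 or more.

```scala
// Spark-free sketch of the per-group histogram logic. All names here are
// hypothetical and chosen for illustration.
object AgeHistogram {
  // Long matches the type Spark infers for integral JSON fields.
  case class Person(name: String, age: Long)

  // Counts ages into ten buckets of width 10. Assumption: ages below 0 or
  // at 100 and above are clamped into the first or last bucket.
  def bucketAges(people: Iterator[Person]): Array[Int] = {
    val buckets = new Array[Int](10)
    people.map(_.age).foreach { a =>
      val idx = math.min(math.max(a / 10, 0L), buckets.length - 1L).toInt
      buckets(idx) += 1
    }
    buckets
  }

  // Groups a local collection by name and applies bucketAges to each group,
  // mirroring the grouping followed by mapGroups on a Dataset.
  def histogramByName(people: Seq[Person]): Map[String, Vector[Int]] =
    people.groupBy(_.name).map { case (name, group) =>
      name -> bucketAges(group.iterator).toVector
    }
}
```

For example, two people named "A" aged 10 and 15 both land in bucket 1, so `histogramByName` maps "A" to a vector with 2 at index 1 and zeros elsewhere.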

(

implicit val encoder: Encoder[T])

class DataFrame(

sqlContext: SQLContext,

queryExecution: QueryExecution)

extends Dataset[Row](sqlContext, queryExecution)(new RowEncoder)](https://image.slidesharecdn.com/introduction-to-dataset-160215173515/85/Introduction-to-dataset-24-320.jpg)