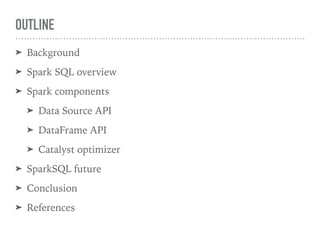

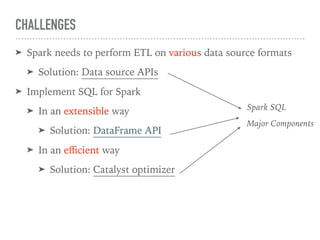

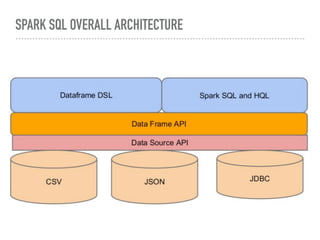

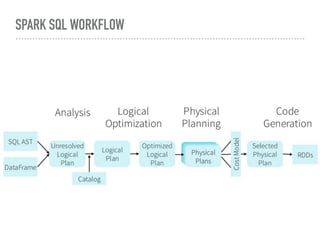

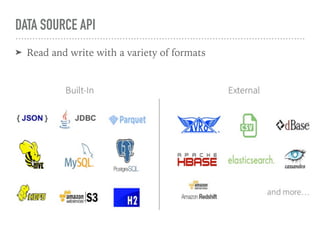

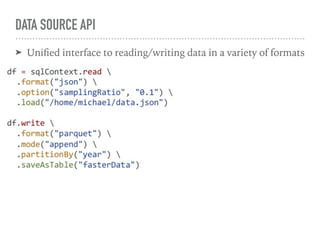

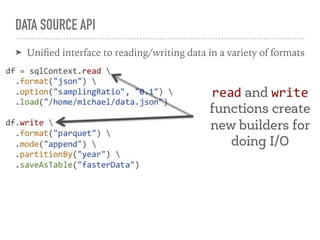

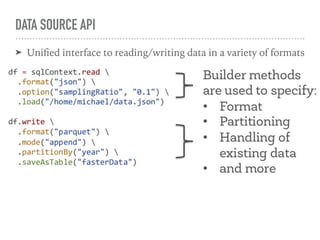

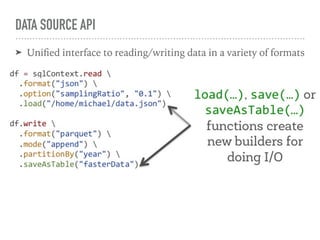

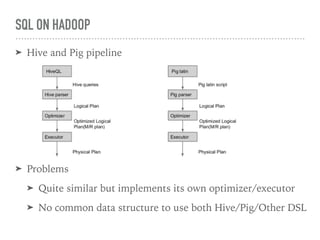

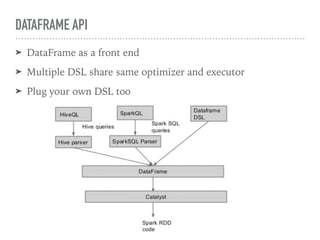

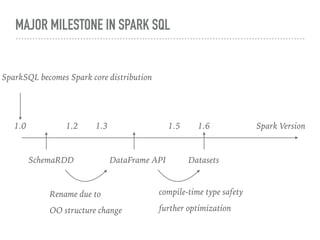

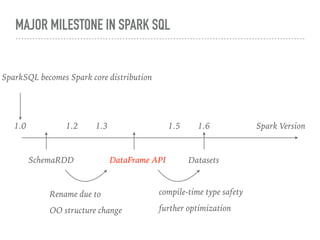

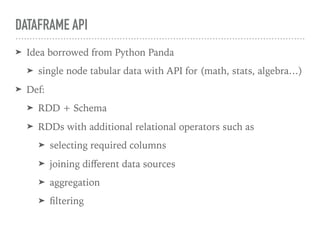

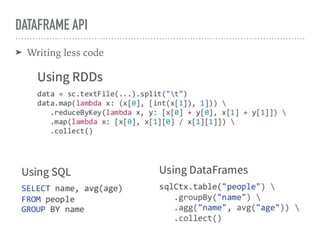

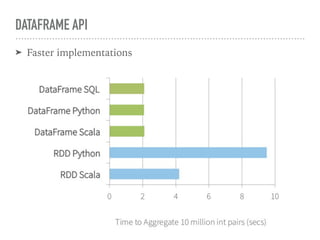

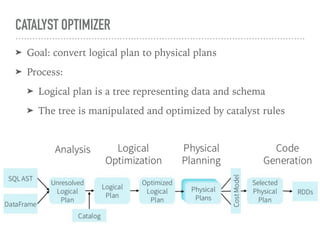

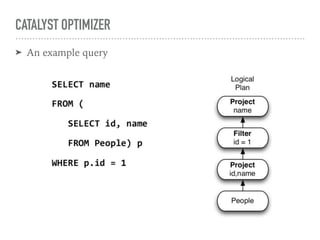

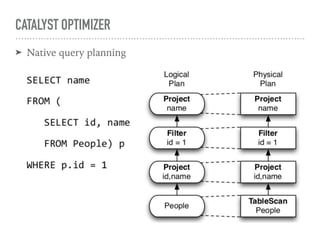

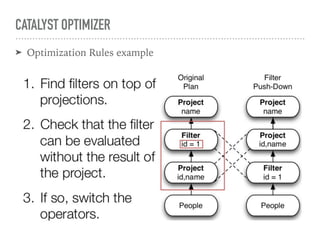

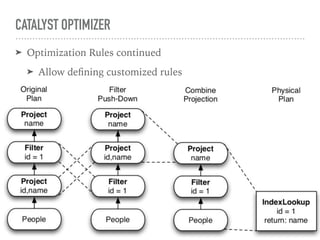

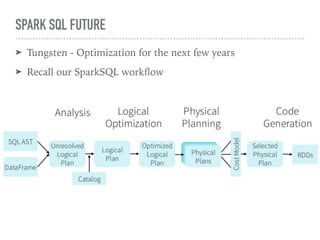

Spark SQL is the Apache Spark module for working with structured data. It includes a DataFrame API for expressing relational operations in ordinary Spark programs, support for running SQL queries over the same data, the Catalyst optimizer, which turns a query's logical plan into an optimized physical plan, and a data source API that provides a uniform way to read and write data in formats such as JSON and Parquet. Together, these aim to make relational queries on Spark more efficient and easier to extend.