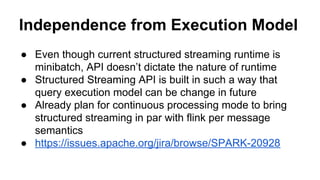

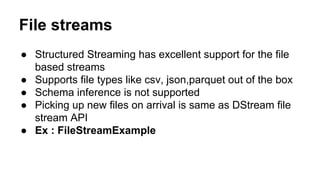

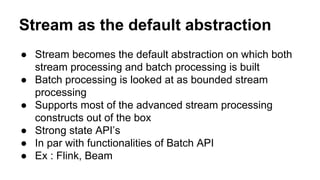

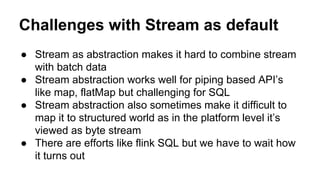

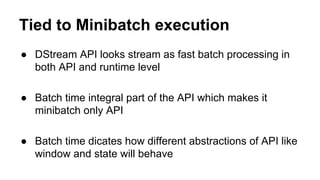

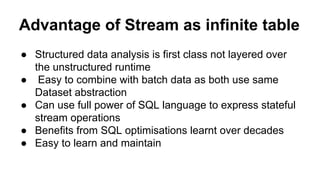

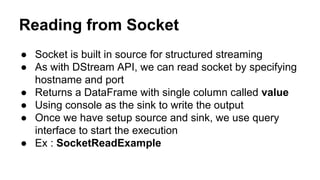

This document introduces Structured Streaming in Apache Spark. It discusses the evolution of stream processing, the drawbacks of the DStream API, and the advantages of Structured Streaming. Key points: Structured Streaming models a stream as an infinite table (Dataset), so stream transformations can be expressed with the SQL and Dataset APIs; it supports event-time processing, state management, and checkpointing for fault tolerance; and, because it shares the Dataset abstraction with batch processing, stream and batch processing can be combined more easily. The document also gives examples of reading from and writing to different streaming sources and sinks.
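The "stream as an infinite table" idea can be illustrated without Spark. The sketch below is plain Python, not the Structured Streaming API: the class `UnboundedTable` and its method `append_batch` are hypothetical names invented for this illustration. It only shows the model, assuming a word-count query: arriving data is appended as new rows, the result is updated incrementally, and the incremental result matches what a batch query over all rows seen so far would return.

```python
from collections import Counter
from typing import Iterable, List


class UnboundedTable:
    """Toy stand-in for a stream modeled as an ever-growing table (not a Spark API)."""

    def __init__(self) -> None:
        self.rows: List[str] = []               # conceptual input table
        self.word_counts: Counter = Counter()   # result table, kept up to date

    def append_batch(self, lines: Iterable[str]) -> None:
        """New stream data arrives as new rows; the result is updated incrementally."""
        for line in lines:
            self.rows.append(line)
            self.word_counts.update(line.split())


table = UnboundedTable()
table.append_batch(["hello spark", "hello streaming"])
table.append_batch(["spark streaming"])

# The same query run as a batch job over every row seen so far.
batch_result = Counter(word for row in table.rows for word in row.split())
print(table.word_counts == batch_result)
```

In Spark itself the engine performs this incremental maintenance, so the same Dataset query can be written once and run against either a bounded or an unbounded input.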

![Questions from DStream users](https://image.slidesharecdn.com/introductiontostructuredstreaming-171014154555/85/Introduction-to-Structured-streaming-18-320.jpg)

Questions from DStream users:

- Where is the batch time? In other words, how frequently is this going to run?
- `awaitTermination` is called on the query, not on the session. Does that mean we can have multiple queries running in parallel?
- We didn't specify `local[2]`. How does that work?
- As this program uses DataFrames, how does schema inference work?