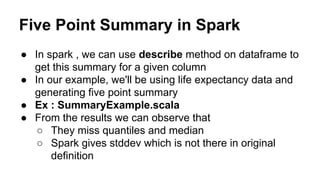

This document discusses exploratory data analysis (EDA) techniques for large datasets using Spark and notebooks. It covers generating a five-number summary (minimum, first quartile, median, third quartile, maximum), detecting outliers, creating histograms, and visualizing EDA results. EDA is an interactive process for understanding how data is distributed and how variables relate to one another before modeling. Spark makes interactive EDA feasible on large datasets: notebooks provide the visualizations, and Pandas handles local analysis of data small enough to fit on a single machine.
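The sketch below is a minimal, pure-Python illustration of the statistics named above. It assumes a numeric column has already been reduced to a list small enough to hold locally, for example a sample collected from Spark. It uses linear-interpolation quantiles and Tukey's 1.5 × IQR fences for outliers. Both are common conventions, assumed here rather than taken from the source. The helper names (`quantile`, `five_number_summary`, `tukey_outliers`, `histogram`) are hypothetical. On a full Spark DataFrame, quartiles would more typically come from `DataFrame.approxQuantile`, which trades exactness for scalability.

```python
import math
from typing import Dict, List, Sequence, Tuple


def quantile(sorted_values: Sequence[float], q: float) -> float:
    """Linear-interpolation quantile of already-sorted data, 0 <= q <= 1."""
    pos = (len(sorted_values) - 1) * q
    lo, hi = math.floor(pos), math.ceil(pos)
    frac = pos - lo
    return sorted_values[lo] + (sorted_values[hi] - sorted_values[lo]) * frac


def five_number_summary(values: Sequence[float]) -> Dict[str, float]:
    """Return min, Q1, median, Q3 and max of a non-empty sequence."""
    if not values:
        raise ValueError("five-number summary of empty data")
    s = sorted(values)
    return {
        "min": s[0],
        "q1": quantile(s, 0.25),
        "median": quantile(s, 0.5),
        "q3": quantile(s, 0.75),
        "max": s[-1],
    }


def tukey_outliers(values: Sequence[float], k: float = 1.5) -> List[float]:
    """Values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences, assumed rule)."""
    summary = five_number_summary(values)
    iqr = summary["q3"] - summary["q1"]
    lower = summary["q1"] - k * iqr
    upper = summary["q3"] + k * iqr
    return [v for v in values if v < lower or v > upper]


def histogram(values: Sequence[float], num_bins: int) -> Tuple[List[float], List[int]]:
    """Equal-width bins from min to max; the last bin also includes the max."""
    if not values or num_bins < 1:
        raise ValueError("need data and at least one bin")
    lo, hi = min(values), max(values)
    if lo == hi:
        # All values identical: a single bin holds everything.
        return [lo, hi], [len(values)]
    width = (hi - lo) / num_bins
    edges = [lo + i * width for i in range(num_bins)] + [hi]
    counts = [0] * num_bins
    for v in values:
        # Clamp so the maximum (and any rounding overshoot) lands in the last bin.
        idx = min(int((v - lo) / width), num_bins - 1)
        counts[idx] += 1
    return edges, counts


if __name__ == "__main__":
    data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
    print(five_number_summary(data))
    print(tukey_outliers(data))  # 100 falls above the upper fence of 14.5
    print(histogram(data, 4))
```

On a cluster, the same fences could be applied as a DataFrame filter once Q1 and Q3 are known. Only the quantile computation then needs a full pass over the data, and the small histogram counts are what a notebook would plot.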