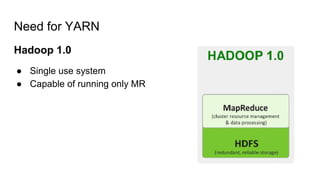

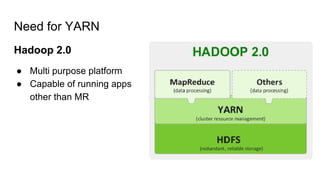

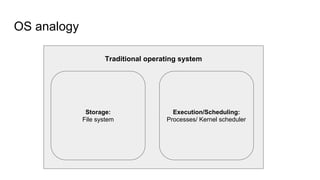

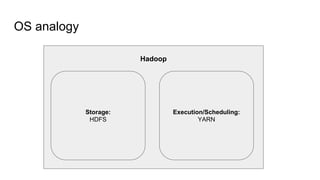

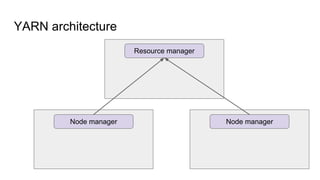

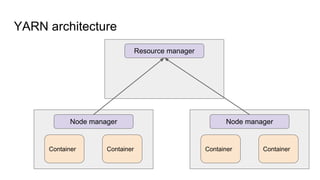

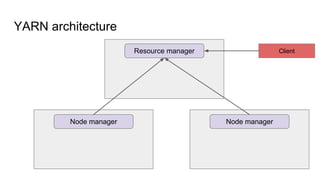

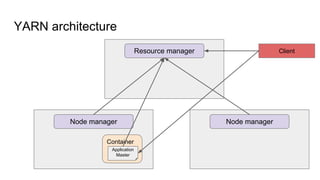

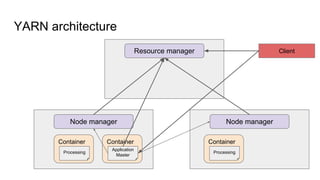

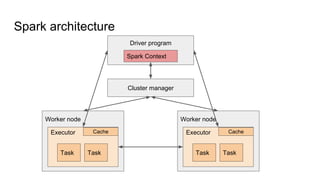

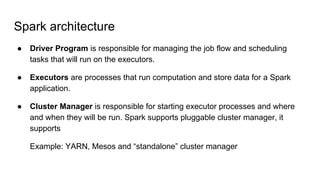

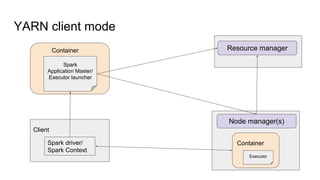

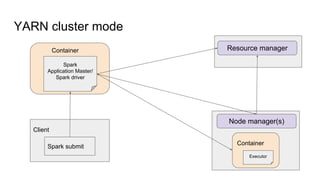

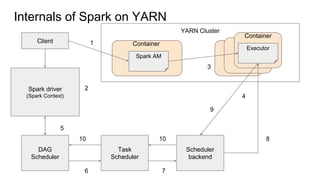

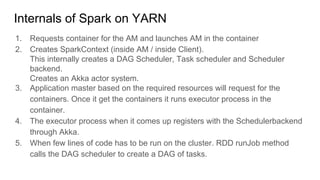

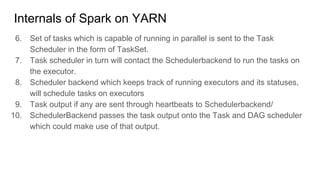

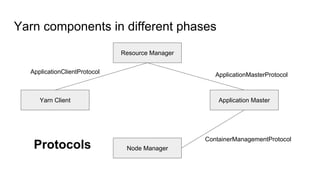

The document gives an overview of Apache Spark running on YARN: its architecture, its two modes (client and cluster), and why Hadoop 2.0 needed YARN in order to serve as a multi-purpose platform. It discusses how Spark can leverage existing clusters, data locality, and resource management, and it walks through the internals of the Spark-on-YARN execution process. Recent developments include dynamic resource allocation and performance improvements through better container management and communication protocols.
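
To make the two modes and dynamic resource allocation more concrete, here is a minimal sketch of how a `spark-submit` invocation for YARN might be assembled. The helper `build_spark_submit`, the application name `my_app.py`, and the executor bounds are hypothetical and do not come from the slides. The sketch assumes a Spark release that accepts `--master yarn` together with `--deploy-mode` (older releases used `yarn-client` and `yarn-cluster` as master values instead), and it assumes the external shuffle service is available on the cluster's NodeManagers, which dynamic allocation normally requires.

```python
import shlex


def build_spark_submit(app, deploy_mode="cluster", dynamic_allocation=True,
                       min_executors=1, max_executors=10):
    """Return a spark-submit argument list for running `app` on YARN.

    Hypothetical helper for illustration; it only builds the command
    and never launches anything.
    """
    if deploy_mode not in ("client", "cluster"):
        raise ValueError("deploy_mode must be 'client' or 'cluster'")

    # client mode: the driver runs in the submitting process.
    # cluster mode: the driver runs inside the YARN ApplicationMaster.
    cmd = ["spark-submit", "--master", "yarn", "--deploy-mode", deploy_mode]

    if dynamic_allocation:
        # Standard Spark configuration keys; the values are example choices.
        conf = {
            "spark.dynamicAllocation.enabled": "true",
            "spark.shuffle.service.enabled": "true",
            "spark.dynamicAllocation.minExecutors": str(min_executors),
            "spark.dynamicAllocation.maxExecutors": str(max_executors),
        }
        for key, value in conf.items():
            cmd += ["--conf", f"{key}={value}"]

    cmd.append(app)
    return cmd


if __name__ == "__main__":
    # Print the command as a single shell-quoted line (Python 3.8+).
    print(shlex.join(build_spark_submit("my_app.py")))
```

Returning a list rather than a string keeps the arguments easy to inspect or to pass directly to `subprocess.run` without shell quoting issues.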