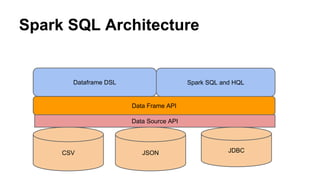

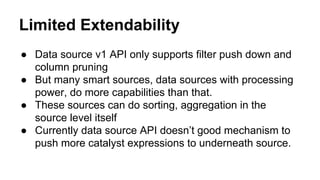

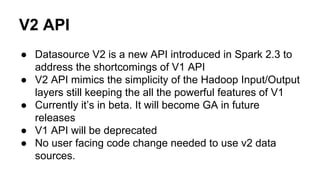

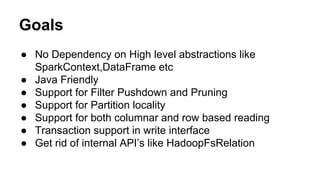

The document discusses the introduction of the DataSource V2 API in Spark 2.3, highlighting its improvements over the V1 API, which suffered from various limitations. Key enhancements in V2 include Java friendliness, support for partition locality, transaction support, and the ability to handle both row and columnar data efficiently. The transition to V2 aims to eliminate dependencies on high-level abstractions and provide a more robust interface for data sources.

![Dependency on High Level API

● Data sources are lowest level abstraction in the stack

● Data source V1 API depended on high level user facing

abstractions like SQLContext and DataFrame

def createRelation(sqlContext: SQLContext,

parameters: Map[String, String]): BaseRelation

● As the spark evolved, these abstractions got deprecated

and replaced by better ones](https://image.slidesharecdn.com/introductiontodatasourcev2-180708044933/85/Introduction-to-Datasource-V2-API-11-320.jpg)

![Lack of Support for Columnar Read

def buildScan(): RDD[Row]

● From above API , it’s apparent that API reads the data

in row format

● But many analytics sources today are columnar in

nature

● So if the underneath columnar source is read into row

format it loses all the performance benefits.](https://image.slidesharecdn.com/introductiontodatasourcev2-180708044933/85/Introduction-to-Datasource-V2-API-13-320.jpg)

![DataSourceReader Interface

● Entry Point to the Data Source

● SubClass of ReaderSupport

● Has Two Methods

○ def readSchema():StructType

○ def createDataReaderFactories:List[DataReaderFactory]

● Responsible for Schema inference and creating data

factories

● What may be list indicate here?](https://image.slidesharecdn.com/introductiontodatasourcev2-180708044933/85/Introduction-to-Datasource-V2-API-23-320.jpg)