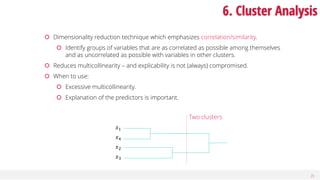

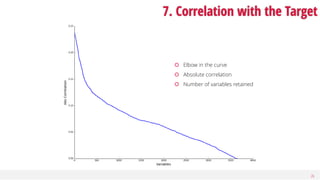

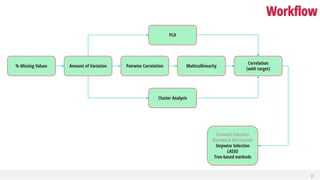

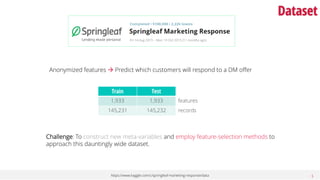

This document discusses dimensionality reduction, the process of reducing the number of features used to build a machine learning model, either by selecting a subset of the original features or by deriving a smaller set of new ones. It outlines techniques such as principal component analysis, cluster analysis, and feature selection methods for managing high-dimensional data and the curse of dimensionality. The guide emphasizes removing redundant and irrelevant features to improve predictive power and model efficiency.

![Slide 8](https://image.slidesharecdn.com/dimreductiontechniquespydatadcoct2016-170626164019/85/Feature-Reduction-Techniques-8-320.jpg)

Because…

True dimensionality <<< Observed dimensionality

The abundance of redundant and irrelevant features

Curse of dimensionality

With a fixed number of training samples, the predictive power reduces as the dimensionality increases. [Hughes phenomenon]

With $d$ binary variables, the number of possible combinations is $O(2^d)$.

Value of Analytics

Descriptive, Diagnostic, Predictive, Prescriptive

Hindsight, Insight, Foresight

Law of Parsimony [Occam’s Razor]

Other things being equal, simpler explanations are generally better than complex ones.

Overfitting

Execution time (Algorithm and data)
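The curse-of-dimensionality point on this slide (a fixed number of training samples against a number of possible combinations that grows as 2^d for d binary variables) can be made concrete with a small simulation. The sketch below is illustrative only and is not taken from the slides: the function name `coverage`, the sample size of 1000, and the use of uniformly random binary rows are all assumptions chosen for the example.

```python
import random


def coverage(n_samples: int, d: int, seed: int = 0) -> float:
    """Fraction of the 2**d possible binary feature combinations that
    appear at least once among n_samples uniformly random rows.

    Hypothetical helper for illustration; each row of d binary features
    is encoded as a single d-bit integer.
    """
    rng = random.Random(seed)
    seen = {rng.getrandbits(d) for _ in range(n_samples)}
    return len(seen) / 2 ** d


if __name__ == "__main__":
    n = 1000  # fixed training-set size (assumed for the example)
    for d in (5, 10, 20, 30):
        print(f"d={d:2d}  combinations={2 ** d:>13,}  "
              f"coverage={coverage(n, d):.8f}")
```

With the sample size held fixed, the fraction of the feature space that the data actually covers can never exceed `n_samples / 2**d`, so it collapses toward zero as `d` grows. This is one way to see why adding dimensions without adding samples tends to hurt predictive power.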