This document provides an overview of three common statistical hypothesis tests:

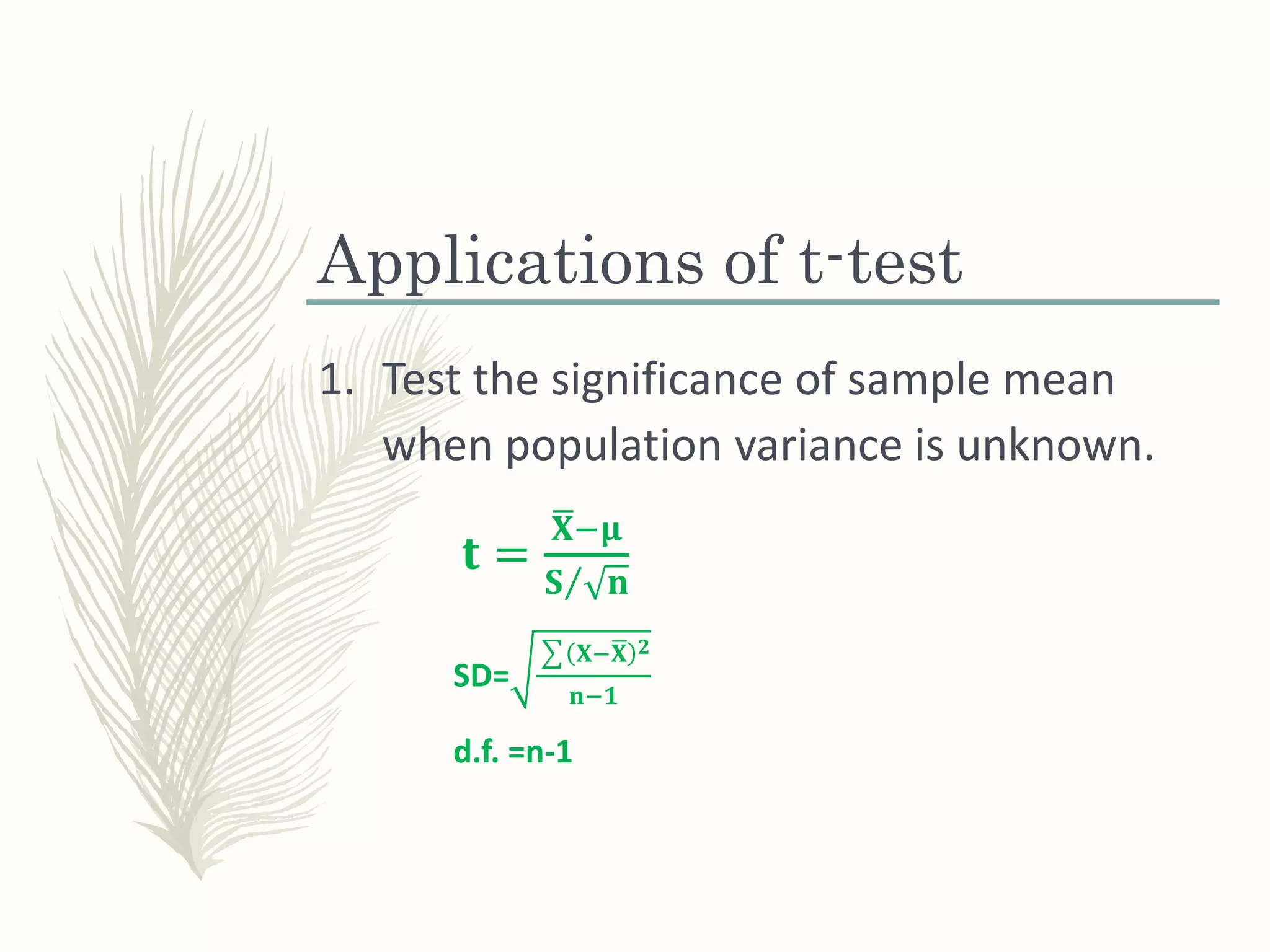

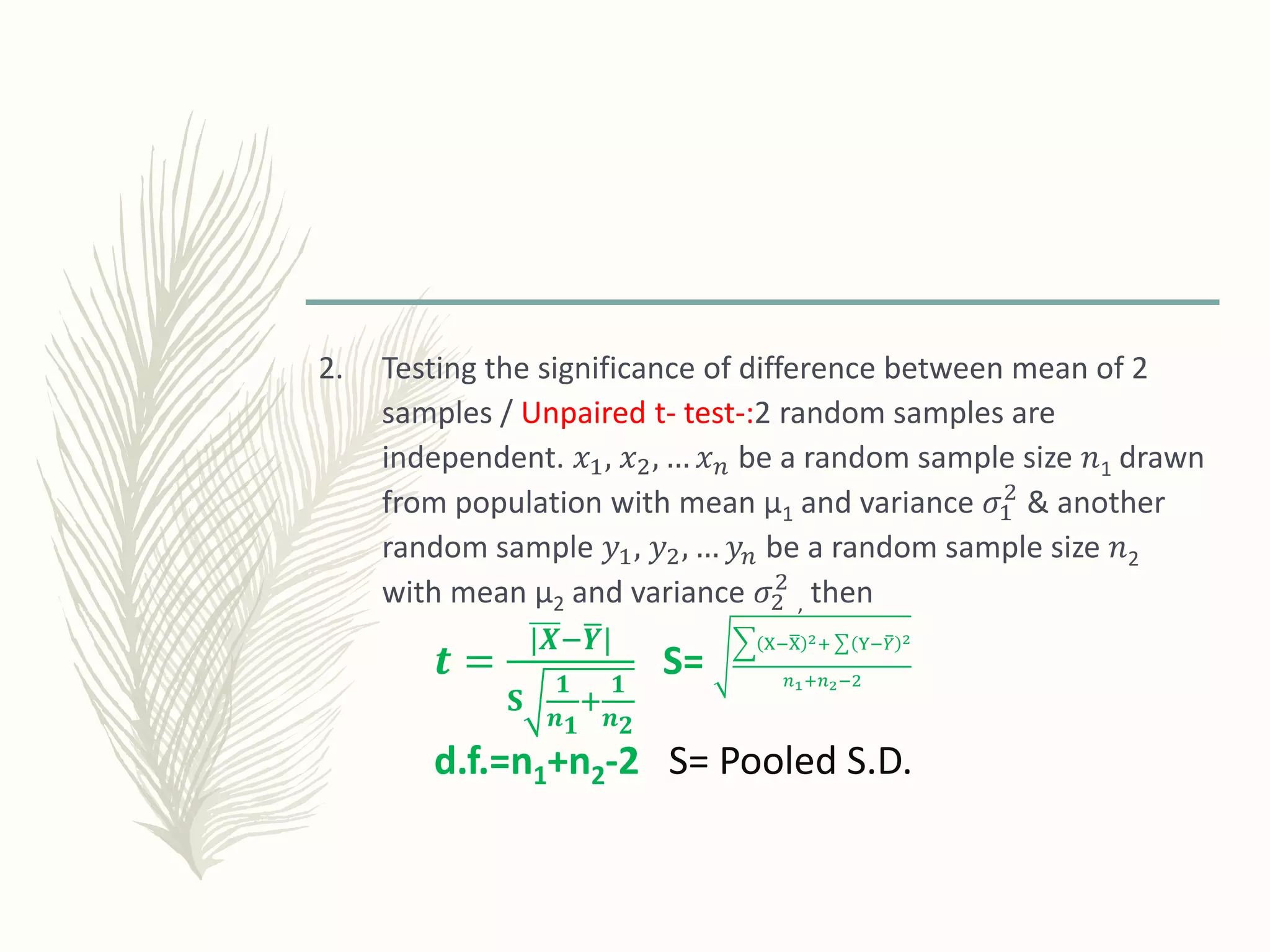

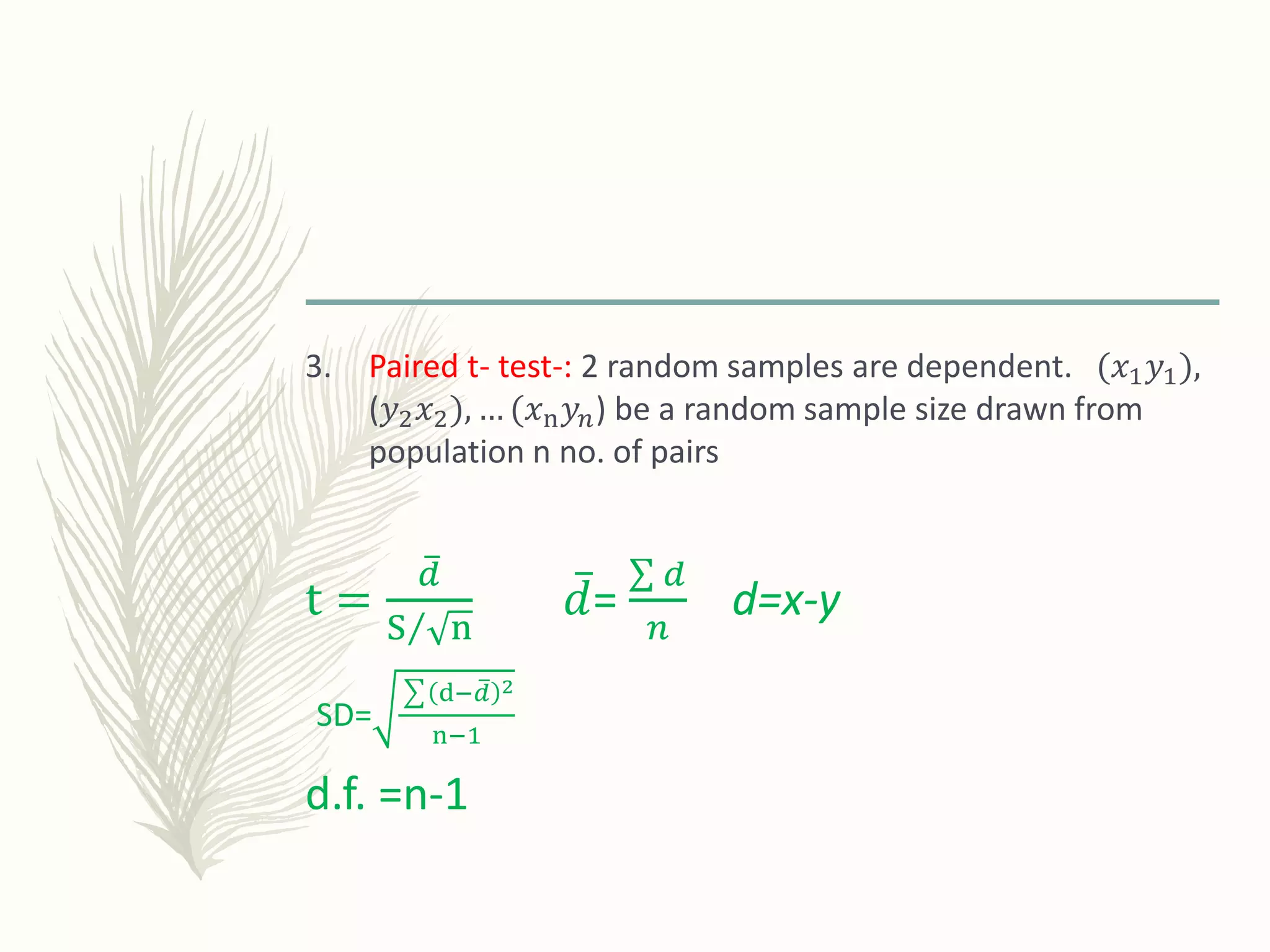

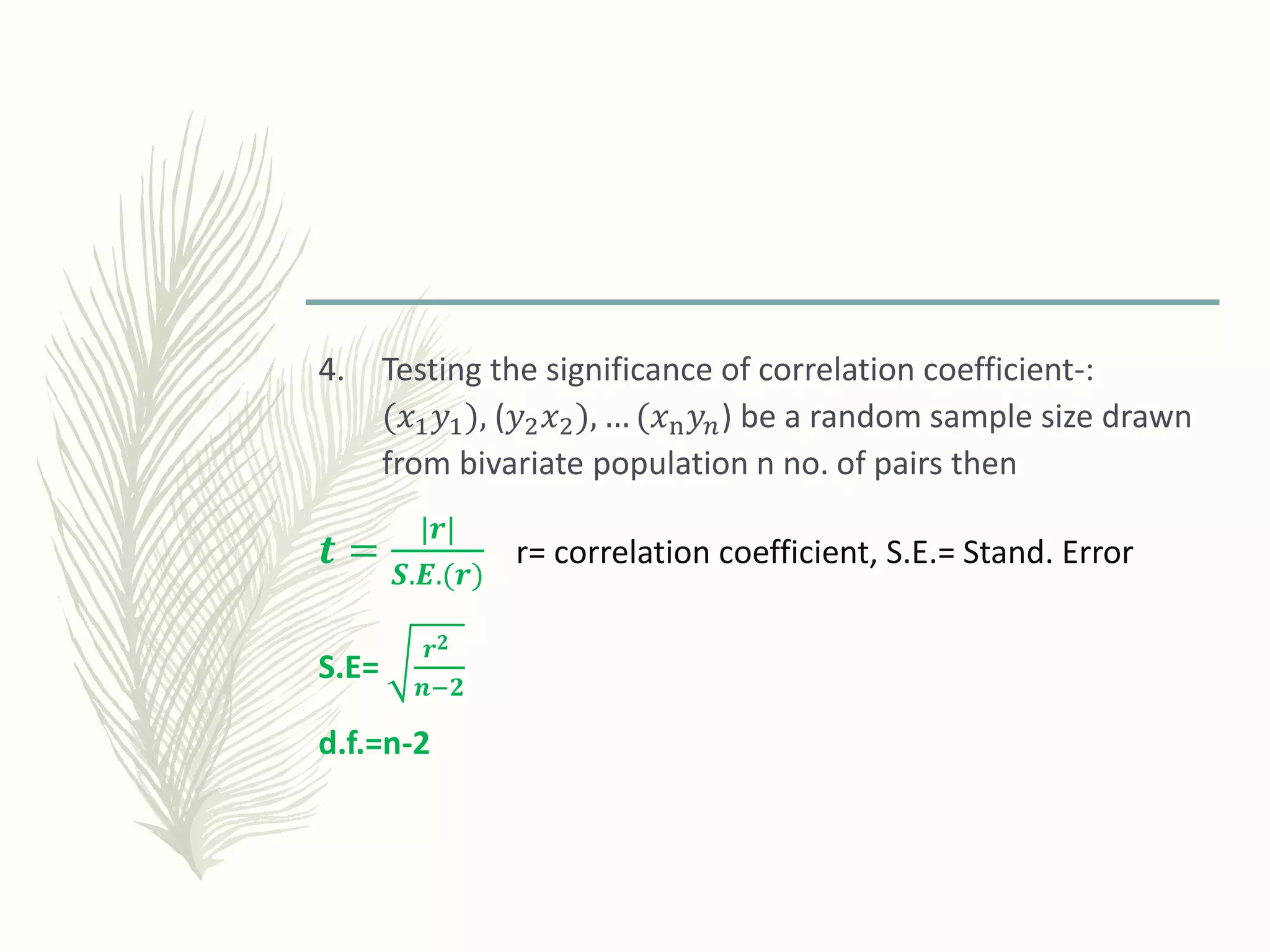

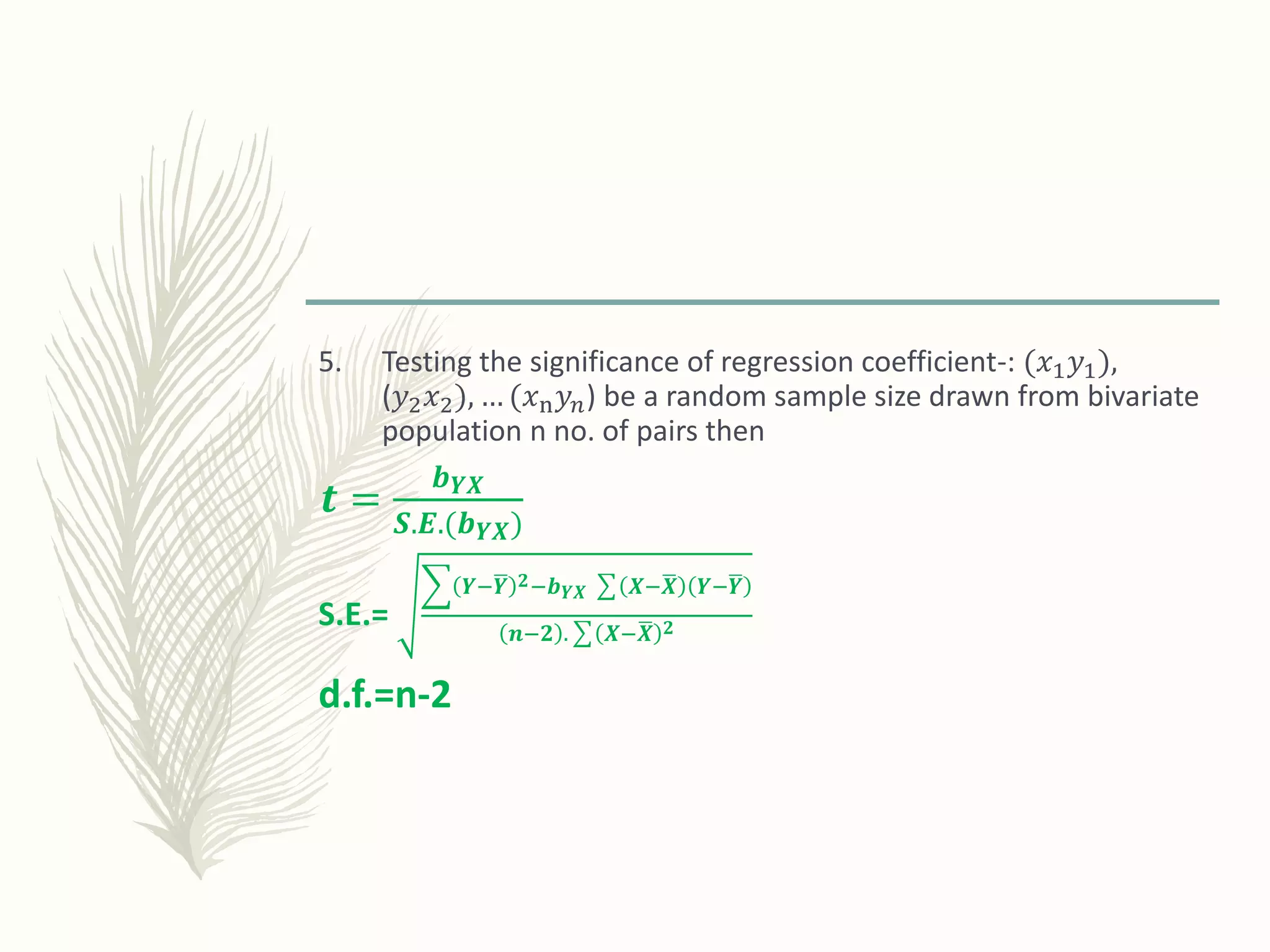

1) The t-test, which is used to test whether a sample mean differs significantly from a hypothesized population mean, or whether the difference between two sample means is significant.

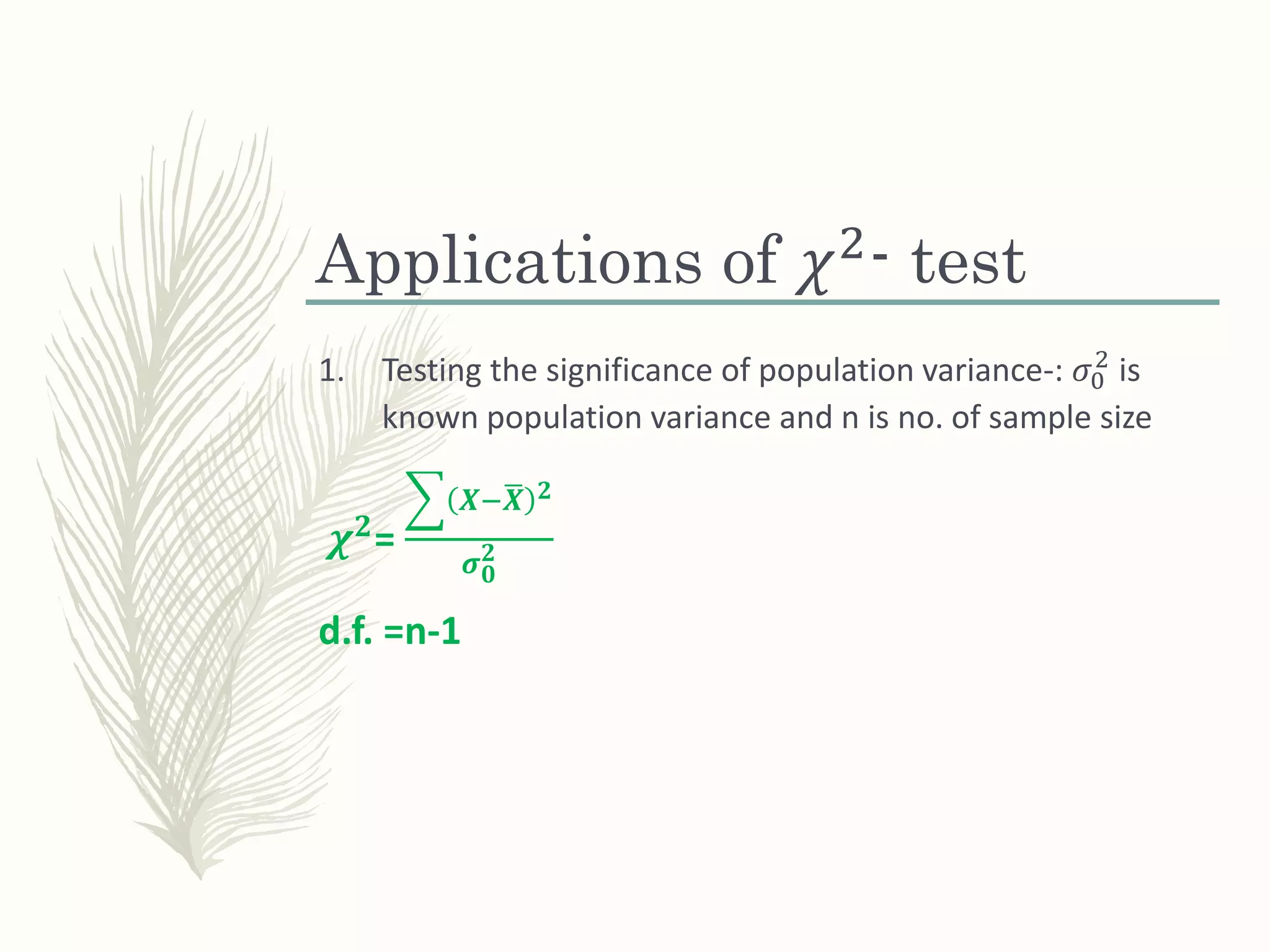

2) The chi-square test, which compares observed frequencies with expected frequencies and makes no assumption about the distribution of the underlying population (it is a non-parametric test). It is used for goodness-of-fit tests and tests of independence.

3) Analysis of variance (ANOVA), which tests for differences among two or more means by comparing the variance between groups with the variance within groups (the F ratio). It is used to evaluate whether experimental factors have a significant effect on the outcome.
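The test statistics for the three tests listed above can be sketched in plain Python. This is a minimal illustration, not the document's own procedure. It assumes small in-memory samples, the function names are hypothetical, and it computes only each statistic and its degrees of freedom. The p-value or critical value would still come from t, chi-square, or F tables (or from library routines such as `scipy.stats.ttest_1samp`, `scipy.stats.chisquare`, and `scipy.stats.f_oneway`).

```python
import math
from statistics import mean, stdev


def one_sample_t(sample, mu0):
    """Hypothetical helper: t statistic for H0: population mean == mu0, d.f. = n - 1."""
    n = len(sample)
    standard_error = stdev(sample) / math.sqrt(n)  # sample s.d. uses n - 1
    return (mean(sample) - mu0) / standard_error, n - 1


def chi_square_goodness_of_fit(observed, expected):
    """Hypothetical helper: sum((O - E)^2 / E).

    Assumes no parameters were estimated from the data, so d.f. = k - 1.
    """
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return stat, len(observed) - 1


def one_way_anova_f(*groups):
    """Hypothetical helper: F = between-group mean square / within-group mean square."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]
    # Variation of the group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variation of each observation around its own group mean
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, group_means))
    df_between, df_within = k - 1, n_total - k
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within


if __name__ == "__main__":
    print(one_sample_t([5, 7, 9], 5))                               # t is about 1.732 with 2 d.f.
    print(chi_square_goodness_of_fit([10, 20, 30], [20, 20, 20]))   # (10.0, 2)
    print(one_way_anova_f([1, 2, 3], [4, 5, 6], [7, 8, 9]))         # (27.0, 2, 6)
```

Each function returns the statistic together with its degrees of freedom, because both are needed to look up the critical value in the corresponding table.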

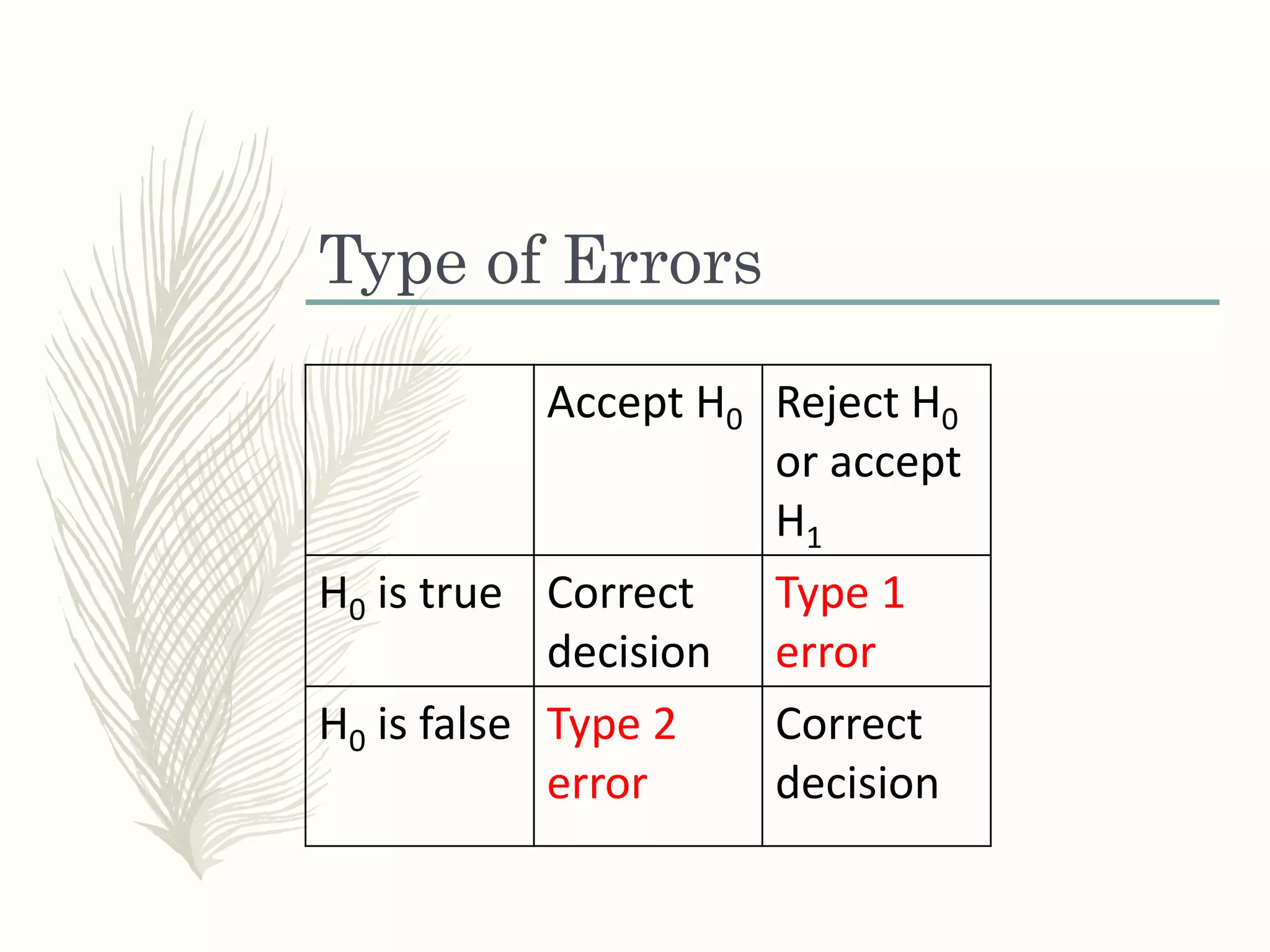

The document defines key terms such as null and alternative hypotheses, type I and type II errors, level of significance, and degrees of freedom. It also outlines the applications and procedures of each test.

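Expected frequencies for a contingency table with $m$ rows and $n$ columns:

- $C_1$ = sum of the first column
- $R_1$ = sum of the first row
- $N$ = grand total (sum of all row totals)

$$E(O_{11}) = \frac{R_1 C_1}{N}, \qquad E(O_{12}) = \frac{R_1 C_2}{N}, \qquad E(O_{21}) = \frac{R_2 C_1}{N}$$

The last expected frequency in a row or column can be found by subtraction, because the expected frequencies must add up to the row and column totals:

$$E(O_{1n}) = R_1 - \left[E(O_{11}) + E(O_{12}) + \dots + E(O_{1,n-1})\right]$$

$$E(O_{m1}) = C_1 - \left[E(O_{11}) + E(O_{21}) + \dots + E(O_{m-1,1})\right]$$

$$\text{d.f.} = (\text{rows} - 1)(\text{columns} - 1) = (m-1)(n-1)$$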
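The expected-frequency rule $E(O_{ij}) = R_i C_j / N$ and the degrees-of-freedom formula for a test of independence can be sketched in Python as follows. This is a minimal sketch that assumes the observed counts are given as a list of rows. The function names are hypothetical. The example also checks that the last cell of row 1 equals $R_1$ minus the other expected frequencies in that row.

```python
# Minimal sketch of the chi-square test of independence.
# Assumption: `table` is a list of rows of observed counts (hypothetical input format).


def expected_frequencies(table):
    """Return E(O_ij) = R_i * C_j / N for every cell of an m x n table."""
    row_totals = [sum(row) for row in table]        # R_1 .. R_m
    col_totals = [sum(col) for col in zip(*table)]  # C_1 .. C_n
    grand_total = sum(row_totals)                   # N
    return [[r * c / grand_total for c in col_totals] for r in row_totals]


def chi_square_independence(table):
    """Return (chi-square statistic, d.f.) with d.f. = (rows - 1)(columns - 1)."""
    expected = expected_frequencies(table)
    stat = sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(table, expected)
        for o, e in zip(obs_row, exp_row)
    )
    rows, cols = len(table), len(table[0])
    return stat, (rows - 1) * (cols - 1)


if __name__ == "__main__":
    observed = [[10, 20, 30],
                [20, 30, 10]]
    exp = expected_frequencies(observed)
    # Last cell of row 1 by subtraction: R_1 - [E(O_11) + E(O_12)]
    r1 = sum(observed[0])
    assert abs(exp[0][-1] - (r1 - sum(exp[0][:-1]))) < 1e-9
    print(exp)                                # [[15.0, 25.0, 20.0], [15.0, 25.0, 20.0]]
    print(chi_square_independence(observed))  # statistic is about 15.33 with 2 d.f.
```

For real analyses, `scipy.stats.chi2_contingency` returns the same statistic, the p-value, the degrees of freedom and the expected table for tables larger than 2 × 2. By default it applies Yates' continuity correction when there is only 1 degree of freedom.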