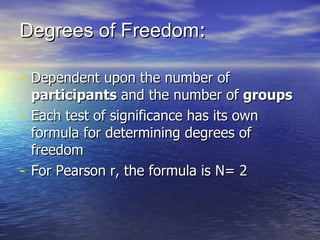

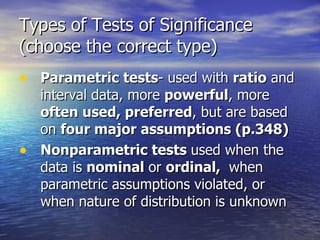

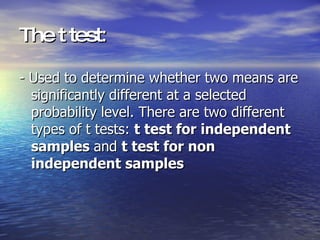

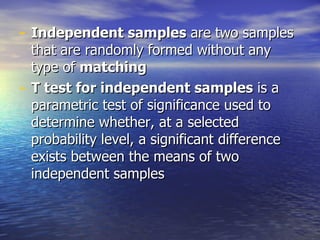

The document discusses inferential statistics, which are techniques for making inferences about populations based on sample data, emphasizing the importance of understanding concepts like standard error and types of hypothesis testing. It highlights key statistical tests such as t-tests, ANOVA, and chi-square tests, explaining their purposes and applications in determining significant differences among means. Additionally, the document outlines the concept of null hypotheses, type I and type II errors, and the conditions under which parametric and nonparametric tests should be used.