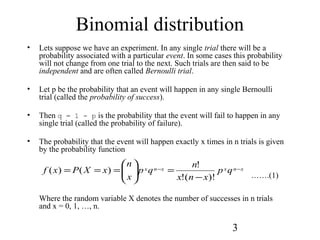

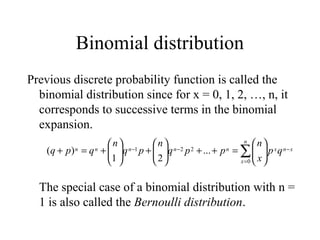

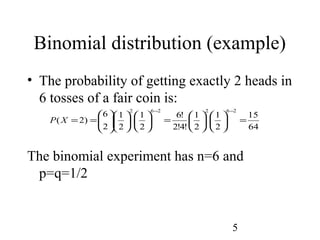

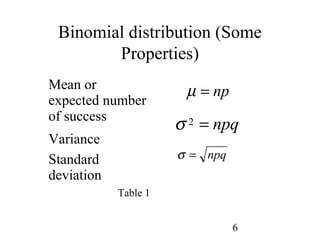

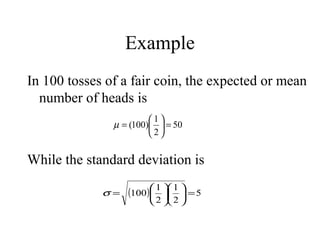

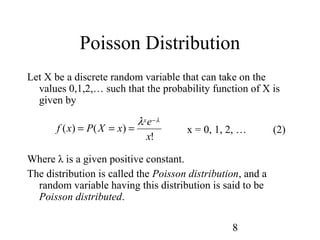

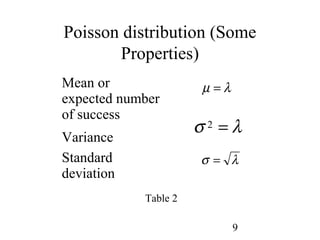

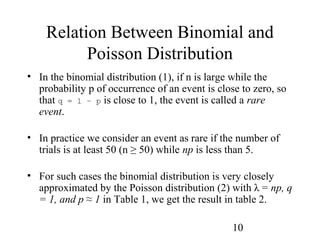

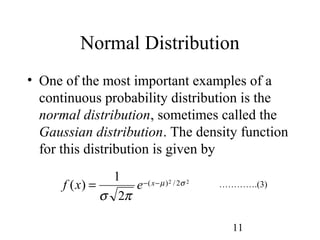

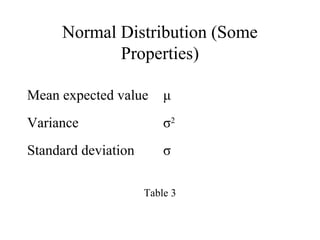

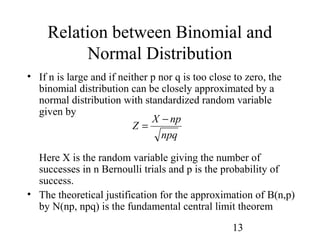

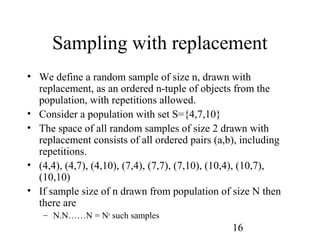

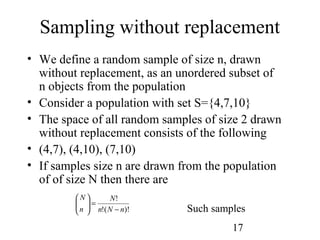

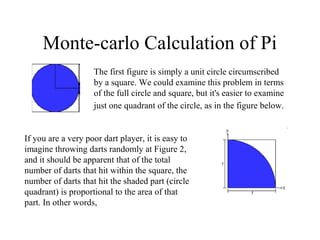

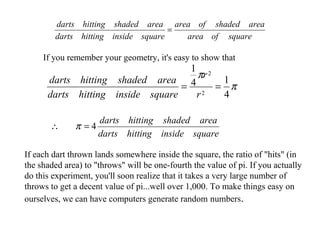

The document covers several probability distributions, including the binomial, Poisson, and normal distributions, and gives the definition and key properties of each. It also covers sampling with and without replacement, and the Monte Carlo method, which simulates physical systems by random sampling. As an example, the Monte Carlo method can estimate pi numerically by simulating darts thrown at a circular target: if the darts land uniformly on a square that encloses the circle, the fraction that land inside the circle approaches pi/4.
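The dart-throwing estimate of pi can be sketched in a few lines of Python. This is a minimal illustration, not code from the document itself: the function name `estimate_pi`, its parameters `num_darts` and `seed`, and the choice of a unit circle inscribed in the square from -1 to 1 on each axis are all assumptions made for the example.

```python
import random


def estimate_pi(num_darts, seed=None):
    """Estimate pi by throwing random darts at a square enclosing a unit circle.

    Hypothetical helper for illustration: the names and the unit-circle
    setup are assumptions, not taken from the source document.
    """
    if num_darts <= 0:
        raise ValueError("num_darts must be positive")

    rng = random.Random(seed)  # a private generator keeps runs reproducible
    hits = 0
    for _ in range(num_darts):
        # Each dart lands uniformly on the square [-1, 1] x [-1, 1].
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        # The dart hits the target if it falls inside the unit circle.
        if x * x + y * y <= 1.0:
            hits += 1

    # Area of circle / area of square = pi / 4, so scale the hit ratio by 4.
    return 4.0 * hits / num_darts


if __name__ == "__main__":
    for n in (1_000, 100_000):
        print(n, estimate_pi(n, seed=0))
```

The estimate improves slowly: its typical error shrinks in proportion to one over the square root of the number of darts, so a hundred times more darts buys roughly one more correct digit.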