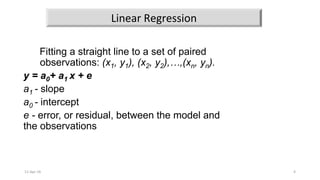

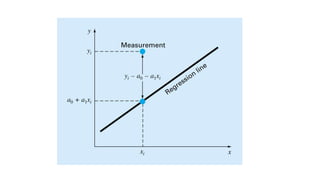

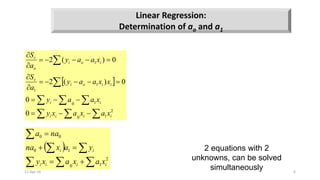

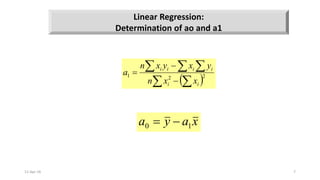

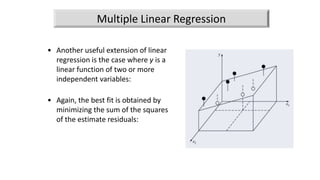

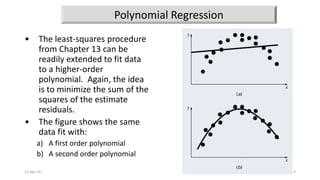

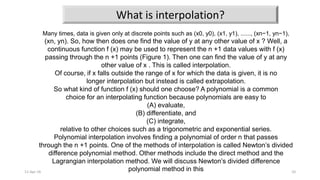

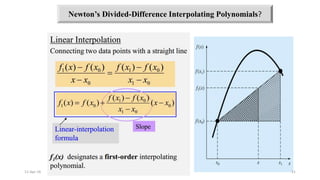

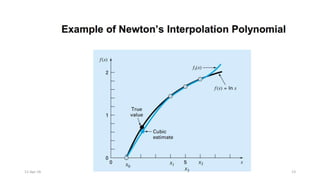

The document covers linear regression, multiple linear regression, and polynomial regression as methods for fitting models to data. It gives formulas for determining model parameters such as the slope and intercept, and discusses interpolation using Newton's divided difference method. It identifies minimizing the sum of squared errors as the criterion for the best fit.
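
As a rough illustration of two of the techniques named above, the following sketch fits a straight line by least squares and builds a Newton divided difference interpolant. It uses the standard textbook closed forms, which may differ in notation from the document's own formulas. The function names (`fit_line`, `sum_squared_error`, `newton_coefficients`, `newton_eval`) and the sample data are assumptions made for this example.

```python
# Minimal sketch in pure Python; all names and sample data are hypothetical.

def fit_line(xs, ys):
    """Return (slope, intercept) of the line minimizing the sum of squared errors."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = S_xy / S_xx, intercept = mean_y - slope * mean_x
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def sum_squared_error(xs, ys, slope, intercept):
    """Sum of squared residuals between the data and the fitted line."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


def newton_coefficients(xs, ys):
    """Return the divided differences f[x0], f[x0,x1], ..., f[x0..xn]."""
    coef = list(ys)
    n = len(xs)
    for order in range(1, n):
        # Update from the end so lower-order values are still available.
        for i in range(n - 1, order - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - order])
    return coef


def newton_eval(xs, coef, x):
    """Evaluate the Newton-form polynomial at x using nested multiplication."""
    result = coef[-1]
    for i in range(len(coef) - 2, -1, -1):
        result = result * (x - xs[i]) + coef[i]
    return result


if __name__ == "__main__":
    # Points lying exactly on y = 2x + 1, so the fit should recover that line.
    slope, intercept = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
    print(slope, intercept)

    # Points sampled from y = x^2 + x + 1.
    nodes = [0, 1, 2]
    coef = newton_coefficients(nodes, [1, 3, 7])
    print(newton_eval(nodes, coef, 3))
```

The regression fit minimizes the squared error over all points and generally does not pass through them, whereas the Newton interpolant passes through every node exactly. That makes interpolation better suited to data with little noise and regression better suited to noisy measurements.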