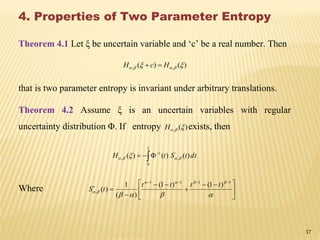

This document introduces a two-parameter entropy measure for uncertain variables, based on the two-parameter probabilistic entropy. It begins by reviewing concepts of uncertainty theory such as the uncertainty space, uncertain variables, and independence of uncertain variables. It then recalls the entropy of an uncertain variable, which is defined using the Shannon function, and summarizes previous work on the one-parameter entropy of uncertain variables. Finally, it proposes a new two-parameter entropy for uncertain variables, gives its definition with examples for specific uncertainty distributions, and discusses its properties.

![1. INTRODUCTION

The concept of entropy was introduced by [Shannon, 1948].

Let S be the sample space of a random experiment. Partition this sample space into a finite number of mutually exclusive events $E_1, E_2, \ldots, E_n$ whose respective probabilities are $p_1, p_2, \ldots, p_n$. Then the average amount of information, or Shannon's entropy, is defined as

$$H(P) = \sum_{i=1}^{n} p_i \,\mathrm{Inf}(E_i) = -\sum_{i=1}^{n} p_i \log p_i \qquad (1.1)$$

$H(P)$ is always non-negative. Its maximum value depends on $n$: it equals $\log n$ when all $p_i$ are equal.

Maximum Entropy Principle

](https://image.slidesharecdn.com/paperidh110a1-140716233325-phpapp02/85/Two-parameter-entropy-of-uncertain-variable-2-320.jpg)
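As a small numerical illustration of Eq. (1.1), the sketch below computes Shannon's entropy for a finite distribution and checks that the uniform distribution attains the value $\log n$. The function name `shannon_entropy` and the sample distributions are illustrative assumptions, not part of the original slides; the natural logarithm is used.

```python
import math


def shannon_entropy(probs):
    """Return H(P) = -sum_i p_i * log(p_i), using the convention 0 * log 0 = 0."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Terms with p == 0 are skipped, which implements 0 * log 0 = 0.
    return -sum(p * math.log(p) for p in probs if p > 0)


uniform = [0.25] * 4            # all p_i equal, n = 4
skewed = [0.7, 0.1, 0.1, 0.1]   # hypothetical non-uniform distribution

print("uniform:", shannon_entropy(uniform), "log n:", math.log(4))
print("skewed: ", shannon_entropy(skewed))
```

The uniform case reproduces $\log 4$, and any other distribution on four events gives a smaller value.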

![Cont…

[De Luca and Termini, 1972] defined a fuzzy entropy by using the Shannon function.

[Liu, 2009] proposed the concept of entropy of an uncertain variable, which characterizes the uncertainty of an uncertain variable resulting from information deficiency.

[Liu et al., 2012] proposed a one-parameter measure of entropy of an uncertain variable.

[Wada and Suyari, 2007] proposed a two-parameter generalization of the Shannon-Khinchin axioms and proved that the entropy function satisfying the two-parameter generalized Shannon-Khinchin axioms is given by Eq. (1.9),

$$S_{\alpha,\beta}(p_1, p_2, \ldots, p_n) = \sum_{i=1}^{n} \frac{p_i^{\alpha} - p_i^{\beta}}{C_{\alpha,\beta}} \qquad (1.9)$$

where $C_{\alpha,\beta}$ is a function of $\alpha$ and $\beta$ satisfying certain conditions.

The one-parameter [Tsallis, 1988] entropy and the [Shannon, 1948] entropy are recovered for specific values of $\alpha$ and $\beta$.](https://image.slidesharecdn.com/paperidh110a1-140716233325-phpapp02/85/Two-parameter-entropy-of-uncertain-variable-7-320.jpg)
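The recovery of the Tsallis and Shannon entropies from Eq. (1.9) can be checked numerically. The sketch below is only an illustration: the slides do not specify $C_{\alpha,\beta}$, so the choice $C_{\alpha,\beta} = \beta - \alpha$ is an assumption made here, and the function names are hypothetical. With that choice, $\alpha = 1$ gives the Tsallis form $(1 - \sum_i p_i^{\beta})/(\beta - 1)$, and letting $\beta \to 1$ as well approaches Shannon's entropy.

```python
import math


def two_parameter_entropy(probs, alpha, beta):
    """S_{alpha,beta}(p) = sum_i (p_i**alpha - p_i**beta) / C_{alpha,beta}.

    ASSUMPTION: C_{alpha,beta} = beta - alpha, one possible normalization;
    the source only says that C satisfies certain conditions.
    """
    if alpha == beta:
        raise ValueError("alpha and beta must differ for this choice of C")
    c = beta - alpha
    return sum(p ** alpha - p ** beta for p in probs if p > 0) / c


def tsallis_entropy(probs, q):
    """One-parameter Tsallis entropy (1 - sum_i p_i**q) / (q - 1)."""
    return (1.0 - sum(p ** q for p in probs if p > 0)) / (q - 1.0)


def shannon_entropy(probs):
    """Shannon entropy with natural logarithm and 0 * log 0 = 0."""
    return -sum(p * math.log(p) for p in probs if p > 0)


p = [0.5, 0.3, 0.2]  # hypothetical distribution
print(two_parameter_entropy(p, 1.0, 2.0), tsallis_entropy(p, 2.0))
print(two_parameter_entropy(p, 1.0, 1.0 + 1e-6), shannon_entropy(p))
```

Each printed pair should agree closely, the second only up to the small offset of $\beta$ from 1.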

![Cont…

Uncertainty theory was founded by [Liu, 2007] and refined by [Liu, 2010].

Uncertainty theory has been developed in many directions, such as uncertain process [Liu, 2008], uncertain calculus, uncertain differential equation [Liu, 2009], uncertain risk analysis [Liu, 2010a], uncertain inference [Liu, 2010b], uncertain statistics [Liu, 2010c] and uncertain logic [Li and Liu, 2009].

[Liu, 2009] proposed the definition of uncertain entropy resulting from information deficiency. [Dai and Chen, 2009] investigated the properties of the entropy of a function of uncertain variables and introduced the maximum entropy principle for uncertain variables.

Inspired by the two-parameter probabilistic entropy, this paper introduces a new two-parameter entropy in the framework of uncertainty theory and discusses its properties.](https://image.slidesharecdn.com/paperidh110a1-140716233325-phpapp02/85/Two-parameter-entropy-of-uncertain-variable-8-320.jpg)

![2. Preliminaries

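Let $\Gamma$ be a non-empty set and $\mathcal{L}$ a $\sigma$-algebra over $\Gamma$. Each element $\Lambda \in \mathcal{L}$ is called an event. The uncertain measure $M$ was introduced as a set function satisfying the following five axioms [Liu, 2007]:

Axiom 1. (Normality Axiom) $M\{\Gamma\} = 1$ for the universal set $\Gamma$.

Axiom 2. (Monotonicity Axiom) $M\{\Lambda_1\} \le M\{\Lambda_2\}$ whenever $\Lambda_1 \subset \Lambda_2$.

Axiom 3. (Self-Duality Axiom) $M\{\Lambda\} + M\{\Lambda^c\} = 1$ for any event $\Lambda$.

Axiom 4. (Countable Subadditivity Axiom) For every countable sequence of events $\{\Lambda_i\}$, we have

$$M\Big\{\bigcup_{i=1}^{\infty} \Lambda_i\Big\} \le \sum_{i=1}^{\infty} M\{\Lambda_i\}.$$

Axiom 5. (Product Measure Axiom) Let $\Gamma_k$ be non-empty sets on which $M_k$ are uncertain measures, $k = 1, 2, \ldots, n$, respectively. Then the product uncertain measure $M$ on the product $\sigma$-algebra $\mathcal{L}_1 \times \mathcal{L}_2 \times \cdots \times \mathcal{L}_n$ satisfies

$$M\Big\{\prod_{k=1}^{n} \Lambda_k\Big\} = \min_{1 \le k \le n} M_k\{\Lambda_k\},$$

where $\Lambda_k \in \mathcal{L}_k$, $k = 1, 2, \ldots, n$.](https://image.slidesharecdn.com/paperidh110a1-140716233325-phpapp02/85/Two-parameter-entropy-of-uncertain-variable-9-320.jpg)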

![Cont…

Definition 2.1 [Liu, 2007] Let $\Gamma$ be a non-empty set, $\mathcal{L}$ a $\sigma$-algebra over $\Gamma$, and $M$ an uncertain measure. Then the triplet $(\Gamma, \mathcal{L}, M)$ is called an uncertainty space.

Definition 2.2 [Liu, 2007] An uncertain variable is a measurable function from an uncertainty space $(\Gamma, \mathcal{L}, M)$ to the set of real numbers.

Definition 2.3 [Liu, 2007] The uncertainty distribution $\Phi$ of an uncertain variable $\xi$ is defined by

$$\Phi(x) = M\{\xi \le x\}.$$

Theorem 2.1 (Sufficient and Necessary Condition for Uncertainty Distribution [Peng and Iwamura, 2010]) A function $\Phi$ is an uncertainty distribution if and only if it is an increasing function other than $\Phi(x) \equiv 0$ and $\Phi(x) \equiv 1$.

Example 2.1 An uncertain variable $\xi$ is called normal if it has the normal uncertainty distribution

$$\Phi(x) = \left(1 + \exp\left(\frac{\pi (e - x)}{\sqrt{3}\,\sigma}\right)\right)^{-1},$$

denoted by $N(e, \sigma)$, where $e$ and $\sigma$ are real numbers with $\sigma > 0$. Then we recall the definition of inverse uncertainty distribution as follows.](https://image.slidesharecdn.com/paperidh110a1-140716233325-phpapp02/85/Two-parameter-entropy-of-uncertain-variable-10-320.jpg)
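The normal uncertainty distribution of Example 2.1 is easy to evaluate directly. The sketch below implements $\Phi(x)$ for $N(e, \sigma)$ together with its inverse, obtained here by solving $\Phi(x) = t$ for $x$, which gives $\Phi^{-1}(t) = e + \frac{\sqrt{3}\,\sigma}{\pi} \ln\frac{t}{1-t}$. The inverse formula is derived for this illustration rather than quoted from the slides, and the function names and the overflow guard are assumptions.

```python
import math


def normal_cdf(x, e, sigma):
    """Normal uncertainty distribution Phi(x) = 1 / (1 + exp(pi*(e - x) / (sqrt(3)*sigma)))."""
    z = math.pi * (e - x) / (math.sqrt(3.0) * sigma)
    if z > 700.0:  # guard added here: math.exp would overflow, and Phi is ~0 anyway
        return 0.0
    return 1.0 / (1.0 + math.exp(z))


def normal_inverse(t, e, sigma):
    """Inverse distribution, from solving Phi(x) = t for x (valid for 0 < t < 1)."""
    if not 0.0 < t < 1.0:
        raise ValueError("t must lie strictly between 0 and 1")
    return e + sigma * math.sqrt(3.0) / math.pi * math.log(t / (1.0 - t))


e, sigma = 2.0, 1.5  # hypothetical parameters
print(normal_cdf(e, e, sigma))  # Phi at x = e
for x in (-1.0, 2.0, 4.5):
    t = normal_cdf(x, e, sigma)
    print(x, t, normal_inverse(t, e, sigma))  # round trip returns x up to rounding
```

At $x = e$ the exponent vanishes, so $\Phi(e) = 1/2$ for every $\sigma > 0$.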

![Cont…

Definition 2.6 (Independence of uncertain variables [Liu, 2007]) The uncertain variables $\xi_1, \xi_2, \ldots, \xi_n$ are said to be independent if

$$M\Big\{\bigcap_{i=1}^{n} (\xi_i \in B_i)\Big\} = \min_{1 \le i \le n} M\{\xi_i \in B_i\}$$

for any Borel sets $B_1, B_2, \ldots, B_n$ of real numbers.

Theorem 2.2 [Liu, 2007] Assume ξ1, ξ2, . . . , ξn are independent uncertain variables with regular uncertainty distributions Φ1, Φ2, . . . , Φn, respectively. If $f: \mathbb{R}^n \to \mathbb{R}$ is a strictly increasing function, then the uncertain variable ξ = f(ξ1, ξ2, . . . , ξn) has inverse uncertainty distribution

$$\Psi^{-1}(t) = f\big(\Phi_1^{-1}(t), \Phi_2^{-1}(t), \ldots, \Phi_n^{-1}(t)\big), \quad 0 < t < 1.$$

Theorem 2.3 [Liu, 2007] Assume ξ1, ξ2, . . . , ξn are independent uncertain variables with regular uncertainty distributions Φ1, Φ2, . . . , Φn, respectively. If $f: \mathbb{R}^n \to \mathbb{R}$ is a strictly decreasing function, then the uncertain variable ξ = f(ξ1, ξ2, . . . , ξn) has inverse uncertainty distribution

$$\Psi^{-1}(t) = f\big(\Phi_1^{-1}(1-t), \Phi_2^{-1}(1-t), \ldots, \Phi_n^{-1}(1-t)\big), \quad 0 < t < 1.$$](https://image.slidesharecdn.com/paperidh110a1-140716233325-phpapp02/85/Two-parameter-entropy-of-uncertain-variable-11-320.jpg)
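Theorems 2.2 and 2.3 can be tried out on normal uncertain variables. The sketch below is an illustration under assumptions: the helper names are hypothetical, and the inverse of $N(e, \sigma)$ is taken as $e + \frac{\sqrt{3}\,\sigma}{\pi}\ln\frac{t}{1-t}$, which follows from solving the normal uncertainty distribution for $x$. For the increasing function $f(x, y) = x + y$ the result should coincide with the inverse of $N(e_1 + e_2, \sigma_1 + \sigma_2)$, and for the decreasing function $f(x, y) = -(x + y)$ with that of $N(-(e_1 + e_2), \sigma_1 + \sigma_2)$.

```python
import math


def normal_inverse(e, sigma):
    """Return the map t -> Phi^{-1}(t) for the normal uncertain variable N(e, sigma)."""
    k = sigma * math.sqrt(3.0) / math.pi
    return lambda t: e + k * math.log(t / (1.0 - t))


def inverse_increasing(f, inverses):
    """Theorem 2.2: Psi^{-1}(t) = f(Phi_1^{-1}(t), ..., Phi_n^{-1}(t))."""
    return lambda t: f(*(inv(t) for inv in inverses))


def inverse_decreasing(f, inverses):
    """Theorem 2.3: Psi^{-1}(t) = f(Phi_1^{-1}(1 - t), ..., Phi_n^{-1}(1 - t))."""
    return lambda t: f(*(inv(1.0 - t) for inv in inverses))


# Two hypothetical independent normal uncertain variables.
xi1 = normal_inverse(1.0, 2.0)
xi2 = normal_inverse(3.0, 0.5)

total = inverse_increasing(lambda x, y: x + y, [xi1, xi2])
negated = inverse_decreasing(lambda x, y: -(x + y), [xi1, xi2])

for t in (0.1, 0.5, 0.9):
    print(t, total(t), negated(t))
```

Both theorems require regular distributions and $0 < t < 1$; the helpers do not check either condition.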

![Cont…

Theorem 2.4 ([Liu, 2007]) Let $\xi_1, \xi_2, \ldots, \xi_n$ be independent uncertain variables with regular uncertainty distributions $\Phi_1, \Phi_2, \ldots, \Phi_n$, respectively. If $f: \mathbb{R}^n \to \mathbb{R}$ is strictly increasing with respect to $x_1, x_2, \ldots, x_m$ and strictly decreasing with respect to $x_{m+1}, x_{m+2}, \ldots, x_n$, then $\xi = f(\xi_1, \xi_2, \ldots, \xi_n)$ is an uncertain variable with inverse uncertainty distribution

$$\Psi^{-1}(t) = f\big(\Phi_1^{-1}(t), \ldots, \Phi_m^{-1}(t), \Phi_{m+1}^{-1}(1-t), \ldots, \Phi_n^{-1}(1-t)\big), \quad 0 < t < 1.$$](https://image.slidesharecdn.com/paperidh110a1-140716233325-phpapp02/85/Two-parameter-entropy-of-uncertain-variable-12-320.jpg)
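The inverse distribution in Theorem 2.4 evaluates the first $m$ inverse distributions at $t$ and the remaining ones at $1 - t$, which is the case where $f$ increases in the first $m$ arguments and decreases in the rest. A minimal sketch of that rule follows, using the difference $f(x, y) = x - y$ of two normal uncertain variables. The helper names are hypothetical, and the normal inverse $e + \frac{\sqrt{3}\,\sigma}{\pi}\ln\frac{t}{1-t}$ is an assumption derived by solving the normal uncertainty distribution for $x$.

```python
import math


def normal_inverse(e, sigma):
    """Return the map t -> Phi^{-1}(t) for the normal uncertain variable N(e, sigma)."""
    k = sigma * math.sqrt(3.0) / math.pi
    return lambda t: e + k * math.log(t / (1.0 - t))


def inverse_mixed(f, increasing_inverses, decreasing_inverses):
    """Psi^{-1}(t) for f increasing in its first arguments and decreasing in the rest."""
    def psi_inv(t):
        args = [inv(t) for inv in increasing_inverses]          # evaluated at t
        args += [inv(1.0 - t) for inv in decreasing_inverses]   # evaluated at 1 - t
        return f(*args)
    return psi_inv


# f(x, y) = x - y is increasing in x and decreasing in y.
difference = inverse_mixed(
    lambda x, y: x - y,
    [normal_inverse(5.0, 1.0)],
    [normal_inverse(2.0, 0.5)],
)

for t in (0.2, 0.5, 0.8):
    print(t, difference(t))
```

For these inputs the values agree with the inverse of $N(3, 1.5)$: the expected values subtract while the $\sigma$ parameters add.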

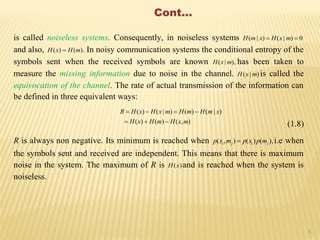

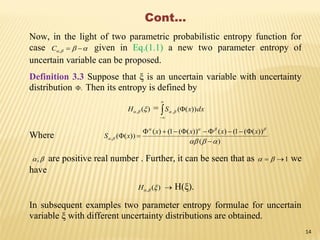

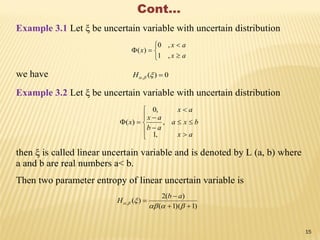

![3. Two Parameter Entropy

In this section, we introduce the definition and results of the two-parameter entropy of an uncertain variable. For this purpose, we recall the entropy of an uncertain variable proposed by [Liu, 2009].

Definition 3.1 ([Liu, 2009]) Suppose that ξ is an uncertain variable with uncertainty distribution $\Phi$. Then its entropy is defined by

$$H(\xi) = \int_{-\infty}^{+\infty} S(\Phi(x))\, dx,$$

where

$$S(\Phi(x)) = -\Phi(x) \ln \Phi(x) - (1 - \Phi(x)) \ln(1 - \Phi(x)).$$

We set $0 \ln 0 = 0$ throughout this paper. Inspired by the [Tsallis, 1988] entropy, [Liu et al., 2012] defined the single-parameter entropy.

Definition 3.2 ([Liu et al., 2012]) Suppose that ξ is an uncertain variable with uncertainty distribution $\Phi$. Then its entropy is defined by

$$H_q(\xi) = \int_{-\infty}^{+\infty} S_q(\Phi(x))\, dx,$$

where

$$S_q(\Phi(x)) = \frac{1 - (\Phi(x))^q - (1 - \Phi(x))^q}{q - 1}$$

and $q \ne 1$ is a positive real number. Further, it can be seen that $H_q(\xi) \to H(\xi)$ as $q \to 1$.](https://image.slidesharecdn.com/paperidh110a1-140716233325-phpapp02/85/Two-parameter-entropy-of-uncertain-variable-13-320.jpg)
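Definitions 3.1 and 3.2 can be evaluated numerically. The sketch below integrates $S(\Phi(x))$ and $S_q(\Phi(x))$ for a normal uncertain variable $N(e, \sigma)$ with a plain trapezoidal rule. The function names, the truncation of the integral to $[e - 40\sigma, e + 40\sigma]$ and the step count are assumptions made for this illustration. For comparison, substituting $t = \Phi(x)$ gives the closed forms $H = \pi\sigma/\sqrt{3}$ and $H_2 = 2\sqrt{3}\,\sigma/\pi$ for the normal case.

```python
import math


def normal_cdf(x, e, sigma):
    """Normal uncertainty distribution N(e, sigma)."""
    z = math.pi * (e - x) / (math.sqrt(3.0) * sigma)
    return 0.0 if z > 700.0 else 1.0 / (1.0 + math.exp(z))


def s_log(t):
    """S(t) = -t ln t - (1 - t) ln(1 - t), with the convention 0 ln 0 = 0."""
    total = 0.0
    for u in (t, 1.0 - t):
        if u > 0.0:
            total -= u * math.log(u)
    return total


def s_q(t, q):
    """S_q(t) = (1 - t**q - (1 - t)**q) / (q - 1), for q > 0 and q != 1."""
    return (1.0 - t ** q - (1.0 - t) ** q) / (q - 1.0)


def trapezoid(g, lo, hi, steps=20000):
    """Composite trapezoidal rule for the integral of g over [lo, hi]."""
    h = (hi - lo) / steps
    total = 0.5 * (g(lo) + g(hi))
    for k in range(1, steps):
        total += g(lo + k * h)
    return total * h


def entropy(cdf, lo, hi, q=None):
    """H (q is None) or H_q of the variable with distribution cdf, truncated to [lo, hi]."""
    s = s_log if q is None else (lambda t: s_q(t, q))
    return trapezoid(lambda x: s(cdf(x)), lo, hi)


e, sigma = 0.0, 1.0  # hypothetical parameters
phi = lambda x: normal_cdf(x, e, sigma)
lo, hi = e - 40.0 * sigma, e + 40.0 * sigma

h = entropy(phi, lo, hi)
h2 = entropy(phi, lo, hi, q=2.0)
h_near_1 = entropy(phi, lo, hi, q=1.0001)
print(h, math.pi * sigma / math.sqrt(3.0))
print(h2, 2.0 * math.sqrt(3.0) * sigma / math.pi)
print(h_near_1)  # close to h, illustrating H_q -> H as q -> 1
```

The truncation is harmless here because $S(\Phi(x))$ decays exponentially in both tails of the normal uncertainty distribution.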

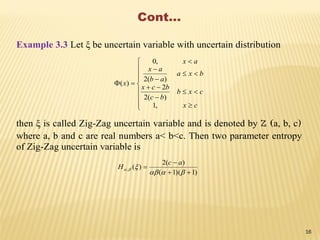

![Cont…

Theorem 4.3 Let ξ1, ξ2, . . . , ξn be independent uncertain variables with regular uncertainty distributions Φ1, Φ2, . . . , Φn, respectively. If $f: \mathbb{R}^n \to \mathbb{R}$ is a strictly monotone function, then the uncertain variable ξ = f(ξ1, ξ2, . . . , ξn) has an entropy

$$H_{\alpha,\beta}(\xi) = \int_0^1 f\big(\Phi_1^{-1}(t), \Phi_2^{-1}(t), \ldots, \Phi_n^{-1}(t)\big)\, S_{\alpha,\beta}(t)\, dt.$$

Theorem 4.4 Let ξ and η be independent uncertain variables. Then for any real numbers a and b,

$$H_{\alpha,\beta}[a\xi + b\eta] = |a|\, H_{\alpha,\beta}[\xi] + |b|\, H_{\alpha,\beta}[\eta].$$](https://image.slidesharecdn.com/paperidh110a1-140716233325-phpapp02/85/Two-parameter-entropy-of-uncertain-variable-18-320.jpg)

![Conclusions

In this paper, the entropy of an uncertain variable and its properties are recalled. On the basis of this entropy, and inspired by the two-parameter probabilistic entropy (1.9), a two-parameter entropy of an uncertain variable is introduced and several of its important properties are explored. The two-parameter entropy proposed in this paper makes the calculation of the uncertainty of an uncertain variable more general and flexible through an appropriate choice of the parameters α and β. Further, the results obtained by [Liu et al., 2012] for the single-parameter entropy of an uncertain variable and by [Dai and Chen, 2009] for the entropy of an uncertain variable are special cases of the results obtained in this paper.](https://image.slidesharecdn.com/paperidh110a1-140716233325-phpapp02/85/Two-parameter-entropy-of-uncertain-variable-19-320.jpg)

![References

[1] B. Liu, Fuzzy Process, Hybrid Process and Uncertain Process, Journal of Uncertain Systems, 2(1) (2008) 3-16.

[2] B. Liu, Some Research Problems in Uncertainty Theory, Journal of Uncertain Systems, 3(1) (2009) 3-10.

[3] B. Liu, Uncertain Risk Analysis and Uncertain Reliability Analysis, Journal of Uncertain Systems, 4(3) (2010a) 163-170.

[4] B. Liu, Uncertain Set Theory and Uncertain Inference Rule with Application to Uncertain Control, Journal of Uncertain Systems, 4(2) (2010b) 83-98.

[5] B. Liu, Uncertainty Theory, 2nd Edition, Springer-Verlag, Berlin, 2007.

[6] B. Liu, Uncertainty Theory: A Branch of Mathematics for Modeling Human Uncertainty, Springer-Verlag, Berlin, 2010c.

[7] C. Shannon, The Mathematical Theory of Communication, The University of Illinois Press, Urbana, 1949.

[8] C. Tsallis, Possible Generalization of Boltzmann-Gibbs Statistics, Journal of Statistical Physics, 52(1-2) (1988) 479-487.](https://image.slidesharecdn.com/paperidh110a1-140716233325-phpapp02/85/Two-parameter-entropy-of-uncertain-variable-20-320.jpg)

![[9] A. De Luca and S. Termini, A Definition of Non-probabilistic Entropy in the Setting of Fuzzy Sets Theory, Information and Control, 20 (1972) 301-312.

[10] J. Liu, L. Lin, S. Wu, Single Parameter Entropy of Uncertain Variables, Applied Mathematics, 3 (2012) 888-894.

[11] T. Wada and H. Suyari, A Two-parameter Generalization of Shannon-Khinchin Axioms and the Uniqueness Theorem, Physics Letters A, 368 (2007) 199-205.

[12] W. Dai and X. Chen, Entropy of Function of Uncertain Variables, Technical Report, 2009.

[13] W. Dai, Maximum Entropy Principle of Quadratic Entropy of Uncertain Variables, Technical Report, 2010.

[14] X. Li and B. Liu, Hybrid Logic and Uncertain Logic, Journal of Uncertain Systems, 3(2) (2009) 83-94.

[15] Z. X. Peng and K. Iwamura, A Sufficient and Necessary Condition of Uncertainty Distribution, Journal of Inter-disciplinary Mathematics, 13(3) (2010) 277-285.](https://image.slidesharecdn.com/paperidh110a1-140716233325-phpapp02/85/Two-parameter-entropy-of-uncertain-variable-21-320.jpg)