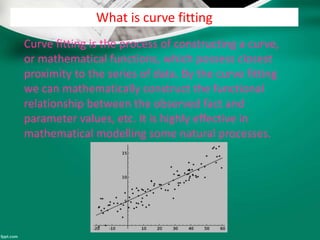

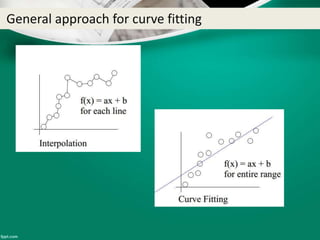

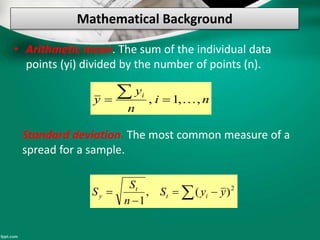

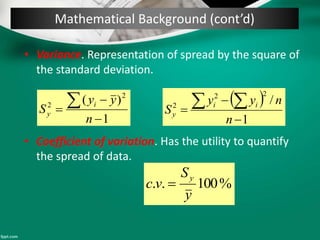

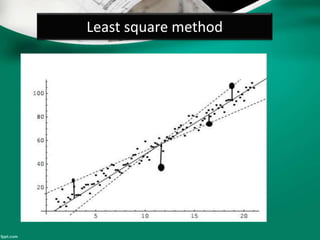

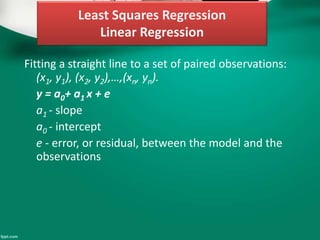

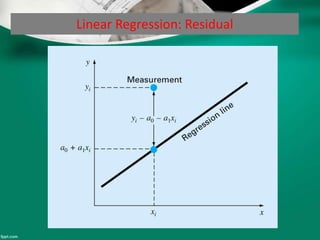

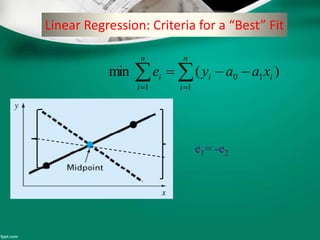

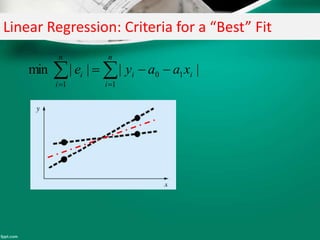

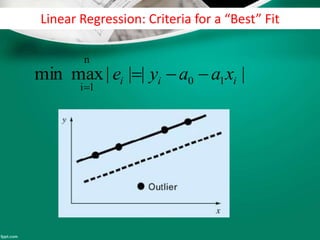

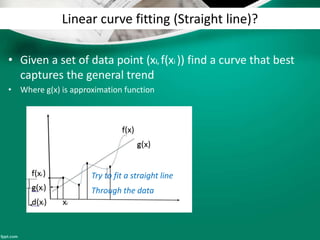

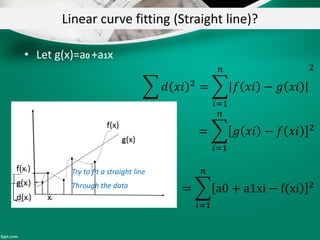

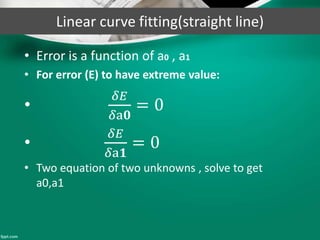

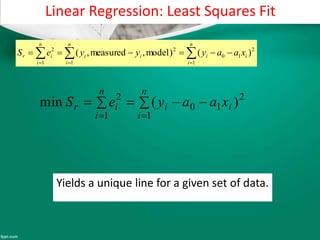

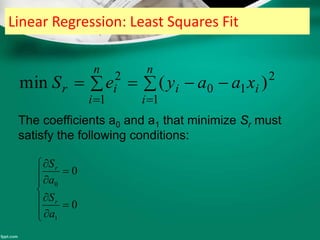

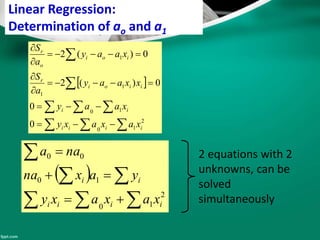

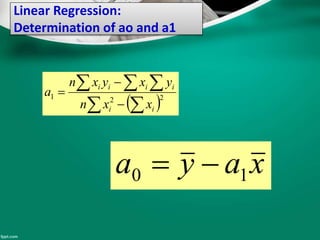

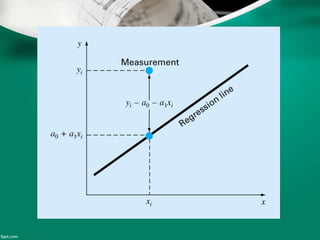

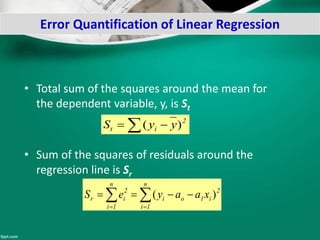

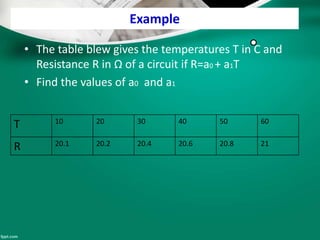

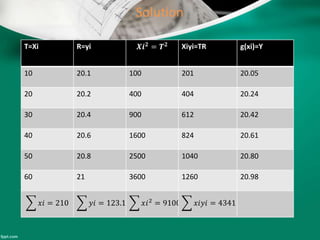

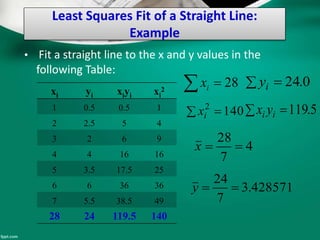

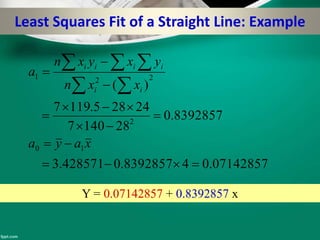

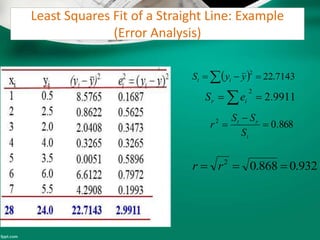

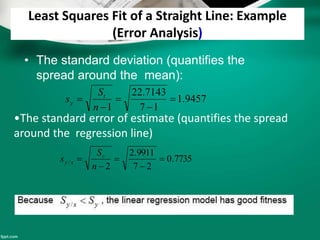

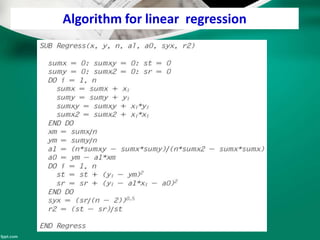

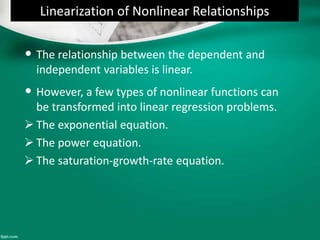

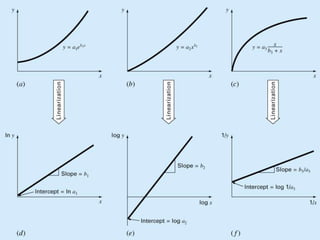

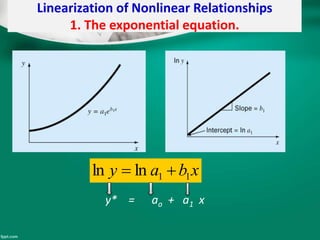

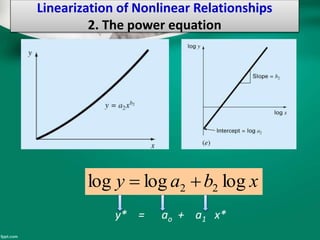

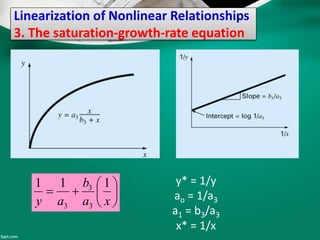

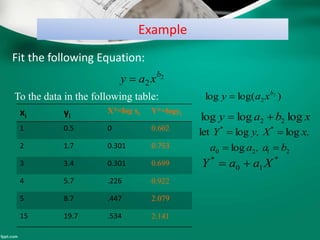

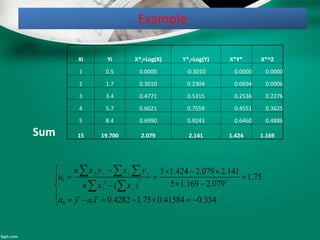

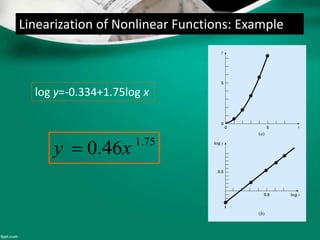

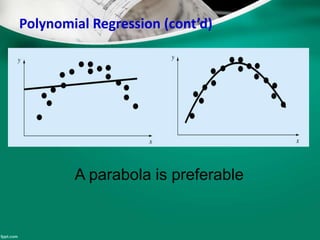

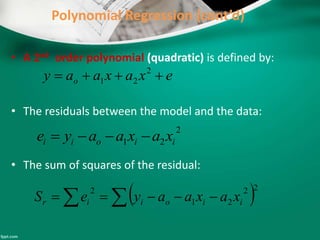

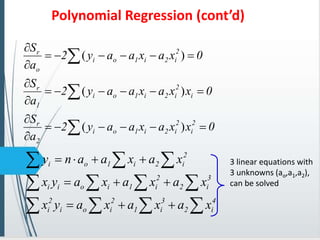

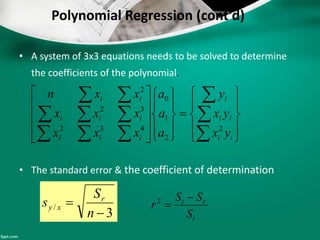

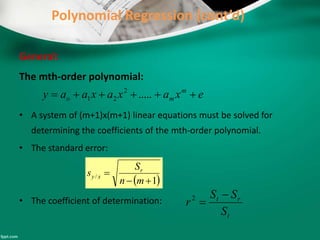

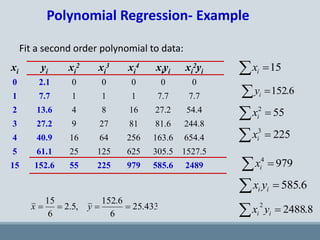

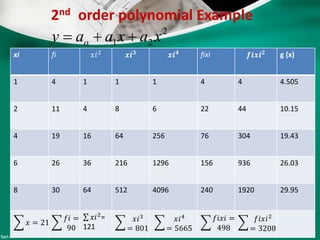

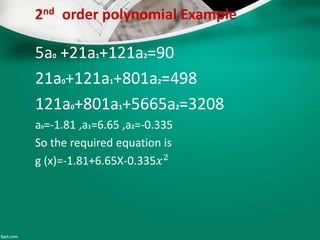

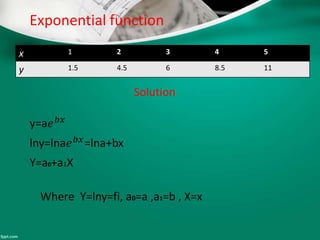

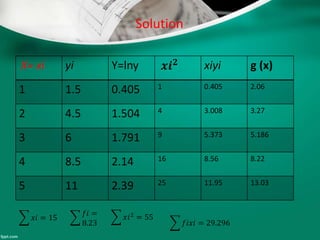

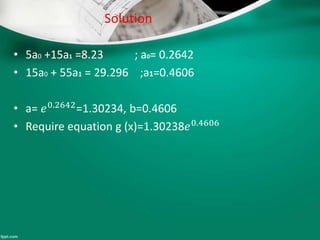

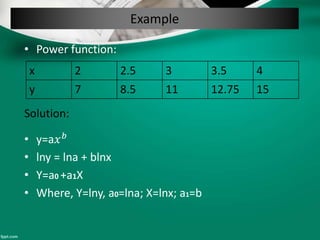

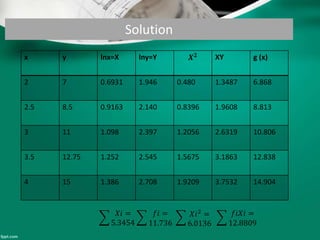

Curve fitting is the process of finding the mathematical function that best fits a series of data points. It involves constructing a curve or equation that models the relationship between a dependent variable and one or more independent variables. The most common approach is the least squares method, which chooses the curve parameters that minimize the sum of the squared residuals, that is, the squared differences between each observed value and the value the curve predicts at the same point. The result is a single curve that represents the overall trend of the data rather than one that passes through every point. Examples of linear and nonlinear curve fitting are provided, along with the process of linearizing a nonlinear relationship (for example, by taking logarithms) so that linear regression techniques can be applied.
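The two ideas in the summary, a least squares straight-line fit and linearization of a nonlinear model, can be sketched in a few lines of Python. This is a minimal illustration and is not taken from the slides: the function names `fit_line`, `fit_exponential`, and `sum_squared_residuals`, the choice of the exponential model y = a·e^(bx), and the sample data are all assumptions made for the example.

```python
import math


def fit_line(xs, ys):
    """Least squares fit of y = a0 + a1*x. Returns (a0, a1)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    sx = sum(xs)
    sy = sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    denom = n * sxx - sx * sx
    if denom == 0:
        raise ValueError("all x values are identical; slope is undefined")
    # Closed-form solution of the normal equations for a straight line.
    a1 = (n * sxy - sx * sy) / denom
    a0 = (sy - a1 * sx) / n
    return a0, a1


def fit_exponential(xs, ys):
    """Fit y = a*exp(b*x) by linearizing: ln(y) = ln(a) + b*x.

    Assumes every y is strictly positive. Returns (a, b).
    """
    ln_a, b = fit_line(xs, [math.log(y) for y in ys])
    return math.exp(ln_a), b


def sum_squared_residuals(xs, ys, model):
    """Sum of squared differences between observed y and model(x)."""
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys))


if __name__ == "__main__":
    # Hypothetical, roughly linear sample data.
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.1, 4.9, 7.2, 8.8]
    a0, a1 = fit_line(xs, ys)
    print("line: y = %.3f + %.3f x" % (a0, a1))
    print("SSR:", sum_squared_residuals(xs, ys, lambda x: a0 + a1 * x))

    # Hypothetical data generated from y = 2*exp(0.5*x).
    ys_exp = [2.0 * math.exp(0.5 * x) for x in xs]
    a, b = fit_exponential(xs, ys_exp)
    print("exponential: y = %.3f * exp(%.3f x)" % (a, b))
```

Note that the linearized fit minimizes squared residuals in ln(y), not in y itself, so on noisy data its parameters can differ from those of a direct nonlinear least squares fit.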