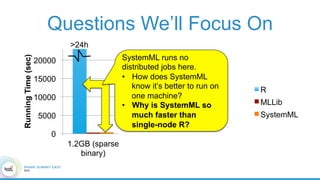

1. The document describes the origins and goals of the SystemML project for scalable machine learning.

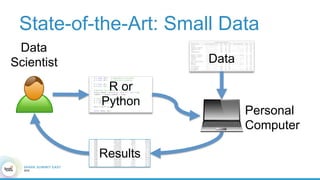

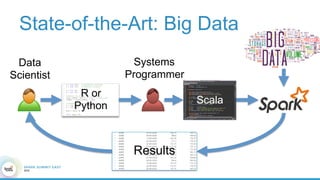

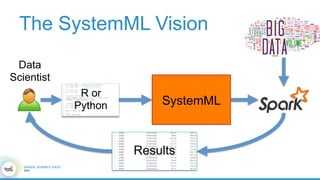

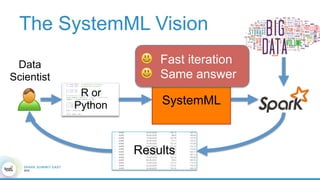

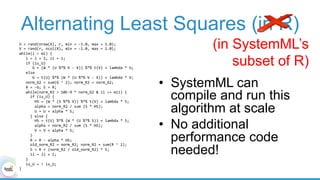

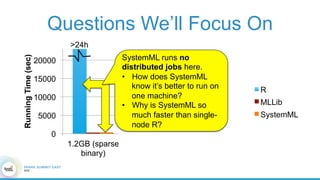

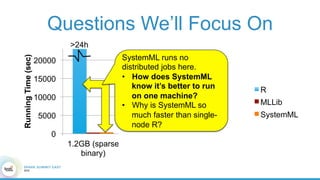

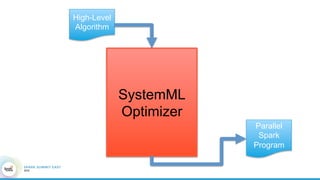

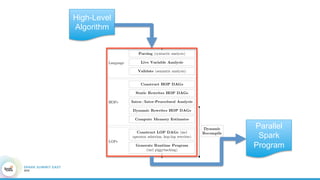

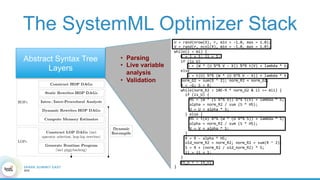

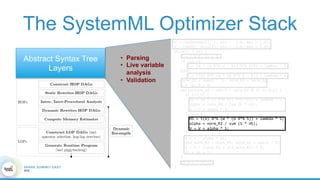

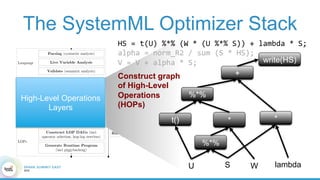

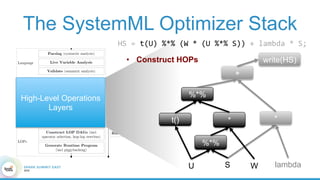

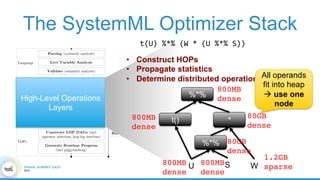

2. SystemML was created so that data scientists can write machine learning algorithms in R and have them automatically compiled and optimized to run efficiently, in parallel, on large datasets.

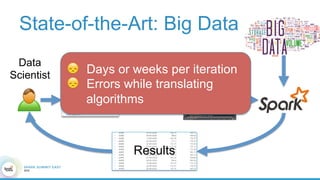

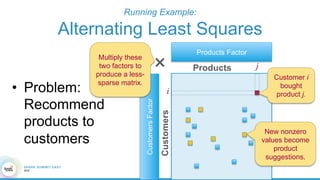

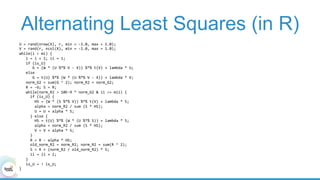

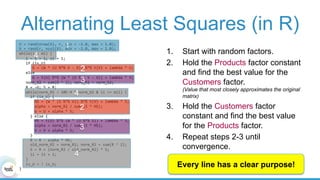

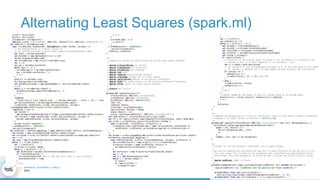

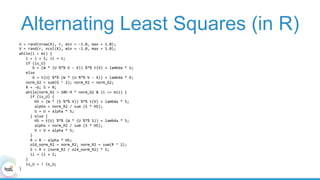

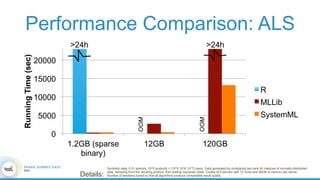

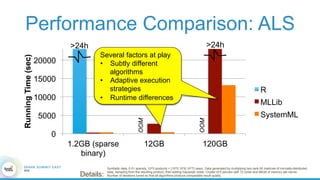

3. An example alternating least squares (ALS) algorithm is shown written concisely in R. Traditional approaches required translating such algorithms into other languages such as Scala, which was error-prone and slowed iteration. SystemML aims to let the same algorithm run fast at large scale while producing the same answer.
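
To make the alternating least squares idea concrete, here is a minimal sketch in plain Python. It is not the R code from the slides and not SystemML's implementation. It assumes a rank-1 factorization, a hypothetical sparse input format (a dict mapping `(row, col)` to an observed value), and a small ridge term `reg`. The function names `als_rank1` and `squared_error` are invented for this illustration.

```python
def als_rank1(ratings, n_rows, n_cols, reg=0.1, iters=20):
    """Rank-1 ALS on sparse observations given as {(i, j): value}.

    Approximates each observed value r_ij by u[i] * v[j]. Each half-step
    holds one factor fixed and solves a ridge-regularized least squares
    problem for the other, which has a closed form in the rank-1 case.
    """
    u = [1.0] * n_rows
    v = [1.0] * n_cols
    for _ in range(iters):
        # Fix v, update u: u_i = sum_j r_ij * v_j / (reg + sum_j v_j^2),
        # where the sums run over the observed entries in row i.
        num = [0.0] * n_rows
        den = [reg] * n_rows
        for (i, j), r in ratings.items():
            num[i] += r * v[j]
            den[i] += v[j] * v[j]
        u = [n / d for n, d in zip(num, den)]

        # Fix u, update v with the symmetric formula over each column.
        num = [0.0] * n_cols
        den = [reg] * n_cols
        for (i, j), r in ratings.items():
            num[j] += r * u[i]
            den[j] += u[i] * u[i]
        v = [n / d for n, d in zip(num, den)]
    return u, v


def squared_error(ratings, u, v):
    """Sum of squared residuals over the observed entries."""
    return sum((r - u[i] * v[j]) ** 2 for (i, j), r in ratings.items())
```

Calling `als_rank1(ratings, n_rows, n_cols)` returns the two factor lists, and `u[i] * v[j]` then serves as the prediction for cell `(i, j)`, including cells that were not observed. A higher-rank version would replace each scalar division with a small linear solve per row or column, which is the part that is tedious to hand-translate and parallelize.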