This document provides an overview of DML syntax and invocation. It describes DML as a declarative machine learning language with an R-like syntax. It outlines basic DML constructs like data types, control flow, functions, and imports. The document also explains how to invoke DML programs from the command line or Spark, and mentions some editor support packages. Resources for additional documentation and the SystemML GitHub repository are also provided.

![About DML Briefly

• DML = Declarative Machine Learning

• R-like syntax, some subtle differences from R

• Dynamically typed

• Data Structures

• Scalars – Boolean, Integers, Strings, Double Precision

• Cacheable – Matrices, DataFrames

• Data Structure Terminology in DML

• Value Type - Boolean, Integers, Strings, Double Precision

• Data Type – Scalar, Matrices, DataFrames*

• A type is written as DataType[ValueType]; not all combinations are supported

• For instance – matrix[double]

• Scoping

• One global scope, except inside functions

* Coming soon](https://image.slidesharecdn.com/s1dmlsyntaxandinvocation-160913180204/85/S1-DML-Syntax-and-Invocation-4-320.jpg)
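To make the DataType[ValueType] terminology concrete, here is a minimal sketch that writes a small DML script to a file from the shell. The file name `types_demo.dml` and all variable names are made up for illustration. The script uses only the DML built-ins `matrix()`, `nrow()`, `ncol()`, `sum()`, and `print()`, and it assumes it would later be run with `bin/systemml` from the SystemML directory.

```shell
#!/bin/sh
# Write a tiny DML script (hypothetical file name) that touches each kind of value.
cat > types_demo.dml <<'EOF'
# Scalars: the value type follows from the assigned literal (dynamic typing)
flag  = TRUE        # boolean
count = 3           # integer
rate  = 0.5         # double
name  = "demo"      # string

# matrix[double]: a 3x2 matrix filled with 1.0
X = matrix(1.0, rows=count, cols=2)

print(name + ": " + nrow(X) + " x " + ncol(X) + ", sum = " + sum(X))
EOF

# Show what was written; to execute it: bin/systemml types_demo.dml
cat types_demo.dml
```

The quoted heredoc delimiter (`'EOF'`) keeps the shell from expanding anything inside the DML text.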

![About DML Briefly

• Control Flow

• Sequential imperative control flow (like most other languages)

• Looping –

• while (<condition>) { … }

• for (var in <for_predicate>) { … }

• parfor (var in <for_predicate>) { … } // Iterations in parallel

• Guards –

• if (<condition>) { ... } [ else if (<condition>) { ... } ... else { … } ]

• Functions

• Built-in – List available in language reference

• User Defined – (multiple return parameters)

• functionName = function (<formal_parameters>…) return (<formal_parameters>) { ... }

• The function body can only access variables declared in its formal_parameters

• External Function – same as user defined, can call external Java Package](https://image.slidesharecdn.com/s1dmlsyntaxandinvocation-160913180204/85/S1-DML-Syntax-and-Invocation-5-320.jpg)
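The control-flow and function forms listed above can be combined in one short script. The sketch below writes such a script to a file; the file name `control_flow_demo.dml`, the function `minMax`, and the variable names are hypothetical. It relies on the DML built-ins `min()`, `max()`, `as.scalar()`, `matrix()`, `nrow()`, and `print()`, and is an illustration rather than code from the SystemML repository.

```shell
#!/bin/sh
# Write a small DML script (hypothetical file name) using for, while, if/else,
# and a user-defined function with two return values.
cat > control_flow_demo.dml <<'EOF'
# User-defined function: two return values, body sees only its parameters
minMax = function(matrix[double] M) return (double lo, double hi) {
  lo = min(M)
  hi = max(M)
}

X = matrix(0, rows=4, cols=1)

# for: fill row i with i squared
for (i in 1:nrow(X)) {
  X[i, 1] = matrix(i * i, rows=1, cols=1)
}

# while with an if/else guard: add values above 4, subtract the rest
total = 0.0
i = 1
while (i <= nrow(X)) {
  v = as.scalar(X[i, 1])
  if (v > 4) {
    total = total + v
  } else {
    total = total - v
  }
  i = i + 1
}

[lo, hi] = minMax(X)
print("min = " + lo + ", max = " + hi + ", total = " + total)
EOF

# Show what was written; to execute it: bin/systemml control_flow_demo.dml
cat control_flow_demo.dml
```

Because each iteration of the `for` loop writes a different row of `X`, the same loop is the kind of candidate that `parfor` targets.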

![Sample Code

source("nn/layers/affine.dml") as affine # import a file in the "affine" namespace

[W, b] = affine::init(D, M) # calls the init function, multiple return

parfor (i in 1:nrow(X)) { # i iterates over 1 through num rows in X in parallel

for (j in 1:ncol(X)) { # j iterates over 1 through num cols in X

# Computation ...

}

}

write (M, fileM, format="text") # M=matrix, fileM=file, also writes to HDFS

X = read (fileX) # fileX=file, also reads from HDFS

if (ncol (A) > 1) {

# Matrix A is being sliced by a given range of columns

A[,1:(ncol (A) - 1)] = A[,1:(ncol (A) - 1)] - A[,2:ncol (A)];

}](https://image.slidesharecdn.com/s1dmlsyntaxandinvocation-160913180204/85/S1-DML-Syntax-and-Invocation-8-320.jpg)

![Sample Code

interpSpline = function(

double x, matrix[double] X, matrix[double] Y, matrix[double] K) return (double q) {

i = as.integer(nrow(X) - sum(ppred(X, x, ">=")) + 1)

# misc computation …

q = as.scalar(qm)

}

eigen = externalFunction(Matrix[Double] A)

return(Matrix[Double] eval, Matrix[Double] evec)

implemented in (classname="org.apache.sysml.udf.lib.EigenWrapper", exectype="mem")](https://image.slidesharecdn.com/s1dmlsyntaxandinvocation-160913180204/85/S1-DML-Syntax-and-Invocation-9-320.jpg)

![Sample Code (From LinearRegDS.dml*)

A = t(X) %*% X

b = t(X) %*% y

if (intercept_status == 2) {

A = t(diag (scale_X) %*% A + shift_X %*% A [m_ext, ])

A = diag (scale_X) %*% A + shift_X %*% A [m_ext, ]

b = diag (scale_X) %*% b + shift_X %*% b [m_ext, ]

}

A = A + diag (lambda)

print ("Calling the Direct Solver...")

beta_unscaled = solve (A, b)

*https://github.com/apache/incubator-systemml/blob/master/scripts/algorithms/LinearRegDS.dml#L133](https://image.slidesharecdn.com/s1dmlsyntaxandinvocation-160913180204/85/S1-DML-Syntax-and-Invocation-10-320.jpg)

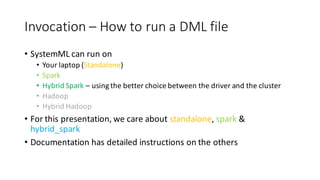

![Invocation – How to run a DML file

Standalone

In the systemml directory

bin/systemml <dml-filename> [arguments]

Example invocations:

bin/systemml LinearRegCG.dml -nvargs X=X.mtx Y=Y.mtx B=B.mtx

bin/systemml oddsRatio.dml -args X.mtx 50 B.mtx

First example: named arguments

Second example: positional arguments](https://image.slidesharecdn.com/s1dmlsyntaxandinvocation-160913180204/85/S1-DML-Syntax-and-Invocation-16-320.jpg)
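The difference between named and positional arguments shows up inside the script: a named argument `X=...` is read as `$X`, while positional arguments are read as `$1`, `$2`, and so on. The sketch below writes two hypothetical one-line scripts and prints (or, if `bin/systemml` is present and executable, runs) the matching invocations. The script names and the `run` helper are made up for illustration, and the flags are written with a plain ASCII hyphen.

```shell
#!/bin/sh
# Two hypothetical one-line DML scripts: one reads a named argument,
# the other reads the first positional argument.
cat > named_demo.dml <<'EOF'
print("X = " + $X)
EOF

cat > positional_demo.dml <<'EOF'
print("first = " + $1)
EOF

# Run the command if the SystemML launcher is available; otherwise just print it.
run() {
  if [ -x bin/systemml ]; then
    "$@"
  else
    echo "dry run: $*"
  fi
}

run bin/systemml named_demo.dml -nvargs X=X.mtx
run bin/systemml positional_demo.dml -args X.mtx
```

Named arguments tend to be easier to maintain for scripts with many inputs, since the call site does not depend on argument order.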

![Invocation – How to run a DML file

Spark/ Hybrid Spark

Define SPARK_HOME to point to your Apache Spark Installation

Define SYSTEMML_HOME to point to your Apache SystemML installation

In the systemml directory

scripts/sparkDML.sh <dml-filename> [systemml arguments]

Example invocations:

scripts/sparkDML.sh LinearRegCG.dml --nvargs X=X.mtx Y=Y.mtx B=B.mtx

scripts/sparkDML.sh oddsRatio.dml --args X.mtx 50 B.mtx

First example: named arguments

Second example: positional arguments](https://image.slidesharecdn.com/s1dmlsyntaxandinvocation-160913180204/85/S1-DML-Syntax-and-Invocation-17-320.jpg)
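Before calling `scripts/sparkDML.sh`, it can help to confirm that both environment variables point somewhere sensible. The following is a minimal sketch: the fallback paths `/opt/spark` and `/opt/systemml` are placeholders, the `check` helper is hypothetical, and it assumes that `sparkDML.sh` lives under `$SYSTEMML_HOME/scripts`.

```shell
#!/bin/sh
# Fall back to placeholder install paths when the variables are not already set.
: "${SPARK_HOME:=/opt/spark}"
: "${SYSTEMML_HOME:=/opt/systemml}"
export SPARK_HOME SYSTEMML_HOME

# Report whether a file that the later commands rely on is present.
check() {
  if [ -e "$1" ]; then
    echo "found:   $1"
  else
    echo "missing: $1"
  fi
}

check "$SPARK_HOME/bin/spark-submit"
check "$SYSTEMML_HOME/scripts/sparkDML.sh"
```

The `: "${VAR:=default}"` form only assigns when the variable is unset or empty, so values already exported in the environment are left alone.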

![Invocation – How to run a DML file

Spark/ Hybrid Spark

Define SPARK_HOME to point to your Apache Spark Installation

Define SYSTEMML_HOME to point to your Apache SystemML installation

Using the spark-submit script

$SPARK_HOME/bin/spark-submit

--master <master-url>

--class org.apache.sysml.api.DMLScript

${SYSTEMML_HOME}/SystemML.jar -f <dml-filename> <systemml arguments> -exec {hybrid_spark,spark}

Example invocation:

$SPARK_HOME/bin/spark-submit

--master local[*]

--class org.apache.sysml.api.DMLScript

${SYSTEMML_HOME}/SystemML.jar -f LinearRegCG.dml --nvargs X=X.mtx Y=Y.mtx B=B.mtx](https://image.slidesharecdn.com/s1dmlsyntaxandinvocation-160913180204/85/S1-DML-Syntax-and-Invocation-18-320.jpg)
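The spark-submit form is long enough that a small wrapper can be convenient. The sketch below only assembles and prints the command line so it can be inspected before running. The function name `build_cmd` and the fallback paths are hypothetical. It places `-exec` before the script arguments, on the assumption that `-args` and `-nvargs` treat everything after them as script arguments.

```shell
#!/bin/sh
# Placeholder install paths, used only if the variables are not already set.
SPARK_HOME="${SPARK_HOME:-/opt/spark}"
SYSTEMML_HOME="${SYSTEMML_HOME:-/opt/systemml}"

# build_cmd <master-url> <exec-mode> <dml-file> [script arguments...]
# Prints the spark-submit command line instead of executing it.
build_cmd() {
  master=$1
  mode=$2
  script=$3
  shift 3
  echo "$SPARK_HOME/bin/spark-submit" \
    --master "$master" \
    --class org.apache.sysml.api.DMLScript \
    "$SYSTEMML_HOME/SystemML.jar" -f "$script" -exec "$mode" "$@"
}

# Quote local[*] so the shell does not treat the brackets as a glob pattern.
build_cmd 'local[*]' hybrid_spark LinearRegCG.dml --nvargs X=X.mtx Y=Y.mtx B=B.mtx
```

To execute rather than print, the output can be reviewed and pasted into a terminal, or the `echo` can be dropped once the paths are known to be correct.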