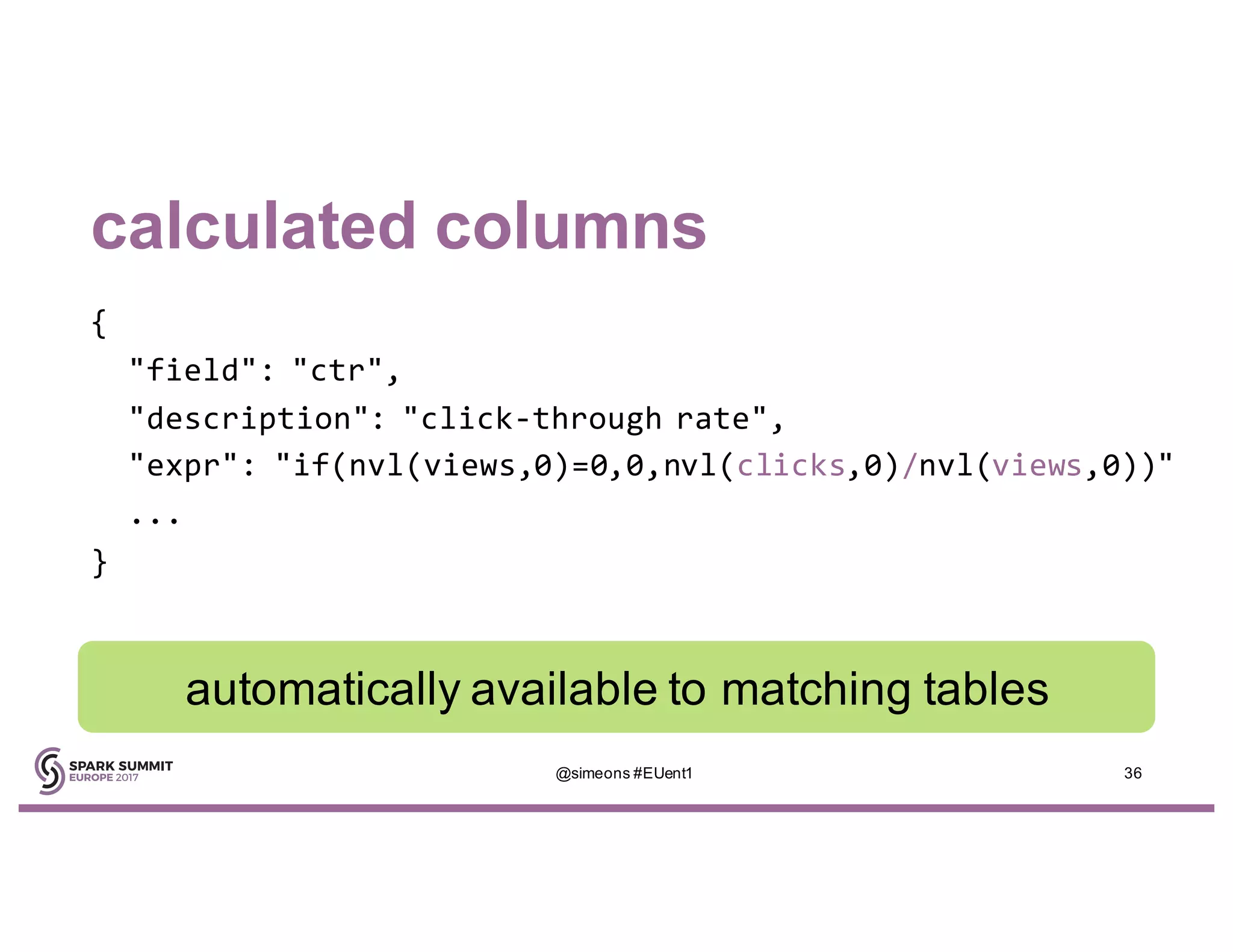

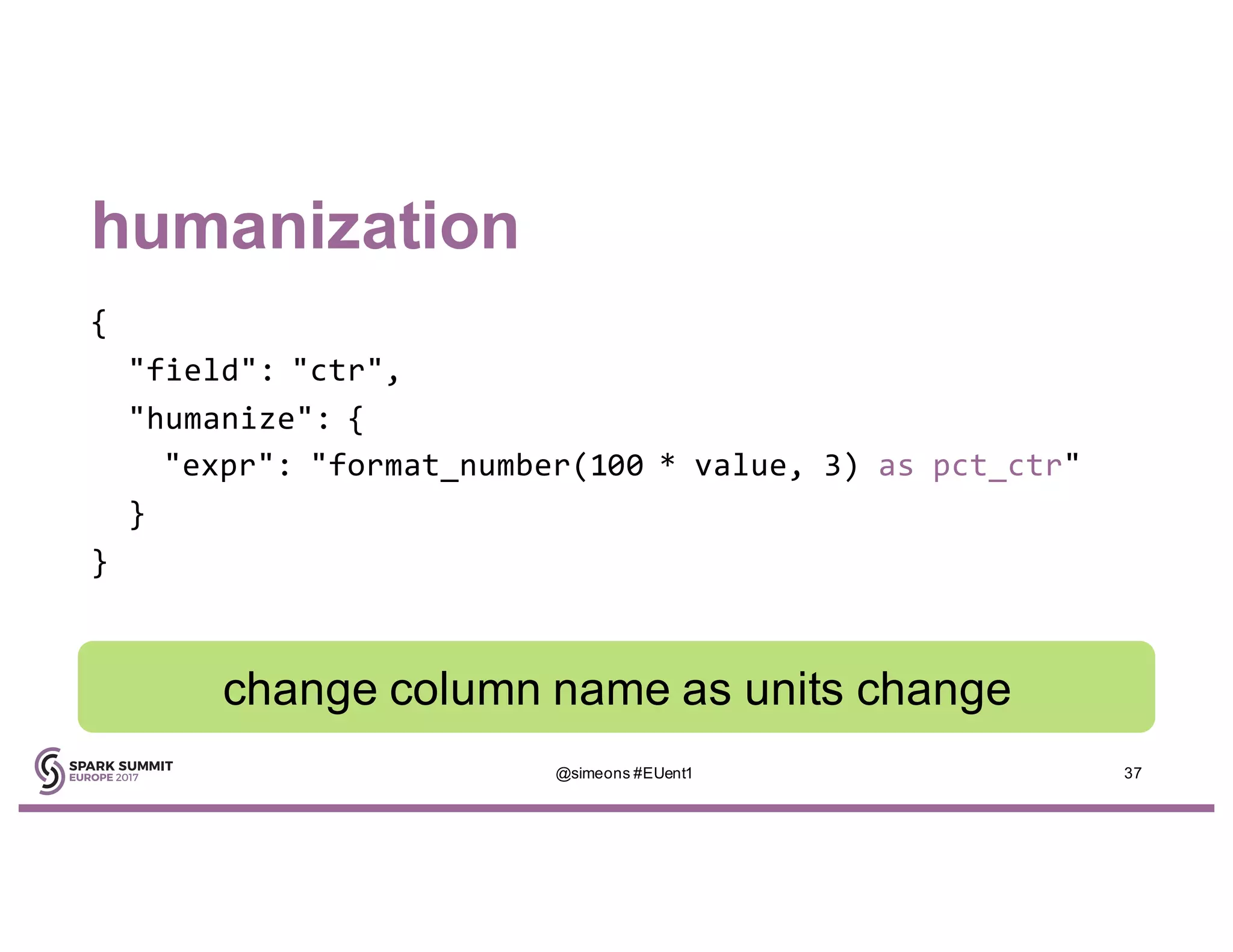

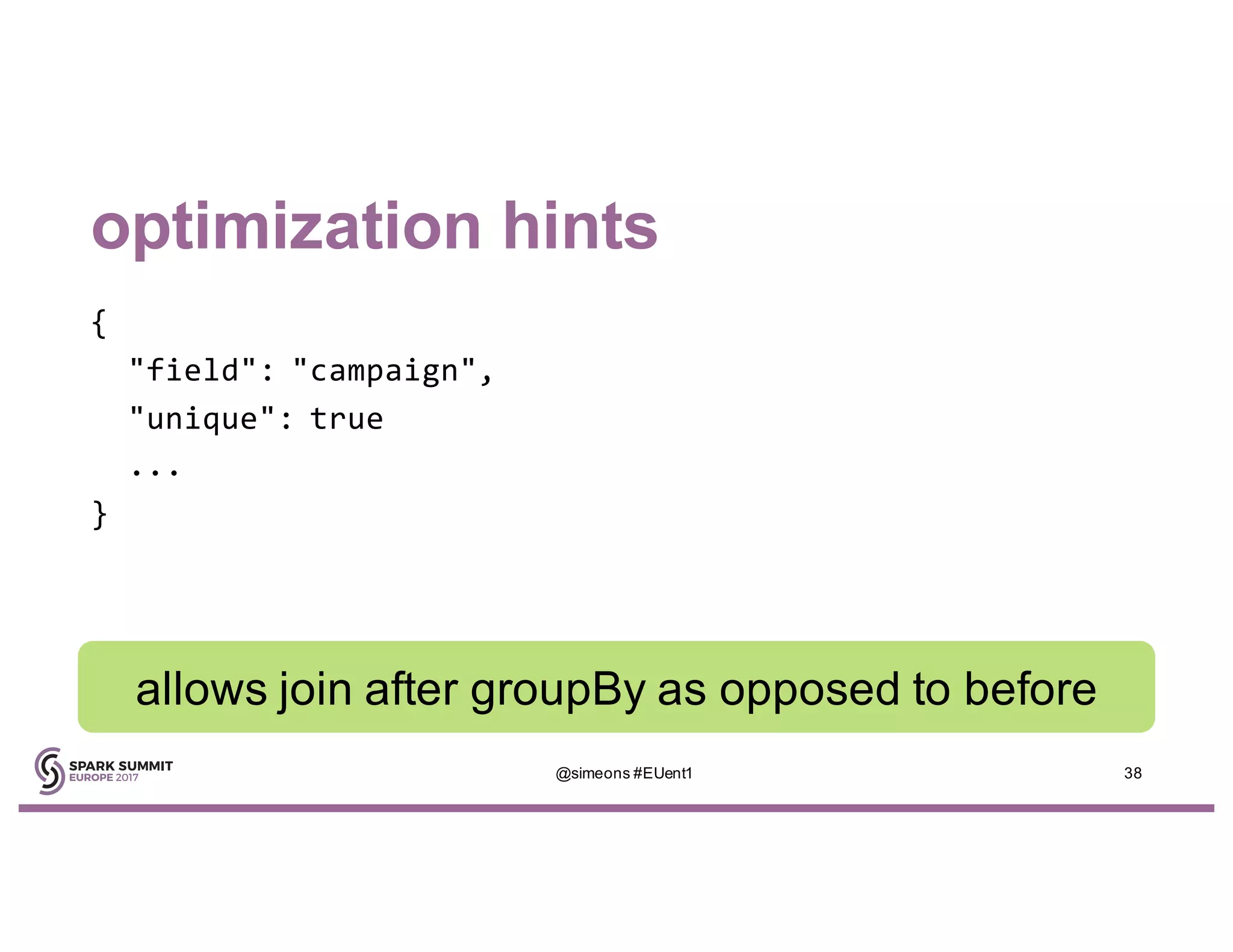

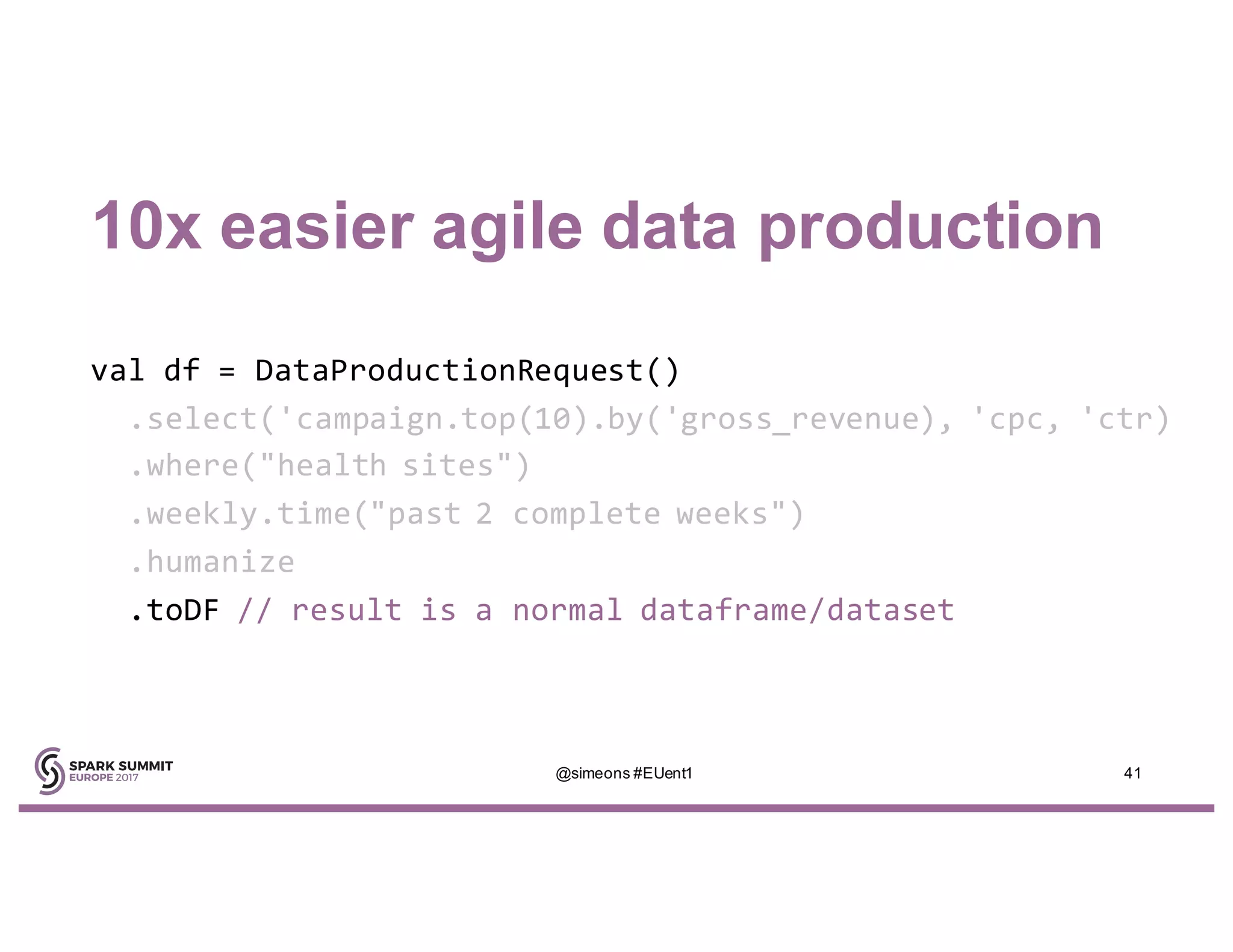

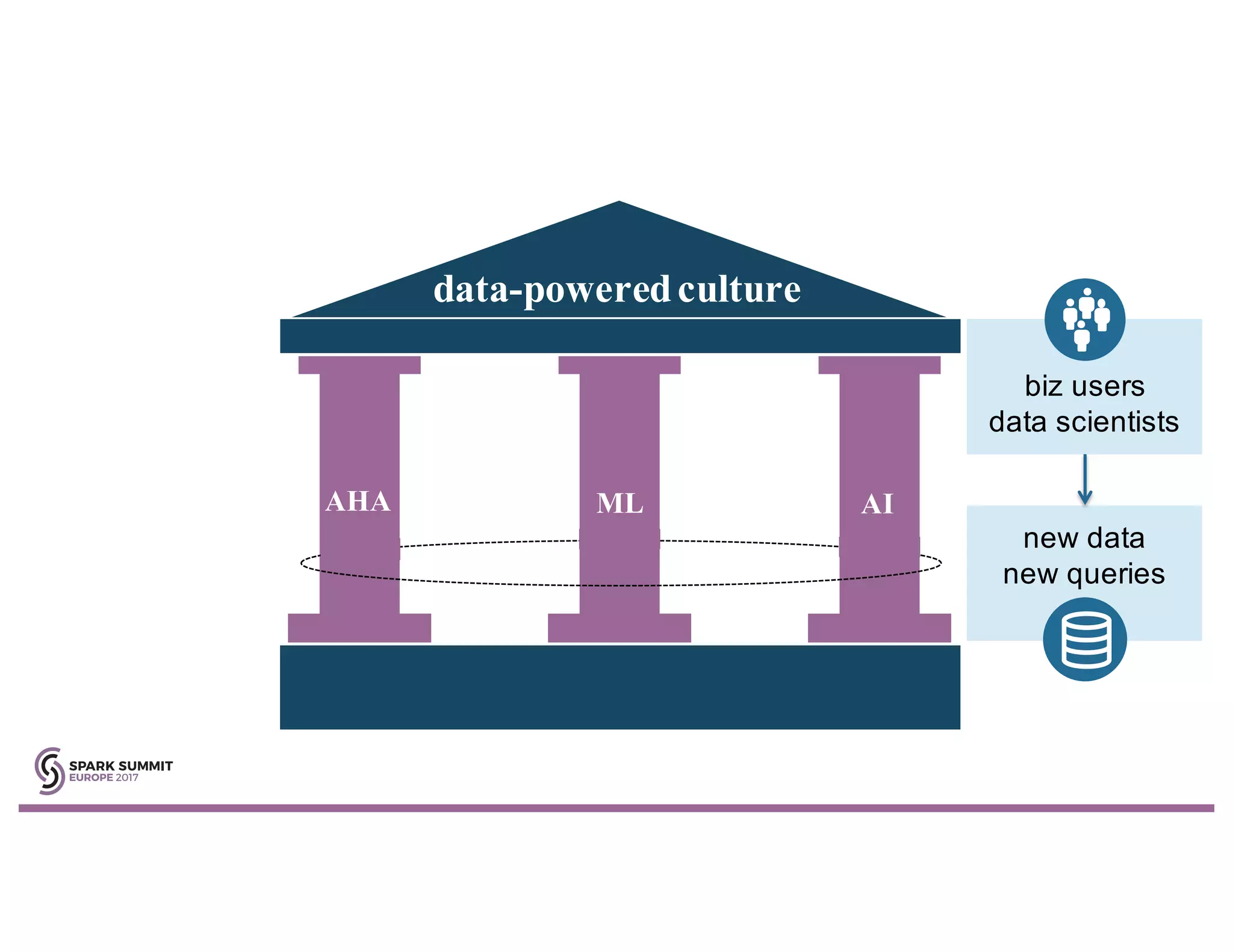

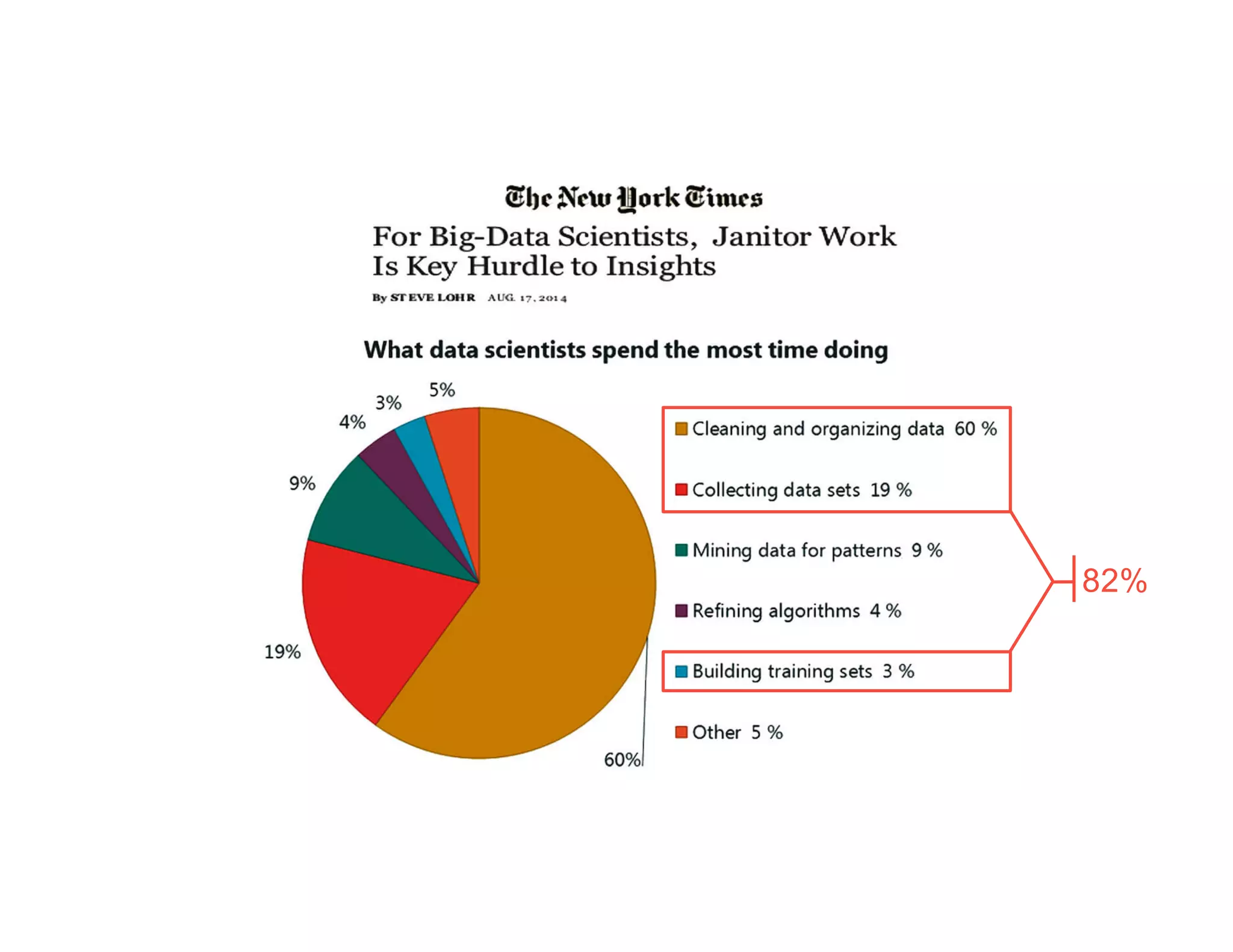

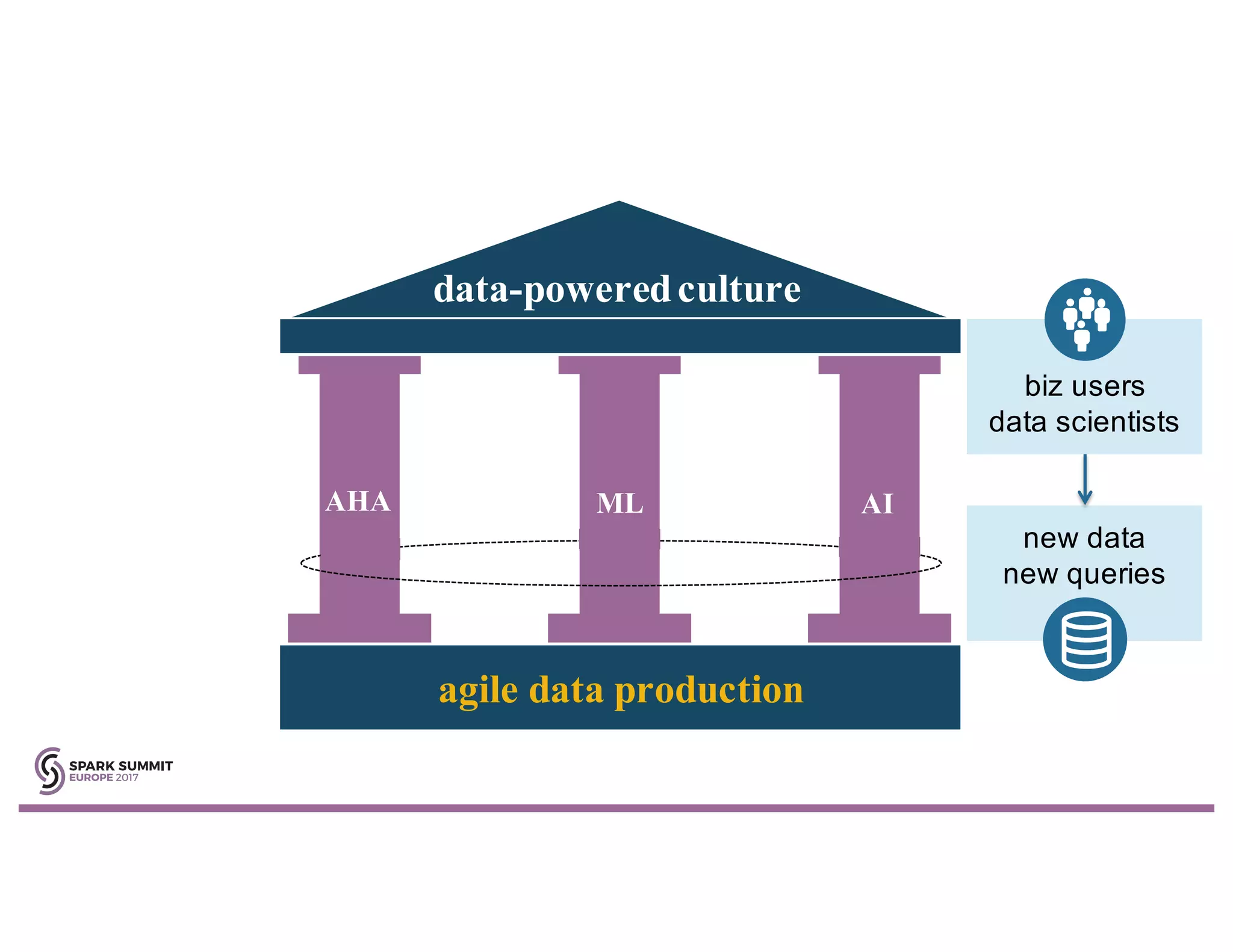

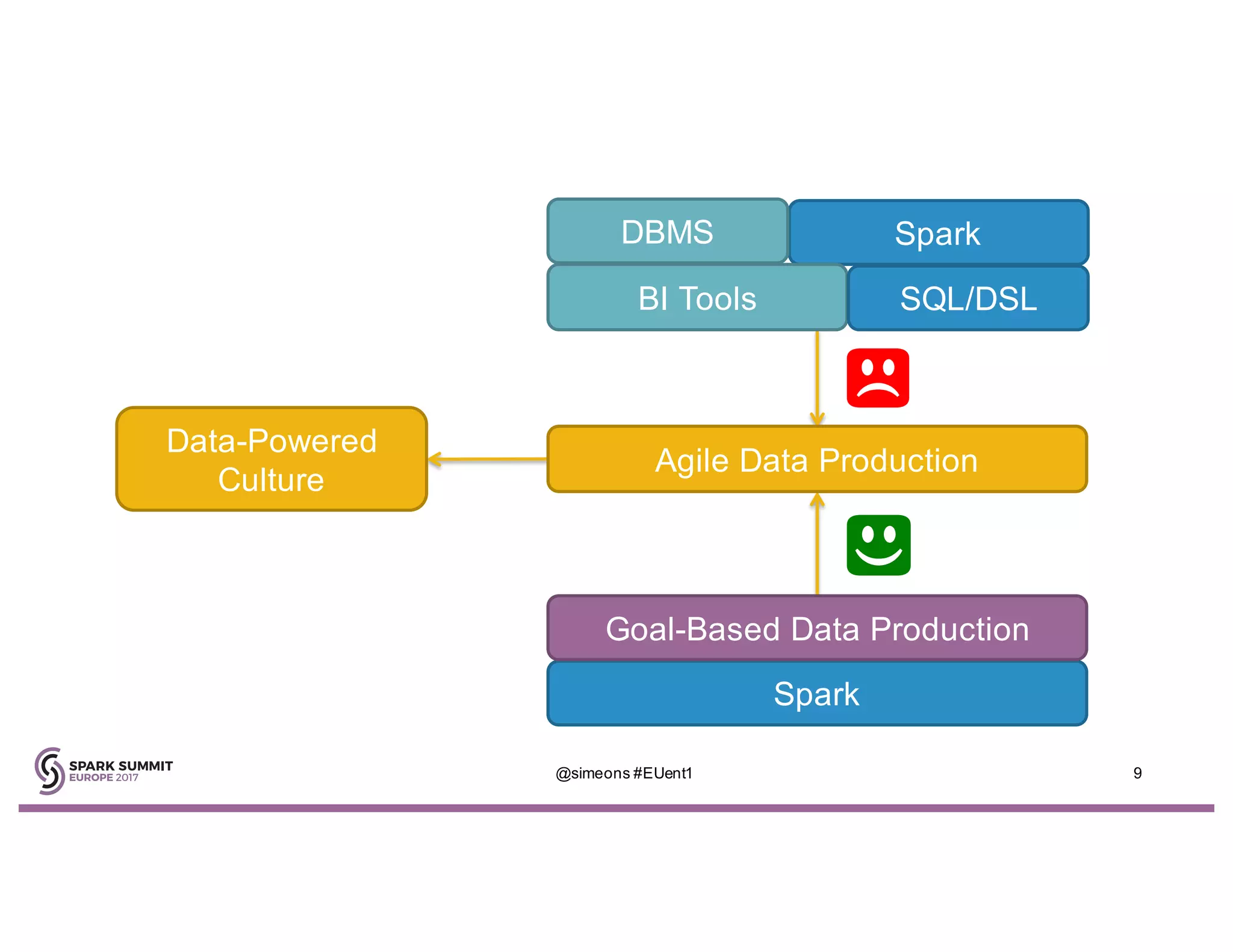

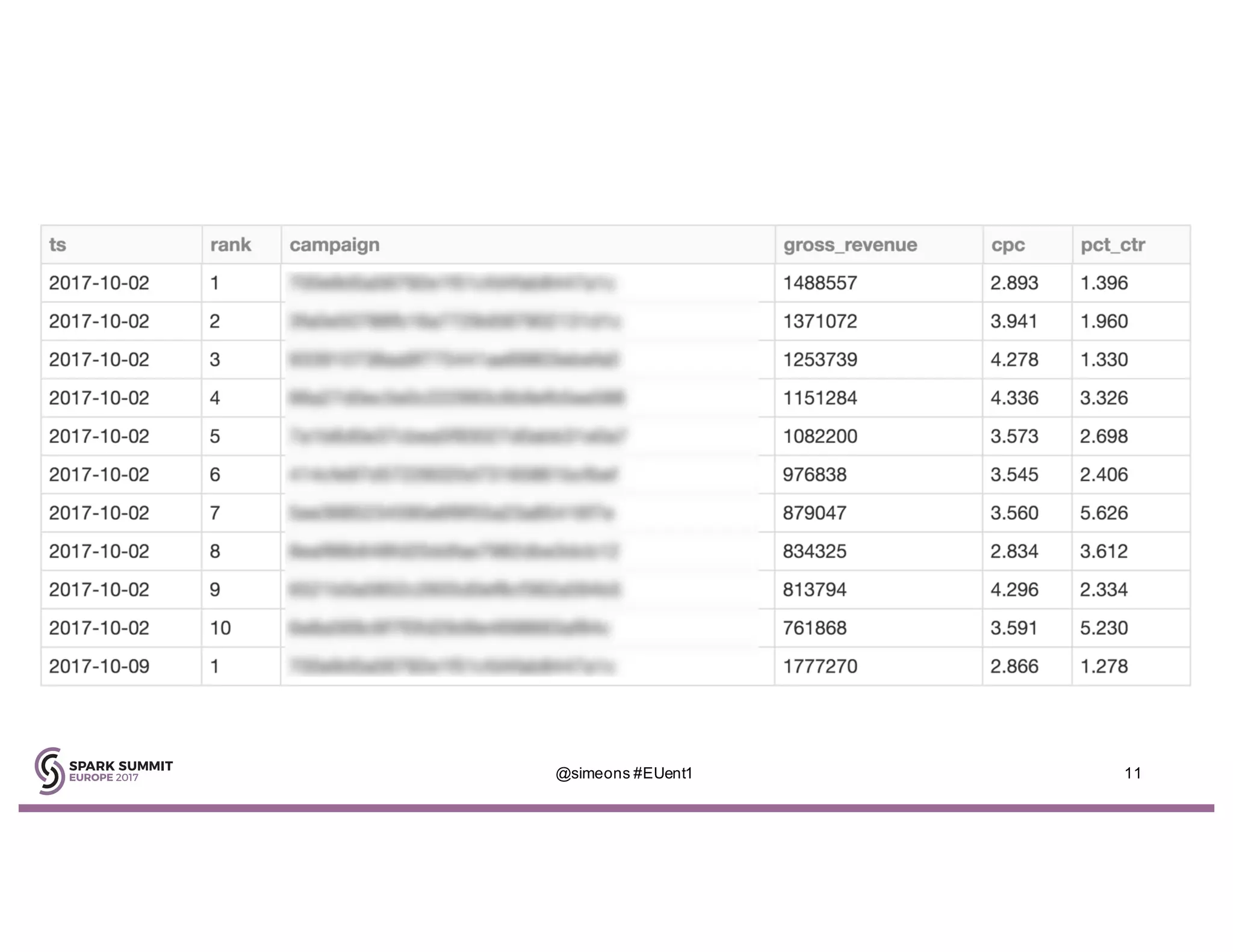

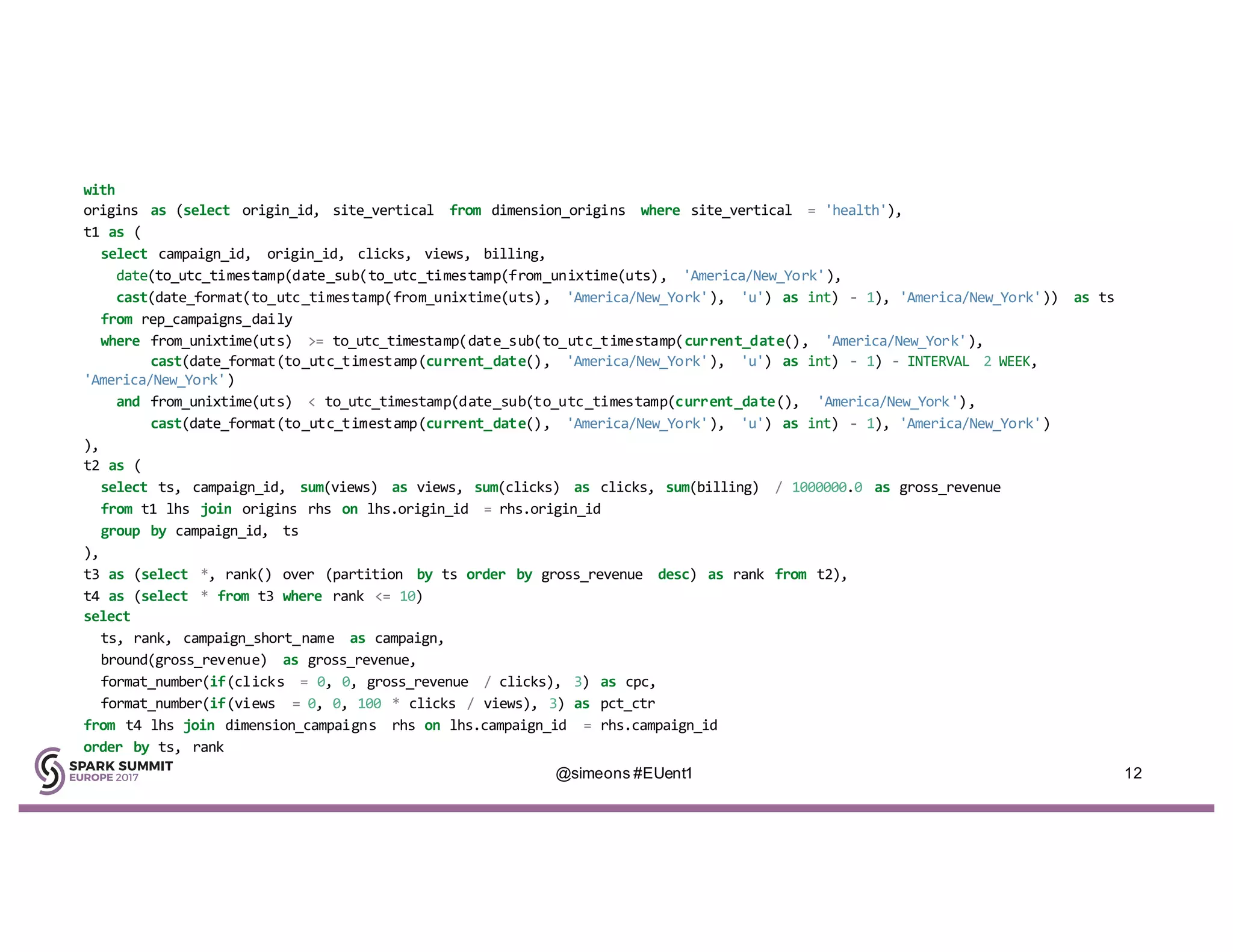

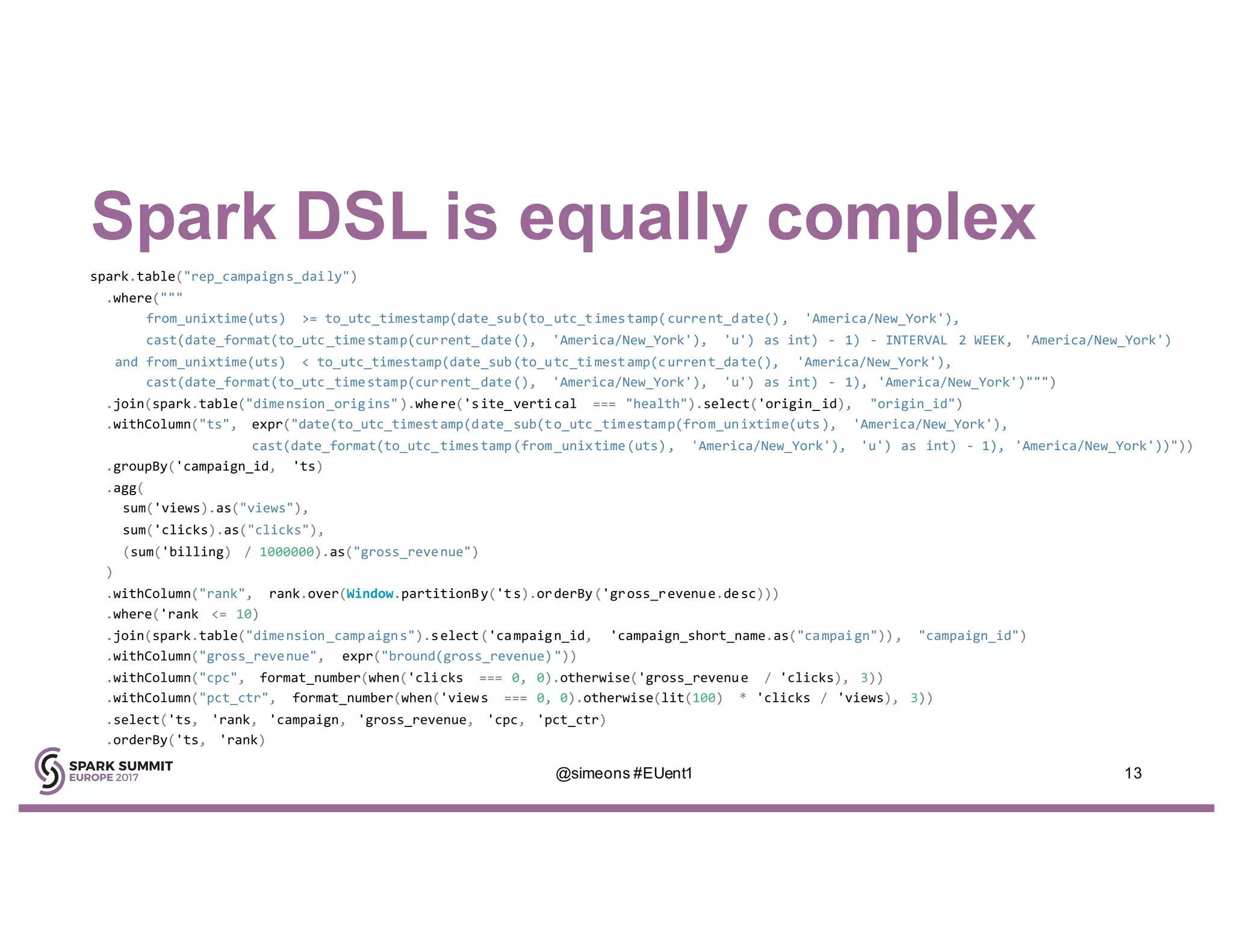

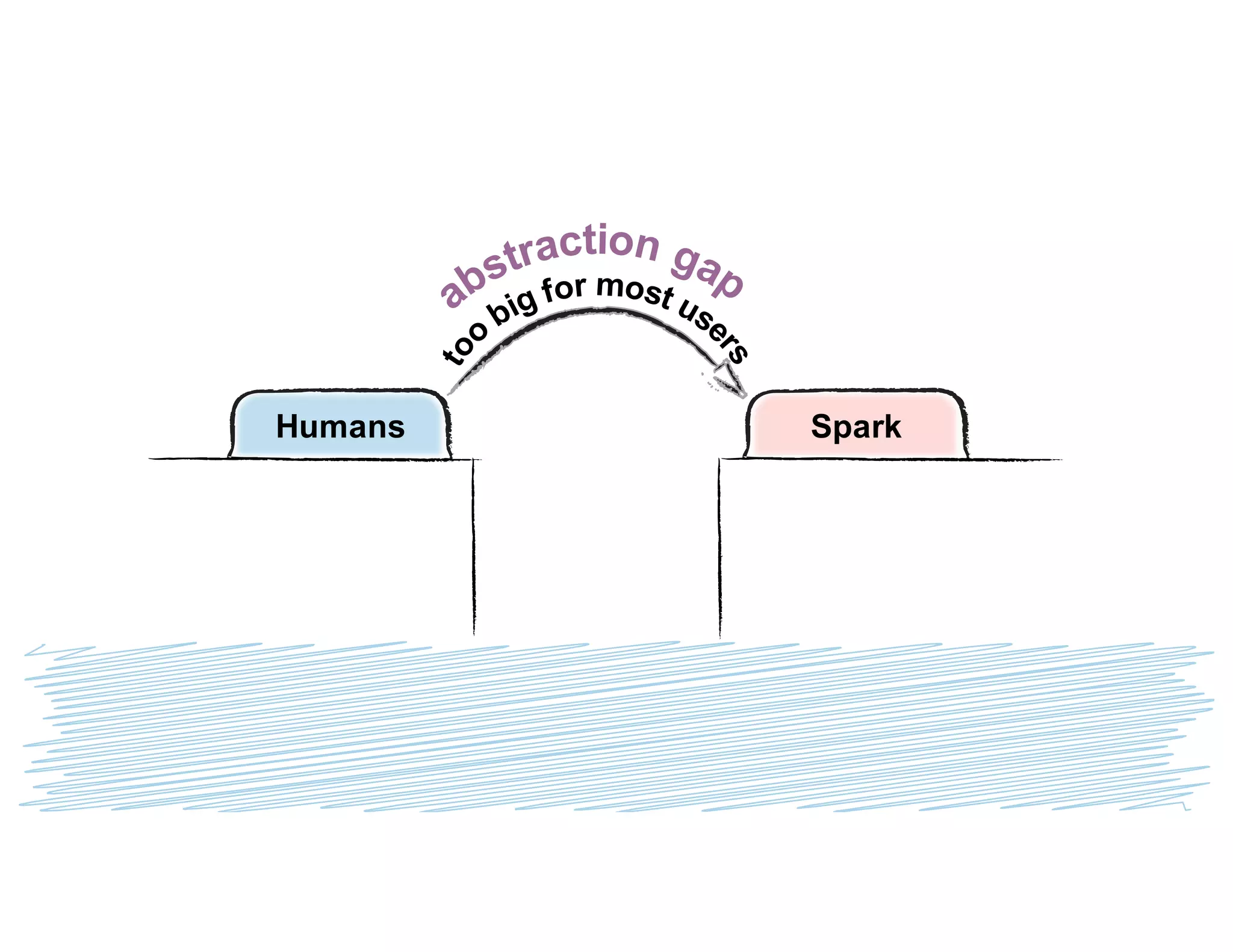

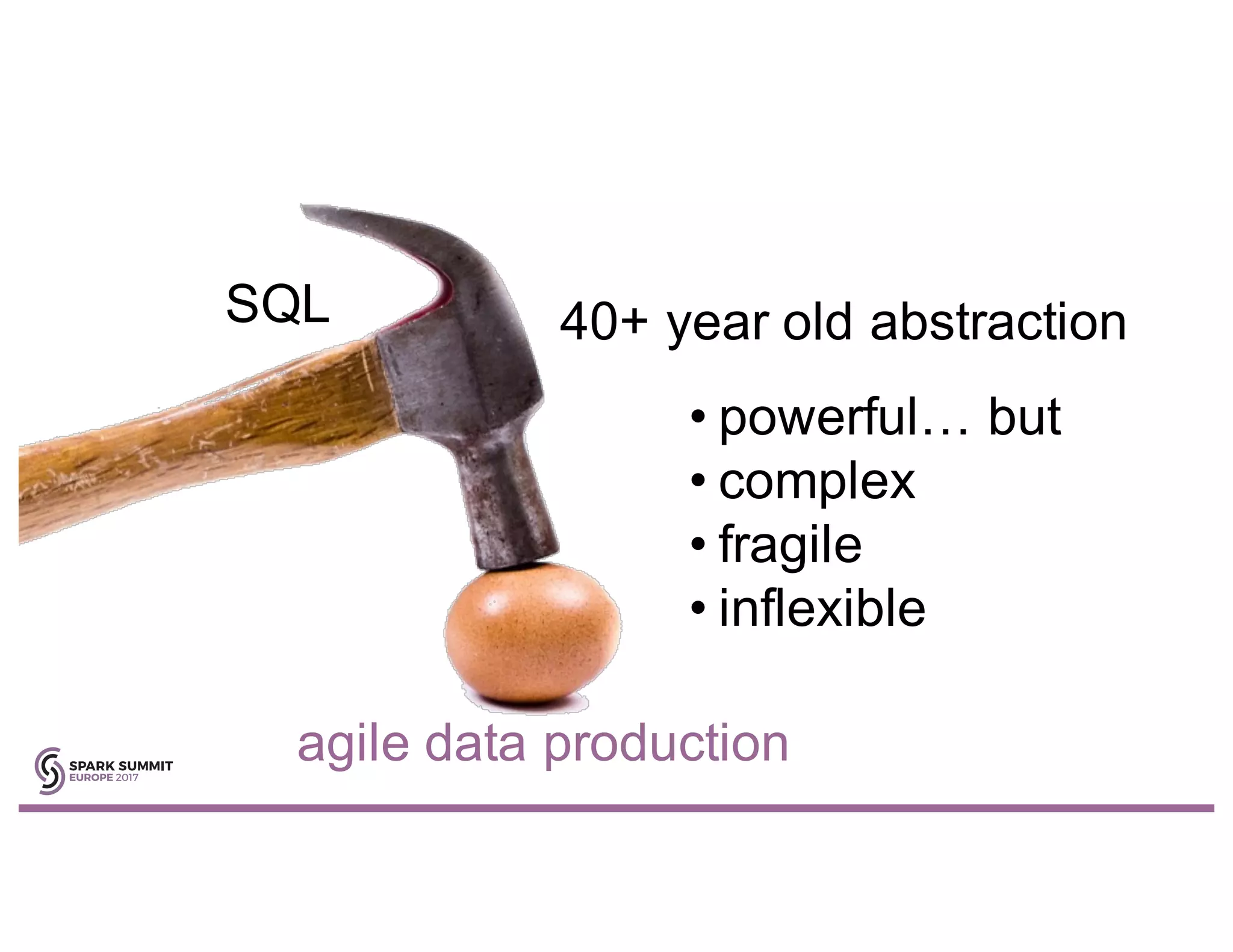

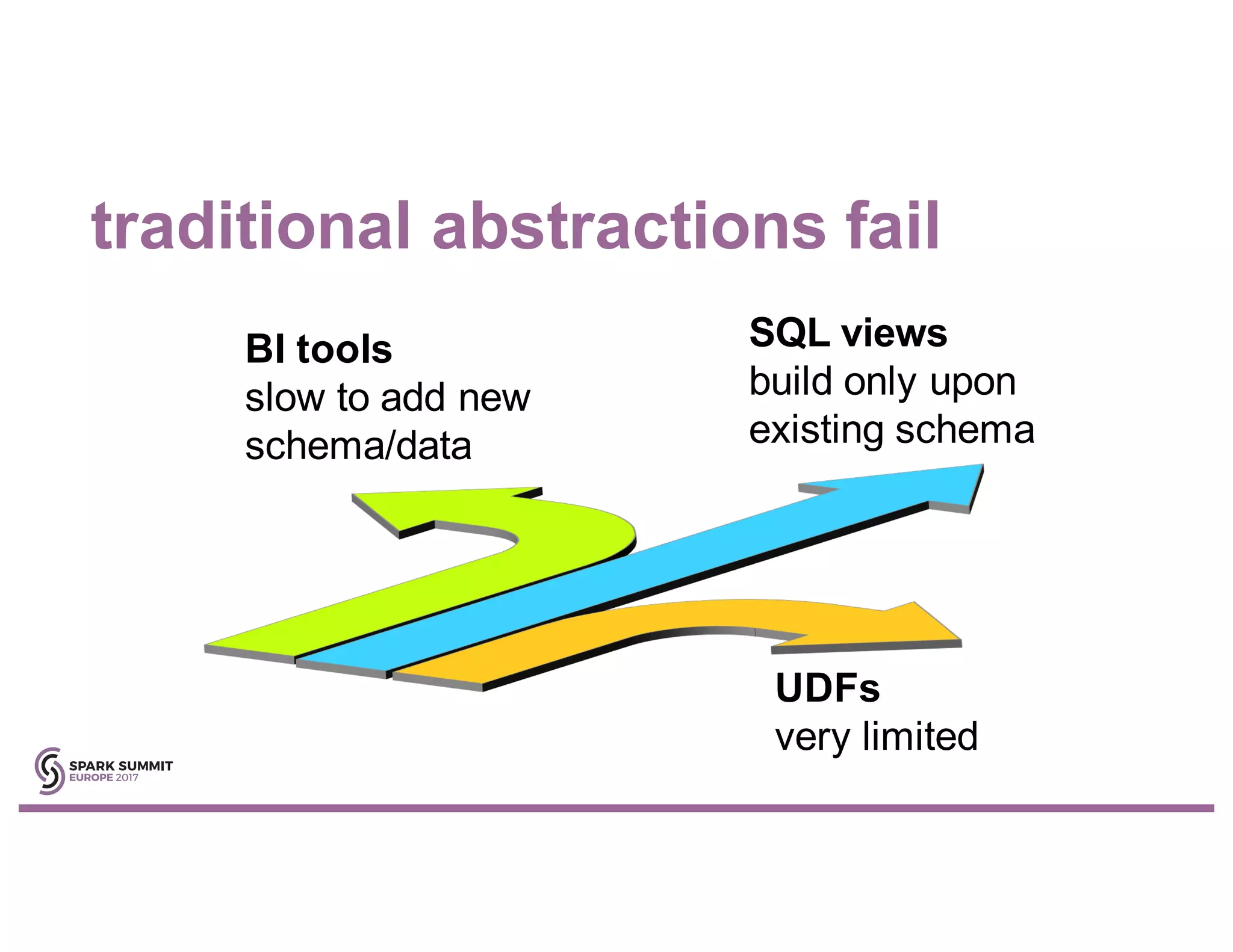

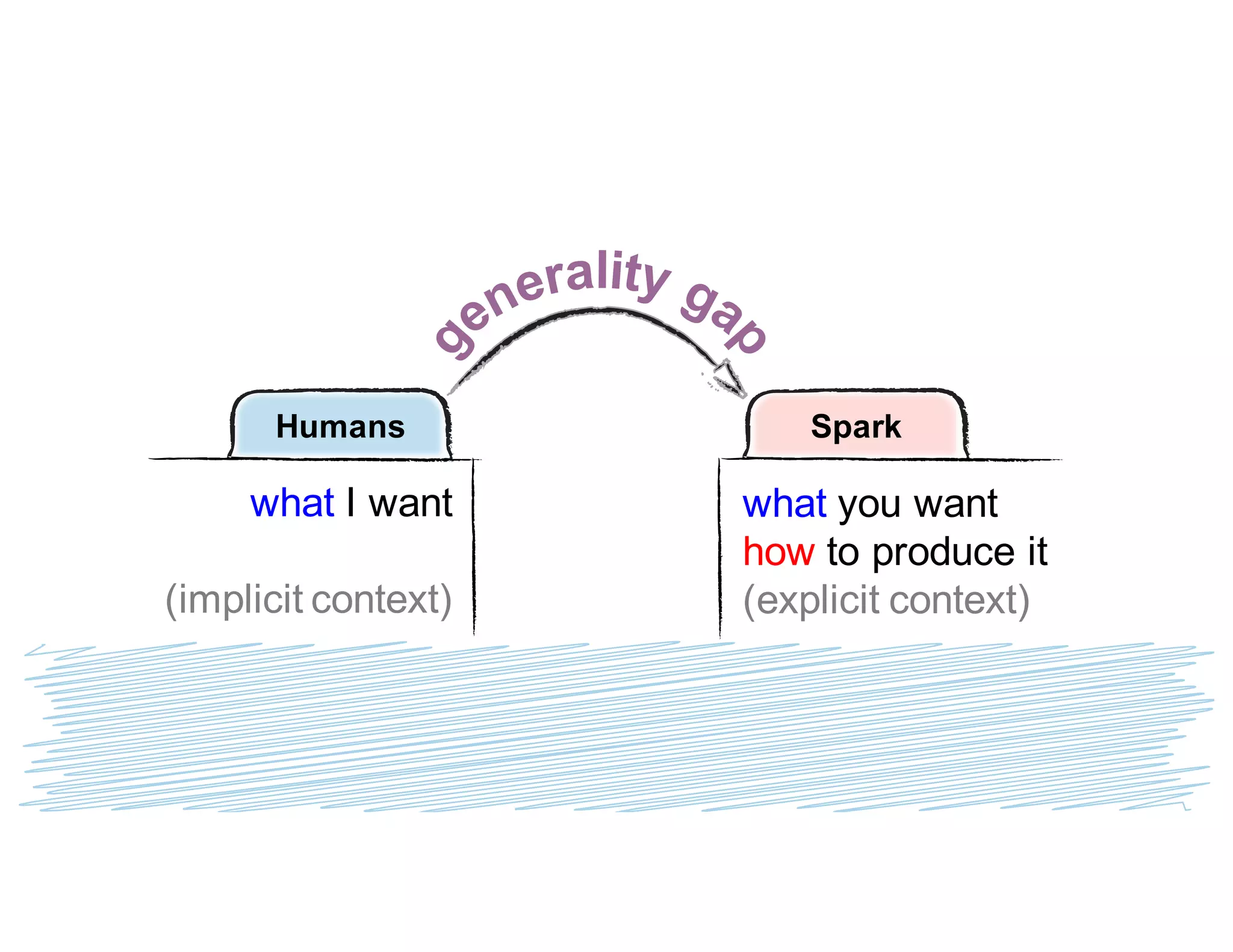

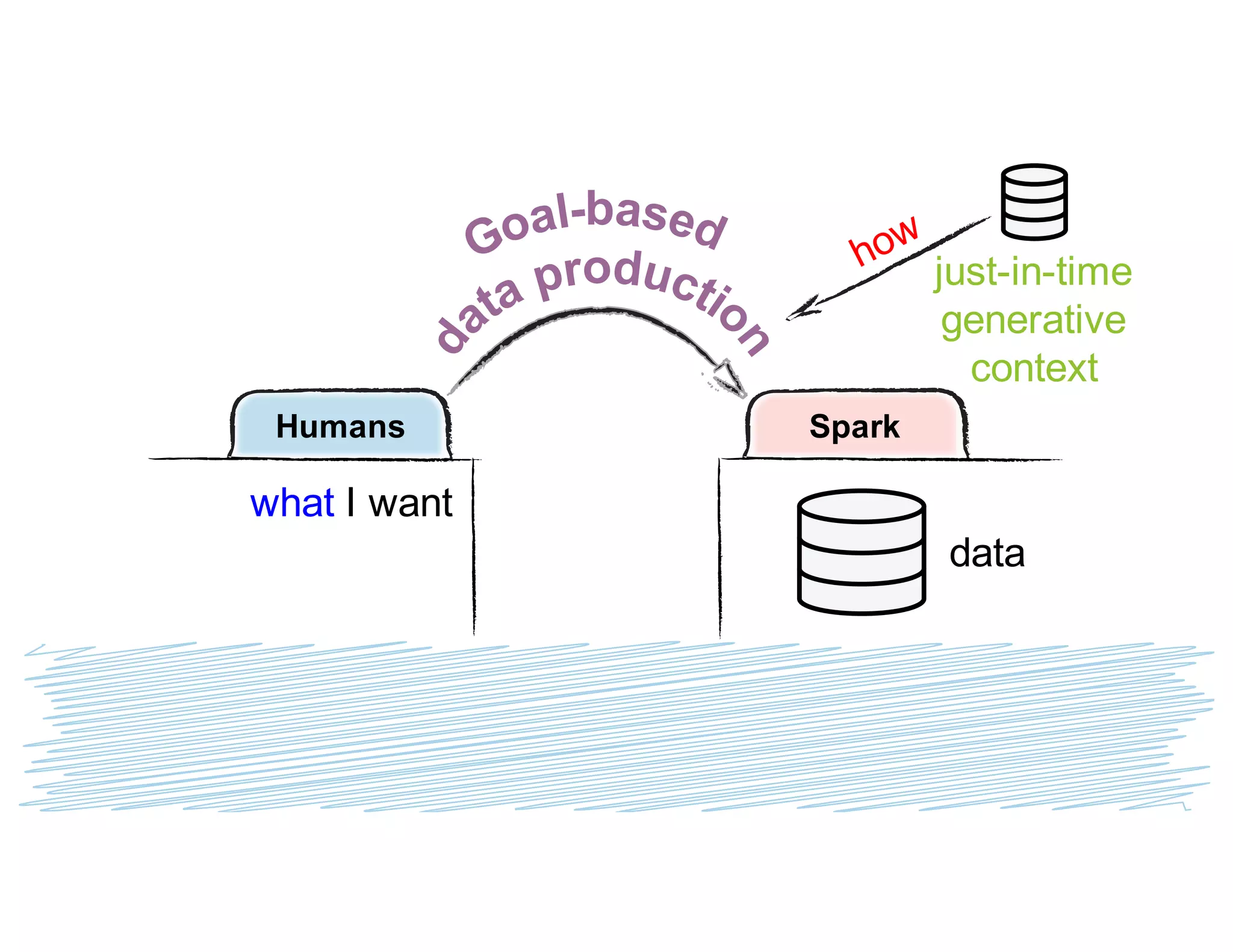

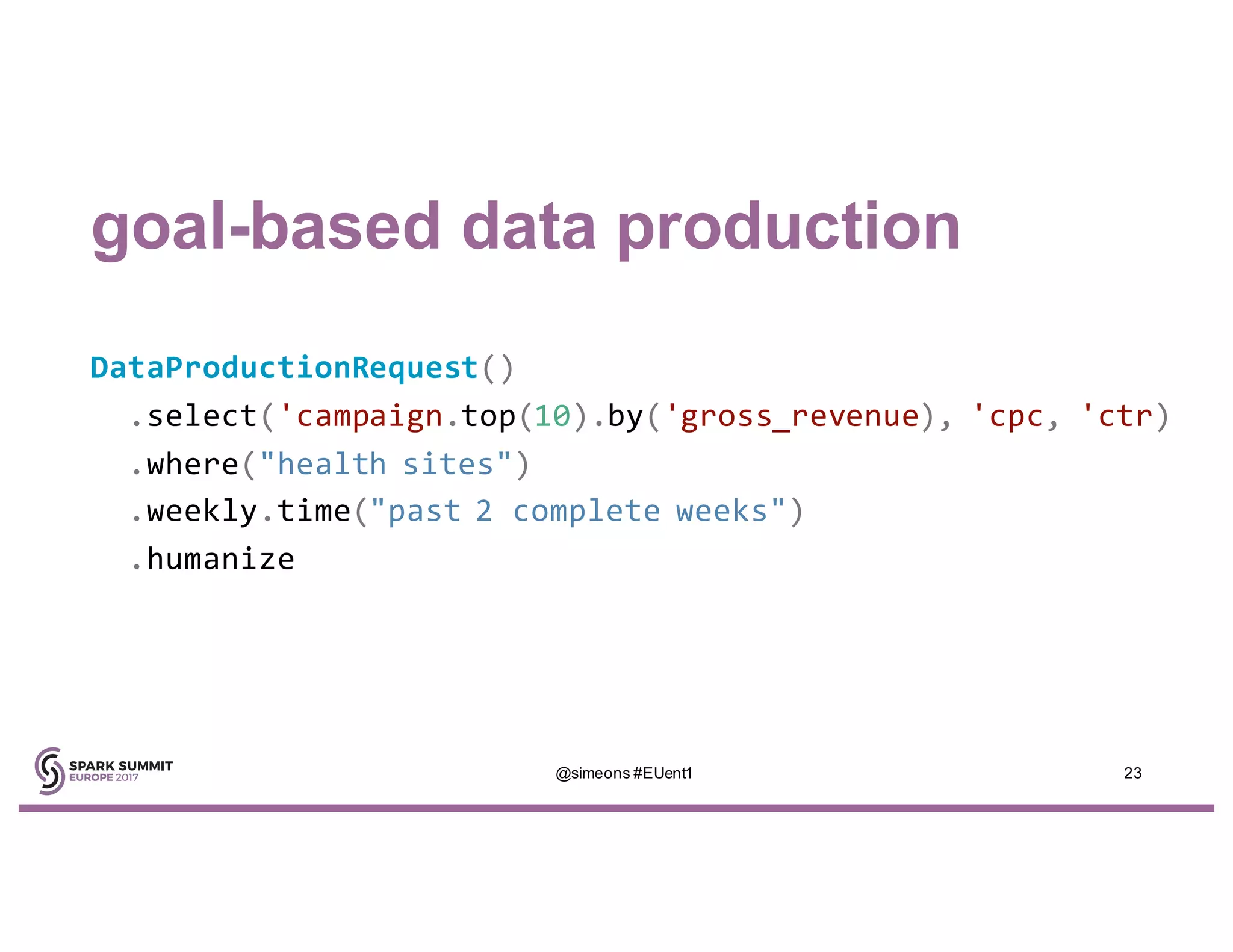

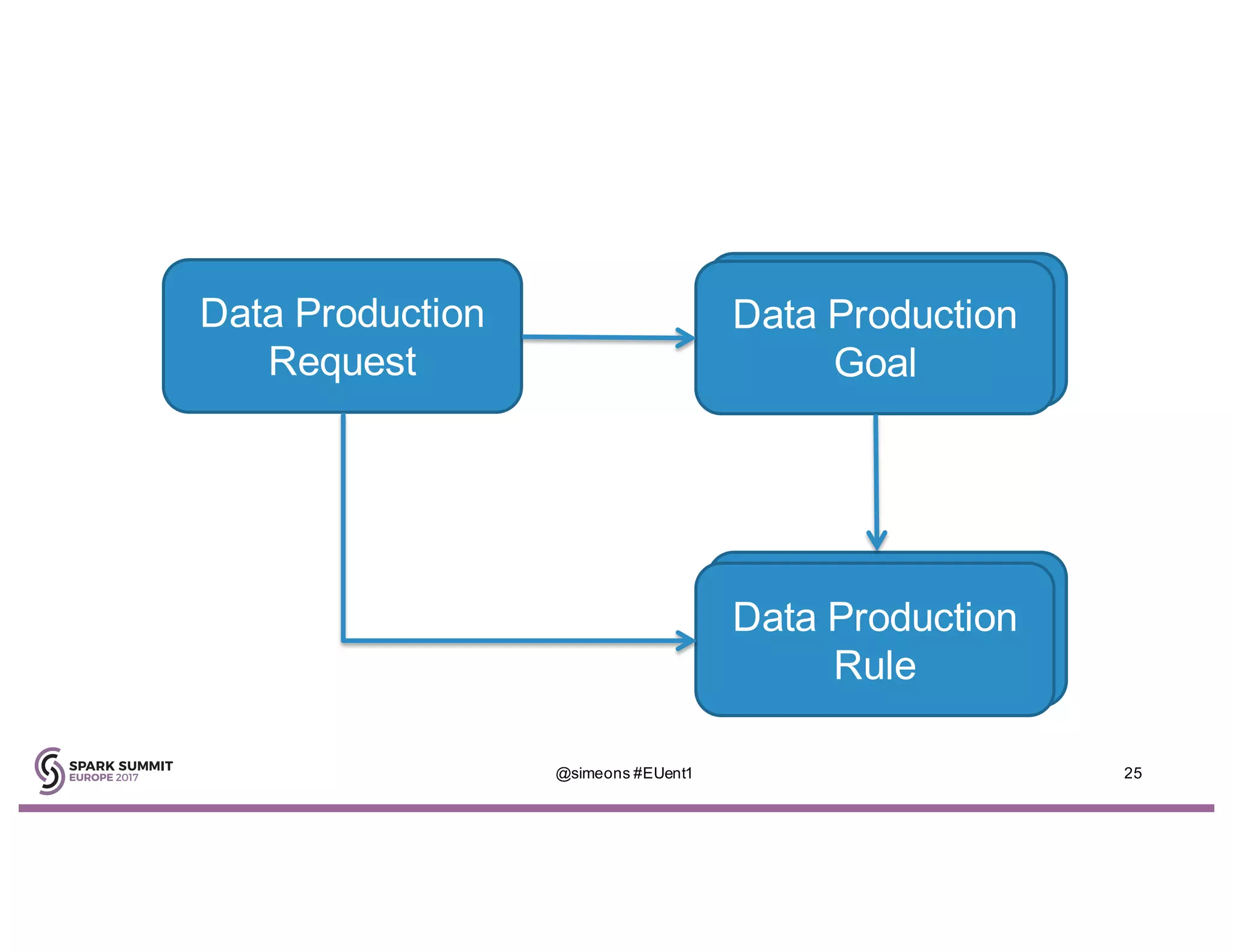

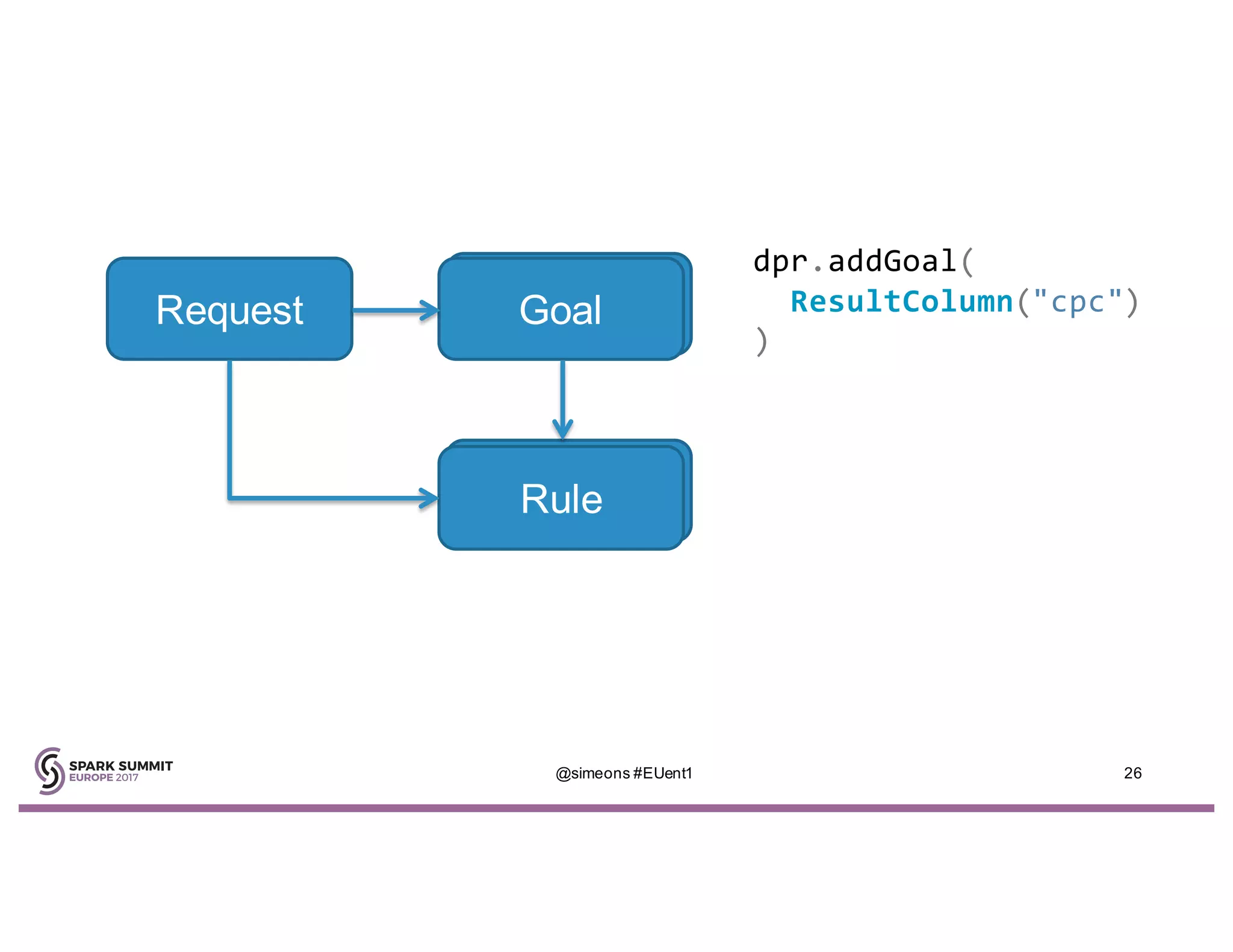

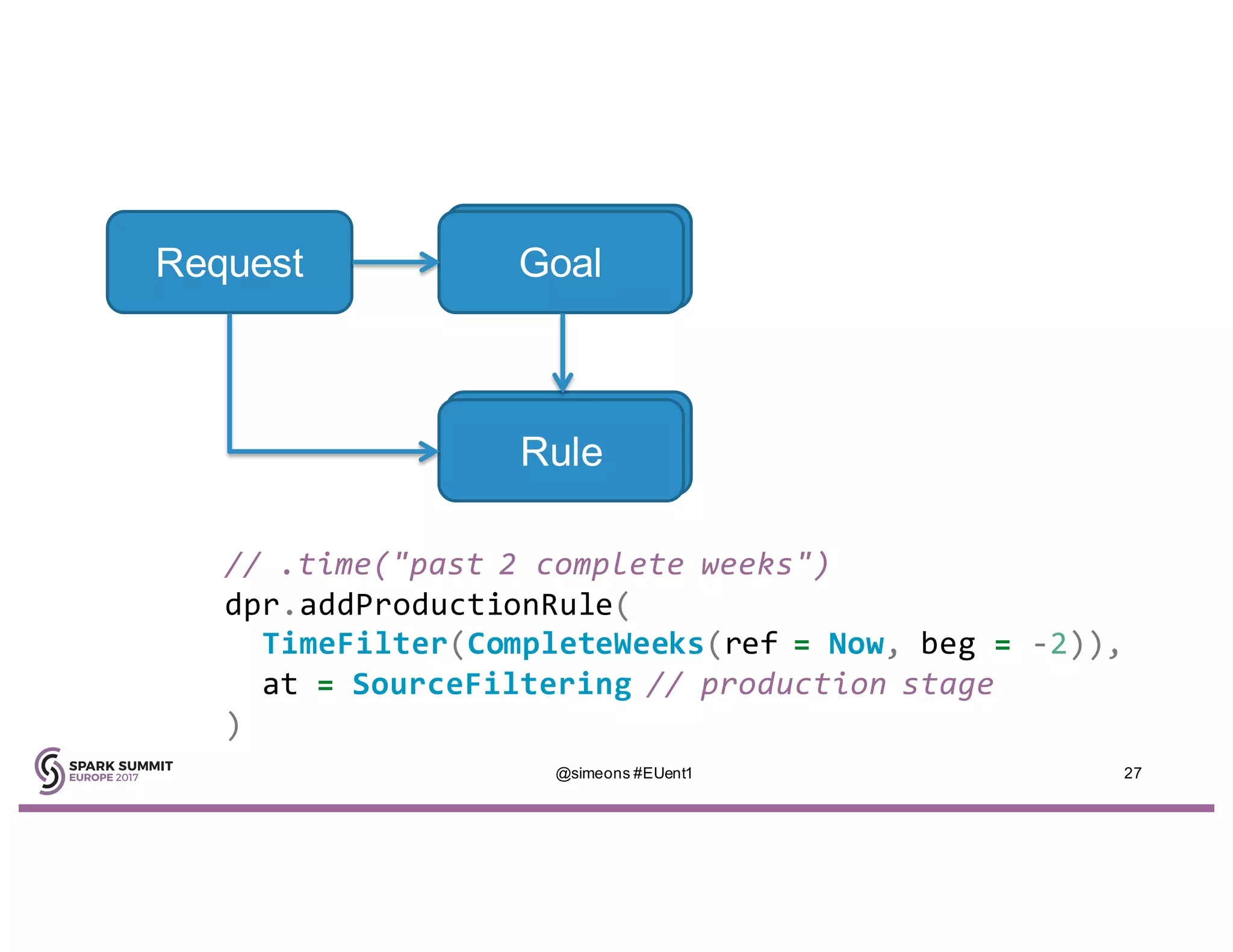

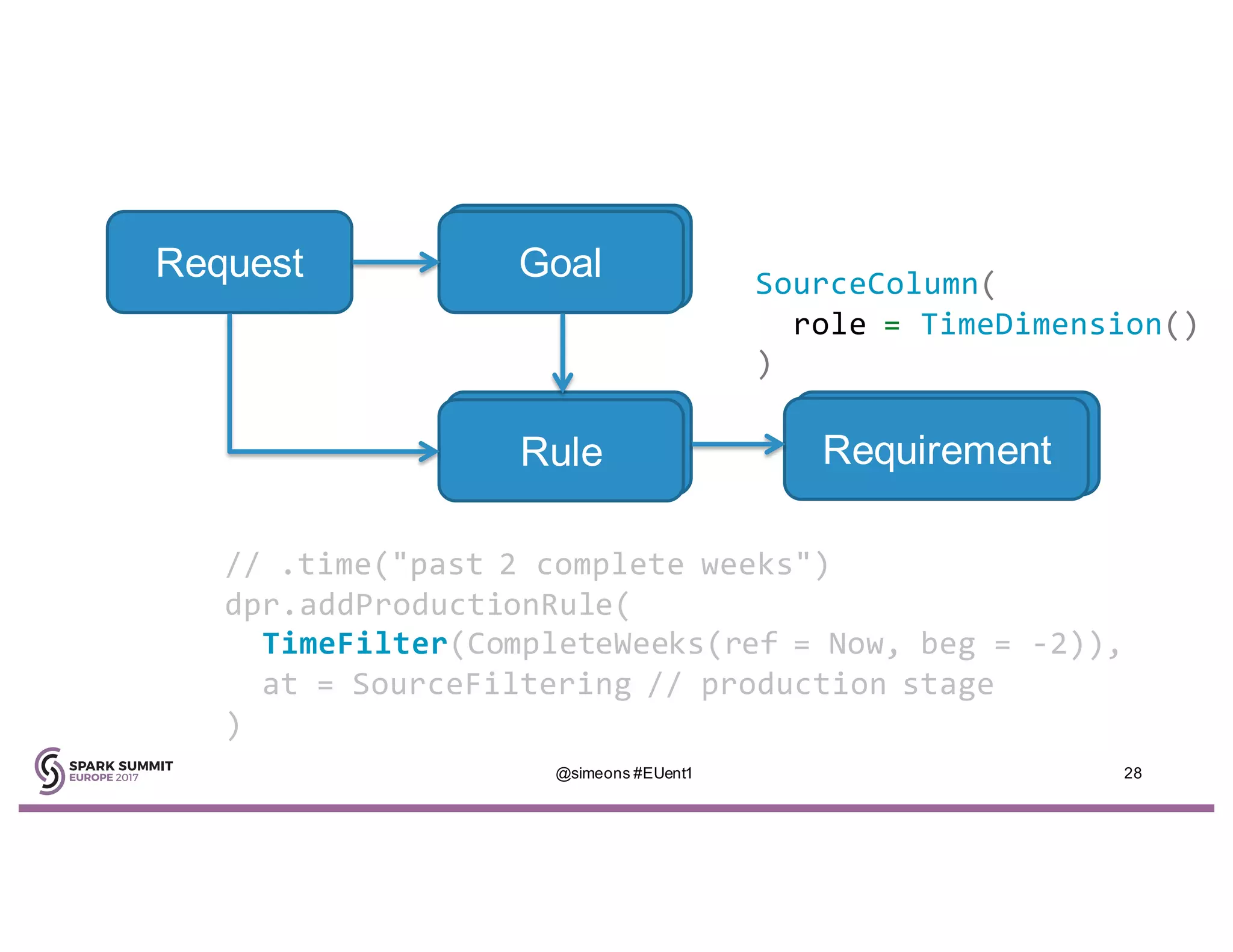

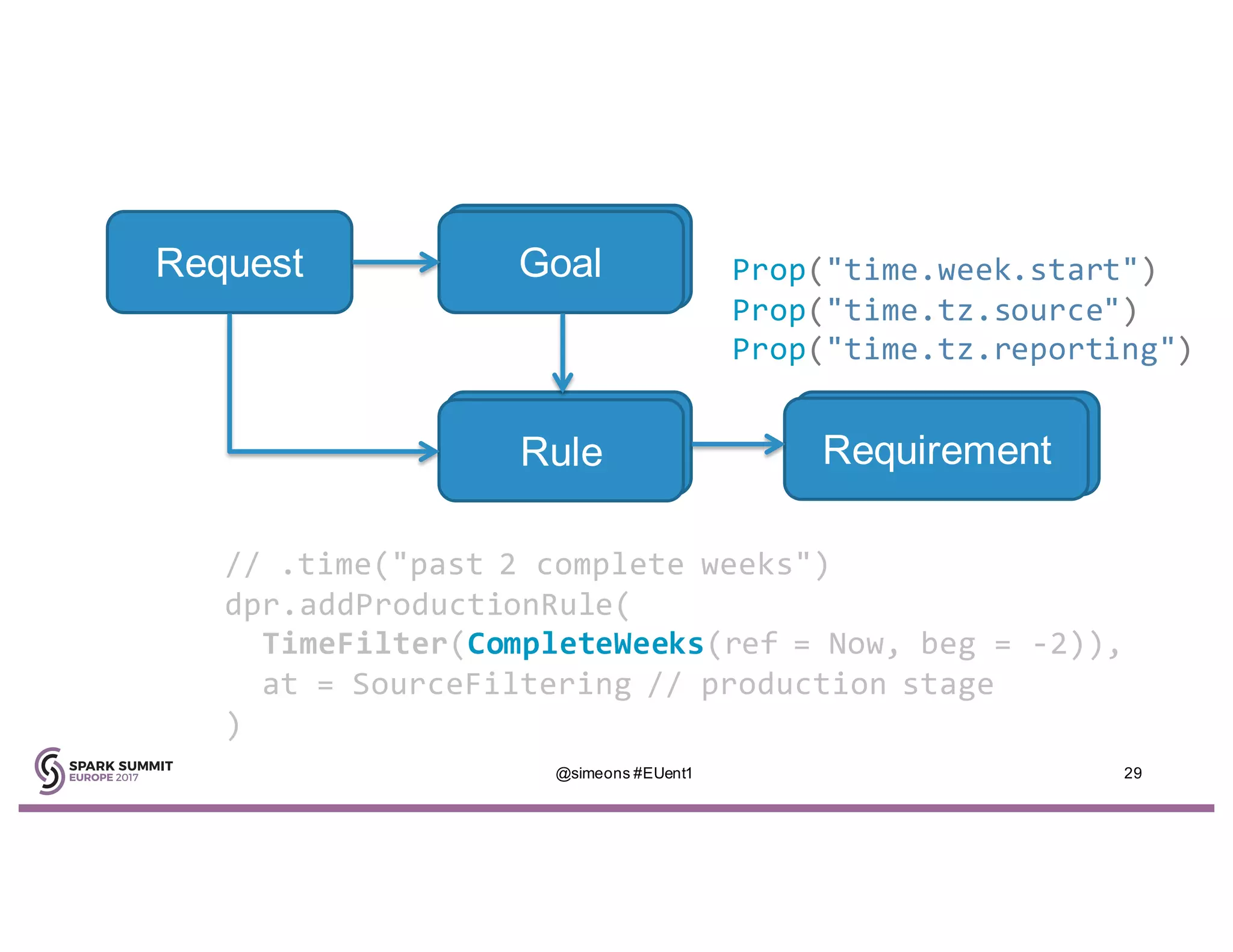

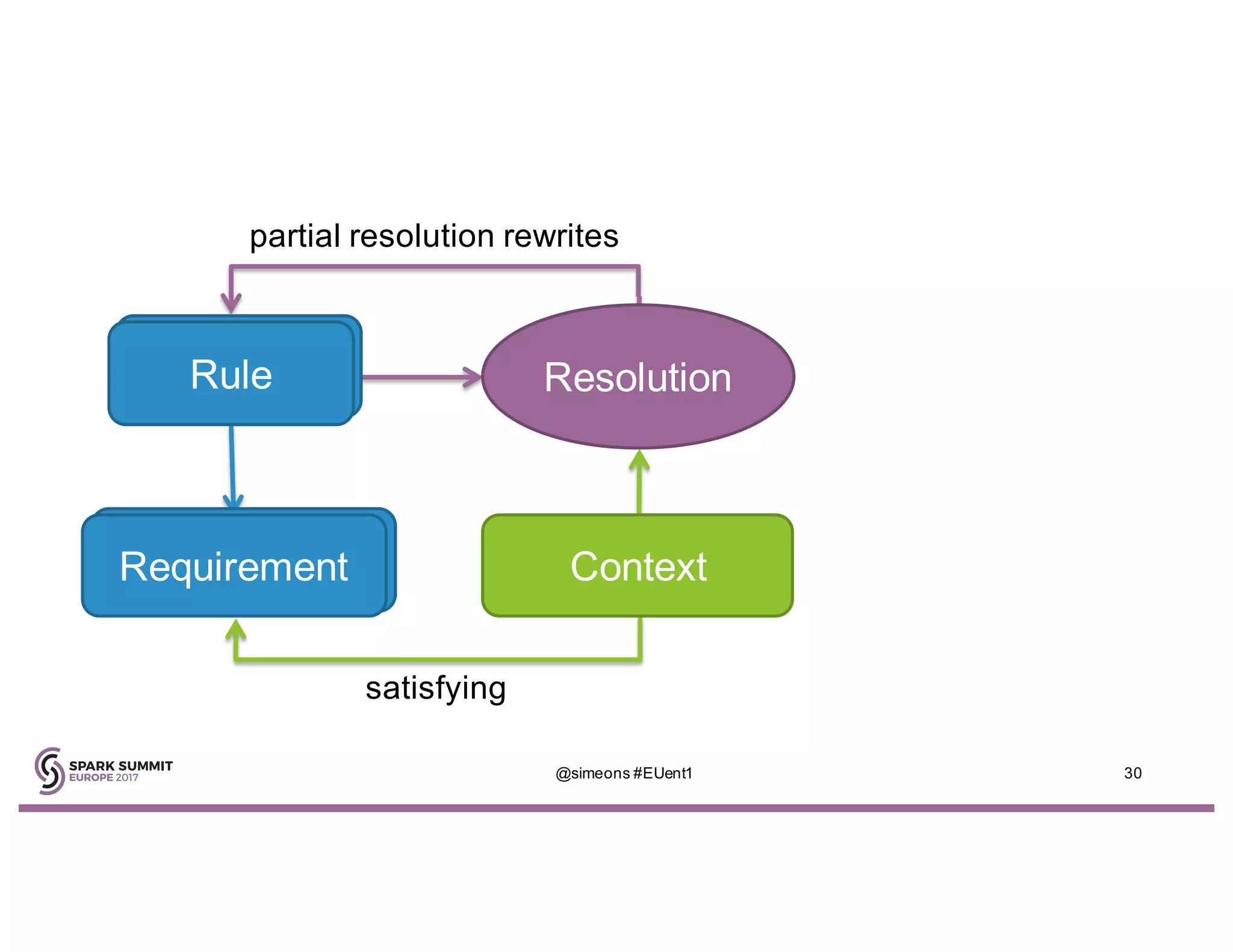

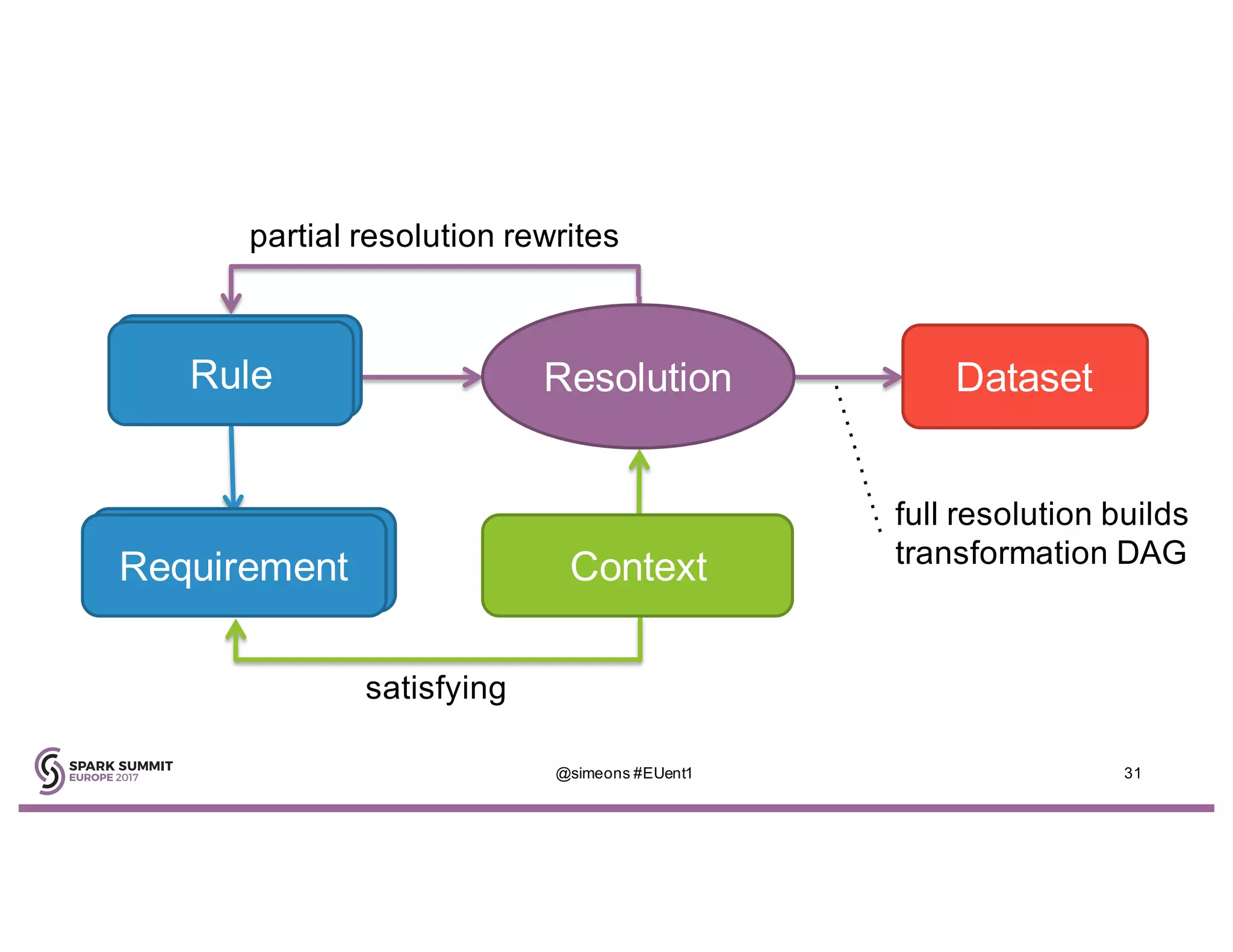

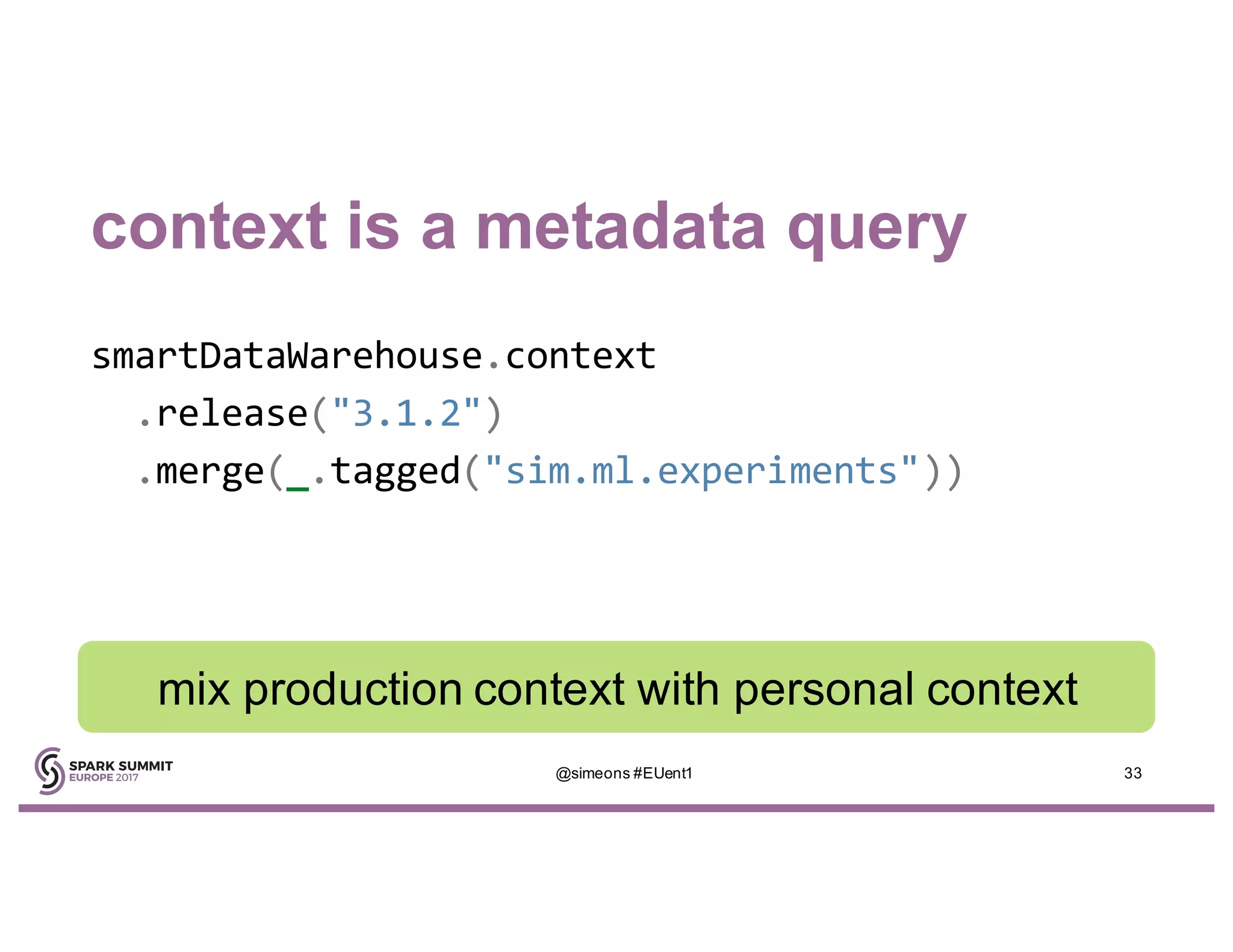

Simeon Simeonov discusses the concept of goal-based data production within the context of a data-powered culture, emphasizing the need for agile data production methods utilizing machine learning and AI. He highlights the complexity and inflexibility of traditional SQL abstractions and the advantages of a generative approach that enhances metadata queries and allows for real-time context resolution. The document advocates for a self-service model for data scientists and business users, aiming to improve productivity, flexibility, and collaboration through open-source solutions.

![Slide 34: automatic joins by column name](https://image.slidesharecdn.com/w21simsimeonov-171102153045/75/Goal-Based-Data-Production-with-Sim-Simeonov-34-2048.jpg)

    {
      "table": "dimension_campaigns",
      "primary_key": ["campaign_id"],
      "joins": [ {"fields": ["campaign_id"]} ]
    }

join any table with a campaign_id column

@simeons #EUent1
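
One way to read this declaration: the `joins` entry names a field, and any table that has a column with that name becomes joinable to `dimension_campaigns`. The Python sketch below illustrates only that resolution step. The `catalog` dictionary, the table names in it, and the `find_joinable_tables` helper are hypothetical and are not taken from the tooling shown in the talk.

```python
import json

# Declaration from the slide, written as valid JSON.
declaration = json.loads("""
{
  "table": "dimension_campaigns",
  "primary_key": ["campaign_id"],
  "joins": [ {"fields": ["campaign_id"]} ]
}
""")

# Hypothetical catalog (table name -> column names), for illustration only.
catalog = {
    "fact_impressions": ["impression_id", "campaign_id", "ts"],
    "fact_clicks": ["click_id", "campaign_id", "ts"],
    "dimension_sites": ["site_id", "domain"],
}


def find_joinable_tables(declaration, catalog):
    """For each join rule, list the tables that contain every field in the rule."""
    matches = {}
    for rule in declaration["joins"]:
        fields = tuple(rule["fields"])
        matches[fields] = sorted(
            table
            for table, columns in catalog.items()
            # Skip the declaring table itself; require all rule fields as columns.
            if table != declaration["table"] and set(fields) <= set(columns)
        )
    return matches


joinable = find_joinable_tables(declaration, catalog)
print(joinable)
```

In this toy catalog the two fact tables match and `dimension_sites` does not, because it has no `campaign_id` column. Matching purely on name is simple, but it presumably depends on consistent column naming across tables.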

![Slide 35: automatic semantic joins](https://image.slidesharecdn.com/w21simsimeonov-171102153045/75/Goal-Based-Data-Production-with-Sim-Simeonov-35-2048.jpg)

    {
      "table": "dimension_campaigns",
      "primary_key": ["campaign_id"],
      "joins": [ {"fields": ["ref:swoop.campaign.id"]} ]
    }

join any table with a Swoop campaign ID
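
The semantic variant can be read as matching on what a column means rather than on what it is called. The Python sketch below assumes that a `ref:` prefix marks a semantic reference and that each column carries a semantic tag in some metadata store. Both are assumptions made for illustration: the `column_semantics` mapping, its table and column names, and the `resolve_semantic_joins` helper are hypothetical and are not confirmed by the slides.

```python
# Declaration from the slide, expressed as a Python dict.
declaration = {
    "table": "dimension_campaigns",
    "primary_key": ["campaign_id"],
    "joins": [{"fields": ["ref:swoop.campaign.id"]}],
}

# Hypothetical metadata: (table, column) -> semantic tag. Illustration only.
column_semantics = {
    ("fact_impressions", "campaign_id"): "swoop.campaign.id",
    ("partner_feed", "cmp"): "swoop.campaign.id",
    ("legacy_orders", "campaign_id"): "legacy.campaign.id",
    ("dimension_sites", "site_id"): "swoop.site.id",
}

REF_PREFIX = "ref:"  # assumed meaning: the field is a semantic reference


def resolve_semantic_joins(declaration, column_semantics):
    """Map each semantic join field to the (table, column) pairs that carry it."""
    resolved = {}
    for rule in declaration["joins"]:
        for field in rule["fields"]:
            if not field.startswith(REF_PREFIX):
                continue  # plain column names are outside this sketch
            target = field[len(REF_PREFIX):]
            resolved[field] = sorted(
                (table, column)
                for (table, column), semantic in column_semantics.items()
                if semantic == target and table != declaration["table"]
            )
    return resolved


semantic_matches = resolve_semantic_joins(declaration, column_semantics)
print(semantic_matches)
```

Here `partner_feed.cmp` matches even though its column name differs, while `legacy_orders.campaign_id` does not match despite sharing the name, which is the distinction the slide draws between name-based and semantic joins.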