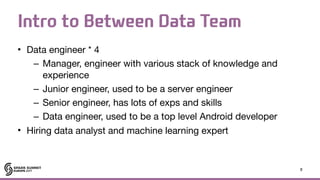

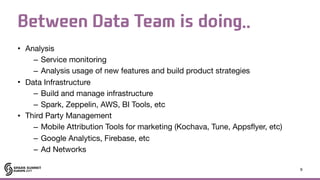

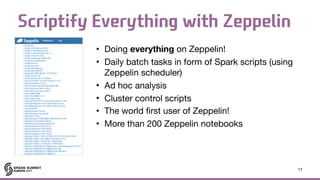

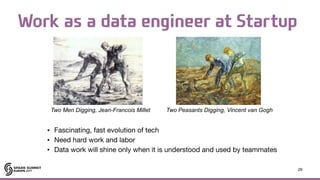

The document discusses the evolution of a startup called 'Between', led by Kevin Kim, detailing its growth from 100 beta users in 2011 to 20 million downloads by 2016. It highlights the team's use of Apache Spark for big data processing, the infrastructure they have built, and their current hiring needs for data analysts and machine learning experts. The document also emphasizes the importance of data analysis for product development and marketing strategies, along with the ongoing challenges and future plans for expanding operations.