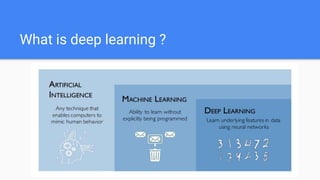

The document provides an overview of deep learning, including:

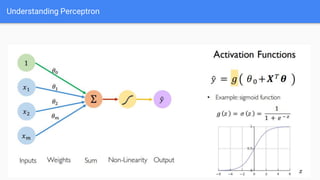

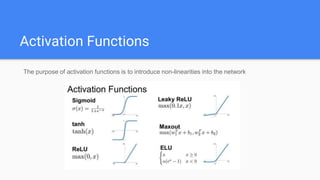

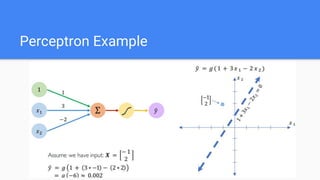

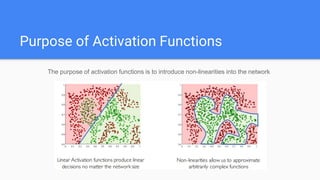

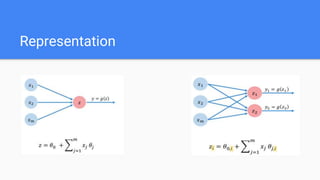

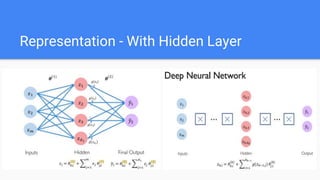

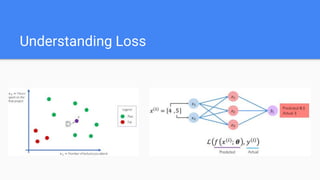

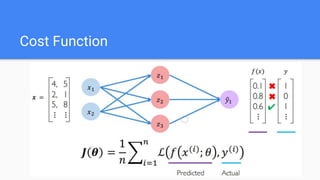

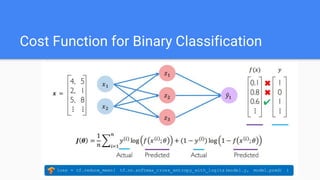

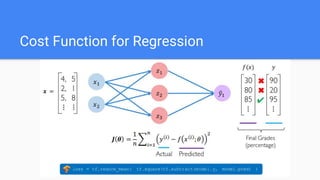

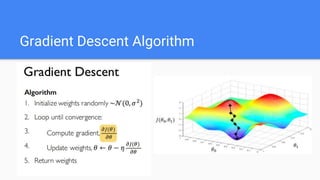

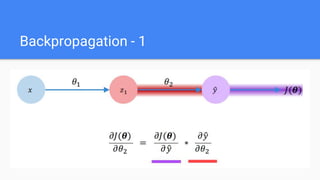

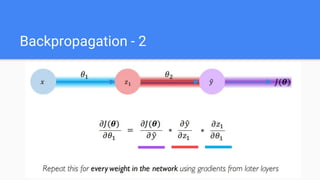

- An introduction to deep learning concepts like perceptrons, neural networks, forward and back propagation, and activation functions.

- How deep learning can be applied to problems in computer vision, text processing, audio, and unstructured data.

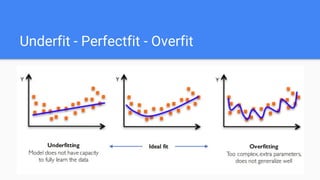

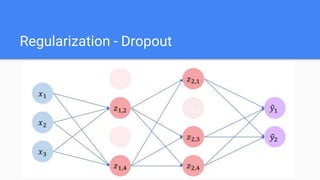

- The importance of regularization techniques like dropout and batch normalization for reducing overfitting in neural networks.

- The large amounts of data and compute power that deep learning requires to be effective.
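
To make the concepts in the list above more concrete, here is a minimal sketch in plain Python. It is not taken from the original slides. It assumes a single sigmoid neuron (a perceptron-style unit) trained on the logical AND function with a cross-entropy loss, and all names (`forward`, `train`, `dropout`, `AND_GATE`) are hypothetical choices for illustration. It shows forward propagation, the gradient update that back propagation reduces to for one neuron, and inverted dropout as one common way to implement dropout.

```python
import math
import random


def sigmoid(z):
    """Activation function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(weights, bias, inputs):
    """Forward propagation: weighted sum of the inputs, then the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def train(samples, epochs=5000, lr=0.5, seed=0):
    """Train one neuron with stochastic gradient descent.

    Assumes a sigmoid output with cross-entropy loss, for which the
    gradient of the loss with respect to the pre-activation z is simply
    (output - target).
    """
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in samples[0][0]]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            output = forward(weights, bias, inputs)
            delta = output - target  # dLoss/dz for this sample
            # Chain rule: dLoss/dw_i = delta * x_i, dLoss/dbias = delta
            weights = [w - lr * delta * x for w, x in zip(weights, inputs)]
            bias -= lr * delta
    return weights, bias


def dropout(activations, rate, rng):
    """Inverted dropout, applied during training only.

    Each activation is zeroed with probability `rate` (must be < 1);
    survivors are scaled by 1 / (1 - rate) so the expected value is unchanged.
    """
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


# Illustrative data set: the logical AND of two binary inputs.
AND_GATE = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

weights, bias = train(AND_GATE)
predictions = [round(forward(weights, bias, x)) for x, _ in AND_GATE]
```

A multi-layer network repeats the same two steps per layer: activations flow forward, and the `delta` term is propagated backward through each layer's weights via the chain rule. Dropout would then be applied to hidden-layer activations during training and skipped at inference time.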