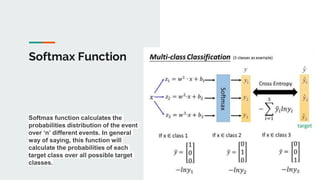

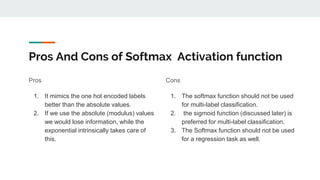

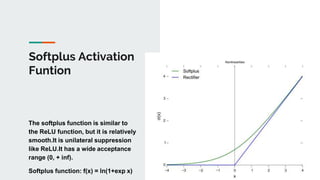

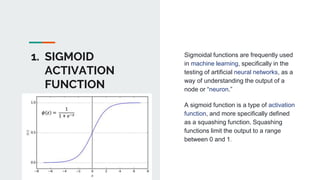

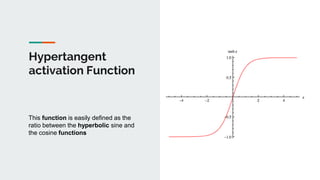

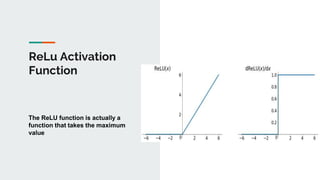

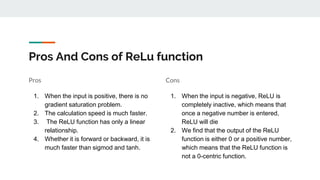

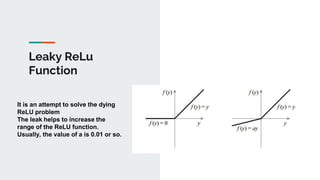

The document discusses activation functions commonly used in deep learning neural networks, including sigmoid, tanh, ReLU, LeakyReLU, ELU, softmax, swish, maxout, and softplus. For each function, it explains how the function works and lists its pros and cons. Overall, it gives an overview of the common activation functions and of the considerations for choosing one for different types of deep learning problems.
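As a compact reference for the functions listed above, here is a minimal scalar sketch in plain Python. The default hyperparameters (LeakyReLU slope 0.01, ELU α = 1, swish β = 1) are common choices assumed here, not values taken from the slides. `maxout` is shown in a simplified single-input form whose weights and biases are hypothetical, illustrative parameters.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, output in (0, 1); the branches avoid exp overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def tanh(x: float) -> float:
    """Hyperbolic tangent, zero-centred output in (-1, 1)."""
    return math.tanh(x)


def relu(x: float) -> float:
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)


def leaky_relu(x: float, slope: float = 0.01) -> float:
    """ReLU with a small slope for negative inputs (slope is an assumed default)."""
    return x if x > 0 else slope * x


def elu(x: float, alpha: float = 1.0) -> float:
    """Exponential linear unit: x for x > 0, alpha * (e^x - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def swish(x: float, beta: float = 1.0) -> float:
    """Swish: x * sigmoid(beta * x); beta = 1 is an assumed default."""
    return x * sigmoid(beta * x)


def softplus(x: float) -> float:
    """ln(1 + e^x), rewritten so it does not overflow for large x."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def softmax(xs: list[float]) -> list[float]:
    """Turns a vector of scores into probabilities that sum to 1."""
    m = max(xs)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [v / total for v in exps]


def maxout(x: float, weights: list[float], biases: list[float]) -> float:
    """Simplified maxout: the largest of k affine pieces w_i * x + b_i (hypothetical params)."""
    return max(w * x + b for w, b in zip(weights, biases))
```

Softmax differs from the others in that it acts on a whole vector of scores rather than on one value at a time, which is why it is typically used on the output layer of a classifier.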

![Pros and cons of the ELU activation function](https://image.slidesharecdn.com/vkkajcdqeugo0amsntq9-signature-d6c9c09aa3528af14349e65e2ed4ff95984195ec14b5eb58eafc662914ccb582-poli-200520153327/85/Activation-functions-11-320.jpg)

Pros and cons of the ELU activation function

Pros

1. ELU saturates smoothly: for negative inputs its output approaches -α gradually, whereas ReLU has a sharp corner at zero.

2. ELU is a strong alternative to ReLU.

3. Unlike ReLU, ELU can produce negative outputs.

Cons

1. For x > 0, ELU is the identity, so its output is unbounded (range (0, ∞)) and the activation can blow up.
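To make these pros and cons concrete, here is a minimal sketch assuming the usual ELU definition, f(x) = x for x > 0 and f(x) = α(e^x - 1) for x ≤ 0 (the slide itself does not state the formula). It evaluates ELU at a few negative inputs to show the gradual approach to -α, compares the slopes of ReLU and ELU just either side of zero, and shows that positive inputs pass through unchanged. The helper names and α = 1 are illustrative choices, not taken from the slides.

```python
import math


def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: x for x > 0, alpha * (exp(x) - 1) for x <= 0."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def elu_grad(x: float, alpha: float = 1.0) -> float:
    """Derivative of ELU: 1 for x > 0, alpha * exp(x) for x <= 0."""
    return 1.0 if x > 0 else alpha * math.exp(x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU, taking 0 at x == 0 by convention."""
    return 1.0 if x > 0 else 0.0


if __name__ == "__main__":
    alpha = 1.0
    # Pros 1 and 3: negative inputs give negative outputs that creep toward -alpha.
    for x in (-0.5, -2.0, -10.0):
        print(f"elu({x}) = {elu(x, alpha):.5f}")
    # Around zero, ReLU's slope jumps from 0 to 1; with alpha = 1 ELU's stays near 1.
    eps = 1e-6
    print("relu slopes:", relu_grad(-eps), relu_grad(eps))
    print("elu slopes: ", elu_grad(-eps, alpha), elu_grad(eps, alpha))
    # Con 1: for x > 0 ELU is the identity, so large inputs pass through unbounded.
    print("elu(1e6) =", elu(1e6, alpha))
```

With α = 1 the ELU slope is continuous at zero; for other values of α the function is still continuous, but its slope jumps from α to 1 there.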