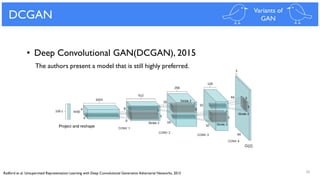

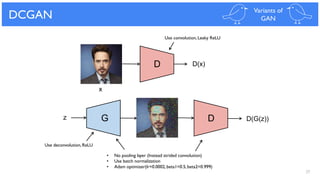

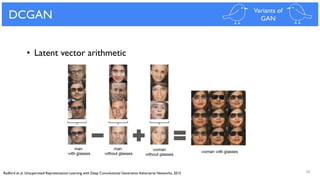

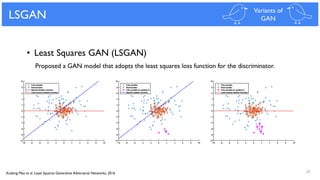

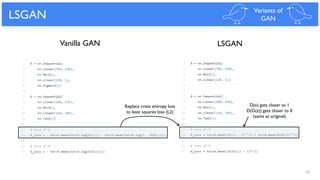

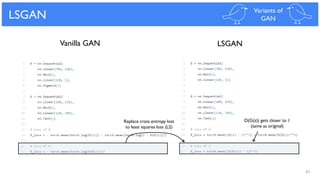

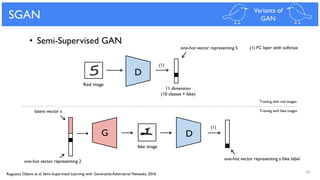

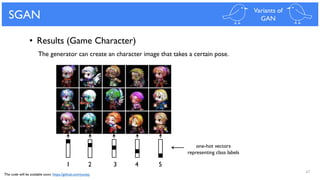

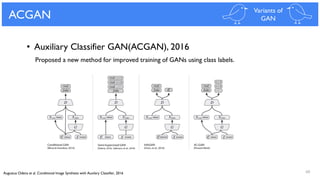

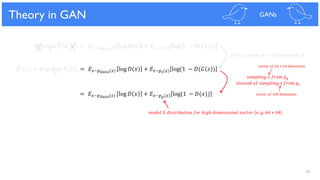

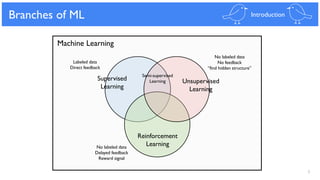

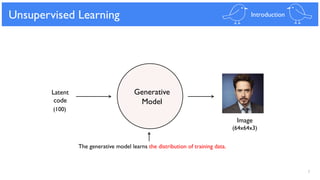

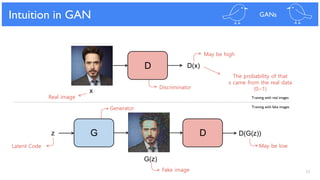

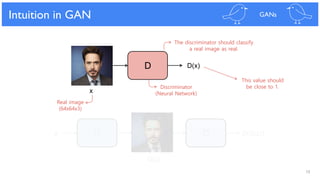

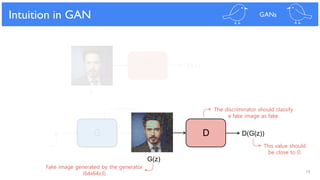

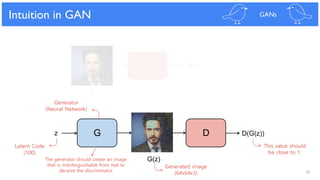

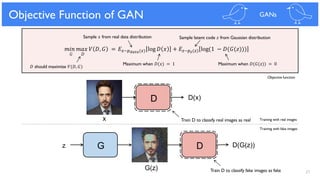

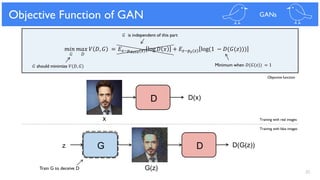

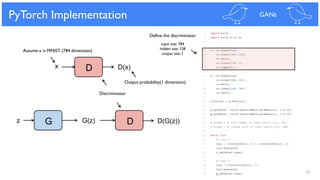

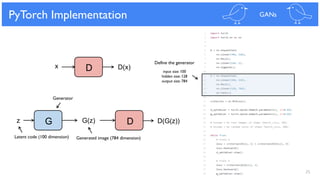

Generative Adversarial Networks (GANs) are a type of deep learning model used for unsupervised machine learning tasks such as image generation. A GAN trains two neural networks, a generator and a discriminator, against each other: the generator creates synthetic images, and the discriminator tries to distinguish real images from generated ones. As training proceeds, the generator gets better at producing realistic images that fool the discriminator. The document discusses the intuition behind GANs, provides a PyTorch implementation example, and describes variants such as DCGAN, LSGAN, and semi-supervised GANs.
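To make the competition concrete, here is a minimal sketch of how the two networks are scored against each other. It assumes the standard minimax value function V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], which the summary does not spell out; the function name `value_function` and the sample discriminator outputs are hypothetical, chosen only for illustration. The discriminator tries to push V up, and the generator tries to push it down.

```python
import math


def value_function(d_real, d_fake):
    """Estimate V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))] from samples.

    d_real: discriminator outputs on real images, each in (0, 1)
    d_fake: discriminator outputs on generated images, each in (0, 1)
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# Hypothetical discriminator outputs, made up for illustration only.
# Case 1: the discriminator separates real from generated images well.
sharp = value_function(d_real=[0.9, 0.95, 0.8], d_fake=[0.1, 0.05, 0.2])
# Case 2: the generator fools the discriminator, which outputs 0.5 everywhere.
fooled = value_function(d_real=[0.5, 0.5, 0.5], d_fake=[0.5, 0.5, 0.5])

print(f"discriminator winning: V = {sharp:.3f}")
print(f"discriminator fooled:  V = {fooled:.3f}")
```

When the discriminator can do no better than output 0.5 for every input, V equals -log 4, which is the value the generator is driving toward.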

![33

Solution for Poor Gradient

𝐺

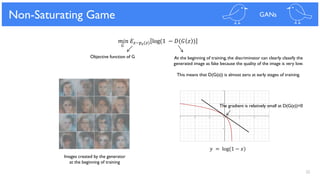

𝑚𝑎𝑥 𝐸𝑧~𝑝 𝑧(𝑧) log 𝐷(𝐺 𝑧 )

𝐺

𝑚𝑖𝑛 𝐸𝑧~𝑝 𝑧(𝑧) log(1 − 𝐷(𝐺 𝑧 )

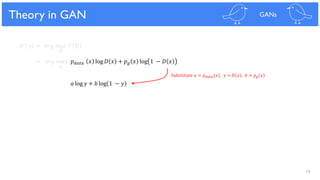

Non-Saturating Game GANs

𝑦 = log(𝑥)

The gradient is very large at D(G(z))=0

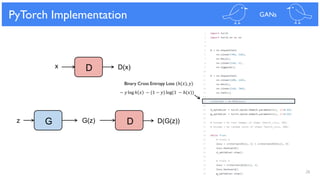

Use binary cross entropy loss function with fake label (1)

𝑚𝑖𝑛 𝐸𝑧~𝑝 𝑧(𝑧)[−𝑦 log 𝐷(𝐺 𝑧 ) − 1 − 𝑦 log(1 − 𝐷(𝐺 𝑧 )]

𝑚𝑖𝑛 𝐸𝑧~𝑝 𝑧(𝑧)[− log 𝐷 𝐺 𝑧 ]

𝐺

𝐺

𝑦 = 1

Modification (heuristically motivated)

• Practical Usage](https://image.slidesharecdn.com/generativeadversarialnetworks-170512104624/85/Generative-adversarial-networks-33-320.jpg)
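The gradient claim on this slide can be checked numerically. The sketch below uses plain Python rather than PyTorch so that it runs without dependencies; the function names are hypothetical and not taken from the slides. It compares the derivative of each generator loss with respect to the discriminator output D(G(z)), and confirms that binary cross entropy with label y = 1 is the same as the non-saturating loss.

```python
import math


def saturating_loss(d):
    """Original generator loss log(1 - D(G(z))), to be minimized."""
    return math.log(1.0 - d)


def non_saturating_loss(d):
    """Modified generator loss -log D(G(z)), to be minimized."""
    return -math.log(d)


def grad_saturating(d):
    # d/dD log(1 - D) = -1 / (1 - D): stays near -1 when D is close to 0.
    return -1.0 / (1.0 - d)


def grad_non_saturating(d):
    # d/dD (-log D) = -1 / D: grows without bound as D approaches 0.
    return -1.0 / d


def bce(d, y):
    """Binary cross entropy for one discriminator output d and label y."""
    return -y * math.log(d) - (1.0 - y) * math.log(1.0 - d)


# d is the discriminator's output on a generated sample, D(G(z)).
for d in (0.001, 0.1, 0.5, 0.9):
    print(
        f"D(G(z))={d:<6} "
        f"saturating grad={grad_saturating(d):10.3f}  "
        f"non-saturating grad={grad_non_saturating(d):10.3f}"
    )

# With the fake sample labeled as real (y = 1), BCE is exactly -log D(G(z)).
print(bce(0.3, 1.0), non_saturating_loss(0.3))
```

Early in training the discriminator rejects generated samples easily, so D(G(z)) sits near 0. That is the region where the modified loss has a much larger gradient with respect to D(G(z)) than the original one.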