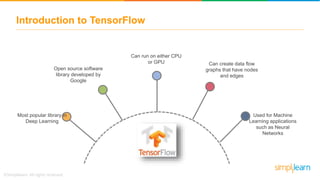

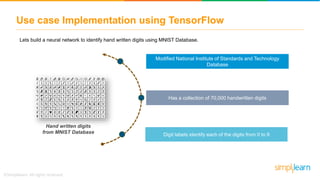

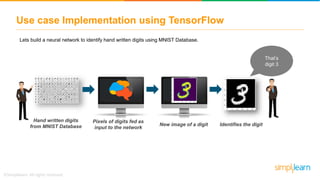

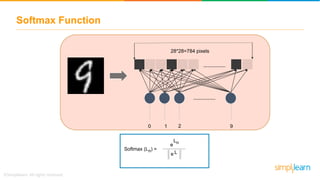

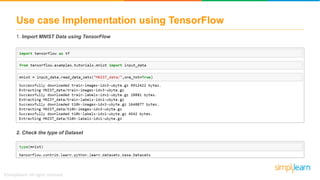

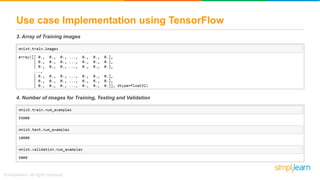

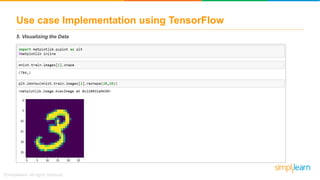

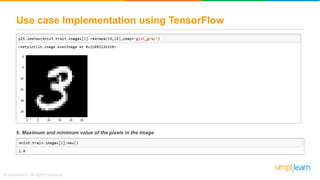

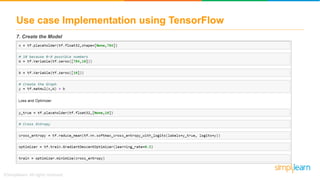

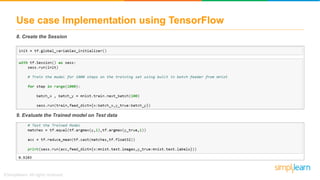

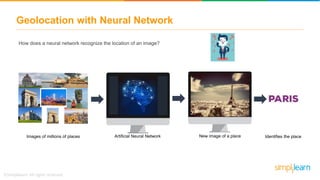

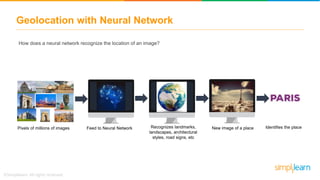

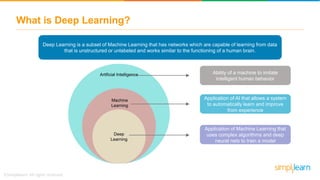

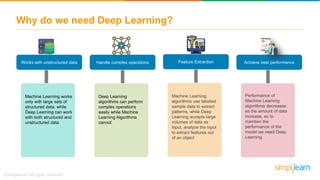

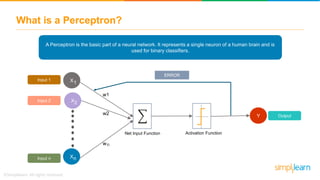

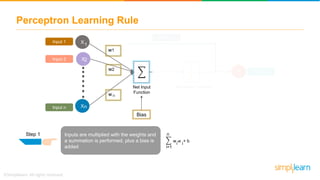

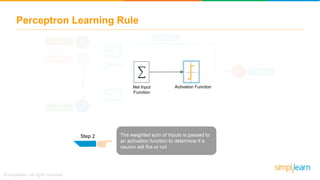

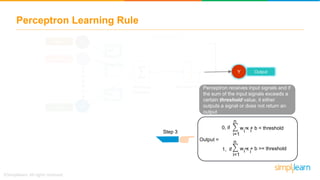

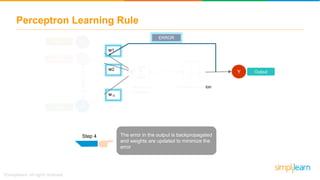

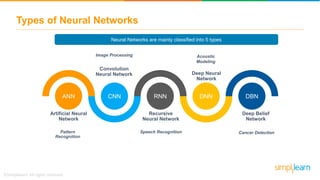

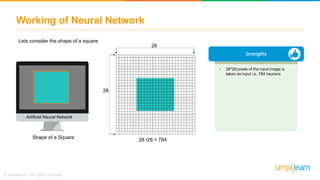

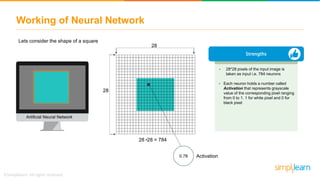

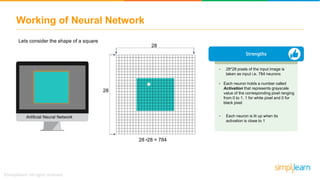

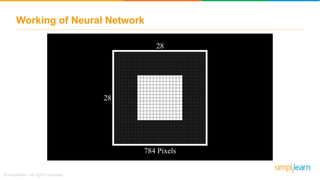

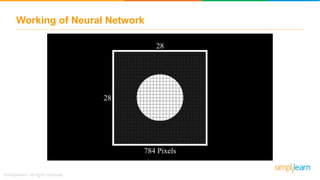

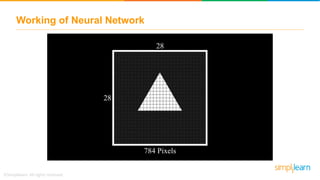

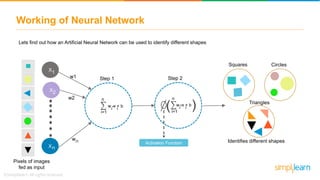

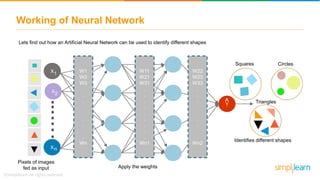

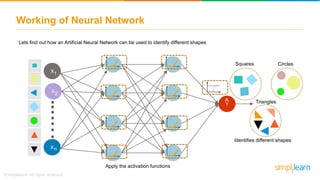

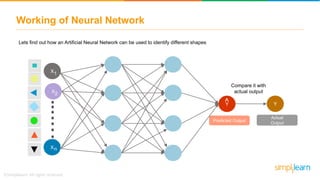

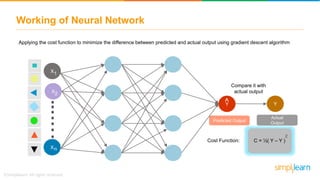

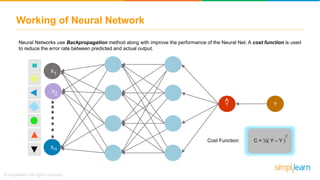

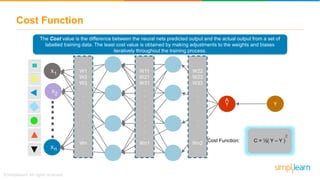

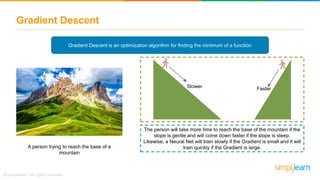

The document discusses how neural networks infer where an image was taken (geolocation) by processing vast image datasets to identify landmarks and architectural features. It explains the structure and function of neural networks, including the perceptron model and its ability to perform logical operations. It also covers applications of deep learning, how neural networks are implemented, and TensorFlow as a tool for building them.

![Introduction to TensorFlow

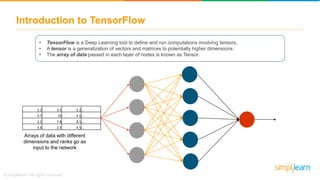

• TensorFlow is a Deep Learning tool to define and run computations involving tensors.

• A tensor is a generalization of vectors and matrices to potentially higher dimensions.

• The array of data passed in each layer of nodes is known as Tensor.

a

m

k

q

d

2

4

8

1

1

9

3

2

5

4

4

6

6

3

3

7

8

2

9

5

Tensor of Dimensions[5] Tensor of Dimensions[5,4]Tensor of Dimension[3,3,3]](https://image.slidesharecdn.com/deeplearningtutorialdeeplearningtensorflowdeeplearningwithneuralnetworkssimplilearn-180427065503/85/Deep-Learning-Tutorial-Deep-Learning-TensorFlow-Deep-Learning-With-Neural-Networks-Simplilearn-59-320.jpg)
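To make the dimension labels on this slide concrete, here is a minimal sketch in plain Python that treats a tensor as a regularly nested list and reads off its dimensions. The helper name `shape` and the placeholder values are assumptions for illustration and do not come from the slides; TensorFlow itself is deliberately not used so the snippet runs without any dependencies.

```python
def shape(tensor):
    """Return the dimensions of a regularly nested list, e.g. [5, 4].

    Hypothetical helper: assumes every sub-list at a given depth has
    the same length, so only the first element is inspected.
    """
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        if not tensor:  # an empty list has no further nesting to inspect
            break
        tensor = tensor[0]
    return dims


# Placeholder data with the three shapes named on the slide.
vector = ["a", "m", "k", "q", "d"]                       # 5 elements
matrix = [[0] * 4 for _ in range(5)]                     # 5 rows, 4 columns
cube = [[[0] * 3 for _ in range(3)] for _ in range(3)]   # 3 x 3 x 3

print(shape(vector))  # [5]
print(shape(matrix))  # [5, 4]
print(shape(cube))    # [3, 3, 3]
```

Each entry in the returned list is the length along one axis, so the number of entries grows by one with every extra level of nesting.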

![Tensor Ranks](https://image.slidesharecdn.com/deeplearningtutorialdeeplearningtensorflowdeeplearningwithneuralnetworkssimplilearn-180427065503/85/Deep-Learning-Tutorial-Deep-Learning-TensorFlow-Deep-Learning-With-Neural-Networks-Simplilearn-61-320.jpg)

• Tensor of rank 0 (scalar): s = 107

• Tensor of rank 1 (vector): v = [10,20,30]

• Tensor of rank 2 (matrix): m = [[1,2,3],[4,5,6]]

• Tensor of rank 3: t = [[[1],[2],[3]],[[4],[5],[6]],[[7],[8],[9]]]
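The rank of each example can be checked by counting levels of nesting. The sketch below does this in plain Python; the helper name `rank` is an assumption for illustration, and the scalar is written as a bare number on the assumption that the slide intends a rank 0 value.

```python
def rank(tensor):
    """Count how many levels of list nesting a value has.

    Hypothetical helper: a bare number has rank 0, a flat list rank 1,
    a list of lists rank 2, and so on. Only the first element at each
    level is followed, so the nesting is assumed to be regular.
    """
    depth = 0
    while isinstance(tensor, list):
        depth += 1
        if not tensor:  # empty list: nothing deeper to follow
            break
        tensor = tensor[0]
    return depth


s = 107                                    # scalar
v = [10, 20, 30]                           # vector
m = [[1, 2, 3], [4, 5, 6]]                 # matrix
t = [[[1], [2], [3]], [[4], [5], [6]], [[7], [8], [9]]]

for name, value in [("s", s), ("v", v), ("m", m), ("t", t)]:
    print(name, "has rank", rank(value))
```

Note that wrapping the scalar in brackets, as in `[107]`, would make it a one-element vector of rank 1 under this counting. With TensorFlow installed, `tf.rank(tf.constant(t))` should report the same ranks; the plain Python version is used here only to stay dependency-free.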