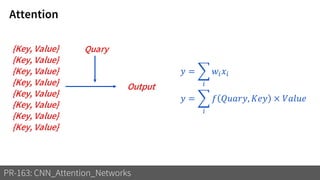

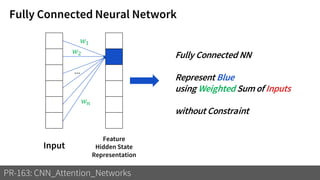

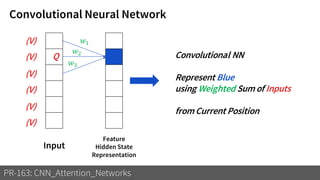

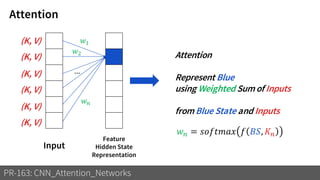

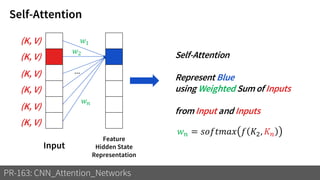

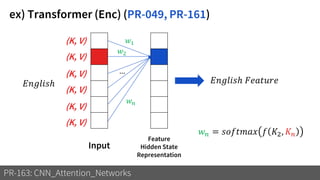

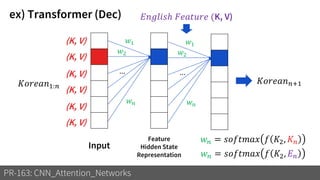

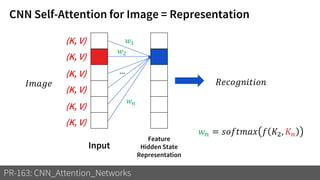

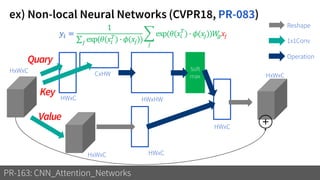

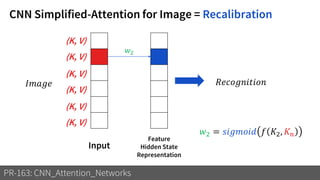

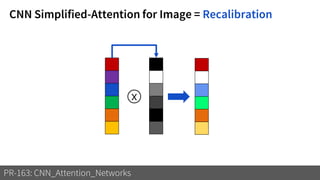

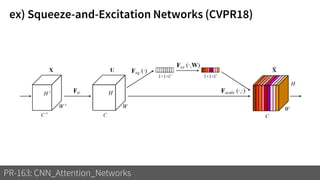

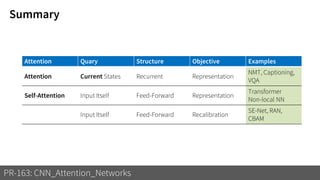

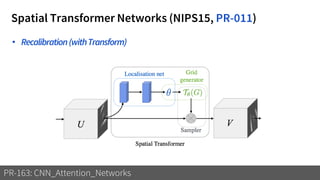

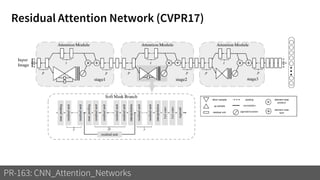

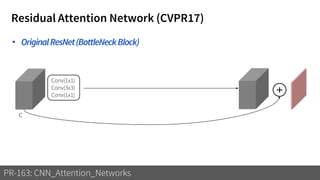

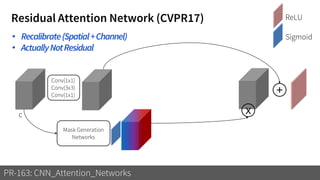

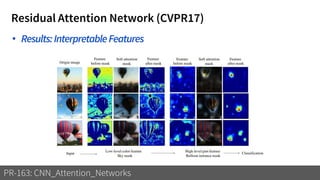

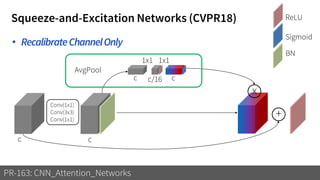

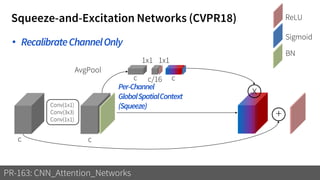

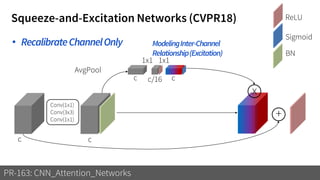

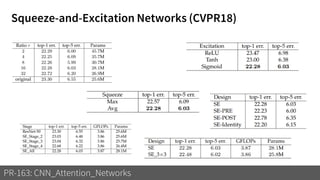

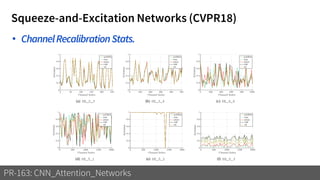

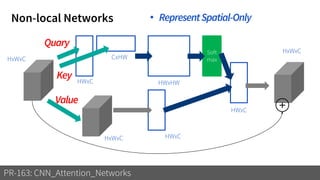

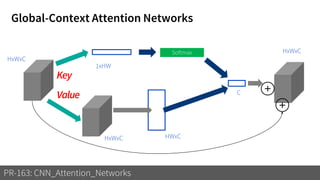

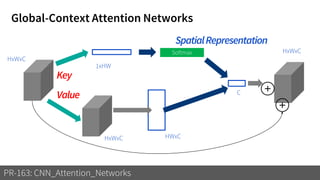

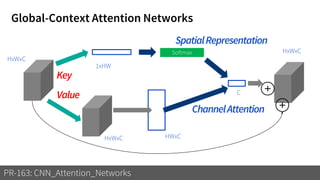

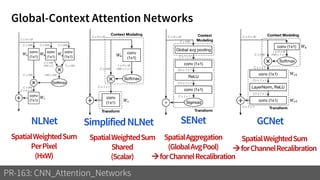

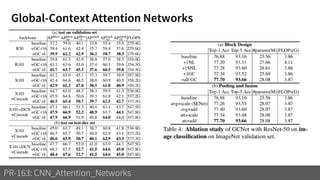

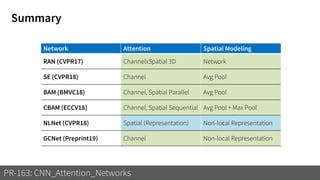

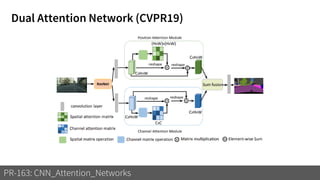

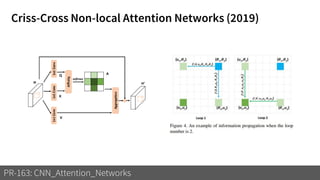

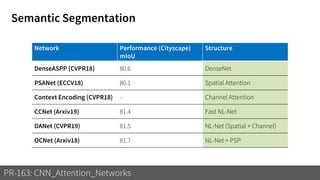

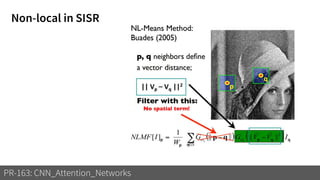

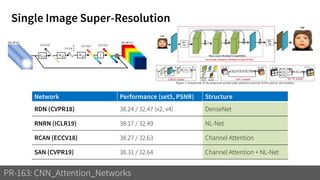

1) The document discusses several types of attention mechanisms in CNNs, including self-attention and a simplified form of attention used for feature recalibration.
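Below is a minimal pure-Python sketch of the two attention ideas named in point 1. It assumes a feature map flattened to N spatial positions by C channels (an N x C matrix). The projection and bottleneck weights are hypothetical stand-ins for learned parameters. The recalibration function follows the squeeze-and-excitation pattern (global average pooling, a two-layer bottleneck, sigmoid gates). That pattern is one common form of channel recalibration and may not be the exact variant the slides describe.

```python
import math

def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n); both are lists of lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    s = sum(exps)
    return [e / s for e in exps]

def spatial_self_attention(x, wq, wk, wv):
    """Self-attention over the N spatial positions of a feature map.

    x: N x C matrix (one row per position, i.e. H*W flattened).
    wq, wk: C x d query/key projections; wv: C x C value projection
    (hypothetical weights standing in for learned parameters).
    Each output row is a softmax-weighted sum of the value rows of all positions.
    """
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d = len(wq[0])
    kt = [list(col) for col in zip(*k)]  # transpose K to d x N
    scores = matmul(q, kt)               # N x N similarity between positions
    attn = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(attn, v)               # N x C

def channel_recalibration(x, w1, w2):
    """Squeeze-and-excitation style channel gating on an N x C feature map.

    w1: C x r and w2: r x C are the bottleneck weights (hypothetical).
    """
    n, c = len(x), len(x[0])
    squeezed = [[sum(row[j] for row in x) / n for j in range(c)]]  # 1 x C global average pool
    hidden = [[max(0.0, h) for h in matmul(squeezed, w1)[0]]]      # ReLU
    gates = [1.0 / (1.0 + math.exp(-g)) for g in matmul(hidden, w2)[0]]  # sigmoid, in (0, 1)
    return [[val * gate for val, gate in zip(row, gates)] for row in x]   # rescale each channel
```

Self-attention lets every position draw on every other position, at a cost quadratic in N. The recalibration variant keeps only a per-channel weight computed from pooled statistics, which is why it is much cheaper.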

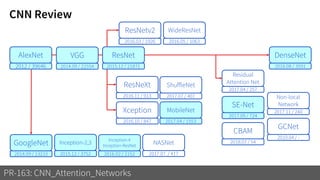

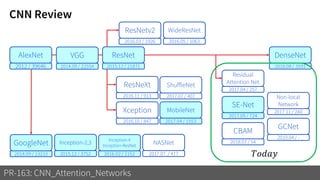

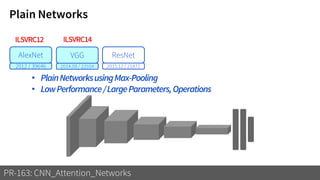

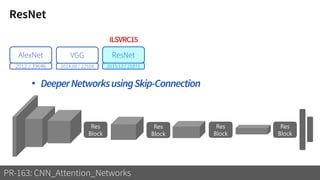

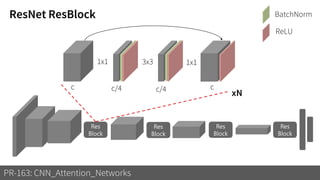

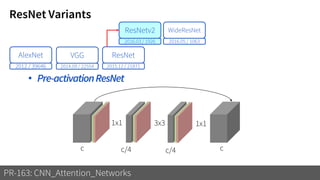

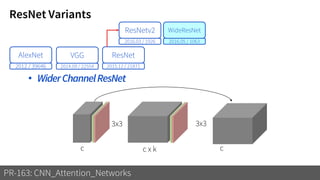

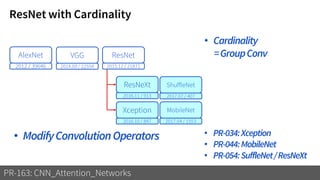

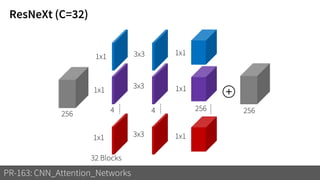

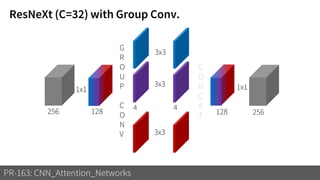

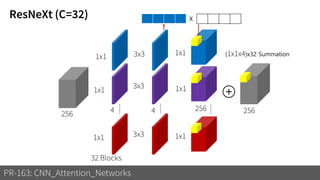

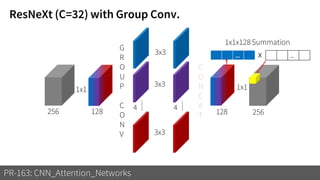

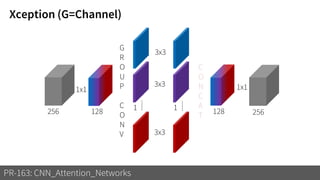

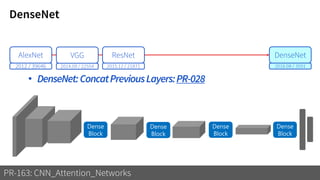

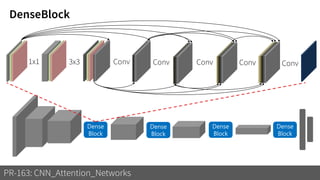

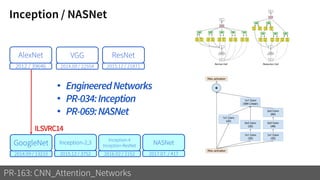

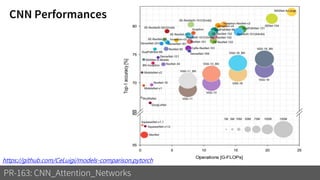

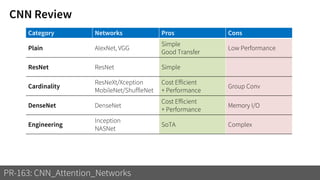

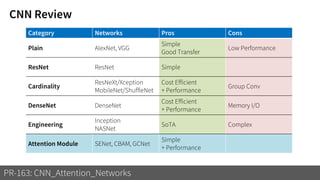

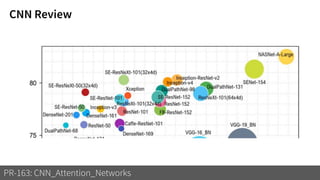

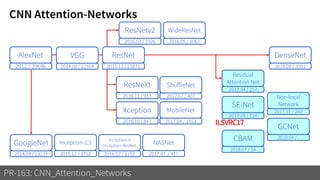

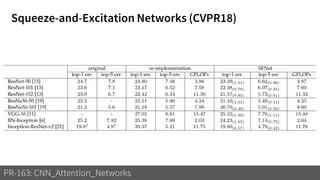

2) It reviews the evolution of CNN architectures, including AlexNet, VGG, ResNet and its variants, DenseNet, ResNeXt, Xception, MobileNet and ShuffleNet.
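Several architectures in point 2 differ mainly in one structural idea. The pure-Python sketch below illustrates four of them, assuming the standard formulations from the original papers:

- an identity shortcut (ResNet);
- grouped convolution (ResNeXt, ShuffleNet);
- depthwise separable convolution (Xception, MobileNet);
- channel shuffle (ShuffleNet).

Biases, batch normalization and spatial dimensions are ignored. The residual function `f` is a hypothetical placeholder for the block's convolutional layers.

```python
def conv_params(k, c_in, c_out, groups=1):
    """Weights in a k x k convolution (bias ignored); groups > 1 gives a grouped conv."""
    assert c_in % groups == 0 and c_out % groups == 0
    return k * k * (c_in // groups) * c_out

def depthwise_separable_params(k, c_in, c_out):
    """Depthwise k x k conv (one filter per input channel) followed by a 1 x 1 pointwise conv."""
    return conv_params(k, c_in, c_in, groups=c_in) + conv_params(1, c_in, c_out)

def channel_shuffle(channels, groups):
    """ShuffleNet-style shuffle: view channels as (groups, C/groups), transpose, flatten."""
    assert len(channels) % groups == 0
    n = len(channels) // groups
    grid = [channels[g * n:(g + 1) * n] for g in range(groups)]
    return [grid[g][i] for i in range(n) for g in range(groups)]

def residual_block(x, f):
    """Identity shortcut as in the original ResNet: output = ReLU(F(x) + x), element-wise."""
    return [max(0.0, fx + xi) for fx, xi in zip(f(x), x)]
```

The parameter counts below are for k = 3 with 256 input and output channels:

- `conv_params` gives 589,824 weights for a standard convolution.
- `conv_params(3, 256, 256, groups=32)` gives 18,432.
- `depthwise_separable_params` gives 67,840, roughly 8.7 times fewer than the standard convolution.

`channel_shuffle(list(range(6)), 2)` returns `[0, 3, 1, 4, 2, 5]`, so the next grouped convolution sees channels from both groups.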

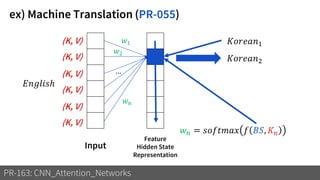

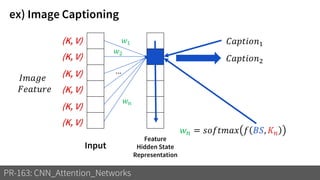

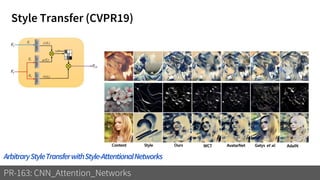

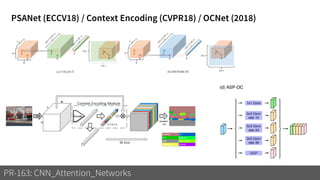

3) These attention mechanisms and CNN architectures are applied to tasks such as image recognition, machine translation and image captioning.