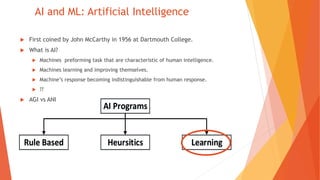

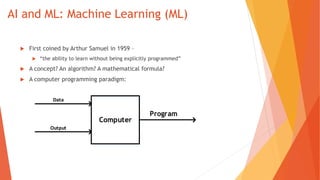

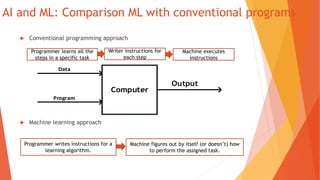

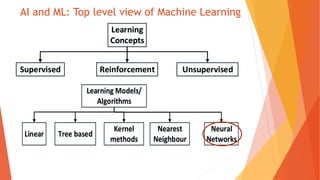

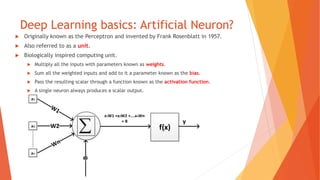

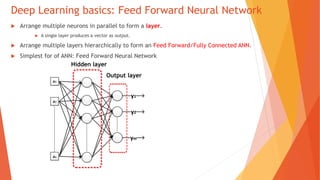

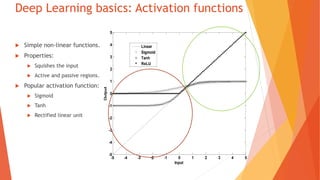

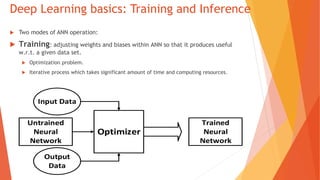

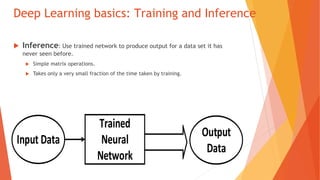

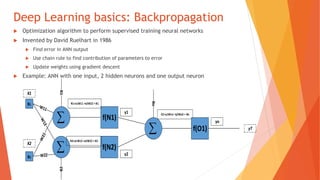

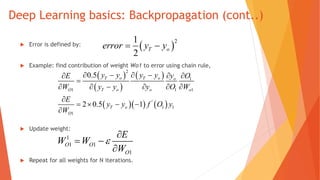

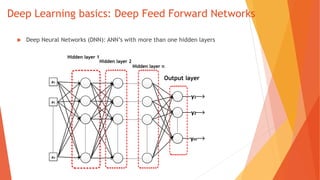

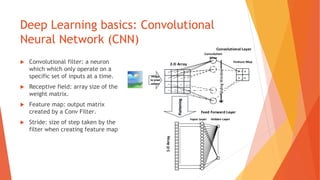

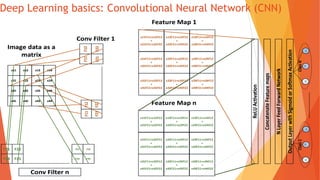

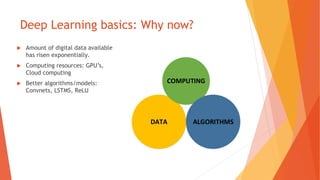

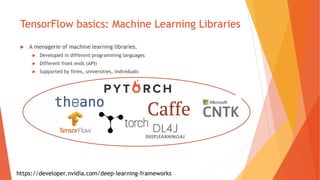

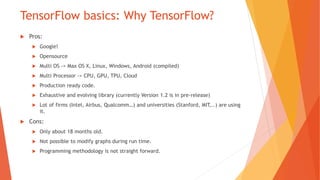

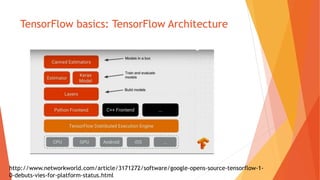

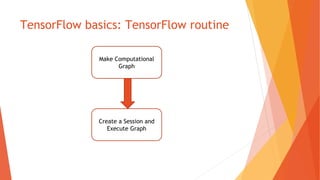

The document provides an introduction to deep learning using TensorFlow, covering essential concepts like artificial intelligence, machine learning, and neural networks. It details the mechanics of neural networks, including activation functions, training through backpropagation, and architectures such as convolutional neural networks. Additionally, it discusses the advantages of TensorFlow as a machine learning library, highlighting its open-source nature and wide adoption in various industries.
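The summary mentions activation functions and training through backpropagation. The slides themselves survive here only as images, so the following is a minimal sketch and not code taken from the deck. It trains a single neuron with a sigmoid activation by gradient descent, using plain Python so it runs without TensorFlow. The function names `train_neuron` and `predict`, the logical-OR toy dataset, the learning rate, and the epoch count are all hypothetical, illustrative choices. For binary cross-entropy loss with a sigmoid output, the chain rule gives dL/dz = ŷ − y, and the weight and bias gradients follow from that single backpropagated term.

```python
import math
import random

# Illustrative sketch: names, data, and hyperparameters are hypothetical,
# not taken from the slides.

def sigmoid(z: float) -> float:
    """Logistic activation: maps any real input into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(data, lr=1.0, epochs=2000, seed=0):
    """Train one sigmoid neuron with stochastic gradient descent.

    Loss is binary cross-entropy. Backpropagating through the sigmoid gives
    dL/dz = y_hat - y, so dL/dw_i = (y_hat - y) * x_i and dL/db = y_hat - y.
    """
    rng = random.Random(seed)
    n_inputs = len(data[0][0])
    w = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            # Forward pass: weighted sum of inputs, then the activation.
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            y_hat = sigmoid(z)
            # Backward pass: gradient of the loss with respect to z.
            dz = y_hat - y
            # Gradient-descent update for every weight and the bias.
            w = [wi - lr * dz * xi for wi, xi in zip(w, x)]
            b -= lr * dz
    return w, b

def predict(w, b, x):
    """Forward pass only: the neuron's output probability for input x."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

if __name__ == "__main__":
    # Logical OR is linearly separable, so a single neuron can learn it.
    or_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
    w, b = train_neuron(or_data)
    for x, y in or_data:
        print(x, y, round(predict(w, b, x), 3))
```

In TensorFlow the gradient derived by hand above would normally be computed automatically (for example with `tf.GradientTape`), which is what makes deeper architectures such as convolutional networks practical to train.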

![References](https://image.slidesharecdn.com/tensorflowedurekademo-170602025745/85/Deep-Learning-Tensor-flow-An-Intro-26-320.jpg)

References

1. S. S. Haykin, *Neural networks: a comprehensive foundation*, 2nd ed. Prentice Hall, 1999.
2. Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” *Nature*, vol. 521, no. 7553, pp. 436–444, May 2015.
3. A. Géron, *Hands-on machine learning with Scikit-Learn and TensorFlow: concepts, tools, and techniques to build intelligent systems*, 1st ed. O’Reilly Media, Inc., 2017.
4. J. Dean and R. Monga, “TensorFlow - Google’s latest machine learning system, open sourced for everyone,” Google Research Blog, 2015. [Online]. Available: https://research.googleblog.com/2015/11/tensorflow-googles-latest-machine_9.html