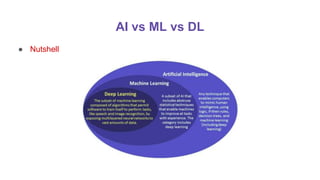

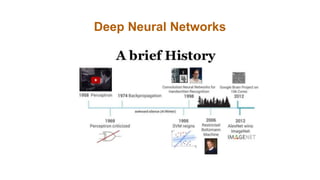

This document provides an introduction to deep learning. In summary:

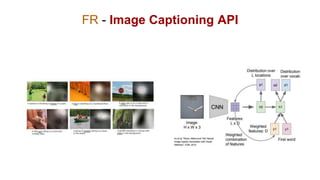

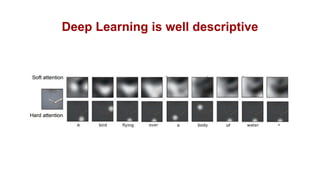

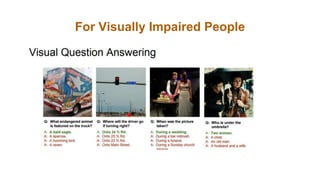

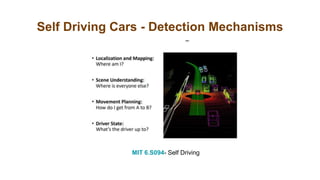

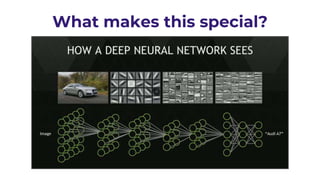

1. It discusses several applications of deep learning, such as image captioning, machine translation, virtual assistants, and self-driving cars.

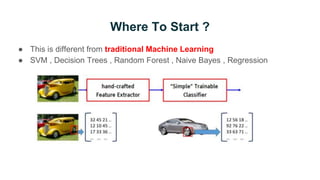

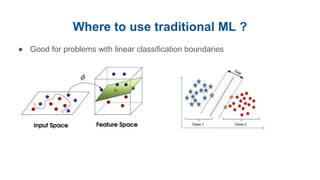

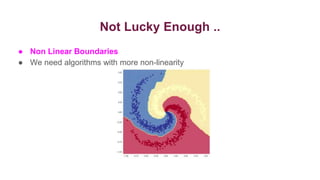

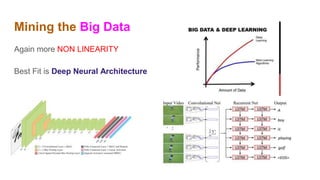

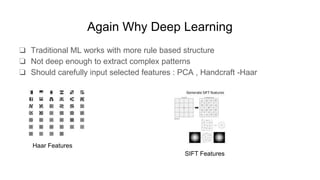

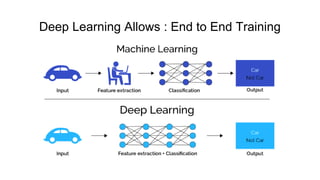

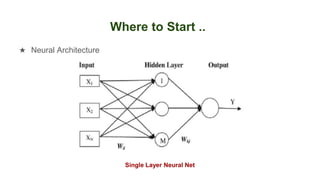

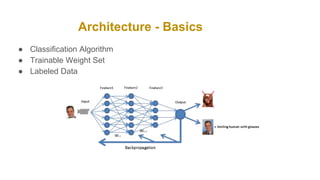

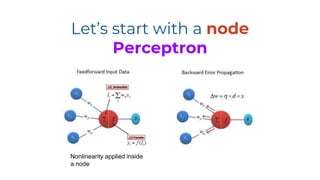

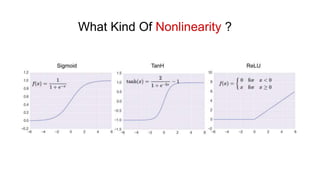

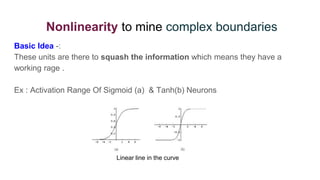

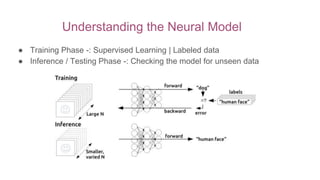

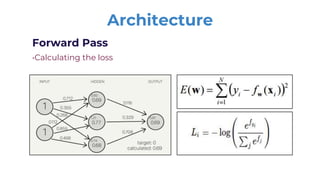

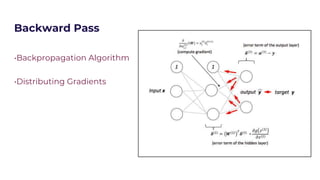

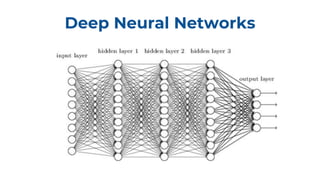

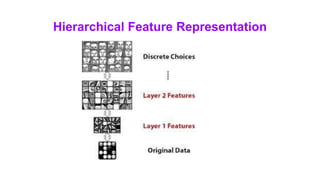

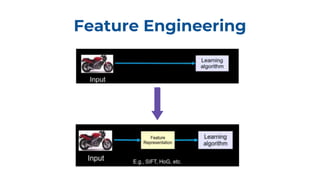

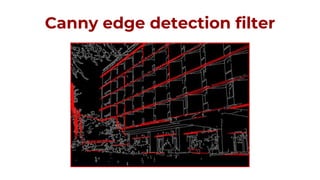

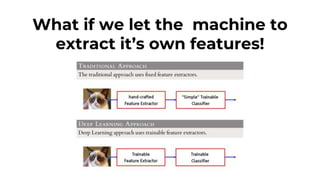

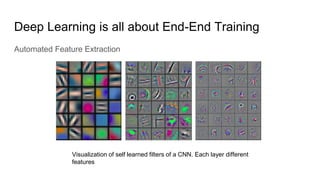

2. It explains that deep learning uses neural networks with many layers to extract complex patterns automatically from large amounts of data, unlike traditional machine learning, which relies on human-designed features.

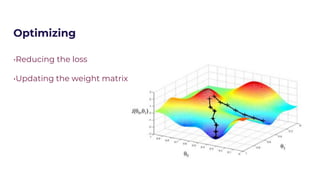

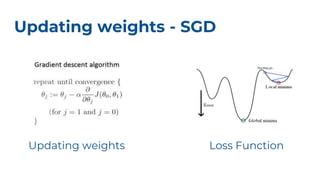

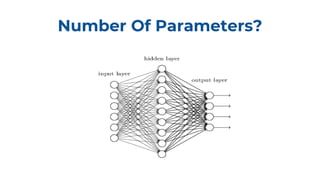

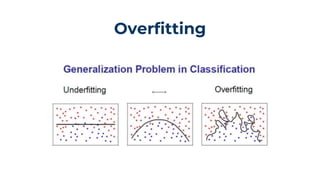

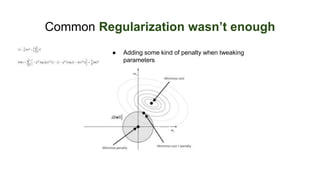

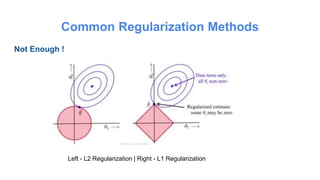

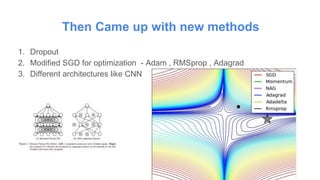

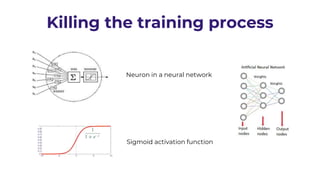

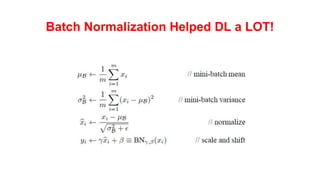

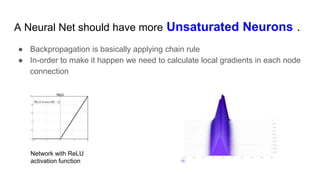

3. It outlines some of the challenges of training deep neural networks, such as overfitting and vanishing gradients, and describes how techniques such as dropout, batch normalization, and improved optimization algorithms help address them.
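
To make the third point more concrete, here is a minimal pure-Python sketch of three ideas it names: why gradients can vanish in a deep stack of sigmoid layers, how inverted dropout masks activations, and how batch normalization rescales a batch of values. It is not taken from the slides. The function names, the assumption that every weight equals 1 in the gradient chain, and the default `eps`, `gamma`, and `beta` values are all illustrative choices.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    # Derivative of the sigmoid; its maximum value is 0.25, reached at x = 0.
    s = sigmoid(x)
    return s * (1.0 - s)


def gradient_scale_through_layers(pre_activations):
    """Multiply the sigmoid derivatives along a chain of layers.

    Illustrative assumption: every weight is 1, so the backpropagated
    gradient is scaled only by the activation derivatives.
    """
    scale = 1.0
    for z in pre_activations:
        scale *= sigmoid_grad(z)
    return scale


def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each unit with probability drop_prob and
    scale the survivors by 1 / keep_prob so the expected value is unchanged."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


def batch_norm(values, eps=1e-5, gamma=1.0, beta=0.0):
    """Normalize one feature across a batch to roughly zero mean and unit
    variance, then apply a scale (gamma) and a shift (beta)."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


if __name__ == "__main__":
    # Even in the best case (derivative 0.25 at every layer), ten sigmoid
    # layers shrink the gradient by a factor of 0.25 ** 10.
    print(gradient_scale_through_layers([0.0] * 10))

    rng = random.Random(0)  # seeded only to make the run repeatable
    print(dropout([1.0, 2.0, 3.0, 4.0], drop_prob=0.5, rng=rng))

    print(batch_norm([1.0, 2.0, 3.0, 4.0]))
```

In practice a deep learning framework supplies these operations as layers. The sketch only shows the arithmetic: dropout discourages co-adapted units (helping with overfitting), while batch normalization keeps activations in a range where gradients stay usable.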