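- The authors propose PRODEN, a method for partial-label learning (each training example is annotated with a set of candidate labels, only one of which is correct) that is agnostic to the choice of model, loss function, and optimizer.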

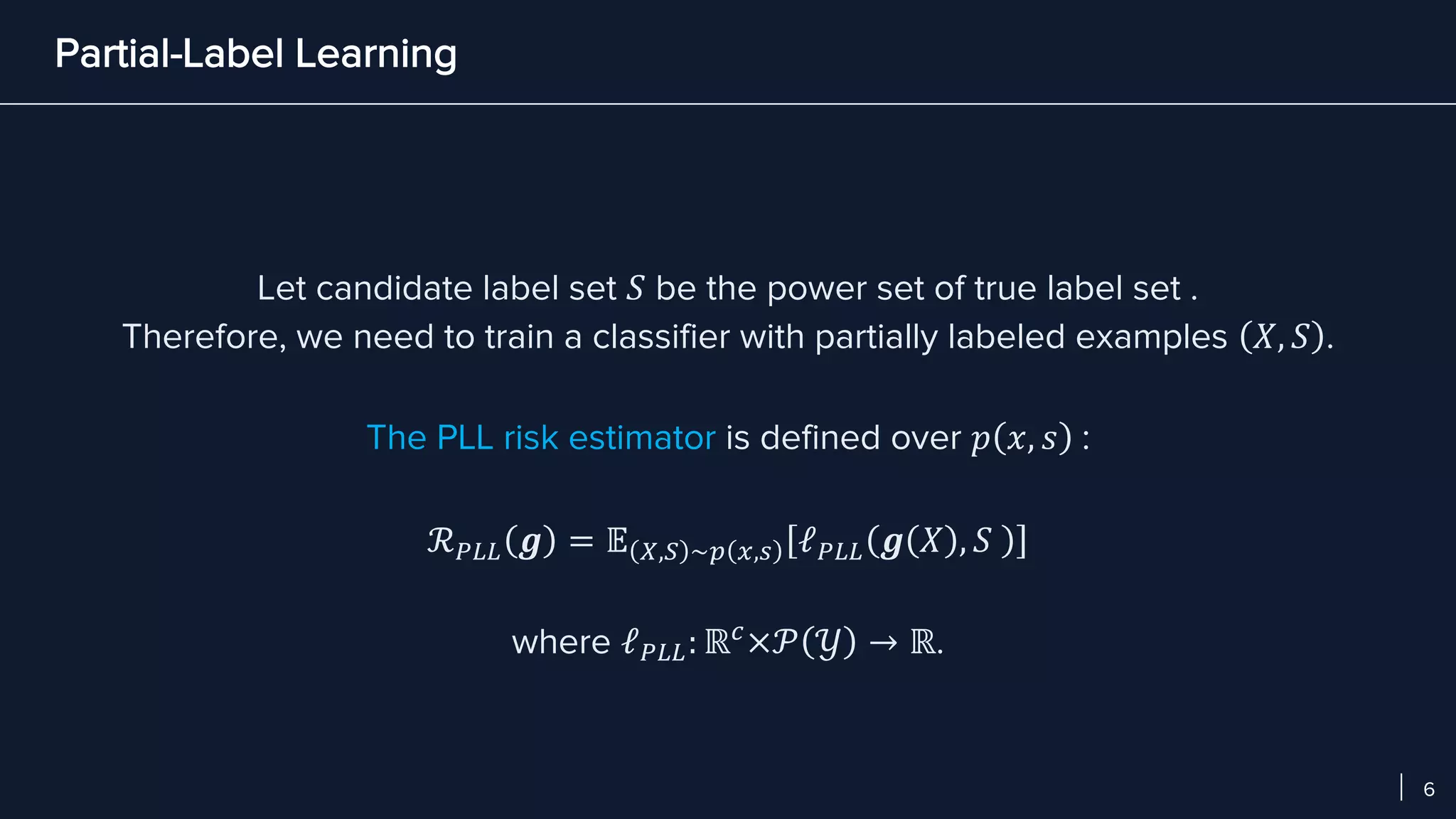

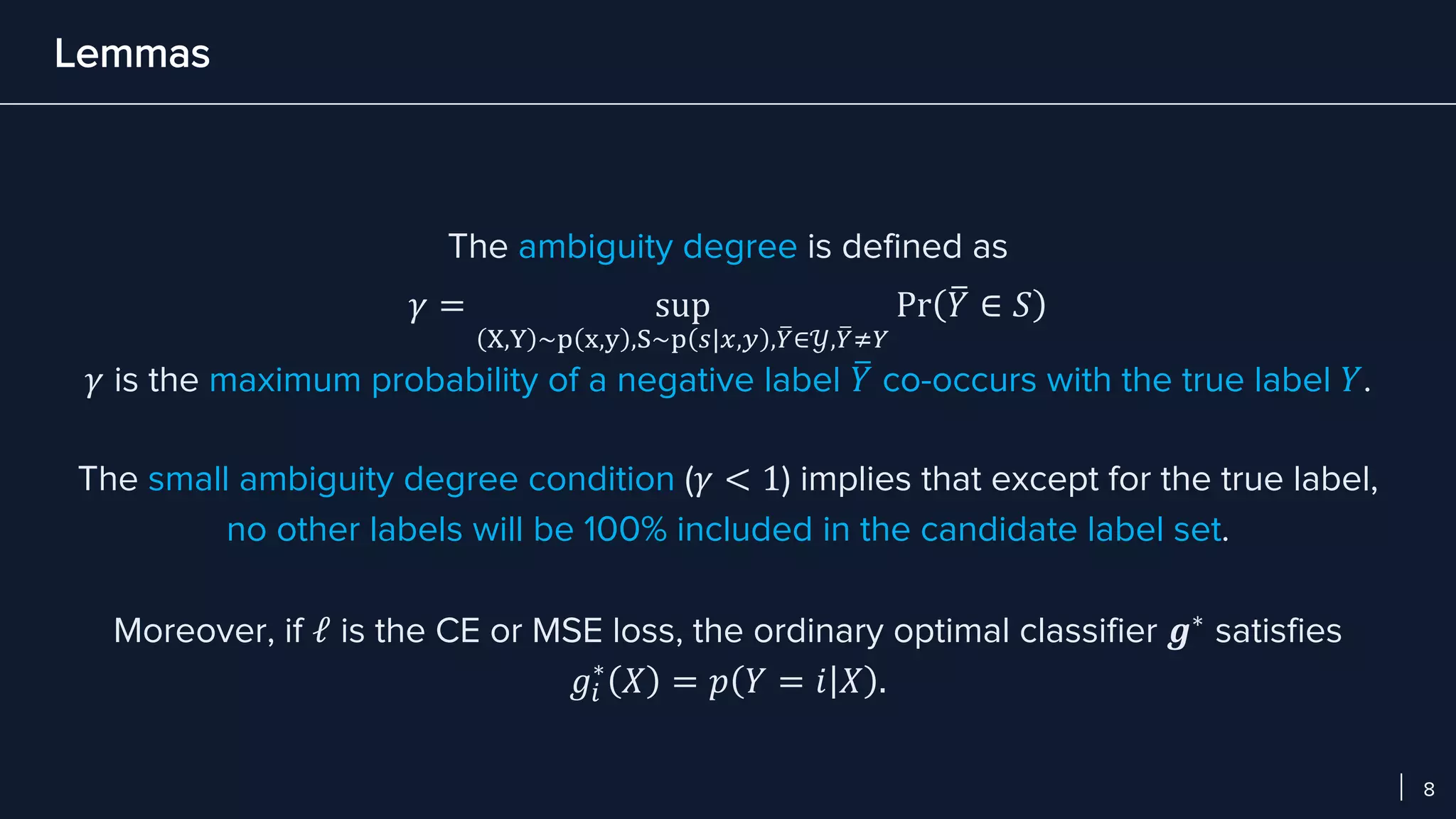

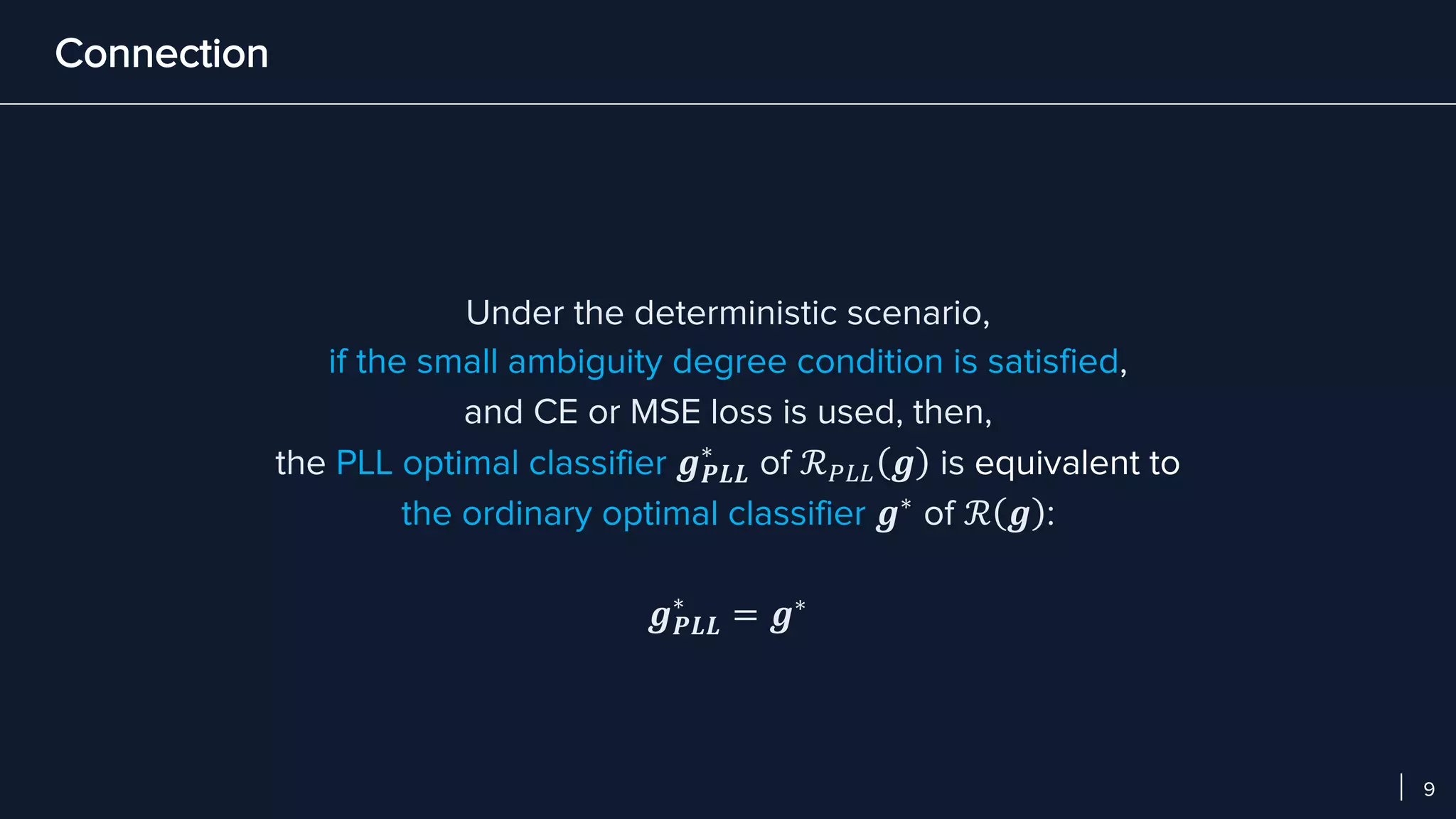

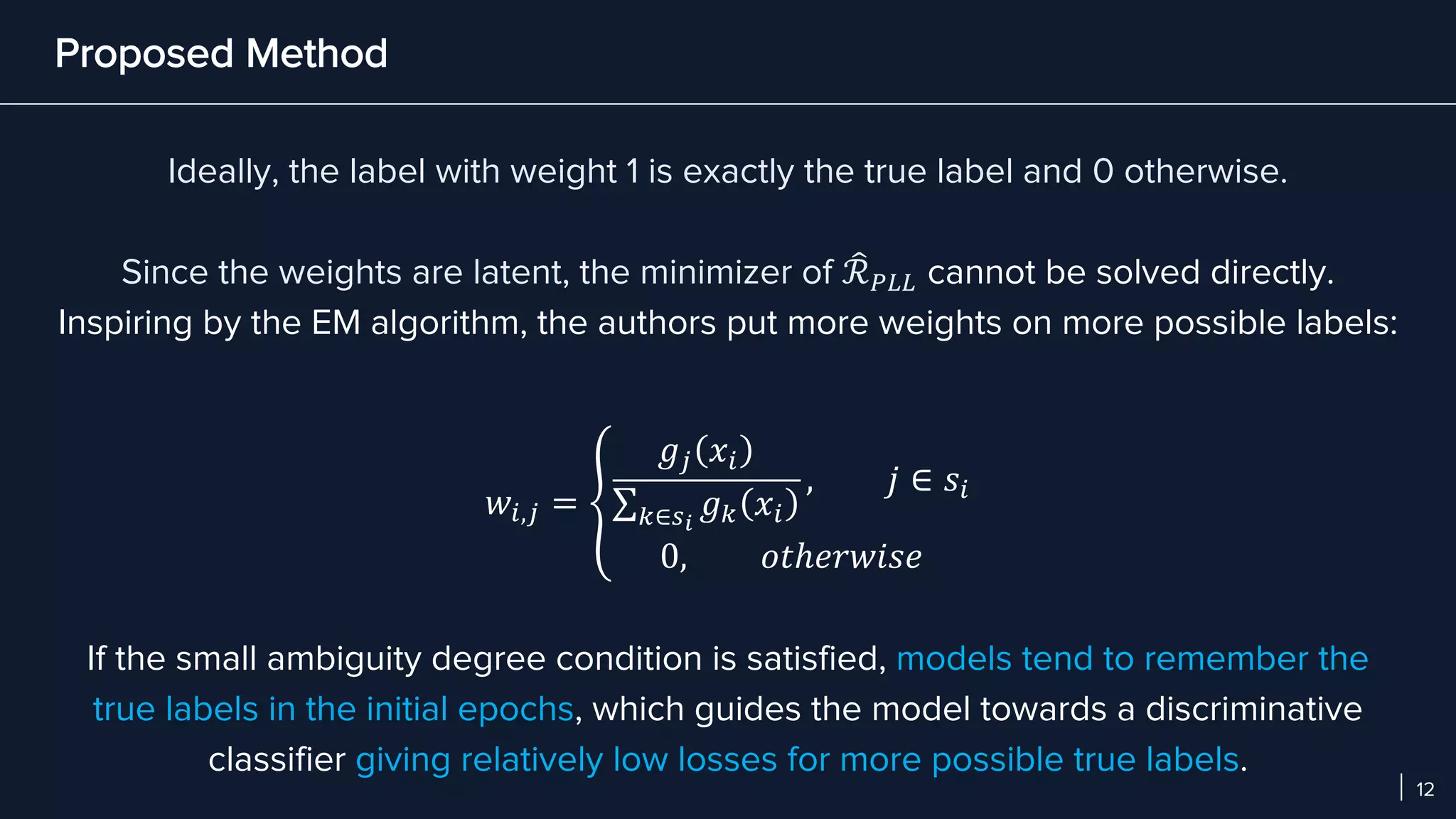

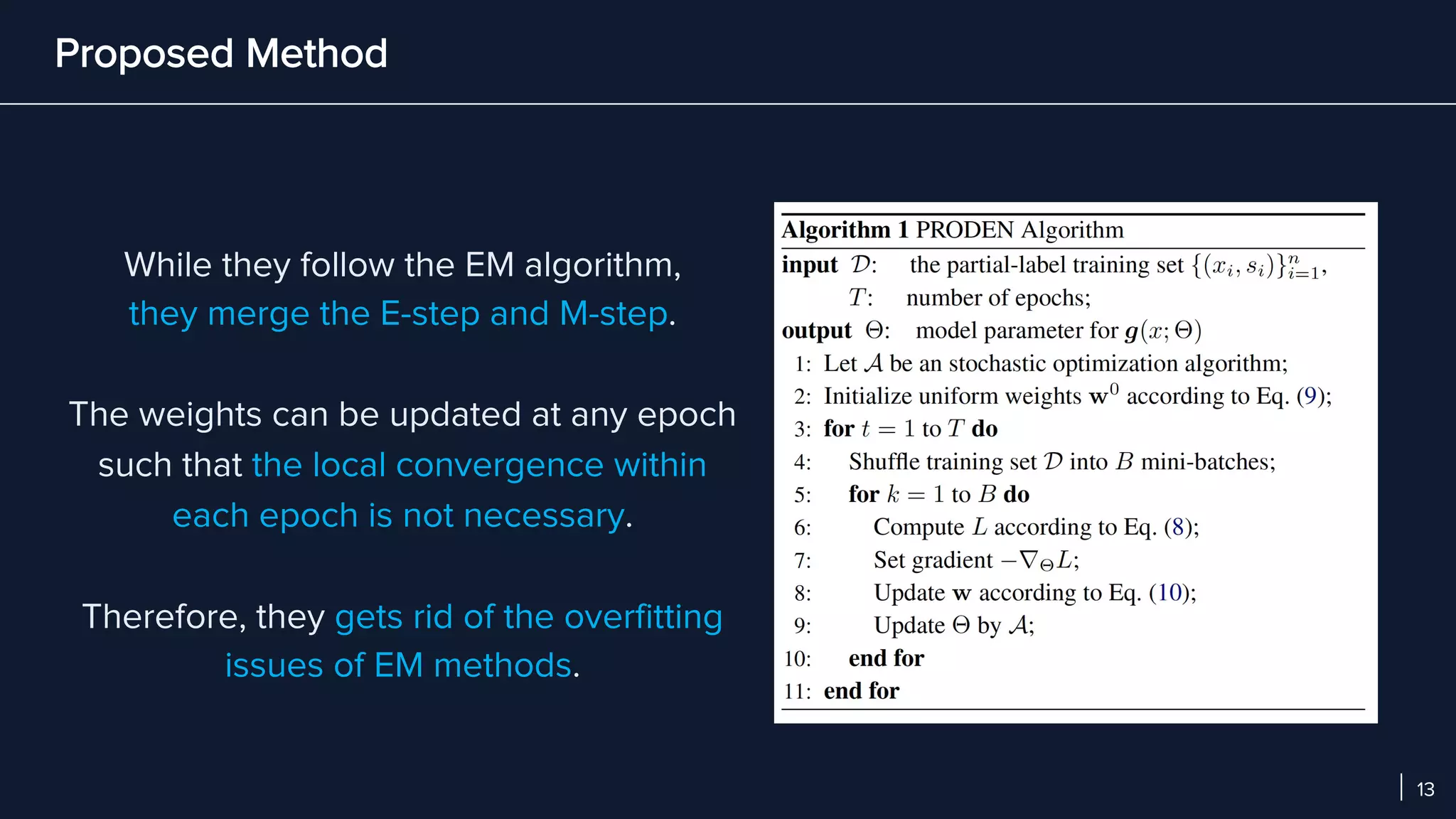

- PRODEN introduces a classifier-consistent risk estimator. During training, it progressively updates the weight of each candidate label using the model's current predictions, so that weight concentrates on the label most likely to be the true one.

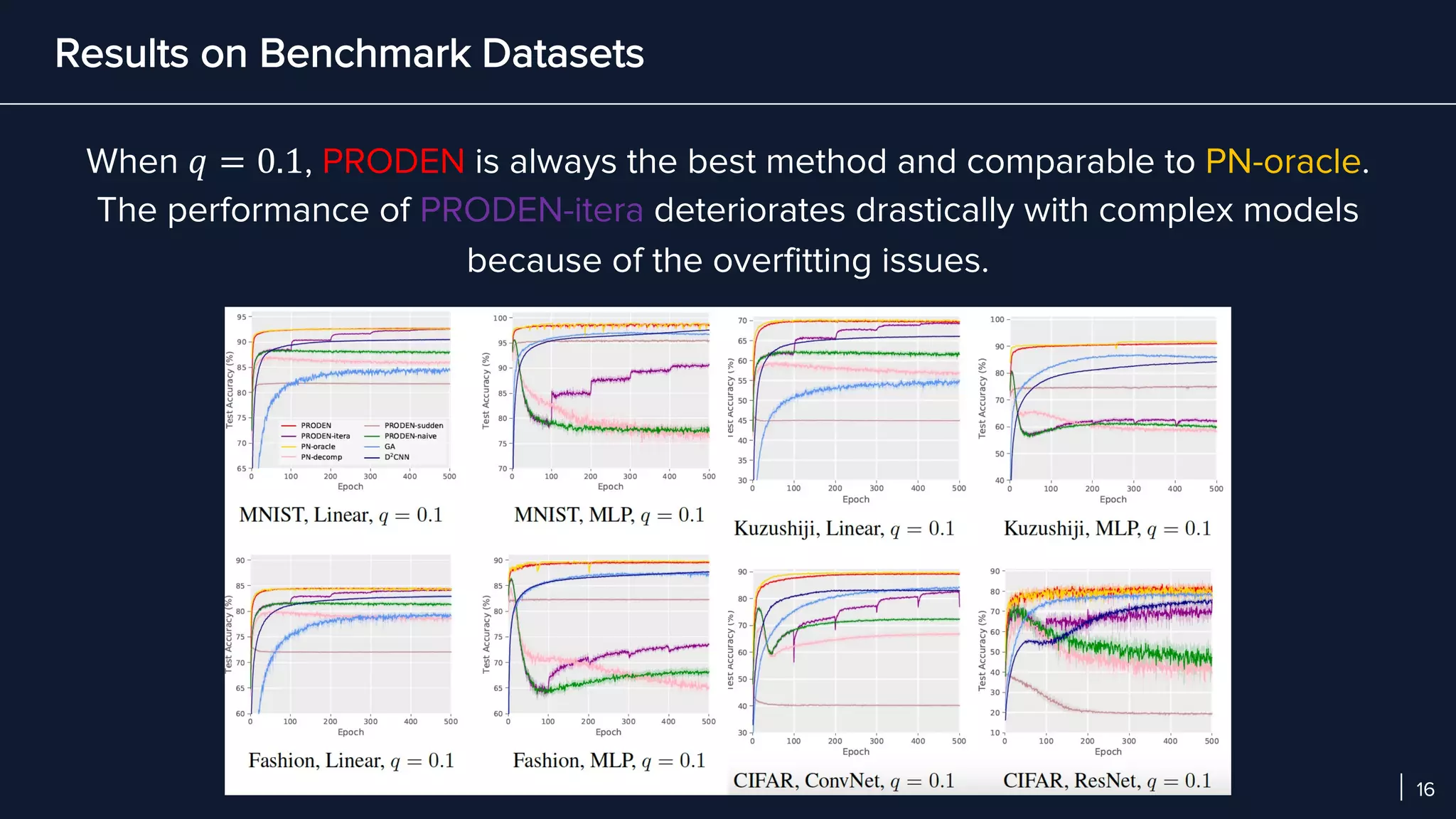

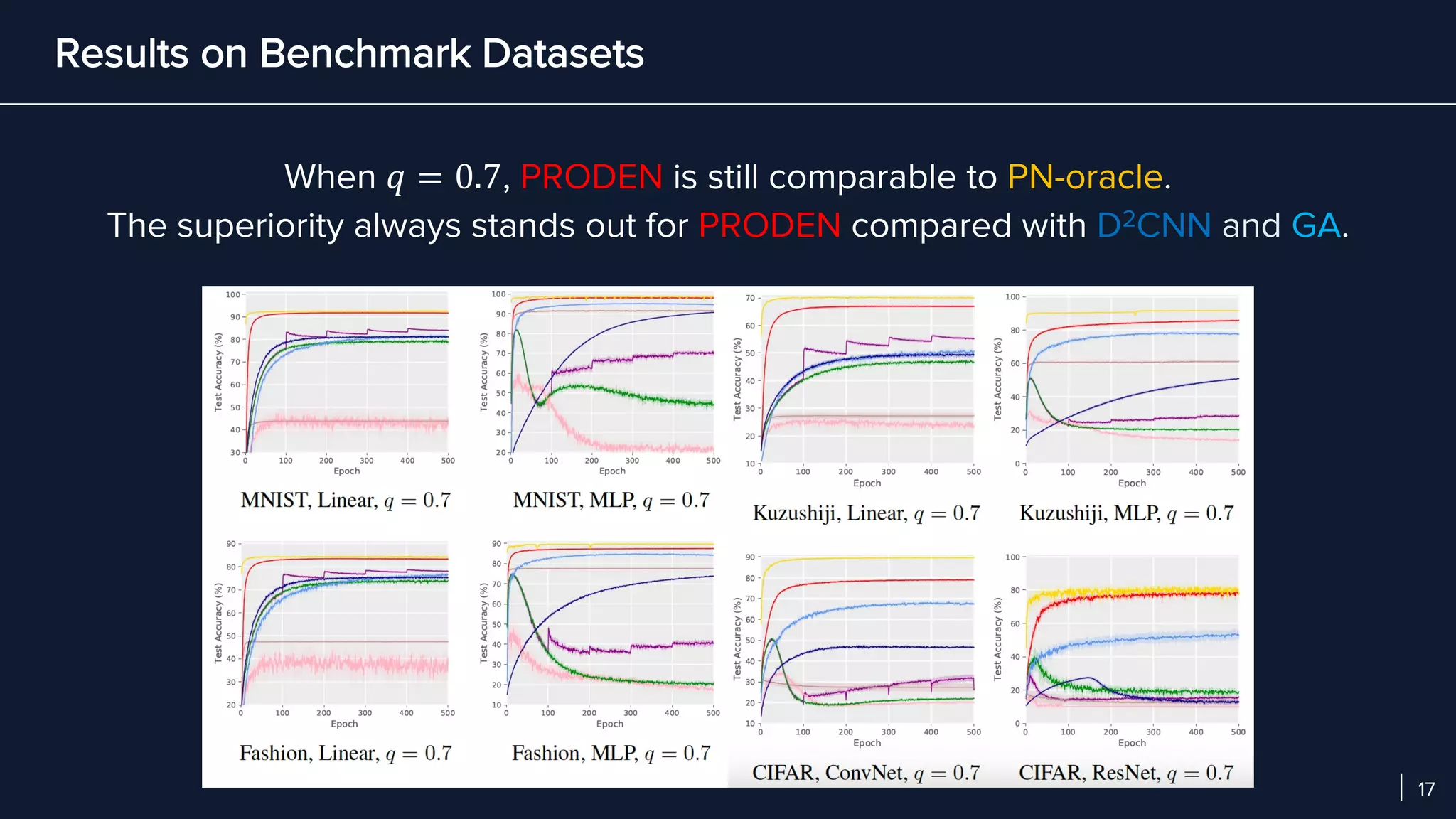

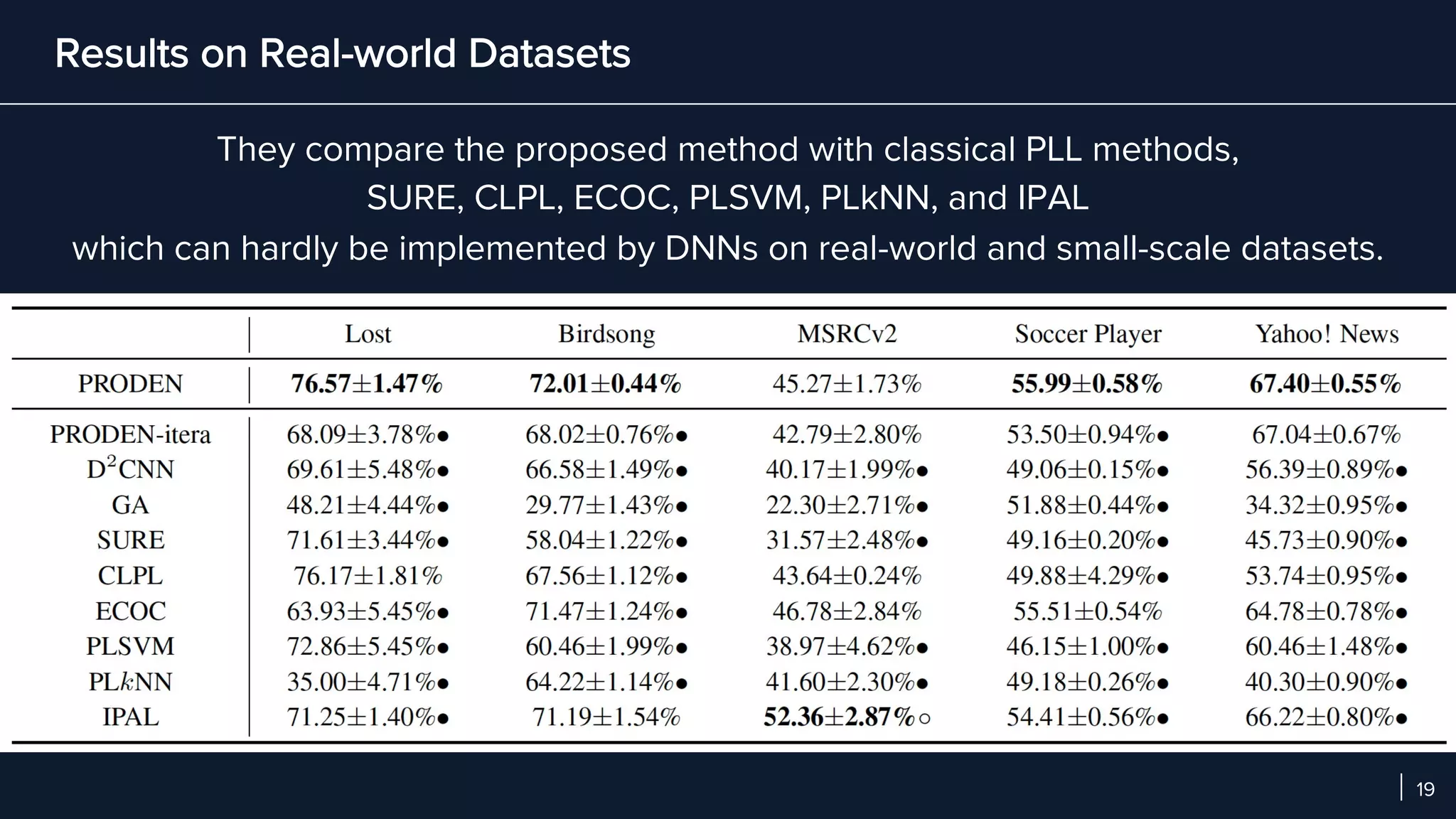

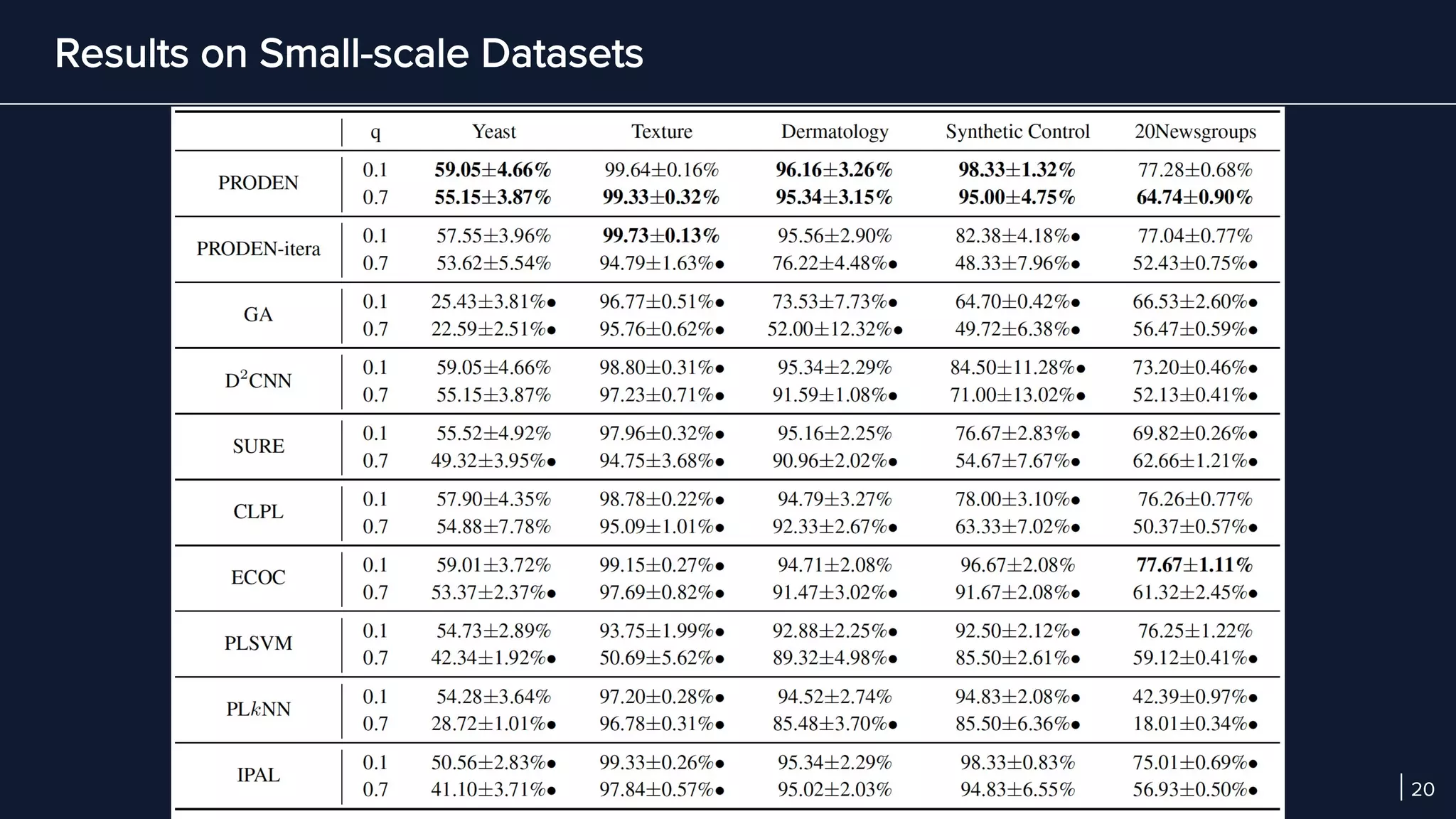

- On benchmark and real-world datasets, PRODEN performs comparably to a model trained with the true (oracle) labels and outperforms other partial-label learning baselines.
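The label re-weighting summarized above can be illustrated with a minimal sketch. It assumes softmax outputs and a cross-entropy loss, and it assumes the formulation in which weights start uniform over each candidate set and are then refreshed as the model's predicted probabilities renormalized over that set. All function names (`init_weights`, `weighted_ce_loss`, `update_weights`) are hypothetical, and the fixed logits stand in for a real network's outputs; this is not the authors' implementation.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    # Numerically stable softmax over one example's logits.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def init_weights(candidates: Sequence[Sequence[int]], num_classes: int) -> List[List[float]]:
    # Assumption: start with uniform weight on each example's (distinct) candidate labels.
    weights = []
    for cand in candidates:
        w = [0.0] * num_classes
        for j in cand:
            w[j] = 1.0 / len(cand)
        weights.append(w)
    return weights


def weighted_ce_loss(probs: Sequence[Sequence[float]], weights: Sequence[Sequence[float]]) -> float:
    # Average over examples of the weighted cross-entropy over candidate labels.
    total = 0.0
    for p, w in zip(probs, weights):
        total += -sum(wj * math.log(pj) for pj, wj in zip(p, w) if wj > 0)
    return total / len(probs)


def update_weights(probs: Sequence[Sequence[float]],
                   candidates: Sequence[Sequence[int]],
                   num_classes: int) -> List[List[float]]:
    # Assumed update: each candidate's weight is its predicted probability,
    # renormalized over the candidate set; non-candidates keep weight 0.
    new_weights = []
    for p, cand in zip(probs, candidates):
        mass = sum(p[j] for j in cand)
        w = [0.0] * num_classes
        for j in cand:
            w[j] = p[j] / mass
        new_weights.append(w)
    return new_weights


# Toy usage: two examples, three classes.
candidates = [[0, 1], [1, 2]]
num_classes = 3
weights = init_weights(candidates, num_classes)       # uniform over each candidate set
logits = [[2.0, 0.0, -1.0], [0.0, 0.0, 3.0]]          # stand-in for model outputs
probs = [softmax(z) for z in logits]
loss = weighted_ce_loss(probs, weights)               # would be minimized by any optimizer
weights = update_weights(probs, candidates, num_classes)
```

In a full training loop, the weighted loss would be minimized with gradient steps and the weights refreshed from the latest predictions (for example, once per mini-batch or epoch), which is why the approach does not depend on a particular model or optimizer.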