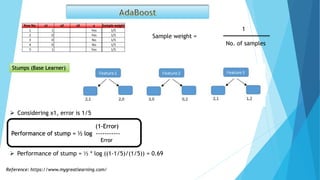

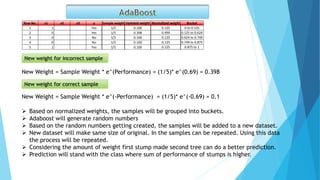

Boosting techniques such as AdaBoost combine the predictions of many weak learners into a stronger joint model. AdaBoost typically uses decision stumps, which are decision trees with a single split node and two leaves, as its weak learners. After each round, it increases the weights of the misclassified samples so that the next stump focuses on them. Over many iterations, this emphasis on harder-to-classify samples improves predictive performance over that of any single weak learner.
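The reweighting loop described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: it assumes binary labels encoded as +1 and -1, and it uses the standard discrete AdaBoost vote weight `alpha = 0.5 * ln((1 - err) / err)`, which the text above does not spell out. The function names (`stump_predict`, `fit_stump`, `adaboost_fit`, `adaboost_predict`) and the toy data are hypothetical, chosen only for this example.

```python
import math

def stump_predict(stump, row):
    """A stump is (feature index, threshold, polarity); it returns +1 or -1."""
    j, t, polarity = stump
    return polarity if row[j] > t else -polarity

def fit_stump(X, y, w):
    """Exhaustively search for the stump with the lowest weighted error."""
    best_err, best_stump = None, None
    for j in range(len(X[0])):
        values = sorted(set(row[j] for row in X))
        # Candidate thresholds: one below the minimum, then midpoints.
        thresholds = [values[0] - 1.0]
        thresholds += [(a + b) / 2 for a, b in zip(values, values[1:])]
        for t in thresholds:
            for polarity in (1, -1):
                stump = (j, t, polarity)
                err = sum(wi for row, yi, wi in zip(X, y, w)
                          if stump_predict(stump, row) != yi)
                if best_err is None or err < best_err:
                    best_err, best_stump = err, stump
    return best_err, best_stump

def adaboost_fit(X, y, n_rounds=3):
    """Return a list of (alpha, stump) pairs; labels in y must be +1 or -1."""
    n = len(X)
    w = [1.0 / n] * n  # start with uniform sample weights
    ensemble = []
    for _ in range(n_rounds):
        err, stump = fit_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # vote weight of this stump
        ensemble.append((alpha, stump))
        # Raise the weights of misclassified samples, lower the rest, renormalize.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, row))
             for row, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def adaboost_predict(ensemble, row):
    """Weighted vote of all stumps."""
    score = sum(alpha * stump_predict(stump, row) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

# Toy 1-D data (invented for this sketch): no single threshold separates it.
X = [[0], [1], [2], [3], [4], [5]]
y = [1, 1, -1, -1, 1, 1]

single = adaboost_fit(X, y, n_rounds=1)
boosted = adaboost_fit(X, y, n_rounds=3)
single_preds = [adaboost_predict(single, row) for row in X]
boosted_preds = [adaboost_predict(boosted, row) for row in X]
```

On this toy set, a single stump cannot reproduce the labels because the negative samples sit between two groups of positives, while the weighted vote of three stumps can. In practice a library implementation such as scikit-learn's `AdaBoostClassifier` would be the usual choice; the sketch only makes the weight updates visible.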