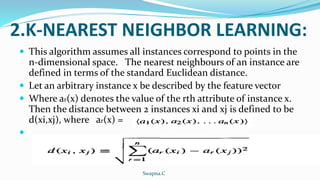

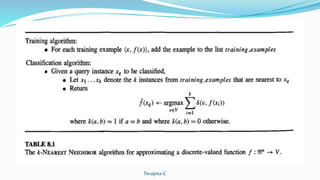

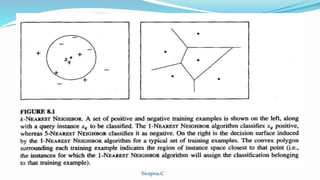

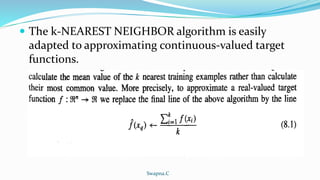

Instance-based learning algorithms such as k-nearest neighbors (KNN) and locally weighted regression are conceptually straightforward approaches to function approximation. They are lazy learners: they store all training data and defer generalization until a query arrives, then classify or predict the new instance from its most similar neighbors in the training set. The main approaches are KNN, radial basis functions, and case-based reasoning. Locally weighted regression generalizes KNN by constructing an explicit local approximation to the target function for each query. Radial basis functions are a related approach that uses Gaussian kernel functions centered on training points.
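As a minimal sketch of the two ideas above, the following pure-Python code shows a KNN classifier that votes among the stored examples nearest to a query, and a one-dimensional locally weighted regression that fits a weighted straight line around each query. The function names, the Euclidean distance, the Gaussian weighting, and the bandwidth parameter `tau` are illustrative assumptions, not details taken from the text.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length numeric sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(train, query, k=3):
    """Return the majority label among the k stored examples nearest the query.

    `train` is a list of (feature_vector, label) pairs. Nothing is fitted in
    advance: all work happens at query time (lazy learning).
    """
    neighbors = sorted(train, key=lambda ex: euclidean(ex[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


def locally_weighted_regression(xs, ys, x_query, tau=1.0):
    """Fit a weighted line around x_query and evaluate it at x_query.

    Each training point gets a Gaussian weight that decays with its distance
    from the query; `tau` (assumed here) controls how local the fit is.
    Assumes at least one training point lies close enough to the query for
    its weight to be nonzero.
    """
    w = [math.exp(-((x - x_query) ** 2) / (2 * tau ** 2)) for x in xs]
    total = sum(w)
    # Weighted means of inputs and targets.
    mx = sum(wi * x for wi, x in zip(w, xs)) / total
    my = sum(wi * y for wi, y in zip(w, ys)) / total
    # Closed-form weighted least squares for a line y = my + slope * (x - mx).
    sxx = sum(wi * (x - mx) ** 2 for wi, x in zip(w, xs))
    sxy = sum(wi * (x - mx) * (y - my) for wi, x, y in zip(w, xs, ys))
    slope = sxy / sxx if sxx > 0 else 0.0
    return my + slope * (x_query - mx)
```

A new local fit is computed for every query, which is what distinguishes locally weighted regression from a single global regression. With a very large `tau` the weights become nearly uniform and the result approaches an ordinary least-squares line.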