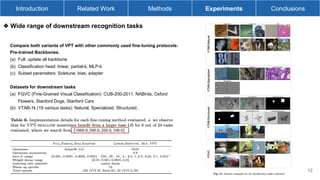

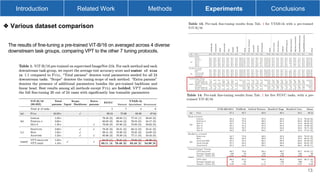

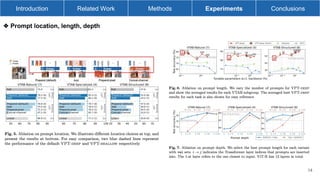

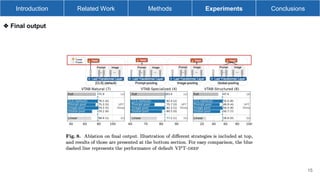

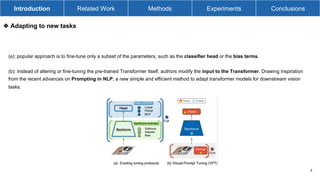

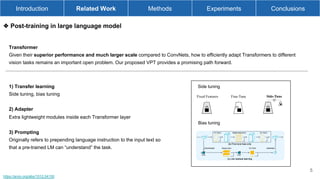

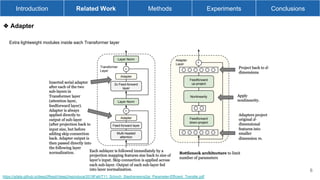

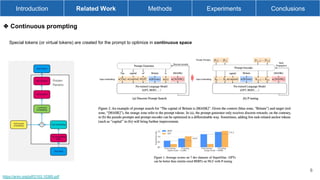

The document presents Visual Prompt Tuning (VPT), a parameter-efficient method for adapting large vision transformer models to various downstream tasks by introducing task-specific learnable prompts while keeping the pre-trained backbone fixed. The proposed approach is shown to outperform traditional fine-tuning methods, including full fine-tuning, while significantly reducing storage requirements. It discusses various adaptation strategies, including side tuning, bias tuning, and the use of lightweight adapter modules.

![❖ Visual-Prompt Tuning (VPT)

Introduction Related Work Methods Experiments Conclusions

VPT injects a small number of learnable parameters into Transformer’s input space and keeps the backbone frozen during

the downstream training stage.

For a plain ViT with 𝑁 layers, an input image is divided into 𝑚 fixed-sized patches 𝐼!∈ℝ" ×# ×$ 𝑗 ∈ ℕ, 1 ≤ 𝑗 ≤ 𝑚},

the collection of image patch embeddings, 𝐸% = 𝑒%

!

∈ ℝ& 𝑗 ∈ ℕ, 1 ≤ 𝑗 ≤ 𝑚}, as inputs to the 𝑖 + 1 -th Transformer layer 𝐿%'(

Together with an extra learnable classification tokens ([CLS]), the whole ViT is formulated as:

9](https://image.slidesharecdn.com/visualprompttuning1-230614022127-7109e9b5/85/Visual-prompt-tuning-9-320.jpg)