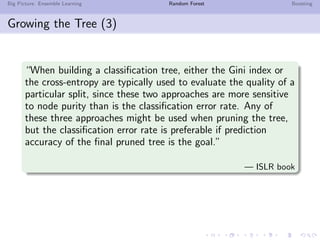

Random Forest, Boosted Trees and other ensemble learning methods build multiple models to improve predictive performance over a single model. They combine "weak learners", such as decision trees, into a "strong learner". Random Forest extends bagging: each tree is trained on a bootstrap sample, and only a random subset of features is considered at each split. Boosting trains trees sequentially, with each tree fit to data reweighted according to the errors of the previous trees. Bagging and Random Forest mainly reduce variance by averaging many low-bias, high-variance trees, while Boosting mainly reduces bias. Random Forest is often competitive with Boosting and, because its trees are trained independently, is easier to parallelize. Neural networks can also be viewed as an ensemble method, since they combine many simple units.
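The two sources of randomness in Random Forest mentioned above can be sketched in a few lines. This is only an illustration, not the slides' implementation: the helper names `bootstrap_indices` and `candidate_features` are hypothetical, and the square-root rule for the subset size is a common heuristic assumed here rather than something the source specifies.

```python
import math
import random


def bootstrap_indices(n_rows, rng):
    """Bagging step: draw n_rows row indices with replacement."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


def candidate_features(n_features, rng, k=None):
    """Random Forest step: pick k distinct features to consider at one split."""
    if k is None:
        # Assumed default: roughly sqrt(n_features), a common heuristic.
        k = max(1, int(math.sqrt(n_features)))
    return sorted(rng.sample(range(n_features), k))


if __name__ == "__main__":
    rng = random.Random(0)
    rows = bootstrap_indices(10, rng)       # rows used to grow one tree
    feats = candidate_features(16, rng)     # features tried at one split
    print("bootstrap rows:", rows)
    print("candidate features:", feats)
```

Each tree would get its own bootstrap sample, and `candidate_features` would be called again at every split, which is what keeps the trees from all looking alike.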

![Big Picture: Ensemble Learning, Random Forest, Boosting. Question 1: Why Bagging Works?](https://image.slidesharecdn.com/main-140407073347-phpapp02/85/Introduction-to-Some-Tree-based-Learning-Method-34-320.jpg)

Question 1: Why Bagging Works?

1. Bias-variance trade-off:

   $$
   \begin{aligned}
   E\big[(F(x) - f_i(x))^2\big] &= \big(F(x) - E[f_i(x)]\big)^2 + E\big[(f_i(x) - E[f_i(x)])^2\big] \\
   &= \big(\mathrm{Bias}[f_i(x)]\big)^2 + \mathrm{Var}[f_i(x)]
   \end{aligned}
   $$

2. $|\mathrm{Bias}(f_i(x))|$ is small but $\mathrm{Var}(f_i(x))$ is big.

3. Then for $f(x)$, if the $\{f_i(x)\}$ are mutually independent:

   $$
   \mathrm{Bias}(f(x)) = \mathrm{Bias}(f_i(x)), \qquad \mathrm{Var}(f(x)) = \frac{1}{n}\,\mathrm{Var}(f_i(x))
   $$

Variance is reduced by averaging.
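The 1/n variance claim on this slide can be checked with a small simulation. The sketch below assumes that f(x) is the plain average of n learners f_i(x), and it models each learner as the true value plus independent Gaussian noise. The function names, the true value 1.0 and the noise level 2.0 are all illustrative choices, not taken from the slides.

```python
import random
import statistics


def noisy_learner(rng, true_value=1.0, noise_sd=2.0):
    """One low-bias, high-variance prediction f_i(x): truth plus independent noise."""
    return true_value + rng.gauss(0.0, noise_sd)


def averaged_prediction(rng, n):
    """f(x): the mean of n independent learner predictions."""
    return statistics.fmean([noisy_learner(rng) for _ in range(n)])


def empirical_variance(n, trials=5000, seed=0):
    """Variance of f(x) estimated over many repeated, independent ensembles."""
    rng = random.Random(seed)
    return statistics.pvariance([averaged_prediction(rng, n) for _ in range(trials)])


if __name__ == "__main__":
    for n in (1, 10, 100):
        print(f"n={n:3d}  Var(f(x)) ~ {empirical_variance(n):.3f}")
```

With a noise variance of 4, the printed values should come out close to 4/n. The independence assumption matters: bootstrap-trained trees are correlated in practice, so the real reduction is smaller, which is the motivation for the extra feature randomness in Random Forest.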