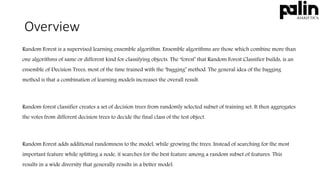

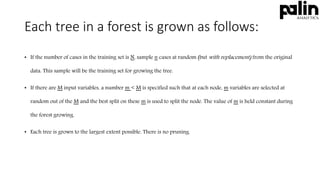

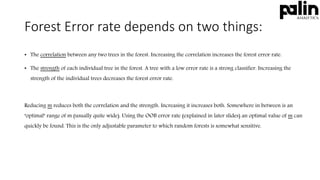

Random forest is a supervised ensemble learning algorithm that combines many decision trees, trained with the 'bagging' (bootstrap aggregating) method, to improve classification accuracy over a single tree. Each tree is trained on a random subset of the training data and considers random subsets of the features, which makes the trees more diverse and the combined prediction more robust. The algorithm is efficient, handles large datasets with many variables, and provides estimates of feature importance, making it a strong choice for quick model development.
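The bagging idea described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: each "tree" is reduced to a depth-1 stump to keep the code short, the feature subset is drawn once per tree, and all names (`fit_stump`, `fit_forest`, `predict_forest`, `max_features`) and the toy data are hypothetical. In practice one would normally reach for a library implementation such as scikit-learn's `RandomForestClassifier`.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 for an empty list)."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def fit_stump(X, y, feature_ids):
    """Fit a depth-1 tree (a stump) using only the candidate features given."""
    majority = Counter(y).most_common(1)[0][0]
    best = None  # (impurity, feature, threshold, left_label, right_label)
    for f in feature_ids:
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue  # not a real split
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t,
                        Counter(left).most_common(1)[0][0],
                        Counter(right).most_common(1)[0][0])
    if best is None:
        # No usable split: fall back to predicting the majority class.
        return (None, None, majority, majority)
    return best[1:]


def predict_stump(stump, row):
    """Predict a class label for one row with a fitted stump."""
    f, t, left_label, right_label = stump
    if f is None:
        return left_label
    return left_label if row[f] <= t else right_label


def fit_forest(X, y, n_trees=25, max_features=1, seed=0):
    """Bagging: each stump sees a bootstrap sample and a random feature subset."""
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        feats = rng.sample(range(d), max_features)  # random subset of features
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest


def predict_forest(forest, row):
    """Aggregate the individual predictions by majority vote."""
    votes = Counter(predict_stump(s, row) for s in forest)
    return votes.most_common(1)[0][0]


# Toy data (made up for illustration): two features, two classes.
X = [[1, 5], [2, 4], [3, 6], [8, 1], [9, 2], [10, 3]]
y = [0, 0, 0, 1, 1, 1]

forest = fit_forest(X, y)
print(predict_forest(forest, [0, 7]))
print(predict_forest(forest, [11, 0]))
```

The two sources of randomness in `fit_forest` (bootstrap rows and a random feature subset) are what keep the individual learners from all making the same mistakes; the majority vote in `predict_forest` then averages those mistakes out.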