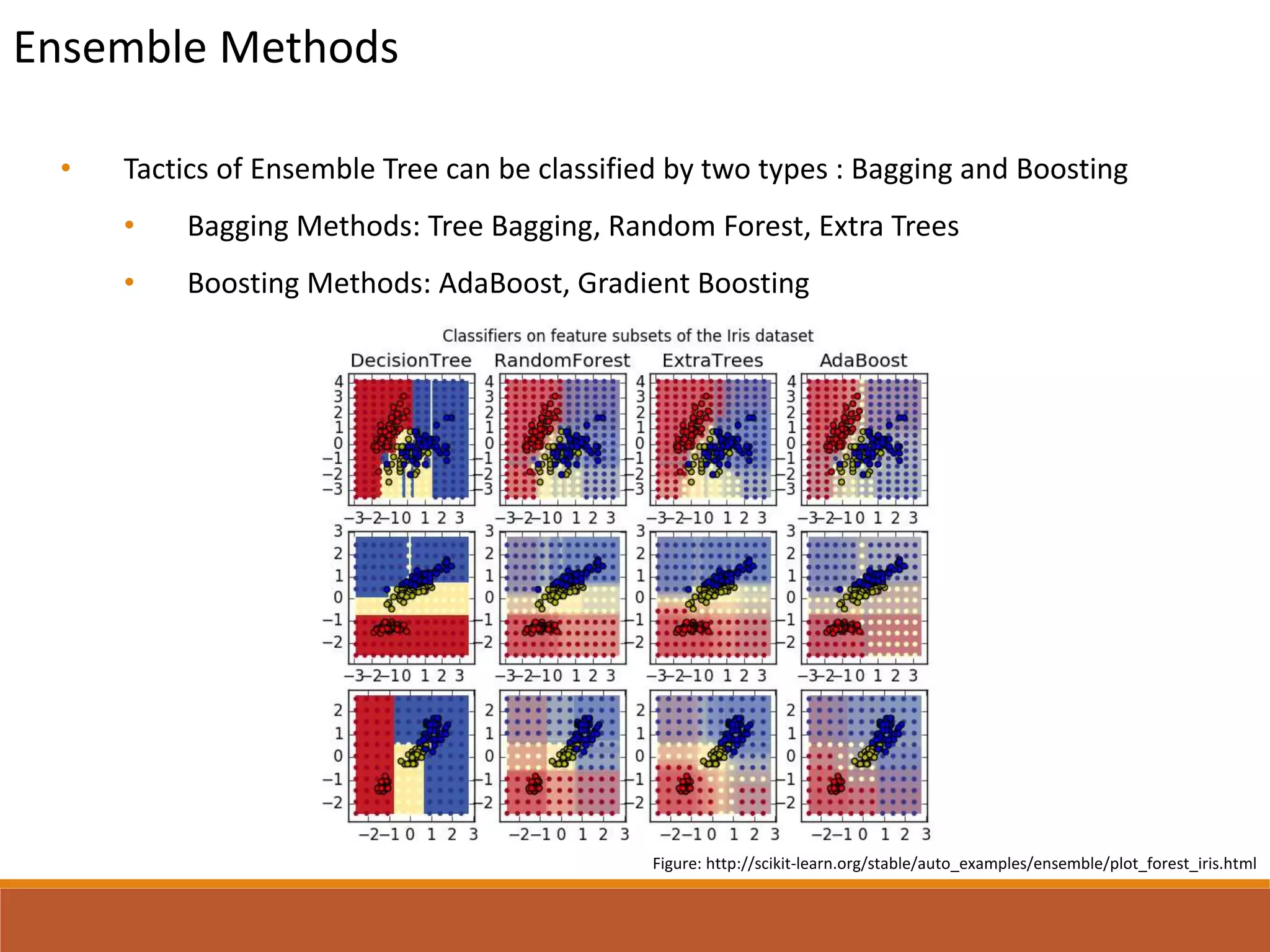

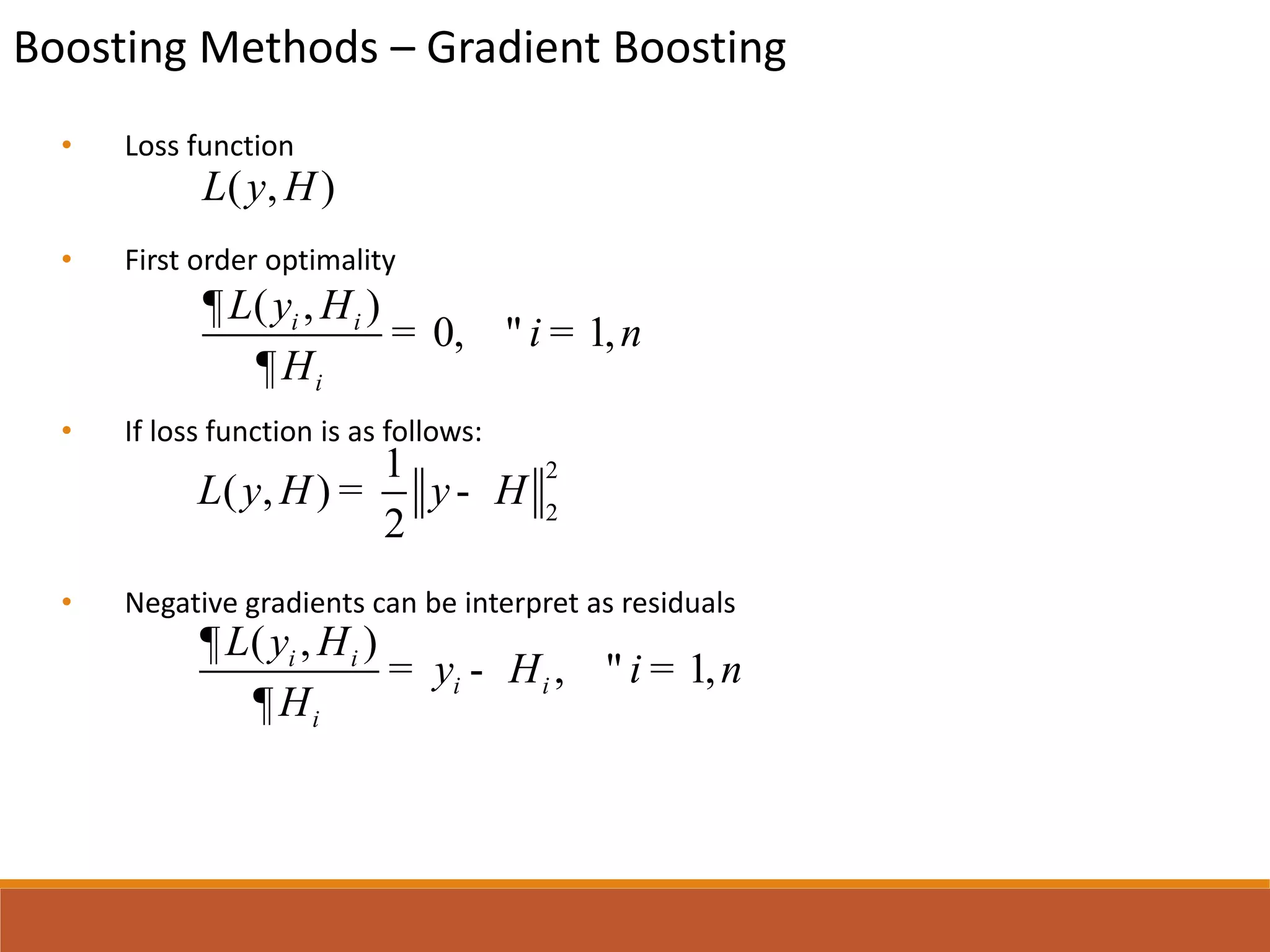

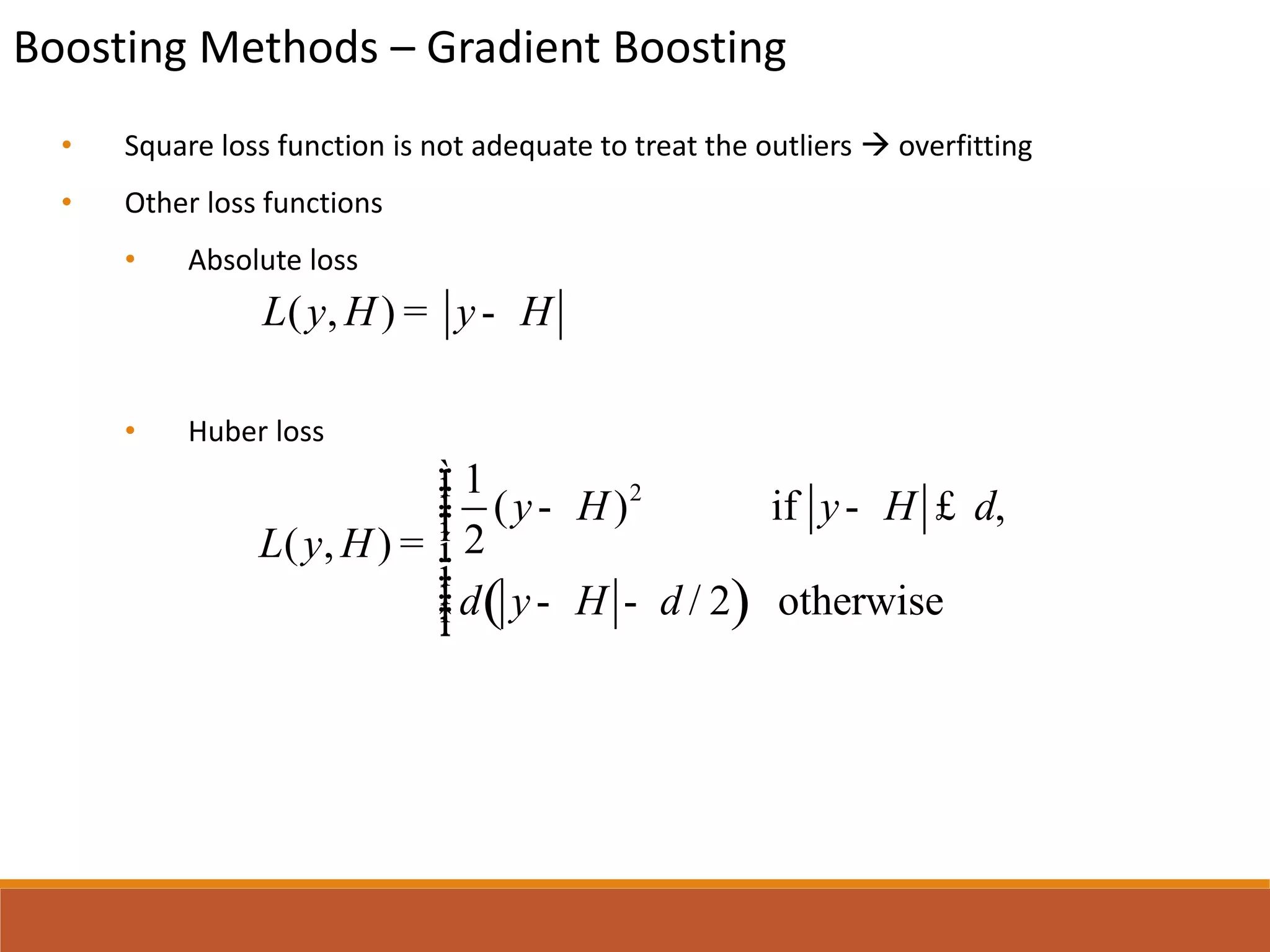

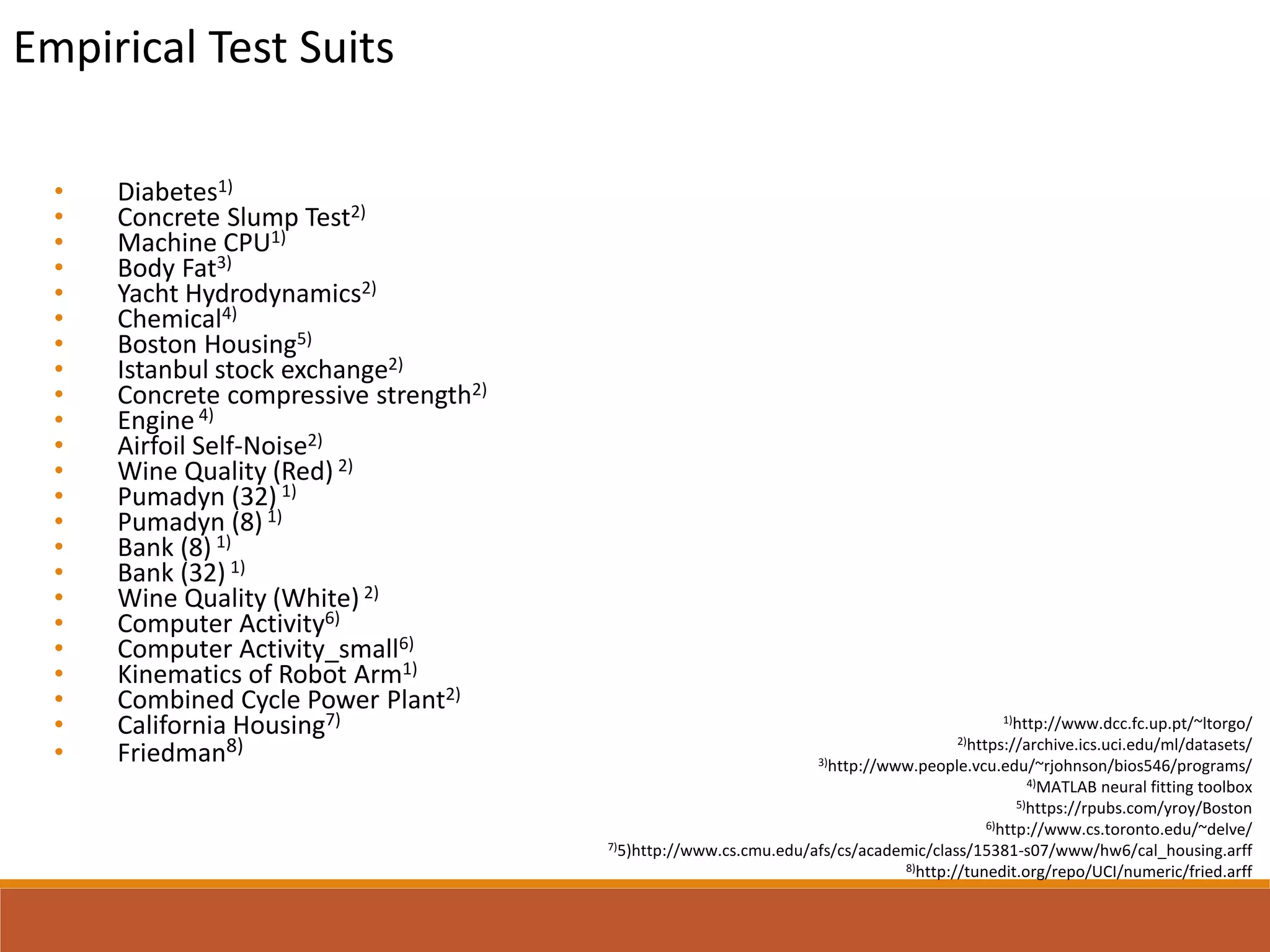

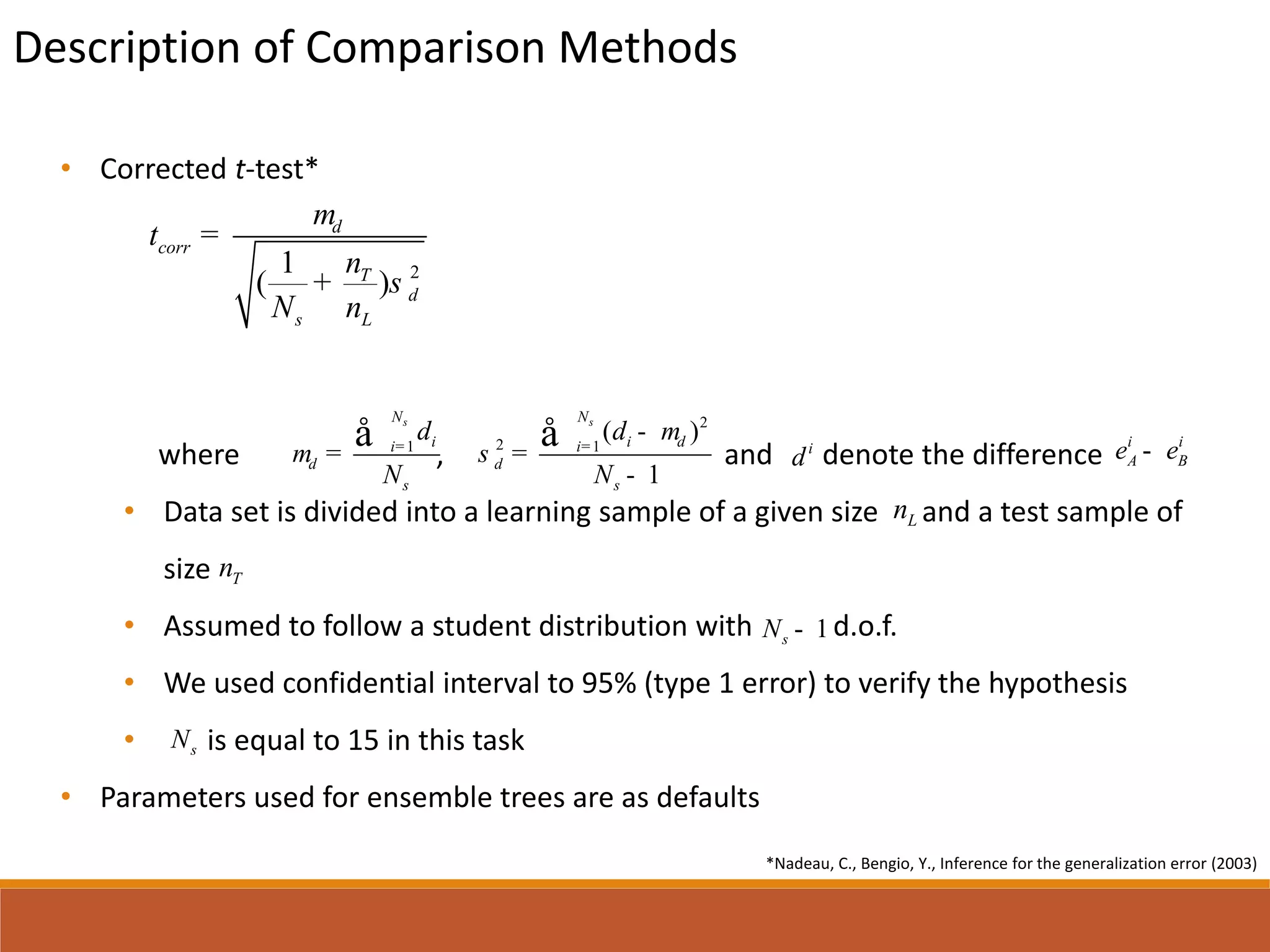

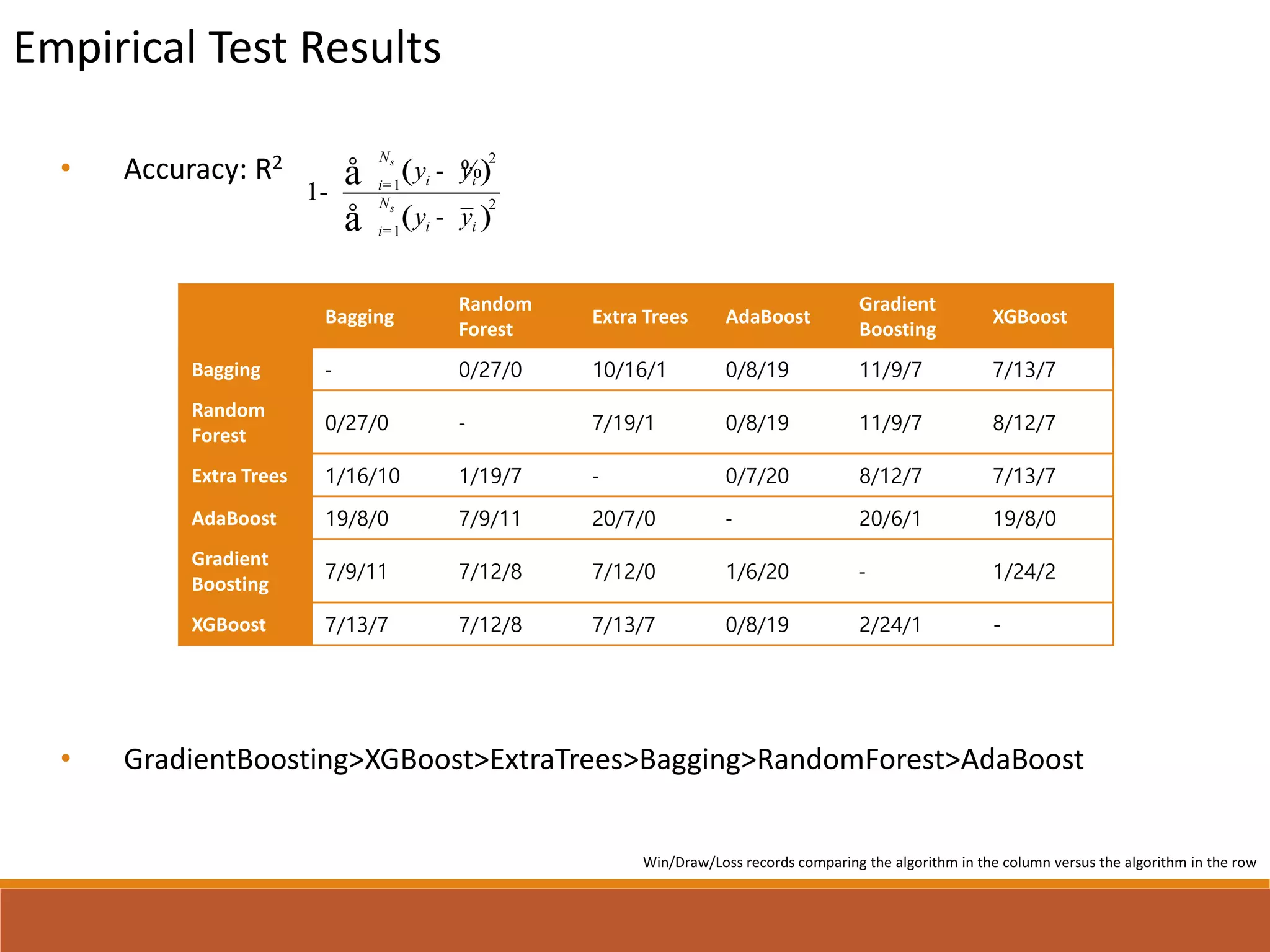

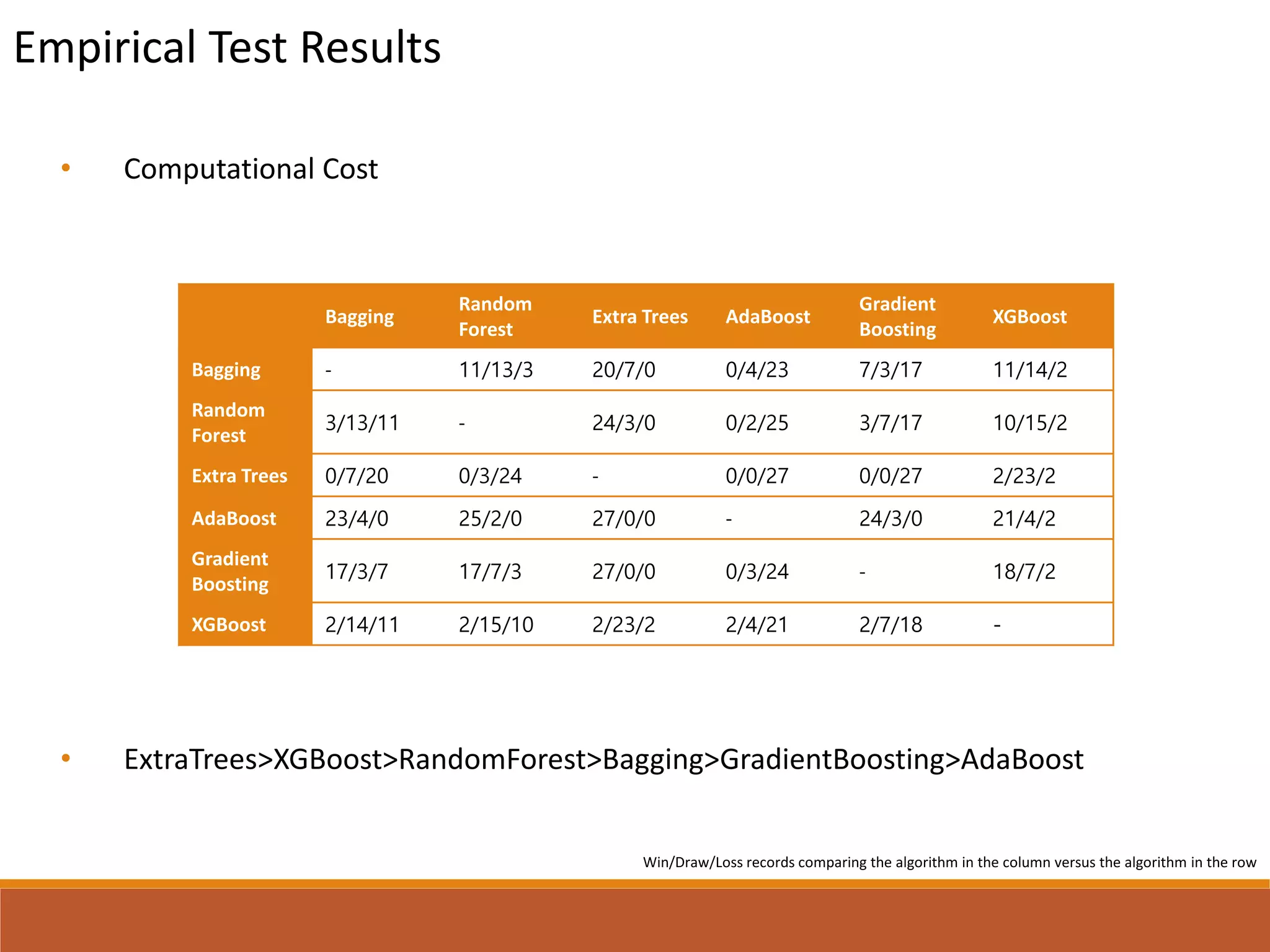

This document presents a comparative study of decision tree ensembles for regression, focusing on predictive performance and time efficiency. It evaluates bagging, boosting, and other tree-based methods; the empirical results indicate that gradient boosting and XGBoost generally outperform the others. The study also weighs the advantages and disadvantages of each ensemble method and the computational cost of each approach.

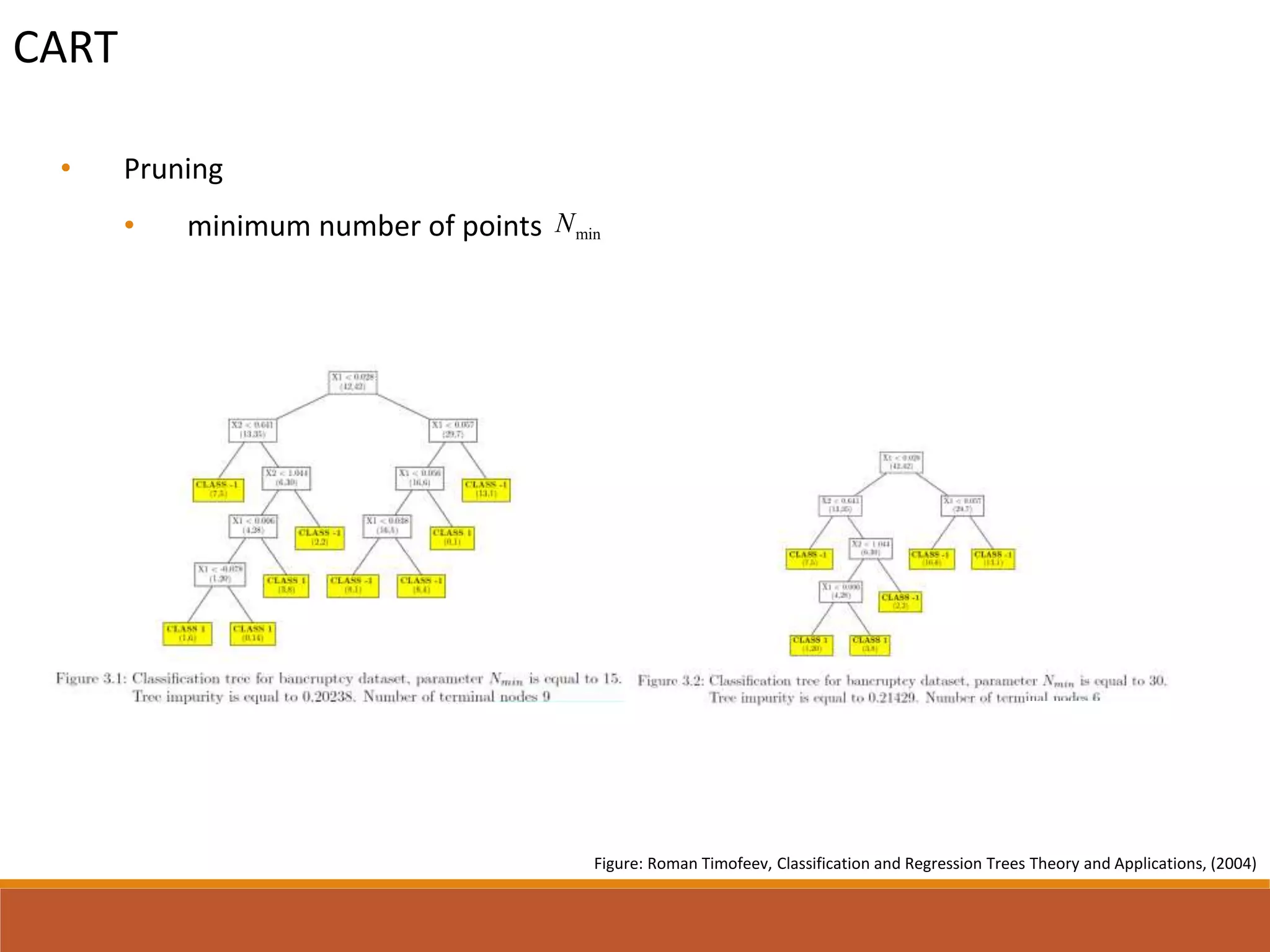

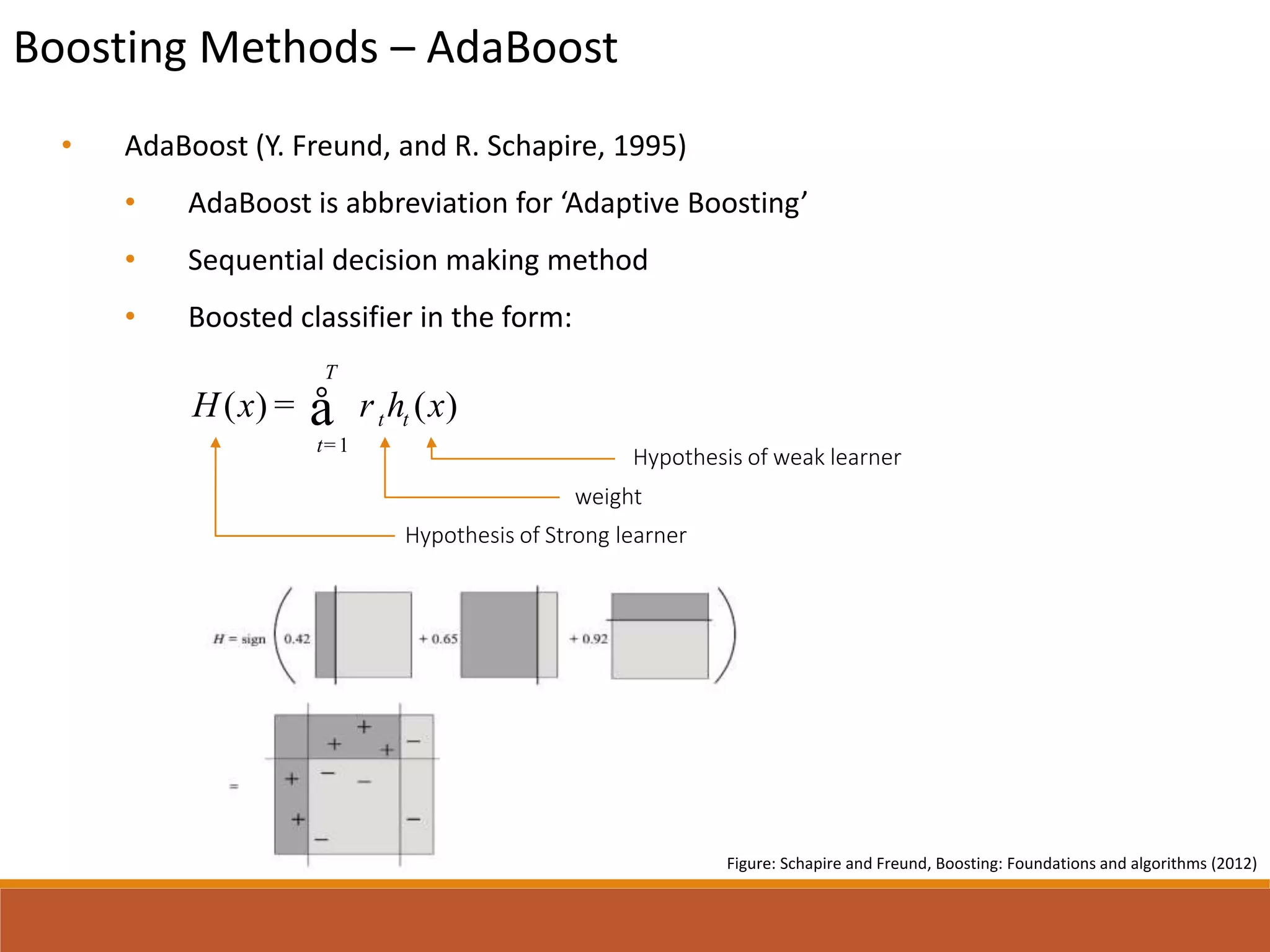

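![CART](https://image.slidesharecdn.com/20161223comparisonstudyofensembletrees-161223045840/75/Comparison-Study-of-Decision-Tree-Ensembles-for-Regression-5-2048.jpg)

• CART stands for Classification and Regression Trees.

• It can generate regression trees as well as classification trees.

• The tree is built to minimize misclassification costs.

• In regression, the cost is the least-squares error between the target values and the expected values.

• The split is chosen to maximize the change in the impurity function:

$$x_j^R = \arg\max_{x_j} \left[\, i(t_p) - P_l\, i(t_l) - P_r\, i(t_r) \,\right]$$

Here $t_p$ is the parent node, $t_l$ and $t_r$ are its left and right child nodes, $P_l$ and $P_r$ are the proportions of the parent's observations sent to each child, and $i(\cdot)$ is the impurity function.

• For regression, the impurity is the variance of the target values $Y_l$ and $Y_r$ in the child nodes, so the split minimizes the weighted child variance:

$$x_j^R = \arg\min_{x_j} \left[\, P_l \operatorname{Var}(Y_l) + P_r \operatorname{Var}(Y_r) \,\right]$$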
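The regression split criterion can be illustrated with a minimal sketch: for a single feature, try each candidate threshold and keep the one that minimizes the weighted sum of the child variances. The function name `best_split` and the toy data are hypothetical, and weighting each child by its share of the observations (P_l and P_r) follows the usual CART formulation, which is an assumption here rather than something taken from the study's own code.

```python
from statistics import pvariance


def best_split(x, y):
    """Return (threshold, cost) minimizing P_l*Var(Y_l) + P_r*Var(Y_r) for one feature.

    Hypothetical helper for illustration; not taken from the study.
    """
    n = len(x)
    best_threshold, best_cost = None, float("inf")
    # Candidate thresholds: midpoints between consecutive distinct feature values,
    # so both children are always non-empty.
    values = sorted(set(x))
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [yi for xi, yi in zip(x, y) if xi <= threshold]
        right = [yi for xi, yi in zip(x, y) if xi > threshold]
        # Weighted child variance; the weights are the child proportions P_l and P_r.
        cost = len(left) / n * pvariance(left) + len(right) / n * pvariance(right)
        if cost < best_cost:
            best_threshold, best_cost = threshold, cost
    return best_threshold, best_cost


# Toy data with an obvious jump in the target between x = 3 and x = 10.
x = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
y = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
threshold, cost = best_split(x, y)
print(threshold, cost)
```

A full CART implementation would repeat this search over every feature and recurse into each child until a stopping rule is met; this sketch covers only the single-feature split search.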