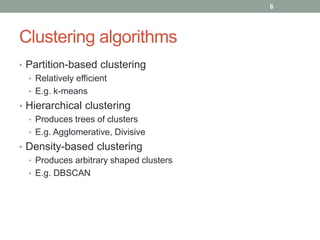

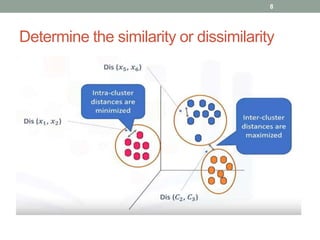

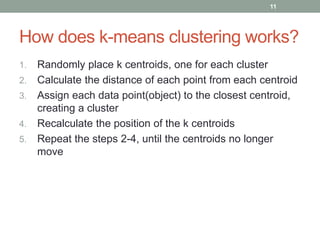

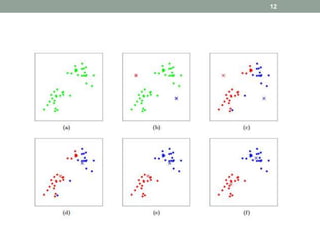

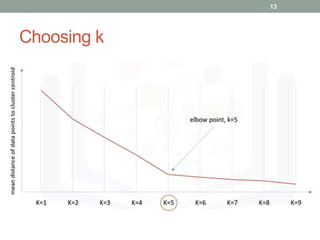

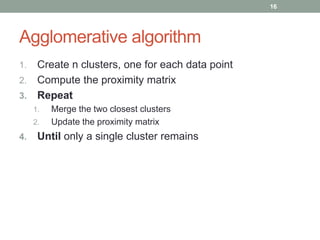

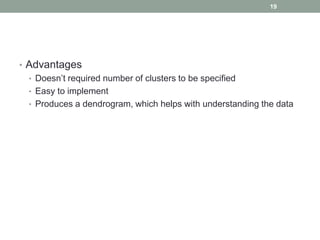

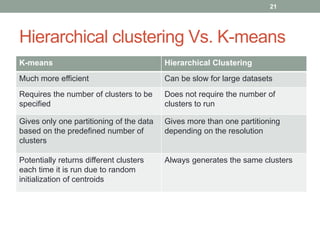

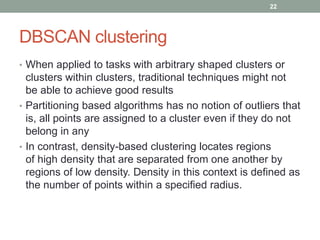

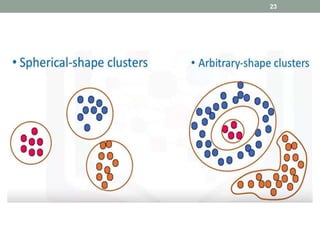

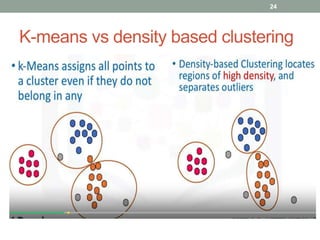

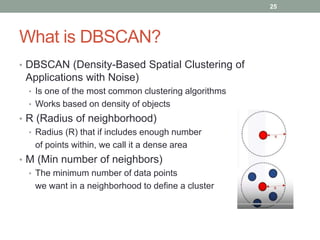

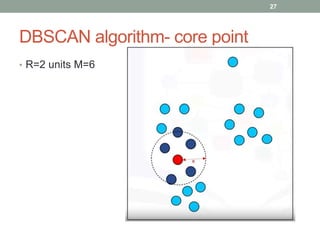

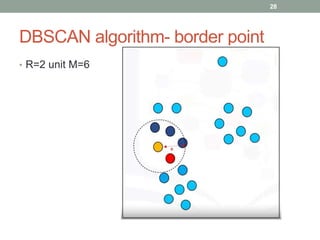

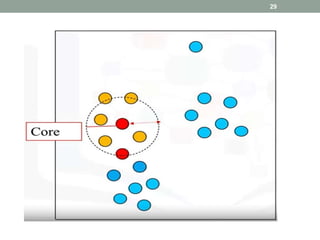

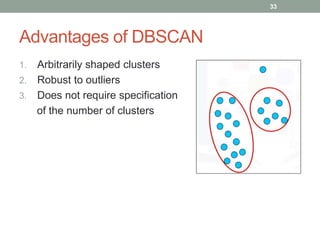

This document surveys unsupervised machine learning clustering algorithms. It opens with an introduction to unsupervised learning and clustering, then explains k-means, hierarchical, and DBSCAN clustering. For k-means and hierarchical clustering, it covers how each works and its advantages and disadvantages, and compares the two. For DBSCAN, it defines the algorithm and explains how it uses density to classify points as core points, border points, or outliers when forming clusters.
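The DBSCAN point categories mentioned above can be illustrated with a small sketch. This is not code from the slides: the function name `classify_points`, the parameter names `eps` and `min_pts`, and the sample points are all assumptions chosen for illustration. It also assumes the common convention that a point counts as its own neighbor. The sketch only labels points; it does not go on to assign cluster IDs.

```python
from math import dist


def classify_points(points, eps, min_pts):
    """Label each point 'core', 'border', or 'outlier' (hypothetical helper)."""
    # Neighborhood of each point: indices of all points within distance eps.
    # Assumption: a point is included in its own neighborhood.
    neighbors = [
        [j for j, q in enumerate(points) if dist(p, q) <= eps]
        for p in points
    ]

    # A core point has at least min_pts points in its eps-neighborhood.
    is_core = [len(n) >= min_pts for n in neighbors]

    labels = []
    for i in range(len(points)):
        if is_core[i]:
            labels.append("core")
        elif any(is_core[j] for j in neighbors[i]):
            # Not dense enough itself, but within eps of a core point.
            labels.append("border")
        else:
            # Neither core nor reachable from a core point.
            labels.append("outlier")
    return labels


# Illustrative data: a small dense square, one point on its edge, one far away.
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0), (2.0, 1.0), (8.0, 8.0)]
labels = classify_points(points, eps=1.1, min_pts=3)
for point, label in zip(points, labels):
    print(point, label)
```

With these assumed values, the four points of the square are core points, `(2.0, 1.0)` is a border point because it lies within `eps` of the core point `(1.0, 1.0)`, and `(8.0, 8.0)` is an outlier. Changing `eps` or `min_pts` changes the labels, which is the sense in which the clusters are density-based.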